This week marks the tenth anniversary of the World IPv6 Launch, an event that saw many ISPs and content providers turn on IPv6 access to their services for the first time. In this post, I will reflect on an IPv6 use case that’s now seeing 100Gbit/s of IPv6 traffic traversing the Janet network.

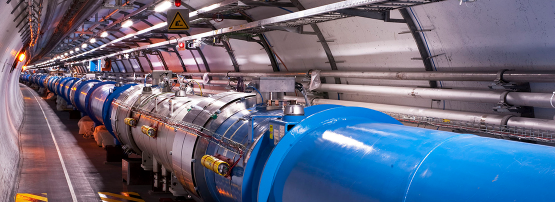

The Large Hadron Collider (LHC) at CERN has recently restarted, which is likely to result in larger volumes of particle physics data than ever being collected by the four main experiments prior to being transferred to participant sites for processing and analysis.

In the UK, some 19 universities and research sites are part of the Worldwide Large Hadron Collider Computing Grid (WLCG). These sites, together forming the UK GridPP community, have some of the most demanding requirements for data transfers over the Janet network. One, the Rutherford Appleton Laboratory (RAL), is now connected to Janet at 2x100G, while some others have been, or are in the process of being, upgraded to 100G.

One of the universities connected to Janet at 100G is Imperial College London, which participates in the CMS and LHCb experiments at the LHC. Imperial College has one 100G link to its data centre at the shared Slough DC facility, and one 100G connection to its London campus in South Kensington. Imperial is also one of the leading Janet sites for IPv6 deployment on campus, having dual-stack IPv4/IPv6 through most of its infrastructure. This is of particular interest as the WLCG has, over the past few years, been moving its networking to both support and prefer the use of IPv6, with the aim of running IPv6-only in the future.

The status of the WLCG’s move towards IPv6 is reported on its IPv6 Working Group site, which shows that 15 of the 19 UK sites have IPv6, and that overall more than 80% of the Tier 2 WLCG storage sites have IPv6 enabled. The main driver is simply address space: ensuring the compute and storage nodes can all communicate directly, without being impaired by NAT. It’s a significant achievement, and an exemplar for other communities who may choose to follow.

But what does this mean for Imperial’s WLCG traffic? Imperial is connected to the LHC overlay network, LHCONE, which is essentially a virtual network (a VRF) sitting on top of the general research and education networks, but only carrying WLCG data. There is also a separate dedicated optical network, LHCOPN, connecting CERN to the Tier 1 sites, of which RAL is one.

At the end of March 2022, a bulk file transfer to Imperial filled the incoming 100G link at Slough, and 100% of the traffic coming in over LHCONE was IPv6. Until recently, that 100% figure hadn’t been reached. But a recent change to the dCache software used to manage the disk servers at Imperial, in which the Java elements were configured to ensure they prefer IPv6, has made the inbound transfers predominantly IPv6.
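To illustrate what "preferring IPv6" means on a dual-stack host, here is a minimal Python sketch. It is not the dCache change itself: the post does not say which setting was altered (Java does expose a `java.net.preferIPv6Addresses` system property, but whether that was the one used is an assumption). The function name `prefer_ipv6` and the documentation-range addresses are hypothetical and chosen purely for illustration.

```python
import socket

def prefer_ipv6(candidates):
    """Return address candidates with IPv6 entries first.

    `candidates` is a list of (address_family, address) tuples, similar in
    spirit to what a resolver returns for a dual-stack hostname.
    sorted() is stable, so the relative order within each family is kept.
    """
    return sorted(candidates, key=lambda c: 0 if c[0] == socket.AF_INET6 else 1)

# Hypothetical dual-stack result, using documentation-only address ranges.
candidates = [
    (socket.AF_INET, "192.0.2.10"),
    (socket.AF_INET6, "2001:db8::10"),
]

ordered = prefer_ipv6(candidates)
print(ordered[0][1])  # the address a client would try first
```

A client that tries addresses in this order uses IPv6 whenever the remote end offers it and only falls back to IPv4 otherwise, which is the kind of behaviour that would shift transfers between dual-stack sites onto IPv6.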

The plot below, from Imperial’s monitoring system at Slough (Figure 1), shows the two-hour period in which the 100G link was filled and in which 100% of the LHCONE traffic was IPv6 (the green line).

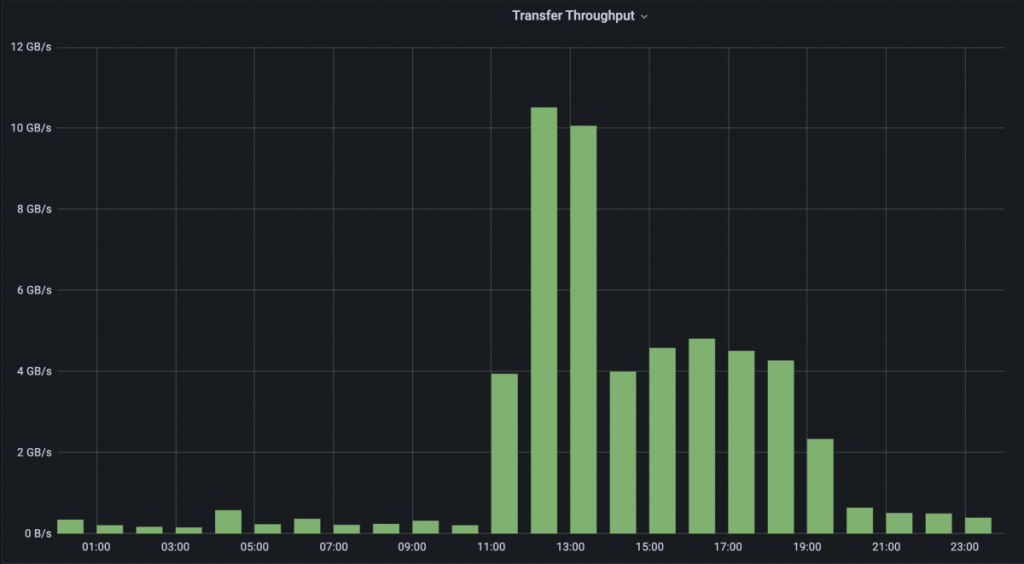

The traffic levels seen in the network view correspond to those seen by the WLCG File Transfer Service (FTS) visualization tools (Figure 2). It’s useful to consider both views, to ensure the network performance is correctly viewed as part of the overall true end-to-end transfer picture. The FTS view is also a reminder that while network administrators think in bits per second, researchers think in bytes, so make sure you’re comparing Gbit/s and GB/s correctly!

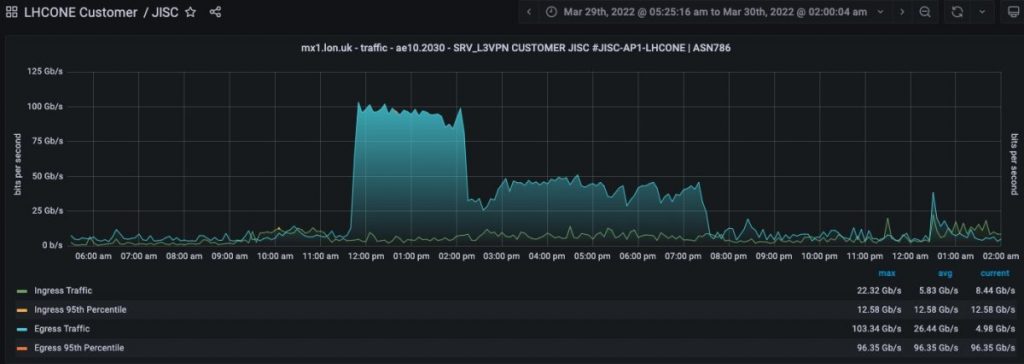
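As a quick check on the bits-versus-bytes point, the following Python sketch converts a link rate in Gbit/s to GB/s and estimates the data volume for a two-hour window. It assumes decimal units (1 GB = 10^9 bytes), a link saturated for the whole period, and no protocol overhead, so the result is an upper bound rather than a measured figure. The function names are hypothetical.

```python
def gbit_to_gbyte_per_s(gbit_per_s):
    """Convert a rate in Gbit/s to GB/s (8 bits per byte, decimal units)."""
    return gbit_per_s / 8

def volume_tb(gbit_per_s, seconds):
    """Upper-bound data volume in TB moved at a constant rate for `seconds`."""
    return gbit_to_gbyte_per_s(gbit_per_s) * seconds / 1000

rate_gbyte = gbit_to_gbyte_per_s(100)   # a full 100G link
two_hours_tb = volume_tb(100, 2 * 3600)  # the two-hour period in Figure 1

print(rate_gbyte)    # 12.5
print(two_hours_tb)  # 90.0
```

So a network graph showing 100Gbit/s and a transfer tool showing roughly 12.5 GB/s are describing the same rate.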

It was also interesting to see this traffic reflected in BRIAN, the new monitoring platform for the GÉANT pan-European research and education backbone network (Figure 3). GÉANT provides connectivity across Europe for Jisc and the other National Research and Education Networks (NRENs) and internationally to other continents and regions. BRIAN has both public views and login-restricted views of different traffic paths, including the Janet traffic to/from GÉANT and the LHCONE traffic to/from Janet.

Seeing 100Gbit/s of traffic, and 100% of the LHCONE CERN data, coming into Imperial over IPv6 is a great demonstration of the performance and capability of both IPv6 and Janet.

Adapted from the original post featured on Shaping the future of Janet.

Tim Chown is a Network Development Manager at Jisc, a not-for-profit company that provides network and IT services and digital resources in support of higher education institutions and research in the United Kingdom.
