In the previous posts in this series (Part 1, Part 2), we had NAT traversal in a state where we couldn’t get through everything but had a backup for when we failed. If you’re not satisfied with good enough, there’s still a lot more we can do!

What follows is a somewhat miscellaneous set of tricks, which can help us out in specific situations. None of them will solve NAT traversal by itself, but by combining them judiciously, we can get incrementally closer to a 100% success rate.

The benefits of birthdays

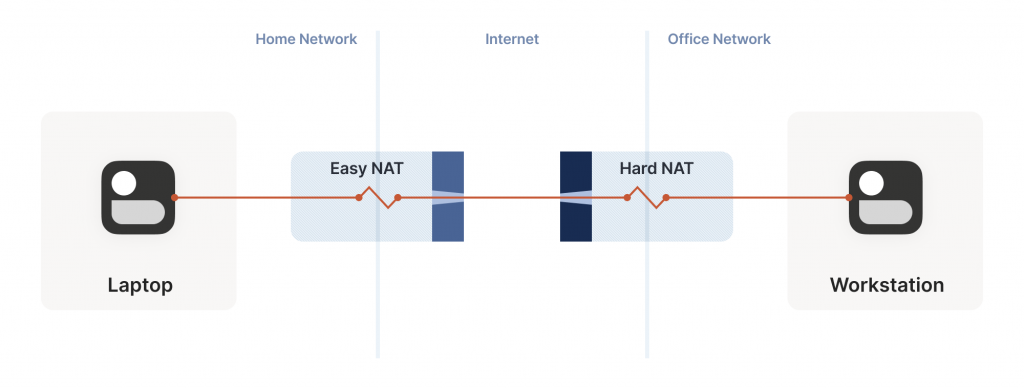

Let’s revisit our problem with hard NATs. The key issue is that the side with the easy NAT doesn’t know what ip:port to send to on the hard side. But must send to the right ip:port in order to open up its firewall to return traffic. What can we do about that?

Well, we know some ip:port for the hard side, because we ran STUN. Let’s assume that the IP address received is correct. That’s not necessarily true, but let’s run with the assumption for now. As it turns out, it’s mostly safe to assume this. (If you’re curious why, see REQ-2 in RFC 4787.)

If the IP address is correct, our only unknown is the port. There are 65,535 possibilities; could we try all of them? At 100 packets/sec, that’s a worst case of 10 minutes to find the right one. It’s better than nothing, but not great. And it really looks like a port scan (because in fairness, it is), which may anger network intrusion detection software.

We can do much better than that, with the help of the birthday paradox. Rather than open one port on the hard side and have the easy side try 65,535 possibilities, let’s open, say, 256 ports on the hard side (by having 256 sockets sending to the easy side’s ip:port), and have the easy side probe target ports at random.

I’ll spare you the detailed maths, but you can check out the dinky python calculator I made while working it out. The calculation is a very slight variant on the ‘classic’ birthday paradox, because it’s looking at collisions between two sets containing distinct elements, rather than collisions within a single set. Fortunately, the difference works out slightly in our favour! Here’s the chances of a collision of open ports (that is, successful communication), as the number of random probes from the easy side increases, and assuming 256 ports on the hard side:

| Number of random probes | Chance of success |

| 174 | 50% |

| 256 | 64% |

| 1,024 | 98% |

| 2,048 | 99.90% |

Table 1 — Chance of an open port collision.

If we stick with a fairly modest probing rate of 100 ports/sec, half the time we’ll get through in under two seconds. And even if we get unlucky, 20 seconds in we’re virtually guaranteed to have found a way in, after probing less than 4% of the total search space.

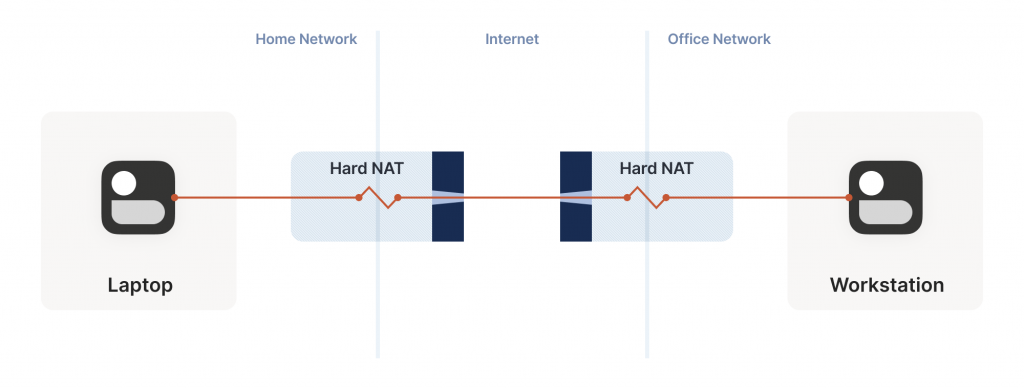

That’s great! With this additional trick, one hard NAT in the path is an annoying speedbump, but we can manage. What about two?

We can try to apply the same trick, but now the search is much harder. Each random destination port we probe through a hard NAT also results in a random source port. That means we’re now looking for a collision on a {source port, destination port} pair, rather than just the destination port.

Again, I’ll spare you the calculations, but after 20 seconds using the same regime as the previous setup (256 probes from one side, 2,048 from the other), our chance of success is 0.01%.

This shouldn’t be surprising if you’ve studied the birthday paradox before. The birthday paradox lets us convert N ‘effort’ into something on the order of sqrt(N). But we squared the size of the search space, so even the reduced amount of effort is still a lot more effort. To hit a 99.9% chance of success, we need each side to send 170,000 probes. At 100 packets/sec, that’s 28 minutes of trying before we can communicate. Fifty percent of the time we’ll succeed after ‘only’ 54,000 packets, but that’s still nine minutes of waiting around with no connection. Still, that’s better than the 1.2 years it would take without the birthday paradox.

In some applications, 28 minutes might still be worth it. Spend half an hour brute-forcing your way through, then you can keep pinging to keep the open path alive indefinitely — or at least until one of the NATs reboots and dumps all its state, then you’re back to brute forcing. But it’s not looking good for any kind of interactive connectivity.

Worse, if you look at common office routers, you’ll find that they have a surprisingly low limit on active sessions. For example, a Juniper SRX 300 maxes out at 64,000 active sessions. We’d consume its entire session table with our one attempt to get through! And that’s assuming the router behaves gracefully when overloaded. And this is all to get a single connection! What if we have 20 machines doing this behind the same router? Disaster.

Still, with this trick we can make it through a slightly harder network topology than before. That’s a big deal, because home routers tend to be easy NATs, and hard NATs tend to be office routers or cloud NAT gateways. That means this trick buys us improved connectivity for the home-to-office and home-to-cloud scenarios, as well as a few office-to-cloud and cloud-to-cloud scenarios.

Partially manipulating port maps

Our hard NATs would be so much easier if we could ask the NATs to stop being such jerks and let more stuff through. Turns out, there’s a protocol for that! Three of them, to be precise. Let’s talk about port mapping protocols.

The oldest is the Universal Plug and Play Internet Gateway Device (UPnP IGD) protocol. It was born in the late 1990s, and as such uses a lot of very 90s technology (XML, SOAP, multicast HTTP over UDP — yes, really) and is quite hard to implement correctly and securely — but a lot of routers shipped with UPnP, and a lot still do. If we strip away all the fluff, we find a very simple request-response that all three of our port mapping protocols implement: ‘Hi, please forward a WAN port to lan-ip:port,’ and ‘okay, I’ve allocated wan-ip:port for you.’

Speaking of stripping away the fluff — some years after UPnP IGD came out, Apple launched a competing protocol called NAT Port Mapping Protocol (NAT-PMP). Unlike UPnP, it only does port forwarding, and is extremely simple to implement, both on clients and on NAT devices. A little bit after that, NAT-PMP v2 was reborn as Port Control Protocol (PCP).

So, to help our connectivity further, we can look for UPnP IGD, NAT-PMP and PCP on our local default gateway. If one of the protocols elicits a response, we request a public port mapping. You can think of this as a sort of supercharged STUN. In addition to discovering our public ip:port, we can instruct the NAT to be friendlier to our peers, by not enforcing firewall rules for that port. Any packet from anywhere that lands on our mapped port will make it back to us.

You can’t rely on these protocols being present. They might not be implemented on your devices. They might be disabled by default, and nobody knew to turn them on. They might have been disabled by policy.

Disabling by policy is fairly common because UPnP suffered from a number of high-profile vulnerabilities (since fixed, so newer devices can safely offer UPnP, if implemented properly). Unfortunately, many devices come with a single ‘UPnP’ checkbox that actually toggles UPnP, NAT-PMP and PCP all at once, so folks concerned about UPnP’s security end up disabling the perfectly safe alternatives as well.

Still, when available, it effectively makes one NAT vanish from the data path, which usually makes connectivity trivial. But let’s look at the unusual cases.

Negotiating numerous NATs

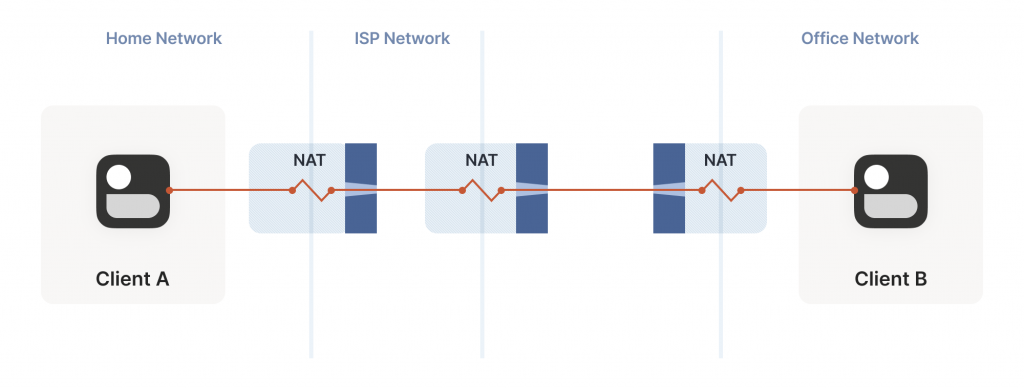

So far, the topologies we’ve looked at have each client behind one NAT device, with the two NATs facing each other. What happens if we build a ‘double NAT’, by chaining two NATs in front of one of our machines?

In this example, not much of interest happens. Packets from client A go through two different layers of NAT on their way to the Internet. But the outcome is the same as it was with multiple layers of stateful firewalls; the extra layer is invisible to everyone, and our other techniques will work fine regardless of how many layers there are. All that matters is the behaviour of the ‘last’ layer before the Internet because that’s the one that our peer has to find a way through.

The big thing that breaks is our port mapping protocols. They act upon the layer of NAT closest to the client, whereas the one we need to influence is the one furthest away. You can still use the port mapping protocols, but you’ll get an ip:port in the ‘middle’ network, which your remote peer cannot reach. Unfortunately, none of the protocols give you enough information to find the ‘next NAT up’ to repeat the process there, although you could try your luck with a traceroute and some blind requests to the next few hops.

Breaking port mapping protocols is the reason why the Internet is so full of warnings about the evils of double-NAT, and how you should bend over backwards to avoid them. But in fact, double-NAT is entirely invisible to most Internet-using applications, because most applications don’t try to do this kind of explicit NAT traversal.

I’m definitely not saying that you should set up a double-NAT in your network. Breaking the port mapping protocols will degrade multiplayer on many video games, and will likely strip IPv6 from your network, which robs you of some very good options for NAT-free connectivity. But, if circumstances beyond your control force you into a double-NAT, and you can live with the downsides, most things will still work fine.

This is a good thing, because do you know what circumstances beyond your control force you to double-NAT? In the next post we’ll talk Carrier-Grade NAT.

David Anderson is a software engineer at Tailscale, interested in distributed systems and cluster management, electronics, and writing write open-source software.

This post is adapted from the original at Tailscale Blog.

The views expressed by the authors of this blog are their own and do not necessarily reflect the views of APNIC. Please note a Code of Conduct applies to this blog.