The Internet architecture, by which I mean the layered arrangement of Internet functionality and its basic service model of best-effort packet delivery, has remained almost completely unchanged since its introduction. However, to accommodate an increasingly stringent set of application requirements (including lower latency, higher reliability, tighter security, and improved privacy), we are in the midst of a historic reshaping of our Internet infrastructure.

This reshaping is driven most notably by cloud and content providers (CCPs), many of whom, in addition to having high-capacity backbone networks connecting their data centres, have recently built user-facing networks with a large number of points-of-presence (PoPs) positioned at the network edge close to their clients. In-network packet processing is applied within these PoPs to implement functionalities such as flow termination, load balancing, caching, and DDoS protection (scrubbing).

These private networks carry a significant fraction of Internet traffic, so their impact on the overall Internet is substantial. In addition, there are edge service providers (ESPs) — such as Fastly, Akamai, and Cloudflare — whose PoPs apply similar in-network processing. The functionality added by ESP and CCP private networks is not part of an Internet-wide standard but instead is idiosyncratic to each provider.

These developments demonstrate beyond doubt that Internet users can be better served by various forms of in-network packet processing. However, there are three issues with the recent deployment of these in-network packet processing services:

- Such services are beyond the reach of the Internet architecture, which mandates that the network merely forwards IP packets.

- These in-network services are limited to those that can be applied to traffic from unmodified clients that are unaware of these services.

- The companies desiring the benefits of in-network services (that is, better service for the clients of their applications) are turning to CCPs and ESPs, not the traditional Internet Service Providers (ISPs).

The challenge before us is to fully realize this vision of in-network processing in an architecturally coherent manner so that it can:

- Go beyond the current limitations of backwards-compatibility by extending the Internet’s service model to encompass a wider set of functionality, and

- Be offered by traditional ISPs (not just CCP private networks), making it available to all.

A collaboration involving colleagues at the University of Washington, Mount Holyoke College, and New York University is attempting to meet this challenge. This post outlines our approach: an architectural design that naturally incorporates in-network processing, the features this design might support, and why we think this proposal might have a chance at adoption.

Enabling architectural change

Changing any large infrastructure is difficult, but the Internet’s architectural stagnation has a more fundamental cause. In the canonical Internet architecture, layer three (L3) plays two roles:

- It allows all L2 networks to interconnect via a universal protocol and thus must be deployed in all routers. This widespread deployment makes it essentially impossible to change L3’s basic semantics, both now and in the future. All other layers are extensible in that they can accommodate different incarnations, but L3 is the unchanging narrow waist whose functionality is largely fixed.

- As the interface to L4, it provides the service model (best-effort packet delivery) on which transport protocols and applications must be built. We would like the service model to be extensible, like the other layers, so we can address new application requirements as they arise, but those extensions must be implemented in L3.

Thus, because L3 is the interface to L2, it must be deployed in all routers and is therefore effectively impossible to change. Yet, as the interface to L4, it is also responsible for satisfying application requirements. This creates the architectural paradox of the Internet: the only layer that is positioned to meet new application requirements is the only layer that is not extensible. This is why the Internet, which is otherwise so extensible at the higher and lower layers, has a rigid and unchanging service model.

While this critique might sound generally familiar, this particular formulation points directly to a solution. We should split L3 into two layers: the old L3 continues to connect L2 networks as it does now, and a new in-network layer, called the service layer or L3.5, is tasked with supporting applications.

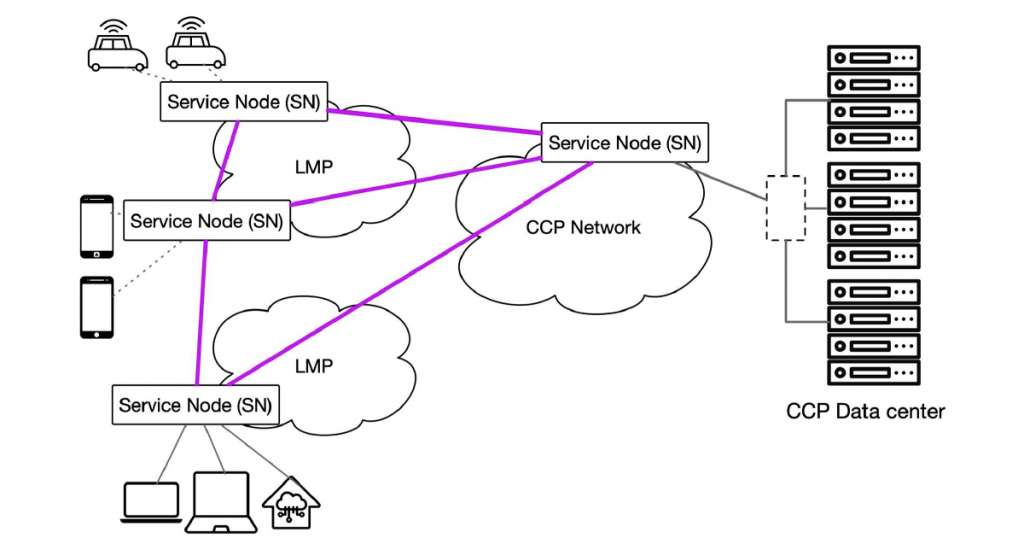

The service layer is layered on top of L3, but it need not be implemented on all routers. Instead, we propose that the service layer be implemented on clusters of servers called service nodes placed at the network edge relatively close to clients, such as in current PoPs. Every host is associated with one or more nearby service nodes that function as the host’s first hop at the service layer (Figure 1).

For point-to-point delivery models, the typical communication pattern at the service layer is for a packet to travel from the source, to a service node associated with the source, to a service node associated with the destination, to the destination. All service layer communication is tunnelled over L3 (that is, IP).
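As a rough illustration of the point-to-point pattern, the Python sketch below computes the service-layer hops for a packet and the IP tunnel segments between them. The host and node names, the `FIRST_HOP` association table, and the rule that collapses the path when both hosts share a service node are all hypothetical assumptions of this sketch, not part of the EI design as described.

```python
from typing import Dict, List, Tuple


def service_layer_path(src: str, dst: str, first_hop: Dict[str, str]) -> List[str]:
    """Return the service-layer hops for a point-to-point packet.

    Nominal path: source -> source's service node ->
    destination's service node -> destination. If both hosts use the
    same service node, the duplicate hop is collapsed (an assumption
    made only for this sketch).
    """
    nominal = [src, first_hop[src], first_hop[dst], dst]
    path = [nominal[0]]
    for hop in nominal[1:]:
        if hop != path[-1]:
            path.append(hop)
    return path


def tunnel_segments(path: List[str]) -> List[Tuple[str, str]]:
    """Each consecutive pair of hops is carried as one tunnel over IP."""
    return list(zip(path, path[1:]))


# Hypothetical association table: host -> first-hop service node.
FIRST_HOP = {"alice": "sn-east", "bob": "sn-west", "carol": "sn-east"}
```

For example, `service_layer_path("alice", "bob", FIRST_HOP)` yields a four-hop path and three tunnel segments, while a packet between two hosts served by `sn-east` passes through a single service node.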

The service layer implements a set of in-network services, similar to but more general than the services currently offered in private CCP networks. The tunnelling protocol allows the source to specify which service to invoke at the service layer. This explicit invocation means that new services are no longer required to be backwards-compatible and makes the service model fully extensible. We, therefore, call this approach the Extensible Internet (EI).
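To show what explicit invocation could look like on the wire, here is a minimal Python sketch of a shim header that names the requested service. The header layout (version, flags, a 16-bit `service_id`, payload length), the helper names, and the idea of carrying it as an IP or UDP payload are hypothetical; the post does not specify EI's tunnelling format.

```python
import struct
from dataclasses import dataclass
from typing import Callable, Dict

# Hypothetical EI shim header (network byte order), 8 bytes total:
#   version (1 B) | flags (1 B, unused here) | service_id (2 B) | payload length (4 B)
HEADER = struct.Struct("!BBHI")
EI_VERSION = 1


@dataclass
class EIPacket:
    service_id: int  # which in-network service the source is invoking
    payload: bytes


def encapsulate(pkt: EIPacket) -> bytes:
    """Prepend the shim header; the result would be tunnelled over IP."""
    return HEADER.pack(EI_VERSION, 0, pkt.service_id, len(pkt.payload)) + pkt.payload


def decapsulate(data: bytes) -> EIPacket:
    """Parse and validate the shim header at a service node."""
    if len(data) < HEADER.size:
        raise ValueError("truncated EI header")
    version, _flags, service_id, length = HEADER.unpack_from(data)
    if version != EI_VERSION:
        raise ValueError(f"unsupported EI version {version}")
    payload = data[HEADER.size:HEADER.size + length]
    if len(payload) != length:
        raise ValueError("truncated EI payload")
    return EIPacket(service_id, payload)


def dispatch(data: bytes, services: Dict[int, Callable[[bytes], bytes]]) -> bytes:
    """Run the service the source asked for, instead of inferring it from traffic."""
    pkt = decapsulate(data)
    handler = services.get(pkt.service_id)
    if handler is None:
        raise KeyError(f"service {pkt.service_id} not offered at this node")
    return handler(pkt.payload)
```

Because the service is named explicitly, a node can reject unknown service IDs outright; nothing has to be inferred from the packets of unmodified clients.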

EI’s services are selected by some governance body, such as the IETF, and are implemented in software, so the standards are open-source code, not written specifications. Each service node runs a standardized execution environment — supporting a small set of basic primitives (for example, packet in/out, read/write stable storage, read/write ephemeral storage) — which provides a Write-Once-Run-Anywhere (WORA) interface for service implementation. Service nodes must also have a runtime or orchestration framework to deal with scaling and failures, but this need not be standardized.
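The Python sketch below illustrates the WORA idea under stated assumptions. A hypothetical `ExecutionEnv` interface exposes only the three kinds of primitives listed above (packet out, stable storage, ephemeral storage; packet in arrives as a call to the module's `on_packet`). The example `LookupService` module is written purely against that interface, so any node providing it could run the module unchanged. The method names, the in-memory test environment, and the lookup service itself are illustrative inventions.

```python
from abc import ABC, abstractmethod
from typing import Dict, List, Optional, Tuple


class ExecutionEnv(ABC):
    """Hypothetical standardized primitives offered by every service node."""

    @abstractmethod
    def send(self, dst: str, payload: bytes) -> None: ...

    @abstractmethod
    def stable_read(self, key: str) -> Optional[bytes]: ...

    @abstractmethod
    def stable_write(self, key: str, value: bytes) -> None: ...

    @abstractmethod
    def ephemeral_read(self, key: str) -> Optional[bytes]: ...

    @abstractmethod
    def ephemeral_write(self, key: str, value: bytes) -> None: ...


class InMemoryEnv(ExecutionEnv):
    """Toy environment for testing; real nodes would back these with NICs and disks."""

    def __init__(self) -> None:
        self.sent: List[Tuple[str, bytes]] = []
        self._stable: Dict[str, bytes] = {}
        self._ephemeral: Dict[str, bytes] = {}

    def send(self, dst: str, payload: bytes) -> None:
        self.sent.append((dst, payload))

    def stable_read(self, key: str) -> Optional[bytes]:
        return self._stable.get(key)

    def stable_write(self, key: str, value: bytes) -> None:
        self._stable[key] = value

    def ephemeral_read(self, key: str) -> Optional[bytes]:
        return self._ephemeral.get(key)

    def ephemeral_write(self, key: str, value: bytes) -> None:
        self._ephemeral[key] = value


class LookupService:
    """Example module using only ExecutionEnv, so it is portable across nodes."""

    def __init__(self, env: ExecutionEnv) -> None:
        self.env = env
        self.cache_hits = 0

    def on_packet(self, src: str, payload: bytes) -> None:
        # Packet in: the payload is a key; reply with its value (empty if unknown).
        key = payload.decode()
        value = self.env.ephemeral_read(key)
        if value is not None:
            self.cache_hits += 1
        else:
            value = self.env.stable_read(key) or b""
            self.env.ephemeral_write(key, value)  # cache for later requests
        self.env.send(src, value)  # packet out
```

Scaling and failure handling are deliberately absent here; in line with the text, they would live in a node-specific runtime rather than in the standardized interface.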

There is nothing technically novel in this approach, but EI’s innovation lies in its deployment model. EI is completely backwards-compatible (that is, unchanged hosts or domains can continue to function using the current IP infrastructure) and leverages WORA software modules to rapidly deploy new services on all Internet service nodes. This is in stark contrast to how one would roll out a new L3 protocol today, which would require major infrastructure changes and rely on a set of domain-by-domain adoption decisions.

This is merely a brief sketch of EI’s design, and we have left out many details (for example, how to deal with service node failures, interdomain routing, and composite services). However, one aspect worth noting is that we assume future service nodes will have secure enclaves and a variety of hardware accelerators (for example, SmartNICs, GPUs, and cryptographic accelerators). The only requirement is that each service module that includes code for such accelerators must also include code that runs on commodity CPUs.
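One way to read the accelerator requirement is as a packaging rule: a module may ship accelerator-specific implementations, but a CPU implementation is mandatory, and each node picks the best variant it can run. The Python sketch below encodes that rule; the `ServiceModule` structure, the accelerator labels, and the preference order are hypothetical choices for illustration only.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, FrozenSet, Sequence, Tuple

Impl = Callable[[bytes], bytes]


@dataclass
class ServiceModule:
    """Hypothetical packaging of one EI service module."""
    name: str
    cpu_impl: Impl  # mandatory: must run on commodity CPUs
    accel_impls: Dict[str, Impl] = field(default_factory=dict)  # optional, keyed by accelerator type

    def __post_init__(self) -> None:
        if not callable(self.cpu_impl):
            raise ValueError(f"module {self.name!r} must ship a CPU implementation")


def select_impl(module: ServiceModule, available: FrozenSet[str],
                preference: Sequence[str] = ("smartnic", "gpu", "crypto")) -> Tuple[str, Impl]:
    """Pick the most preferred accelerator that both the node and module support, else CPU."""
    for accel in preference:
        if accel in available and accel in module.accel_impls:
            return accel, module.accel_impls[accel]
    return "cpu", module.cpu_impl
```

The mandatory CPU path is what keeps the module Write-Once-Run-Anywhere: a node without any accelerators still runs every standardized service, only more slowly.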

Potential EI-supported services

Given this architecture, we now ask what services it might support; that is, in what ways can EI expand the Internet’s service model? The design space is vast, so here we review only a few possibilities that might provide immediate benefit, grouped into broad categories:

- Basics: EI’s services will obviously include the services already widely offered on private networks, such as flow termination, load balancing, caching, and DDoS protection (scrubbing). It will also include some basic packet processing functions such as encryption, compression, filtering, aggregation, and simple querying.

- New delivery models: More interestingly, EI could also offer various additional delivery models, including multicast, anycast, pub/sub, mobility support, redirection, and QoS (more like SD-WAN than IntServ). One might argue that services like multicast and anycast have long been part of IP, so we do not need EI. However, there has never been a convincing interdomain design for IP multicast, nor a scalable version of anycast, whereas it is straightforward to implement these and other services at the service layer with its more flexible packet processing capabilities.

- Security and privacy: The most important class of new services may be improvements to security and privacy. For instance, DDoS protection schemes that are more effective than scrubbing can be deployed on EI. More fundamentally, with secure enclaves in all service nodes, EI could support an attestation-checking service that would ensure, at a scale that is not practical in today’s Internet, that clients are exchanging traffic with correctly operating servers. In addition, privacy-preserving designs resembling oDNS, Tor, and private relays could build into the very fabric of the Internet the ability to hide a user’s name lookups and communication patterns; these functions are currently available only through special-purpose infrastructures, whose existence indicates significant user interest in such capabilities.

- Frameworks: Moving up the stack, EI could deploy frameworks like Istio, making service meshes integral to the Internet infrastructure. EI could also support policy frameworks like OPA and telemetry services that could help users understand various performance problems.

- Common use cases: Combinations of these services could support various use cases. For instance, autonomous vehicles could use mobility support for connectivity and redirection to find local resources. AR/VR and gaming applications might use pub/sub to reach nearby users, and aggregation and compression to send data to the application’s backend. IoT applications might use simple queries over data and aggregation and compression before sending data to their backend.

- Radical new designs: EI could easily deploy radically new approaches such as information-centric networking (ICN) designs. An ICN design could be deployed as a standardized service, supported by service modules in each service node, and would therefore become available to all users. We are sure there are other innovations in our future, and the ability to easily deploy services we cannot currently imagine is the greatest long-term benefit of EI.
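As one example of a new delivery model, the Python sketch below shows a pub/sub (multicast-like) service module at a single service node. It delivers a published message to local subscribers and sends one copy to each peer service node that has subscribers, which then fans out locally. The class, the full-mesh peer assumption, and the `from_peer` flag used to avoid forwarding loops are hypothetical simplifications, not a proposed EI standard.

```python
from collections import defaultdict
from typing import Dict, List, Set, Tuple


class PubSubNode:
    """Hypothetical pub/sub service module running at one service node."""

    def __init__(self, name: str) -> None:
        self.name = name
        self.local_subs: Dict[str, Set[str]] = defaultdict(set)    # topic -> local hosts
        self.remote_nodes: Dict[str, Set[str]] = defaultdict(set)  # topic -> peer nodes with subscribers
        self.outbox: List[Tuple[str, str, bytes]] = []             # (next hop, topic, payload)

    def subscribe_local(self, topic: str, host: str) -> None:
        self.local_subs[topic].add(host)

    def learn_remote(self, topic: str, node: str) -> None:
        # Record a peer service node that has subscribers for this topic.
        if node != self.name:
            self.remote_nodes[topic].add(node)

    def publish(self, topic: str, payload: bytes, from_peer: bool = False) -> None:
        # Deliver to every local subscriber.
        for host in sorted(self.local_subs[topic]):
            self.outbox.append((host, topic, payload))
        # Forward one copy per peer node (not per remote subscriber); copies
        # received from a peer are not re-forwarded, avoiding loops in a full mesh.
        if not from_peer:
            for node in sorted(self.remote_nodes[topic]):
                self.outbox.append((node, topic, payload))
```

The per-node fan-out is the kind of flexible packet processing that is straightforward in software at the service layer but has proven difficult to standardize across domains at L3.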

Why EI, why now, and what’s next?

Given the failure of previous attempts to significantly change the Internet architecture, why do we have any hope that EI will be adopted? There are four key reasons.

- EI was expressly designed to be the minimal change that would resolve the Internet’s architectural paradox, and the insertion of the service layer allows the current Internet to continue functioning without change.

- EI can be implemented on the edge computing resources that many carriers have already deployed or are planning to deploy, so this architecture is largely consistent with their future physical infrastructure.

- New services are embodied in standardized software that can be deployed on all service nodes, so there are no per-vendor and per-domain deployment delays.

- Most fundamentally, EI is built on conjectures — that applications can benefit from in-network processing at PoPs and that this processing can be done in software, not ASICs — that have been fully validated by the current CCP/ESP private networks.

Now is an auspicious time to launch this effort because the superior functionality of the CCP private networks currently poses a clear threat to the dominance, and perhaps even the future viability, of the public Internet.

The EI proposal would allow traditional ISPs, who naturally have resources at their network edge, to provide an assortment of generic in-network services that would be a part of the Internet’s new expanded service model. This saves the CCPs from having to extend the reach of their user-facing private networks, while allowing them to continue implementing custom functionality in their backbone networks to meet the special demands of their inter-data centre traffic.

In addition, society benefits because all users and applications gain access to an ever-expanding set of in-network services that are part of the Internet architecture. Thus, adopting EI benefits all parties (ISPs, CCPs, and society) and preserves the role of the public Internet by transforming it into an extensible platform for application support.

As for future plans, we are currently finishing an early prototype of EI and plan to deploy it on research testbeds and various clouds. This will create an experimental platform where we can explore how best to use in-network processing to support a wide class of Internet applications.

We invite all parties — the research community and industry — to participate in this exploratory phase and help us take the next step towards building an Extensible Internet. Please contact us at shenker@icsi.berkeley.edu if you are interested in participating.

I would like to acknowledge my co-leads on this project who are doing all the real work while I write blog posts: Arvind Krishnamurthy (University of Washington), James McCauley (Mount Holyoke College), and Aurojit Panda (New York University).

Scott Shenker is a researcher at the International Computer Science Institute and a professor of the Graduate School at the University of California, Berkeley.

The views expressed by the authors of this blog are their own and do not necessarily reflect the views of APNIC. Please note a Code of Conduct applies to this blog.