In February 2020, the RIPE NCC announced the availability of software probes to improve RIPE Atlas coverage. With software probes, instead of installing a physical network device (the probe) in your network, you install the probe’s software package on a machine connected to your network. The RIPE NCC provides packages for installing the probe on CentOS and Debian distributions. There is even a Docker image developed by the community. I used the latter option to host another probe on an Orange Pi.

One might reasonably wonder though: ‘Hey, do you trust that this device won’t intercept your local network traffic, or tamper with your other devices in any way?’ As stated in the FAQ, the probe executes measurement commands against the public Internet only. For the security-conscious, the advice is to install the probe outside the local network (such as on a DMZ network).

I had followed this recommendation with my hardware probe before, but how could I achieve the same isolation in Docker? To make matters worse, I am using the CPE provided by my ISP, which has very limited capabilities for configuring new networks. One possible solution is to reject any traffic from my probe’s network to internal networks via iptables. However, this would require additional steps every time the RIPE Atlas probe is redeployed. Ideally, my goal is to deploy the software probe out-of-the-box using a Docker Compose manifest, without any additional commands.
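For comparison, the iptables approach could look roughly like the sketch below. It only prints the rules instead of applying them. The function name print_reject_rules and the example subnet are hypothetical; DOCKER-USER is the chain Docker reserves for user-defined forwarding rules. Treat it as an illustration of the extra step I wanted to avoid, not as a tested firewall policy.

```shell
# Print (not apply) iptables rules that reject traffic from a container
# subnet to the RFC 1918 private ranges.
# Assumption: the function name and the example subnet are illustrative only.
print_reject_rules() {
    probe_subnet="$1"
    for private_range in 10.0.0.0/8 172.16.0.0/12 192.168.0.0/16; do
        # DOCKER-USER is evaluated before the rules Docker manages itself.
        echo "iptables -I DOCKER-USER -s ${probe_subnet} -d ${private_range} -j REJECT"
    done
}

# Example: show the rules for a hypothetical probe network.
print_reject_rules 172.18.0.0/16
```

These rules would have to be re-applied (or persisted separately) whenever the host or the probe’s network is rebuilt, which is exactly the extra step a Compose-only deployment avoids.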

So, what if the network traffic of our software probe container could be proxied through another service, which in turn tunnels it to the public Internet? This post describes how to achieve this by spinning up a dedicated Point-to-Point Protocol (PPP) connection to our ISP in Docker and using it as a ‘gateway’ for our software probe container.

Design

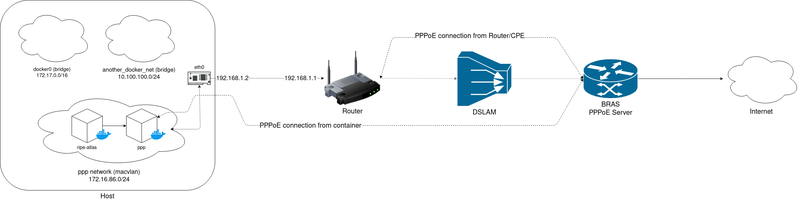

Figure 1: Deploying a RIPE Atlas software probe via PPP.

Our goal is to initiate a new PPP over Ethernet (PPPoE) connection from a container inside an isolated network, so that our RIPE Atlas probe can perform its measurements only via that PPP connection, without interfering with adjacent containers on the same host, as depicted in Figure 1.

Some ISPs (like mine) supply the PPPoE username and password on paper along with the CPE. If yours did not, you can retrieve the credentials from a configuration backup of your CPE.

The required PPP configuration can be easily generated using the pppoeconf tool, an ncurses-based utility that helps you populate /etc/ppp/peers/dsl-provider and /etc/ppp/chap-secrets with the correct values. The next step is to dockerize the PPP client. I created a small Docker image that simply invokes the PPP client. Provided that our configuration is in a file named ‘dsl-provider’ under the /etc/ppp/peers directory, we can invoke the container as follows:

$ docker run --name ppp -d --privileged \
    --device=/dev/ppp \
    -v /etc/ppp:/etc/ppp \
    tzermias/ppp call dsl-provider
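For reference, the two configuration files mentioned above look roughly like what the sketch below generates. This is an assumption based on what pppoeconf tends to produce on Debian-based systems, not a copy of my own files: the function name write_ppp_config, the interface eth0, and the credentials are placeholders, and your ISP may need different options. If your ISP authenticates with PAP rather than CHAP, the same line format applies to /etc/ppp/pap-secrets.

```shell
# Write an illustrative pppd peer file and secrets file into a directory.
# Assumption: every value here (interface, user name, password) is a
# placeholder; the option set is typical, not authoritative.
write_ppp_config() {
    ppp_dir="$1"; ppp_user="$2"; ppp_pass="$3"
    mkdir -p "${ppp_dir}/peers"
    cat > "${ppp_dir}/peers/dsl-provider" <<EOF
noipdefault
defaultroute
replacedefaultroute
hide-password
noauth
persist
plugin rp-pppoe.so
nic-eth0
user "${ppp_user}"
usepeerdns
EOF
    # Secrets format: client  server  secret  (* matches any server name)
    printf '"%s" * "%s"\n' "${ppp_user}" "${ppp_pass}" > "${ppp_dir}/chap-secrets"
    chmod 600 "${ppp_dir}/chap-secrets"
}

# Example: generate the files in a scratch directory for inspection.
scratch_dir="$(mktemp -d)"
write_ppp_config "${scratch_dir}" "user@example-isp" "changeme"
cat "${scratch_dir}/peers/dsl-provider"
```

Writing to a scratch directory first makes it easy to review the result before copying it to /etc/ppp on the Docker host.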

The PPP client requires access to the /dev/ppp device to work properly, so we need to expose it to the container. Furthermore, the ppp container must use the macvlan network driver: PPPoE discovery happens at the Ethernet layer, and macvlan attaches the container directly to the parent interface (eth0 here). A dedicated macvlan network can be created using the command below.

$ docker network create -d macvlan \
    --subnet=172.16.86.0/24 \
    --gateway=172.16.86.1 \
    -o parent=eth0 ppp

The ppp container can be attached to the network by adding the --network ppp flag to the docker run command above.

If everything went well, the logs of the ppp container will show that the call succeeded and that a new IPv4 address was assigned to the ppp0 interface of our container.

$ docker logs ppp
Plugin rp-pppoe.so loaded.
PPP session is 394
Connected to d8:67:d9:48:XX:XX via interface eth0
Using interface ppp0
Connect: ppp0 <--> eth0
PAP authentication succeeded
peer from calling number D8:67:D9:48:XX:XX authorized
local LL address fe80::dead:beef:dead:beed
remote LL address fe80::beef:beef:dead:dead
replacing old default route to eth0 [172.16.86.1]
local IP address XX.XX.XX.XX
remote IP address YY.YY.YY.YY
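If you want to script this check instead of reading the logs by eye, a small helper along these lines could pull the assigned address out of the log output. The function name ppp_local_ip is hypothetical, and the sample log lines use documentation addresses rather than real ones.

```shell
# Read pppd log lines on stdin and print the IPv4 address assigned to the
# local end of the link. Exits non-zero if no such line is found.
# Assumption: the function name is illustrative only.
ppp_local_ip() {
    awk '/^local +IP address/ { ip = $NF }
         END { if (ip == "") exit 1; print ip }'
}

# Example with canned log lines (203.0.113.0/24 is a documentation range).
printf '%s\n' \
    'PAP authentication succeeded' \
    'local  IP address 203.0.113.10' \
    'remote IP address 203.0.113.1' | ppp_local_ip
```

Against the real container this would be used as docker logs ppp 2>&1 | ppp_local_ip, since the container’s output may arrive on stderr.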

The PPP client will also create a resolv.conf file under /etc/ppp, containing DNS nameservers obtained from our peer. For this, you need to have the usepeerdns option in your /etc/ppp/peers/dsl-provider file.
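Since other containers will later mount this file, it can be worth confirming that it actually lists nameservers before going further. A minimal sketch, assuming a hypothetical helper named list_nameservers and a sample file with documentation addresses:

```shell
# Print the nameserver addresses found in a resolv.conf-style file.
# Exits non-zero if the file contains no nameserver entries.
# Assumption: the function name is illustrative only.
list_nameservers() {
    awk '$1 == "nameserver" { print $2; found = 1 }
         END { exit !found }' "$1"
}

# Example with a sample file (192.0.2.0/24 is a documentation range).
sample_resolv="$(mktemp)"
printf 'nameserver 192.0.2.53\nnameserver 192.0.2.54\n' > "${sample_resolv}"
list_nameservers "${sample_resolv}"
```

On the Docker host, the file to check would be /etc/ppp/resolv.conf.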

Container networking

Now comes the interesting part. How can we ensure that any network traffic from our ripe-atlas container will only pass through the ppp container we started earlier? The answer is to share the network stack of that container. Apart from the usual bridge, host, or none options that one can pass to the --network setting, there’s a special option named container, which allows you to use the network stack of another container. According to the docker run reference:

…

Example running a Redis container with Redis binding to localhost then running the redis-cli command and connecting to the Redis server over the localhost interface.

With the ppp container running, we can easily validate that our setup works, just by spinning up a test container as shown below and performing some tests (such as a traceroute). Don’t forget to mount the /etc/ppp/resolv.conf file in this container, to have proper DNS resolution.

$ docker run -it --network container:ppp \
    -v /etc/ppp/resolv.conf:/etc/resolv.conf:ro \
    busybox:latest traceroute google.com
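Besides the traceroute, a quick sanity check is to look at the default route inside the shared network stack: it should point at ppp0 rather than eth0. The helper below is a sketch that parses ip route output; the name default_route_dev and the sample routing table are assumptions for illustration.

```shell
# Read `ip route` output on stdin and print the interface used by the
# default route (for example ppp0).
# Assumption: the function name is illustrative only.
default_route_dev() {
    awk '$1 == "default" {
             for (i = 2; i < NF; i++)
                 if ($i == "dev") { print $(i + 1); exit }
         }'
}

# Example with a canned routing table.
printf '%s\n' \
    'default dev ppp0 scope link' \
    '172.16.86.0/24 dev eth0 scope link  src 172.16.86.2' | default_route_dev
```

With the ppp container running, the real routing table can be fed in with docker run --rm --network container:ppp busybox:latest ip route | default_route_dev.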

RIPE Atlas in Docker

I used the ripe-atlas image by jamesits for this purpose. Probe installation and registration are straightforward and well documented. On the first run, the probe generates an SSH keypair and saves the public key at /var/atlas-probe/etc/probe-key.pub. To keep these files across restarts, you simply create a new directory where any relevant ripe-atlas files will be stored and mount it into the container.

$ mkdir -p atlas-probe
$ docker run --network "container:ppp" \
    --detach --restart=always --log-opt max-size=10m \
    --cpus=1 --memory=64m --memory-reservation=64m \
    --cap-add=SYS_ADMIN --cap-add=NET_RAW --cap-add=CHOWN \
    --mount type=tmpfs,destination=/var/atlasdata,tmpfs-size=64M \
    -v ${PWD}/atlas-probe/etc:/var/atlas-probe/etc \
    -v ${PWD}/atlas-probe/status:/var/atlas-probe/status \
    -v /etc/ppp/resolv.conf:/etc/resolv.conf:ro \
    -e RXTXRPT=yes --name ripe-atlas \
    jamesits/ripe-atlas:latest

After uploading the public key to the RIPE Atlas web UI, the probe is ready to accept new measurements.

IPv6 support

If you want the probe to run IPv6 measurements, you can enable IPv6 support with the following modifications.

First, enable IPv6 support in the Docker daemon. Ensure that your /etc/docker/daemon.json contains the following:

{
  "ipv6": true,
  "fixed-cidr-v6": "2001:db8:1::/64"
}

Run systemctl restart docker so that the Docker daemon picks up these settings.
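Before restarting the daemon, a rough check like the one below can catch a forgotten setting. It is a naive text match rather than a JSON parser, and the function name daemon_has_ipv6 is hypothetical, so treat it as a convenience and not as validation of the file.

```shell
# Succeed if a daemon.json-style file sets "ipv6" to true.
# Assumption: a plain text match is good enough for a quick check;
# it does not validate the JSON itself.
daemon_has_ipv6() {
    grep -Eq '"ipv6"[[:space:]]*:[[:space:]]*true' "$1"
}

# Example with a sample file mirroring the settings above.
sample_daemon="$(mktemp)"
printf '{\n  "ipv6": true,\n  "fixed-cidr-v6": "2001:db8:1::/64"\n}\n' > "${sample_daemon}"
if daemon_has_ipv6 "${sample_daemon}"; then
    echo "IPv6 enabled"
else
    echo "IPv6 not enabled"
fi
```

On the Docker host, the file to check would be /etc/docker/daemon.json.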

The next step is to make sure that our ppp container has IPv6 support enabled. For this, we need to start the container with the net.ipv6.conf.all.disable_ipv6=0 sysctl set.

The last step is to enable IPv6 support when performing the PPP call. This can be achieved by adding the following two options to our /etc/ppp/peers/dsl-provider config.

+ipv6
ipv6cp-use-persistent
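To add these options without duplicating them on repeated runs, something like the following sketch could be used. The helper name ensure_ppp_option is hypothetical, and the example works on a scratch copy rather than the real peer file.

```shell
# Append an option to a pppd peer file only if that exact line is missing.
# Assumption: the function name is illustrative only.
ensure_ppp_option() {
    peer_file="$1"; option="$2"
    grep -qxF -- "${option}" "${peer_file}" 2>/dev/null \
        || printf '%s\n' "${option}" >> "${peer_file}"
}

# Example on a scratch file; running it twice must not duplicate lines.
sample_peer="$(mktemp)"
printf 'usepeerdns\n' > "${sample_peer}"
for run in 1 2; do
    ensure_ppp_option "${sample_peer}" '+ipv6'
    ensure_ppp_option "${sample_peer}" 'ipv6cp-use-persistent'
done
cat "${sample_peer}"
```

Pointed at /etc/ppp/peers/dsl-provider, this keeps the change repeatable when the host is set up again.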

Putting it together

To sum up, one should execute the following commands to have the probe and the PPP client up and running:

# Create network
docker network create -d macvlan --subnet=172.16.86.0/24 \
    --gateway=172.16.86.1 -o parent=eth0 ppp

# Create ppp container
docker run --name ppp --network ppp --restart unless-stopped \
    -d --privileged --device=/dev/ppp \
    --sysctl net.ipv6.conf.all.disable_ipv6=0 \
    -v /etc/ppp:/etc/ppp \
    tzermias/ppp call dsl-provider

# Create directory for atlas-probe config
mkdir -p atlas-probe

# Create ripe-atlas container
docker run --network "container:ppp" \
    --detach --restart=always --log-opt max-size=10m \
    --cpus=1 --memory=64m --memory-reservation=64m \
    --cap-add=SYS_ADMIN --cap-add=NET_RAW --cap-add=CHOWN \
    --mount type=tmpfs,destination=/var/atlasdata,tmpfs-size=64M \
    -v ${PWD}/atlas-probe/etc:/var/atlas-probe/etc \
    -v ${PWD}/atlas-probe/status:/var/atlas-probe/status \
    -v /etc/ppp/resolv.conf:/etc/resolv.conf:ro \
    -e RXTXRPT=yes --name ripe-atlas \
    jamesits/ripe-atlas:latest

I also created a docker-compose.yaml file that spins up the network and the containers, to avoid typing those commands again and again.

For those who wish to use this probe for their measurements, just use probe #1001487.

Software probes can extend the reach of the RIPE Atlas platform to more networks and geographic locations. Of the 260 probes currently deployed in Greece, a large portion are clearly concentrated in the Athens metro area. Wouldn’t it be nice to have Internet measurements from less densely populated areas, like islands?

Furthermore, the option to share one container’s network stack with another can be leveraged for other applications, like proxying traffic through a VPN tunnel. If you have a Raspberry Pi sitting idle on your network, I suggest you give it a try!

Aris Tzermias is an experienced Site Reliability Engineer focusing on automation and deployment of services, with a systems administration background.

This post was originally published at Aris Tzermias’ blog.

The views expressed by the authors of this blog are their own and do not necessarily reflect the views of APNIC. Please note a Code of Conduct applies to this blog.