Transmission Control Protocol (TCP) is the Internet’s dominant transport protocol, but it has some flaws. Other protocols could help resolve these flaws, but deploying them is difficult, largely because of Internet ossification: a progressive and substantial reduction in the Internet’s flexibility to support new protocols above IP, which has made new designs unreliable on the global network.

In the mid-nineties, the Secure Sockets Layer (SSL) protocol was proposed to secure emerging e-commerce websites and online banking. SSL was designed to operate on top of any reliable transport protocol, such as TCP, and eventually evolved into Transport Layer Security (TLS), which is now almost ubiquitous. TLS secures a vast range of Internet communications, from bank transfers to this simple blog post.

While TLS was being improved toward version 1.3, another secure and reliable transport protocol, this time based on UDP, was born in Google’s research lab. QUIC started as a proprietary protocol that Google used to speed up the transfer of web objects (for example, CSS files, JavaScript files, and images) by sending them in parallel streams, each with its own transport context. QUIC combines the features we find in TLS and TCP and tunes them for HTTP.

QUIC works above the User Datagram Protocol (UDP) and is implemented in user space. This choice makes QUIC easier to update, since its lifecycle depends on the application using it directly rather than on the lifecycle of an operating system such as Linux, Windows, or Apple’s operating systems.


QUIC, however, has several major issues tied to its use of UDP. Each call to the kernel’s sendmsg interface sends a single UDP datagram to the network, and because of ossification, QUIC currently uses 1,350-byte UDP packets. There are methods to speed this up (for example, batching datagrams with sendmmsg or using UDP Generic Segmentation Offload), but even then QUIC remains far from the performance of TLS/TCP with TCP Segmentation Offload (TSO) enabled, and it would probably take some time to approach TLS/TCP’s efficiency.
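
To give a rough sense of the gap, the back-of-the-envelope sketch below counts how many units a sender hands down for a single transfer: one sendmsg() call per unbatched UDP datagram, versus one TSO super-segment. It assumes, for illustration only, that 1,350 bytes is the payload of each datagram, that header overhead can be ignored, and that a TSO super-segment is capped at 64 KiB; the 1 MiB transfer size is likewise an arbitrary choice.

```python
# Back-of-the-envelope comparison: one sendmsg() call per UDP datagram
# versus one TSO super-segment handed to the NIC.
# Assumptions (not from the article): 1,350 bytes is treated as the
# payload of each datagram, header overhead is ignored, and a TSO
# super-segment is capped at 64 KiB.

QUIC_DATAGRAM_BYTES = 1_350          # datagram size cited in the text
TSO_SUPER_SEGMENT_BYTES = 64 * 1024  # assumed TSO upper bound


def units_needed(total_bytes: int, unit_bytes: int) -> int:
    """Ceiling division: how many units of unit_bytes carry total_bytes."""
    return (total_bytes + unit_bytes - 1) // unit_bytes


if __name__ == "__main__":
    transfer = 1024 * 1024  # a 1 MiB web object, chosen for illustration
    udp_calls = units_needed(transfer, QUIC_DATAGRAM_BYTES)
    tso_segments = units_needed(transfer, TSO_SUPER_SEGMENT_BYTES)
    print(f"sendmsg() calls without batching: {udp_calls}")  # 777
    print(f"TSO super-segments: {tso_segments}")             # 16
```

Both transfers still leave the NIC as MTU-sized packets; the difference lies in how many times the per-packet path through the kernel stack is traversed, which is the cost that batching and segmentation offload try to amortize for QUIC.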

What is TCPLS?

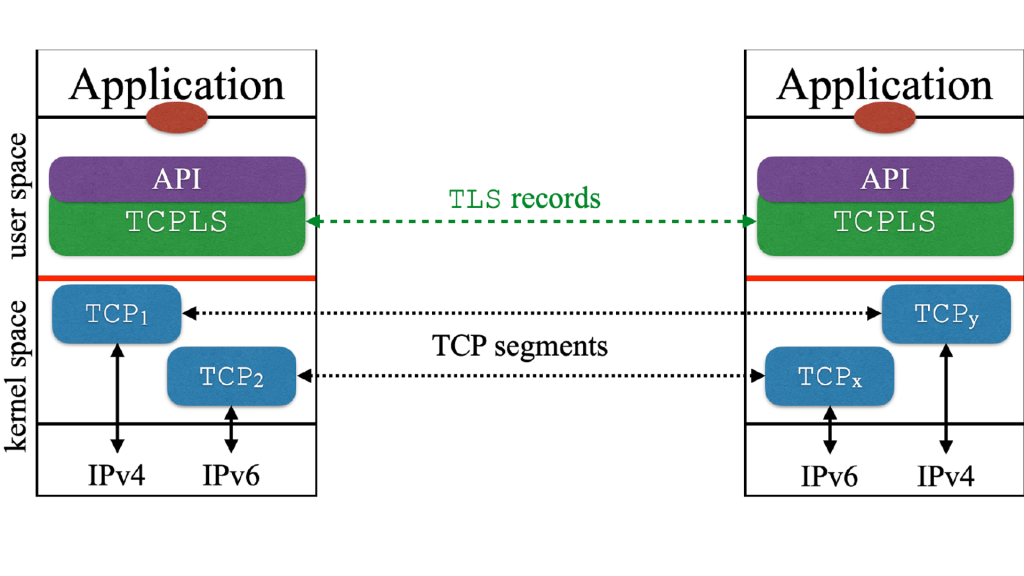

What if we could do this the other way around? What if we found a way to improve TLS/TCP to approach QUIC’s features and advantages while benefitting from TCP’s decades of performance improvements? In our HotNETs paper, TCPLS: Closely Integrating TCP and TLS, we design TCPLS as a tightly intertwined TLS 1.3/TCP stack that offers features similar to QUIC’s (and more!), and we expect it to be faster than QUIC as well. TCPLS’s intelligence is programmed in user space, and it leverages the kernel’s TCP implementation for fast and reliable transfer. Figure 1 shows an abstract representation of the network stack.

The application can create streams and attach them to TCP connections maintained by the TCPLS stack. By doing so, we can mimic QUIC’s ability to avoid Head-of-Line (HoL) blocking. If the connections share the same IP path, TCPLS would also require a congestion control scheme shared between them for fairness reasons, similar to Multipath TCP (MPTCP). Applications can also multiplex several TCPLS streams over the same TCP connection. Choosing between the two is mostly a trade-off between socket consumption and the expected (but still unknown) impact of HoL blocking at scale.
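
The HoL trade-off can be illustrated with a toy model. The sketch below is not the TCPLS API: the `Session` and `TcpConnection` classes and their methods are hypothetical, and a "stall" simply stands for a TCP connection waiting on a retransmission. It shows that a stall blocks only the streams attached to the stalled connection, while a stream with its own connection keeps flowing.

```python
from dataclasses import dataclass, field


@dataclass
class TcpConnection:
    """Toy stand-in for one TCP connection managed by the TCPLS stack."""
    conn_id: int
    stalled: bool = False  # e.g. waiting for a lost segment to be retransmitted


@dataclass
class Session:
    """Hypothetical TCPLS session that maps each stream to one TCP connection."""
    connections: dict[int, TcpConnection] = field(default_factory=dict)
    stream_to_conn: dict[int, int] = field(default_factory=dict)

    def add_connection(self, conn_id: int) -> None:
        self.connections[conn_id] = TcpConnection(conn_id)

    def attach_stream(self, stream_id: int, conn_id: int) -> None:
        if conn_id not in self.connections:
            raise KeyError(f"unknown TCP connection {conn_id}")
        self.stream_to_conn[stream_id] = conn_id

    def blocked_streams(self) -> set[int]:
        """Streams that cannot deliver data because their own connection stalled."""
        return {
            sid
            for sid, cid in self.stream_to_conn.items()
            if self.connections[cid].stalled
        }


if __name__ == "__main__":
    s = Session()
    s.add_connection(1)
    s.add_connection(2)
    s.attach_stream(10, 1)  # streams 10 and 11 are multiplexed on connection 1
    s.attach_stream(11, 1)
    s.attach_stream(20, 2)  # stream 20 has connection 2 to itself
    s.connections[1].stalled = True
    print(sorted(s.blocked_streams()))  # [10, 11]; stream 20 is unaffected
```

Multiplexing streams 10 and 11 saves a socket but couples their fate; giving stream 20 its own connection costs a socket but isolates it, which is the trade-off described above.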

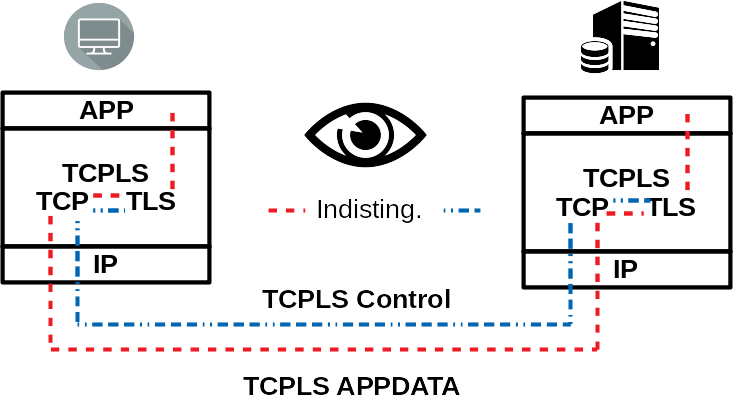

TCPLS also has two kinds of channels: one carries data destined for the application, and the other carries control information for TCPLS features and TCP options. Everything sent after the TCPLS handshake phase is encrypted and bitwise indistinguishable from TLS 1.3 application data records.
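
Why control data can hide among application data follows from TLS 1.3 record framing (RFC 8446, section 5.2): every protected record carries the outer content type application_data (23) and the legacy version 0x0303, while the real content type sits inside the encrypted TLSInnerPlaintext, optionally followed by zero padding. The sketch below builds only the outer header and skips the actual encryption. The inner type value used for TCPLS control records is a hypothetical placeholder, and the 16-byte AEAD tag is an assumption (it holds for AES-GCM and ChaCha20-Poly1305).

```python
import struct

# Outer header fields of every protected TLS 1.3 record (RFC 8446, section 5.2).
OUTER_TYPE_APPLICATION_DATA = 23
LEGACY_RECORD_VERSION = 0x0303
AEAD_TAG_LEN = 16  # assumption: an AEAD such as AES-128-GCM with a 16-byte tag

# The real inner type of application data, plus a HYPOTHETICAL value standing
# in for TCPLS control records (not the value TCPLS actually uses).
INNER_APPLICATION_DATA = 23
INNER_TCPLS_CONTROL_HYPOTHETICAL = 0xFE


def inner_plaintext(content: bytes, inner_type: int, padding: int = 0) -> bytes:
    """TLSInnerPlaintext: content || real content type || zero padding."""
    return content + bytes([inner_type]) + bytes(padding)


def outer_header(inner: bytes) -> bytes:
    """The 5-byte header a middlebox sees once `inner` has been encrypted."""
    ciphertext_len = len(inner) + AEAD_TAG_LEN
    return struct.pack("!BHH", OUTER_TYPE_APPLICATION_DATA,
                       LEGACY_RECORD_VERSION, ciphertext_len)


if __name__ == "__main__":
    data = inner_plaintext(b"x" * 100, INNER_APPLICATION_DATA)
    # Pad the shorter control record so both records have the same length.
    control = inner_plaintext(b"c" * 20, INNER_TCPLS_CONTROL_HYPOTHETICAL,
                              padding=80)
    print(outer_header(data) == outer_header(control))  # True
    print(outer_header(data).hex())                     # 1703030075
```

Once encrypted, the only remaining observable difference between records is their length, which TLS 1.3 padding can hide, as the padded control record above shows.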

Indistinguishability is a core requirement for preventing ossification. Because every TCPLS record looks like TLS 1.3 application data to network elements, they process all of these records through the same code path and therefore cannot interfere with TCPLS extensibility.

But is there still distinguishable TCPLS information?

Yes: in the initial TCPLS handshake and in the TCPLS Join handshake. In the initial handshake, TCPLS adds a simple, empty TLS 1.3 extension that asks, “Hey, do you TCPLS?”

This extension is sent unencrypted with the TLS ClientHello. The server answers “Hey, I do!” and, in its encrypted extensions, returns a ConnID and TCPLS cookies that will later be used in TCPLS Join handshakes. If the server does not answer “Hey, I do!”, TCPLS can fall back to regular TLS/TCP. This is similar to QUIC’s behaviour, except that TCPLS falls back right away, whereas QUIC wastes an additional round trip.
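
The client-side negotiation can be sketched as follows. All names here (the `tcpls` extension label, `ServerReply`, `choose_mode`) are hypothetical, and the real TLS extension codepoint is not given in this post. The sketch only captures the logic described above: an empty extension in the clear, an encrypted ConnID and cookies in the reply, and an immediate fallback on the same TCP connection when the server does not acknowledge TCPLS.

```python
from dataclasses import dataclass, field
from typing import Optional

# HYPOTHETICAL label for the empty "do you TCPLS?" extension; the real
# extension codepoint is not given in the article.
TCPLS_EXTENSION = "tcpls"


@dataclass
class ClientHello:
    extensions: dict[str, bytes] = field(default_factory=dict)


@dataclass
class ServerReply:
    """What the client learns from the server's handshake messages."""
    supports_tcpls: bool                                # "Hey, I do!"
    conn_id: Optional[bytes] = None                     # sent encrypted
    cookies: list[bytes] = field(default_factory=list)  # for later Joins


def build_client_hello() -> ClientHello:
    # The only clear-text TCPLS signal: an extension with an empty body.
    return ClientHello(extensions={TCPLS_EXTENSION: b""})


def choose_mode(reply: ServerReply) -> str:
    """Pick the session mode as soon as the server's reply is processed."""
    if reply.supports_tcpls and reply.conn_id is not None:
        return "tcpls"  # keep conn_id and cookies for TCPLS Join handshakes
    return "tls-over-tcp"  # plain TLS 1.3 on the same TCP connection


if __name__ == "__main__":
    hello = build_client_hello()
    print(hello.extensions)                 # {'tcpls': b''}
    print(choose_mode(ServerReply(False)))  # tls-over-tcp
    print(choose_mode(ServerReply(True, b"\x01" * 16, [b"\x02" * 16])))  # tcpls
```

Because the decision is made while processing the handshake that is already under way, no extra round trip is spent discovering that the server lacks TCPLS support.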

TCPLS does not send anything else with the ClientHello. We think it is bad design to let applications distinguish themselves at the transport level (beyond what the TCP port and some TLS options already allow). Protocol-level distinguishability is the technical reason the Internet has ossified, and it is part of the reason efficient techniques exist for censoring applications and content.

Forcing TCPLS clients to send encrypted and bitwise indistinguishable control data after the handshake helps to prevent these problems.

TCPLS features

After the initial TCPLS handshake, the TCPLS control channel can enable many new features, among them streams, bandwidth aggregation, failover, secure and middlebox-resilient TCP options, and more.

TCPLS streams

TCPLS streams can be viewed as independent cryptographic contexts that use the same TLS key: data is encrypted and decrypted independently in each stream. A stream can be attached to any TCP connection maintained by the TCPLS stack. Through an API, the application manages which IP paths (when several addresses are available) and how many TCP connections are used, and chooses which connection each stream is attached to.
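
Independent contexts that share one AEAD key must never reuse a nonce, since nonce reuse under the same key breaks AEAD security. The sketch below starts from the standard TLS 1.3 per-record nonce (RFC 8446, section 5.3: the 64-bit record sequence number, left-padded and XORed with the write IV) and adds a HYPOTHETICAL per-stream twist: the stream ID is XORed into the four high-order IV bytes, which the sequence number never touches. This is one way to keep per-stream nonce spaces disjoint, not necessarily how TCPLS derives its stream contexts; `stream_iv` and `StreamContext` are illustrative names.

```python
# TLS 1.3 per-record nonce construction (RFC 8446, section 5.3), plus a
# HYPOTHETICAL per-stream IV: the stream ID is XORed into the 4 high-order
# IV bytes, which the 64-bit sequence number never touches. This is not
# necessarily how TCPLS derives its stream contexts.
IV_LEN = 12


def record_nonce(write_iv: bytes, seq: int) -> bytes:
    """Left-pad the 64-bit record sequence number to IV_LEN, XOR with the IV."""
    if not 0 <= seq < 2**64:
        raise ValueError("sequence number must fit in 64 bits")
    padded = seq.to_bytes(IV_LEN, "big")
    return bytes(a ^ b for a, b in zip(write_iv, padded))


def stream_iv(write_iv: bytes, stream_id: int) -> bytes:
    """Hypothetical: mix a 32-bit stream ID into the first 4 IV bytes."""
    mask = stream_id.to_bytes(4, "big") + bytes(IV_LEN - 4)
    return bytes(a ^ b for a, b in zip(write_iv, mask))


class StreamContext:
    """One independent encryption context per stream; all share the TLS key."""

    def __init__(self, write_iv: bytes, stream_id: int) -> None:
        self.iv = stream_iv(write_iv, stream_id)
        self.seq = 0  # each stream numbers its own records from zero

    def next_nonce(self) -> bytes:
        nonce = record_nonce(self.iv, self.seq)
        self.seq += 1
        return nonce


if __name__ == "__main__":
    base_iv = bytes(range(IV_LEN))  # placeholder IV for the demo
    streams = [StreamContext(base_iv, sid) for sid in range(3)]
    nonces = [ctx.next_nonce() for ctx in streams for _ in range(100)]
    print(len(nonces), len(set(nonces)))  # 300 300: no nonce is reused
```

Because each stream keeps its own sequence counter, its records can be decrypted in order on whichever TCP connection carries them, independently of records on other streams.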

Multipath features

We believe future transport protocols should be able to take advantage of multiple IP paths, and there are various ways to leverage multiple paths and IP addresses. With TCPLS, we offer a grey-box architecture that lets developers tune the transport to their needs, in contrast to the application-agnostic designs of MPTCP and QUIC’s connection migration.

Bandwidth aggregation

Using several IP paths can be useful in contexts where each individual path offers low throughput. An application using TCPLS can open streams and attach them to different TCP connections, each of which it has first added to the session through a TCPLS Join handshake. The TCPLS Join handshake is lightweight: it carries fake ClientHello/ServerHello cryptographic material together with genuine TCPLS extensions holding the ConnID and a Cookie received in the initial handshake, which identify the TCPLS session the new TCP connection should be attached to.
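
On the server side, a Join handshake boils down to a lookup: find the session by ConnID, check the Cookie, and attach the new TCP connection. The sketch below models that with hypothetical names (`JoinRegistry`, `TcplsSession`); the 16-byte sizes and the rule that each cookie can be spent only once are assumptions made for illustration, not details taken from the TCPLS specification. Connection endpoints use documentation address ranges.

```python
import secrets
from dataclasses import dataclass, field


@dataclass
class TcplsSession:
    conn_id: bytes
    unused_cookies: set[bytes]
    tcp_connections: list[str] = field(default_factory=list)


class JoinRegistry:
    """Hypothetical server-side table that attaches joining TCP connections
    to the right TCPLS session using the ConnID and a Cookie."""

    def __init__(self) -> None:
        self.sessions: dict[bytes, TcplsSession] = {}

    def new_session(self, first_conn: str, n_cookies: int = 3) -> TcplsSession:
        # Values handed to the client, encrypted, in the initial handshake.
        # The 16-byte sizes are an assumption for this sketch.
        conn_id = secrets.token_bytes(16)
        cookies = {secrets.token_bytes(16) for _ in range(n_cookies)}
        session = TcplsSession(conn_id, cookies, [first_conn])
        self.sessions[conn_id] = session
        return session

    def join(self, conn_id: bytes, cookie: bytes, new_conn: str) -> bool:
        session = self.sessions.get(conn_id)
        if session is None or cookie not in session.unused_cookies:
            return False  # unknown session or spent cookie: reject the Join
        session.unused_cookies.remove(cookie)  # assumption: cookies are single-use
        session.tcp_connections.append(new_conn)
        return True


if __name__ == "__main__":
    registry = JoinRegistry()
    sess = registry.new_session("192.0.2.1:50000")
    cookie = next(iter(sess.unused_cookies))
    print(registry.join(sess.conn_id, cookie, "198.51.100.7:50001"))  # True
    print(registry.join(sess.conn_id, cookie, "203.0.113.9:50002"))   # False
    print(sess.tcp_connections)  # ['192.0.2.1:50000', '198.51.100.7:50001']
```

Once joined, the extra TCP connection is just another connection in the session, and the application can attach streams to it to aggregate bandwidth.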

Multiple paths but no aggregation?

If aggregated mode is off, there is no global ordering. Each stream, however, keeps a local ordering guaranteed by its underlying TCP connection. In this mode, the application must take care to send each application-level object entirely over a single stream. In return, if the streams are attached to different TCP connections, an application-level object stalling on one TCP connection won’t impact the other transfers. We still need to be cautious about fairness, and we expect TCPLS to share a flow control scheme across the different TCP connections in the future.
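
The cost of global ordering shows up in a small reorder buffer. The sketch below assumes, hypothetically, that in aggregated mode each record carries a session-wide sequence number and is released to the application strictly in that order, whichever TCP connection delivered it; the post does not specify how TCPLS implements this. A record that arrived promptly on one connection is held back while an earlier record is stuck on another, which is exactly the cross-connection wait that disappears when aggregation is off.

```python
class GlobalReorderBuffer:
    """Hypothetical aggregated-mode receiver: every record carries a
    session-wide sequence number and is released to the application strictly
    in that order, whichever TCP connection delivered it."""

    def __init__(self) -> None:
        self.next_seq = 0
        self.pending: dict[int, bytes] = {}

    def receive(self, seq: int, payload: bytes) -> list[bytes]:
        if seq >= self.next_seq:
            self.pending.setdefault(seq, payload)  # ignore duplicates
        released = []
        while self.next_seq in self.pending:
            released.append(self.pending.pop(self.next_seq))
            self.next_seq += 1
        return released


if __name__ == "__main__":
    buf = GlobalReorderBuffer()
    print(buf.receive(0, b"a"))  # [b'a']        arrives on connection A
    print(buf.receive(2, b"c"))  # []            connection A, but 1 is missing
    print(buf.receive(1, b"b"))  # [b'b', b'c']  connection B finally delivers
```

Without aggregation, each stream relies only on its own TCP connection's ordering, so no such buffer is needed, at the price of keeping each application-level object on a single stream.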

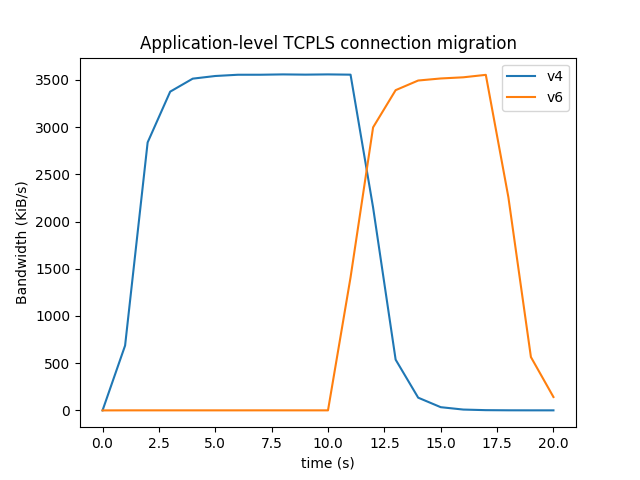

Application-level connection migration

Applications using TCPLS can temporarily use two available paths at once to migrate a connection from one path to the other. A flexible API design lets applications change networks without stalling the application’s transfer.
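
One way an application could drive such a migration is make-before-break: start writing on a stream attached to the new path right away, and close the old path only once the data already queued on it has been acknowledged. The sketch below models that policy with hypothetical names (`Path`, `MigratingSender`, `on_old_path_acked`); it illustrates the idea rather than the TCPLS API.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Path:
    name: str
    open: bool = True


@dataclass
class MigratingSender:
    """Hypothetical make-before-break migration driven by the application:
    new writes switch to a stream on the new path immediately, and the old
    path is closed only after the data already queued on it is acknowledged."""
    active: Path
    old: Optional[Path] = None
    old_backlog: int = 0  # bytes still unacknowledged on the old path
    log: list[str] = field(default_factory=list)

    def start_migration(self, new_path: Path, queued_on_old: int) -> None:
        self.old, self.old_backlog = self.active, queued_on_old
        self.active = new_path  # both paths are in use from here on

    def send(self, nbytes: int) -> None:
        self.log.append(f"{nbytes} B on {self.active.name}")

    def on_old_path_acked(self, nbytes: int) -> None:
        if self.old is None:
            return
        self.old_backlog = max(0, self.old_backlog - nbytes)
        if self.old_backlog == 0:
            self.old.open = False  # old path drained: safe to close it
            self.log.append(f"closed {self.old.name}")
            self.old = None


if __name__ == "__main__":
    wifi, lte = Path("wifi"), Path("lte")
    sender = MigratingSender(active=wifi)
    sender.start_migration(lte, queued_on_old=3000)
    sender.send(500)                # goes out on lte without waiting
    sender.on_old_path_acked(3000)  # wifi backlog drained, so wifi closes
    print(sender.log)               # ['500 B on lte', 'closed wifi']
    print(wifi.open, lte.open)      # False True
```

Since new data never waits for the old path to drain, the transfer does not stall during the switch.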

Failover

In QUIC, Connection Migration is designed to keep the QUIC session reliable when a network path is lost, which is inherently frequent on mobile devices. The Connection Migration process runs a challenge-response exchange on the new path using a QUIC connection ID, and moves the QUIC traffic over to the new path once the path is confirmed.
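
The challenge-response step is QUIC path validation (RFC 9000, section 8.2): the endpoint sends a PATH_CHALLENGE frame carrying 8 unpredictable bytes on the new path, and the path is validated only when a PATH_RESPONSE frame echoing those exact bytes comes back; a response received on any path validates the path on which the challenge was sent. The minimal sketch below models only that bookkeeping. It does not model connection IDs or frame encoding, and the path labels are hypothetical.

```python
import secrets


class PathValidator:
    """Sketch of QUIC path validation (RFC 9000, section 8.2)."""

    def __init__(self) -> None:
        self.pending: dict[bytes, str] = {}  # challenge data -> path probed
        self.validated: set[str] = set()

    def send_challenge(self, path: str) -> bytes:
        data = secrets.token_bytes(8)  # PATH_CHALLENGE carries 8 unpredictable bytes
        self.pending[data] = path
        return data

    def on_path_response(self, data: bytes) -> bool:
        # The response may arrive on any path; it validates the path
        # on which the matching challenge was sent.
        path = self.pending.pop(data, None)
        if path is None:
            return False  # unknown or replayed response
        self.validated.add(path)
        return True


if __name__ == "__main__":
    v = PathValidator()
    challenge = v.send_challenge("lte")
    forged = bytes(b ^ 0xFF for b in challenge)
    print(v.on_path_response(forged))     # False
    print(v.on_path_response(challenge))  # True
    print(v.validated)                    # {'lte'}
```

Only after this check succeeds does QUIC move its traffic to the new path.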

In TCPLS, we call this Connection Migration capability ‘Failover’ to avoid any confusion with Application-level Connection Migration. The Failover logic is internal to the TCPLS stack; it exposes an on/off control to the application, as well as some details about the protocol. The Failover protocol was under development when we wrote the HotNETs paper.

We are actively improving the TCPLS implementation described in our HotNETs paper. Feel free to contact us if you are interested in more recent information. We will post updates on the project here.

Dr Florentin Rochet is a Privacy, Security and Network Researcher.

The views expressed by the authors of this blog are their own and do not necessarily reflect the views of APNIC. Please note a Code of Conduct applies to this blog.