Online multiplayer gaming is growing rapidly, with free-to-play titles like Fortnite and League of Legends each generating over a billion dollars in revenue via in-game purchases. However, game-play experience is easily degraded by network conditions, and “lag” drives frustrated gamers to complain on forums and churn between ISPs.

This post takes a deeper look at the network behaviour of a dozen of the most popular multiplayer games, and finds that: (a) game-play flows are (surprisingly) thin, often no more than a few hundred Kbps; and (b) gaming experience is (unsurprisingly) impaired even by transient lag spikes from cross-traffic such as video streaming.

My research colleagues and I argue that enhancing gaming experience by increasing bandwidth is likely to be both expensive and ineffective, and that ISPs will have little option but to prioritize game-play flows, much as they already do for voice. We recommend that the community revise its notions of network neutrality to accommodate openly declared traffic prioritization policies, rather than universally mandating ‘dumb pipes’ that are agnostic to application experience.

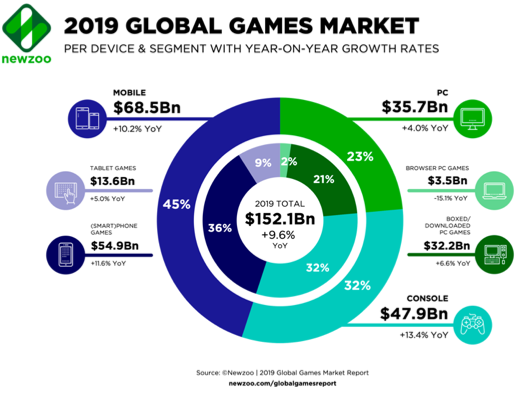

The gaming market

Online gaming is no longer just a niche activity: there are about 1.1 billion active gamers worldwide, who collectively spent USD 137.9 billion on games in 2018. Many games are free-to-play and generate revenue from in-game purchases; indeed, 69% of Fortnite players spent an average of USD 85 each within the game, helping it gross a whopping USD 2.4 billion last year. Gamers and game providers therefore have strong incentives to ensure a superlative gaming experience. However, network conditions are beyond their control, and even temporary latency spikes (aka ‘lag’) can be literally fatal in the game. Players on a ‘laggy’ network may be disconnected and temporarily barred from the game (for example, for 30 minutes in CS:GO) so as not to spoil the experience for others, further exacerbating their frustration, which is often vented at the ISP.

Game behaviour and experience

To understand the network load imposed by gaming, and conversely the impact of network conditions on gaming experience, we first collected and analysed game-play traces of a dozen of the most popular online games today, spanning the shooting (Fortnite, CS:GO, PUBG, CoD:BO4, Apex Legends, Overwatch, Team Fortress 2), strategy (League of Legends, Starcraft, DotA2), and sports (FIFA18, Rocket League) genres.

The general pattern of game behaviour is very similar across titles: the game typically starts in a ‘lobby’ state where users can access their collectibles, player stats, game settings, and social network. When the user decides to play, a ‘matchmaking’ service is contacted to group waiting players, and a server is selected on which the online game begins.

The ‘gameplay’ flow itself is typically a single UDP flow, which carries a periodic ‘tick’ of packets between the game server and each player. The tick-rate varies across games, for example 64 packets/sec for CS:GO, 32 for DotA2/FIFA, and 30-40 for Apex Legends. The data rate of an actual game-play flow is therefore quite low, typically in the range of 200-400 Kbps.
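As a rough sanity check on these rates, a flow's bit rate is its tick rate multiplied by the bits carried per tick. The post does not give packet sizes, so the sizes below are hypothetical, Apex Legends uses 35 Hz as a midpoint of its 30-40 range, and the sketch assumes one packet per tick in each direction (whether the quoted 200-400 Kbps is one-way or two-way is not stated):

```python
def flow_rate_kbps(tick_rate_hz: float, packet_bytes: int, directions: int = 2) -> float:
    """Approximate bit rate of a tick-driven game-play flow, in Kbps.

    Assumes one packet of `packet_bytes` per tick in each direction; real
    packet sizes vary by game and by what is happening in the match.
    """
    return tick_rate_hz * packet_bytes * 8 * directions / 1000


# Tick rates from the measurements above; packet sizes are hypothetical.
for game, tick_hz, size in [("CS:GO", 64, 300), ("DotA2", 32, 500), ("Apex Legends", 35, 400)]:
    print(f"{game}: ~{flow_rate_kbps(tick_hz, size):.0f} Kbps")
```

With these assumed sizes the results land inside the reported 200-400 Kbps range, which illustrates how a steady tick of small packets stays thin even at 64 Hz.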

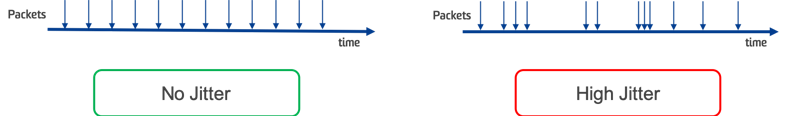

Gaming experience is degraded when the steady ‘tick’ of packets to/from the gaming server is displaced by other network traffic. As shown in the figure above, this causes ‘jitter’, which can be quantified as the average displacement of a packet from its ideal position.
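The jitter metric described above can be computed directly from packet arrival times. A minimal sketch, assuming no packet loss or reordering (so the k-th arrival belongs to the k-th tick) and anchoring the ideal tick grid at the first packet; the post does not say how the grid is aligned, so a real measurement tool might fit it differently:

```python
def mean_jitter_ms(arrivals_s: list[float], tick_rate_hz: float) -> float:
    """Average displacement (ms) of each packet from its ideal tick slot.

    Assumptions: the k-th arrival corresponds to the k-th tick (no loss or
    reordering), and the ideal grid starts at the first packet's arrival.
    """
    period = 1.0 / tick_rate_hz
    t0 = arrivals_s[0]
    displacements = [abs(t - (t0 + k * period)) for k, t in enumerate(arrivals_s)]
    return 1000 * sum(displacements) / len(displacements)


# A 64 Hz stream in which the third packet arrives 100 ms late.
arrivals = [0.0, 1 / 64, 2 / 64 + 0.100, 3 / 64]
print(f"{mean_jitter_ms(arrivals, 64):.1f} ms")
```

A single late packet is averaged over the whole window here; a measurement tool would typically compute this over a sliding window so that short spikes remain visible.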

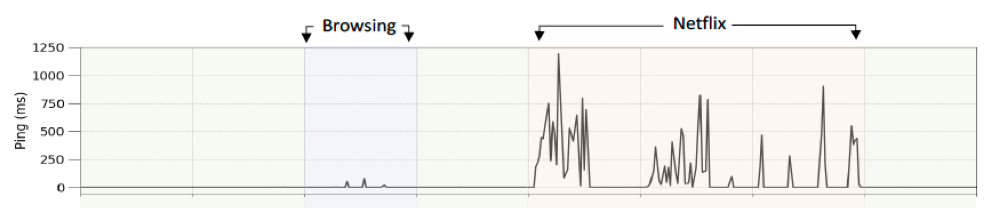

We conducted experiments in which browsing and Netflix streams were started alongside a gaming stream in a representative house with a 10 Mbps broadband connection. As shown in the figure above, game-play jitter shows small spikes (a few tens of milliseconds) during browsing, but the spikes reach hundreds of milliseconds and beyond when Netflix begins loading its video buffers and each time it fetches a video chunk. This makes the game unplayable, manifesting as gunshots that fail to damage the opponent and aerial landings that are displaced from their target, causing extreme user frustration.
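The jump from tens to hundreds of milliseconds is consistent with simple FIFO queueing at the bottleneck: once a burst of video data sits in the access-link buffer, every game packet behind it waits for the burst to drain. A minimal sketch, assuming a single FIFO queue at the 10 Mbps link, a hypothetical 500 KB video chunk that lands in the queue all at once (a deep buffer with no drops), and a hypothetical 300-byte game packet at a CS:GO-like 64 Hz tick:

```python
LINK_BPS = 10e6          # 10 Mbps access link, as in the experiment
TICK_HZ = 64             # CS:GO-like tick rate
GAME_PKT = 300           # hypothetical game packet size (bytes)
MTU = 1500               # video data arrives as full-size packets
BURST_BYTES = 500_000    # hypothetical video chunk queued all at once


def simulate(duration_s=1.0, burst_at_s=0.2):
    """Return the queueing + serialisation delay (s) of each game packet
    through a single FIFO queue shared with one video burst."""
    game = [(k / TICK_HZ, GAME_PKT, "game") for k in range(int(duration_s * TICK_HZ))]
    video = [(burst_at_s, MTU, "video")] * (BURST_BYTES // MTU)
    link_free = 0.0      # time at which the link finishes its current backlog
    game_delays = []
    for arrival, size, kind in sorted(game + video, key=lambda p: p[0]):
        start = max(arrival, link_free)             # wait behind everything queued earlier
        link_free = start + size * 8 / LINK_BPS     # transmit at the link rate
        if kind == "game":
            game_delays.append(link_free - arrival)
    return game_delays


delays = simulate()
print(f"worst game-packet delay: {1000 * max(delays):.0f} ms")
```

Under these assumptions the worst-affected game packet waits roughly 400 ms, essentially the time needed to serialise 500 KB at 10 Mbps. Real TCP dynamics, buffer sizes, and chunk sizes will shift the exact figure.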

The natural reaction to latency spikes, from users and content providers alike, is to demand fatter network pipes. There are two problems with this approach. First, bandwidth is not free: on the Australian NBN, for example, backhaul (Connectivity Virtual Circuit, or CVC) bandwidth costs AUD 8 per Mbps, and this additional cost has to be largely borne by the ISP rather than by content providers or consumers.
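To put the CVC price in perspective, the added backhaul charge scales linearly with the number of subscribers and with the extra capacity provisioned for each. A back-of-the-envelope sketch for a hypothetical ISP with 50,000 subscribers, each given an extra 0.5 Mbps of CVC (the post does not state the charging period, so the result is per whatever period the AUD 8 applies to):

```python
CVC_AUD_PER_MBPS = 8  # CVC price quoted above


def extra_cvc_cost_aud(subscribers: int, extra_mbps_per_sub: float) -> float:
    """Added CVC charge for provisioning extra backhaul for every subscriber."""
    return subscribers * extra_mbps_per_sub * CVC_AUD_PER_MBPS


# Hypothetical ISP size and upgrade amount.
print(extra_cvc_cost_aud(subscribers=50_000, extra_mbps_per_sub=0.5))
```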

The second, and even more concerning, problem is that there is no way to ensure that the additional bandwidth is used by the intended application (for example, gaming), since in a free-for-all neutral network any application (such as adaptive bit-rate video) can grab it. We therefore believe that the most cost-effective way to improve gaming experience is for the network to prioritize game-play flows, especially since they are very similar to voice in terms of data rates and value to the user.
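The post does not prescribe a particular prioritization mechanism. One common option is a strict-priority scheduler; the sketch below is a simplified non-preemptive model using the same hypothetical 10 Mbps link, 64 Hz stream of 300-byte game packets, and 500 KB video burst, in which a queued game packet is always sent before any queued video packet. In a single FIFO queue that burst would hold game packets for roughly its drain time (500 KB at 10 Mbps, about 400 ms); under strict priority a game packet waits at most for the one video packet already on the wire (1.2 ms for 1,500 bytes at 10 Mbps).

```python
import heapq

LINK_BPS = 10e6          # 10 Mbps access link
TICK_HZ = 64             # CS:GO-like tick rate
GAME_PKT = 300           # hypothetical game packet size (bytes)
MTU = 1500               # video data arrives as full-size packets
BURST_BYTES = 500_000    # hypothetical video chunk queued all at once


def make_traffic(duration_s=1.0, burst_at_s=0.2):
    """(arrival_s, size_bytes, kind) tuples sorted by arrival time."""
    game = [(k / TICK_HZ, GAME_PKT, "game") for k in range(int(duration_s * TICK_HZ))]
    video = [(burst_at_s, MTU, "video")] * (BURST_BYTES // MTU)
    return sorted(game + video, key=lambda p: p[0])


def strict_priority_game_delays(pkts):
    """Non-preemptive strict priority: whenever the link frees up, send the
    oldest queued game packet if any, otherwise the oldest video packet."""
    queue, i, now, game_delays = [], 0, 0.0, []
    while i < len(pkts) or queue:
        if not queue:
            now = max(now, pkts[i][0])              # idle link: jump to next arrival
        while i < len(pkts) and pkts[i][0] <= now:  # enqueue everything that has arrived
            arrival, size, kind = pkts[i]
            prio = 0 if kind == "game" else 1       # 0 = highest priority
            heapq.heappush(queue, (prio, arrival, i, size, kind))
            i += 1
        _, arrival, _, size, kind = heapq.heappop(queue)
        now += size * 8 / LINK_BPS                  # transmit the chosen packet
        if kind == "game":
            game_delays.append(now - arrival)
    return game_delays


delays = strict_priority_game_delays(make_traffic())
print(f"worst game-packet delay: {1000 * max(delays):.1f} ms")
```

Strict priority can starve lower classes if high-priority load is heavy, but because game-play flows are only a few hundred Kbps, the video here still gets nearly the whole link.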

Prioritization raises the natural suspicion (from consumers, content providers, and regulators) that the network operator will apply arbitrary policies that may be detrimental to public interest. We therefore advocate that operators be required to openly state their prioritization policies (rather than being mandated to be neutral).

We have proposed a framework in our paper, OpenTD: Open Traffic Differentiation in a Post-Neutral World [PDF], which allows traffic differentiation to be done in an open, flexible, and rigorous manner. Operators are required to openly declare the number of priority classes supported in their network and the relative bandwidth given to each class at different levels of resourcing (we propose utility functions as a mathematically rigorous construct for this), along with the manner in which traffic streams are mapped to priority classes. Requiring the operator to log and report the offered load to each class enables a regulator to verify that the operator is adhering to its stated policy.
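To make the idea of a declared policy concrete, here is a minimal sketch of what an operator's published policy and per-class load log might look like. Everything in it is hypothetical: the class names, weights, and flow-to-class mapping are invented for illustration, and the allocation uses a simple weighted max-min fair share as a stand-in for the utility functions the OpenTD paper actually proposes.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class PriorityClass:
    name: str
    weight: float  # share under contention (stand-in for a utility function)


# Hypothetical declared policy: classes, weights, and flow-to-class mapping.
CLASSES = {"interactive": PriorityClass("interactive", 4.0),
           "default": PriorityClass("default", 1.0)}
MAPPING = {"game": "interactive", "voice": "interactive"}  # anything else -> default

offered_log = defaultdict(float)  # per-class offered load, reportable to a regulator


def classify(app: str) -> str:
    return MAPPING.get(app, "default")


def offer(flows):
    """flows: list of (app, mbps). Logs offered load per class; returns demand."""
    demand = defaultdict(float)
    for app, mbps in flows:
        cls = classify(app)
        demand[cls] += mbps
        offered_log[cls] += mbps
    return dict(demand)


def allocate(capacity_mbps, demand_mbps):
    """Weighted max-min fair split: capacity a class cannot use is
    redistributed to still-unsatisfied classes in proportion to weight."""
    alloc = {c: 0.0 for c in demand_mbps}
    active = {c for c, d in demand_mbps.items() if d > 0}
    remaining = capacity_mbps
    while active and remaining > 1e-9:
        total_w = sum(CLASSES[c].weight for c in active)
        share = {c: remaining * CLASSES[c].weight / total_w for c in active}
        remaining = 0.0
        for c in list(active):
            give = min(share[c], demand_mbps[c] - alloc[c])
            alloc[c] += give
            remaining += share[c] - give          # unused share goes back to the pool
            if alloc[c] >= demand_mbps[c] - 1e-9:
                active.discard(c)                 # class fully satisfied
    return alloc


demand = offer([("game", 0.3), ("video", 25.0), ("web", 2.0)])
print(allocate(10.0, demand))
```

Because the policy is plain data, a regulator could compare the published weights and mapping against the reported `offered_log` to check that each class received what the policy promises.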

Contributors: Himal Kumar, Hassan Habibi Gharakheili, Sharat Madanapalli and Maheesha Perer.

Vijay Sivaraman received a PhD in Computer Networking from UCLA and worked at an early-stage start-up in Silicon Valley for three years building optical switch-routers. He is now a Professor at UNSW, as well as co-founder & CEO of Canopus Networks. He is passionate about translating academic research to commercial products, and runs a community meetup group to promote the use of Software Defined Networking.

The views expressed by the authors of this blog are their own and do not necessarily reflect the views of APNIC. Please note a Code of Conduct applies to this blog.