In 2009, Google launched its Public DNS service, with its characteristic IP address 8.8.8.8. Since then, this service has grown to be the largest and most well-known DNS service in existence.

Due to the centralization caused by public DNS services, large content delivery networks (CDNs) such as Akamai can no longer rely on the source IP of DNS queries to locate the end users requesting their content. As a result, they can no longer provide geo-based redirection appropriate for those users.

To fix this problem, the EDNS0 Client Subnet extension (RFC 7871) was proposed. It allows (public) resolvers to include information about the client requesting recursion in the DNS queries they send to authoritative DNS servers. For example, if you send a query to Google Public DNS for the ‘A record’ of example-domain.com, Google will ‘anonymize’ your IP address to the /24 level (in the case of IPv4) and send it along to example-domain.com’s nameservers.
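To make the ‘anonymization’ step concrete, here is a minimal sketch of how a resolver could build the Client Subnet option it attaches to an upstream query, following the RFC 7871 wire format (option code 8, then FAMILY, SOURCE PREFIX-LENGTH, SCOPE PREFIX-LENGTH and the truncated ADDRESS). The function name `ecs_option` and the default prefixes (/24 for IPv4, /48 for IPv6) are illustrative assumptions, not Google’s actual implementation.

```python
import ipaddress
import struct
from typing import Optional

ECS_OPTION_CODE = 8  # EDNS0 option code for Client Subnet (RFC 7871)


def ecs_option(client_ip: str, source_prefix: Optional[int] = None) -> bytes:
    """Build an EDNS0 Client Subnet option (code, length, payload).

    Assumption: /24 for IPv4 and /48 for IPv6 unless told otherwise.
    """
    addr = ipaddress.ip_address(client_ip)
    family = 1 if addr.version == 4 else 2  # 1 = IPv4, 2 = IPv6
    if source_prefix is None:
        source_prefix = 24 if addr.version == 4 else 48
    # Zero every bit beyond the source prefix: this is the "anonymization".
    network = ipaddress.ip_network(f"{addr}/{source_prefix}", strict=False)
    # Only ceil(prefix / 8) address octets are carried in the option.
    n_octets = (source_prefix + 7) // 8
    address = network.network_address.packed[:n_octets]
    scope_prefix = 0  # SCOPE PREFIX-LENGTH is 0 in queries
    payload = struct.pack("!HBB", family, source_prefix, scope_prefix) + address
    return struct.pack("!HH", ECS_OPTION_CODE, len(payload)) + payload
```

For a client at 192.0.2.123, only the three octets of 192.0.2.0/24 are sent upstream; the last octet never leaves the resolver.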

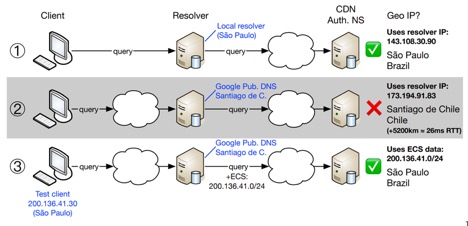

Figure 1 shows an example of a client in São Paulo, Brazil. In situation 1, the client uses a local resolver and is accurately geolocated to São Paulo. In situation 2, the client uses Google Public DNS, but EDNS0 Client Subnet is not used; because Google’s nearest Public DNS PoP is in Santiago de Chile, geolocation fails. Finally, situation 3 shows that geolocation works again when EDNS0 Client Subnet is used.

Figure 1 — Three examples of clients using different resolvers.

Over a period of 2.5 years, we collected more than 3.5 billion queries from Google containing the EDNS0 Client Subnet extension, received at a large authoritative DNS server in the Netherlands. In this post, we look at the highlights of what we can learn from this dataset.

Out-of-country answers

Since Google uses anycast for its DNS service, end users typically cannot easily see which location their request is served from. From our perspective, however, we can see both the IP address Google uses to reach our authoritative nameserver and the end user’s subnet (via the EDNS0 Client Subnet).

Google publishes a list of IP addresses that they use for resolving queries on 8.8.8.8, and what geographic location those IPs map to.

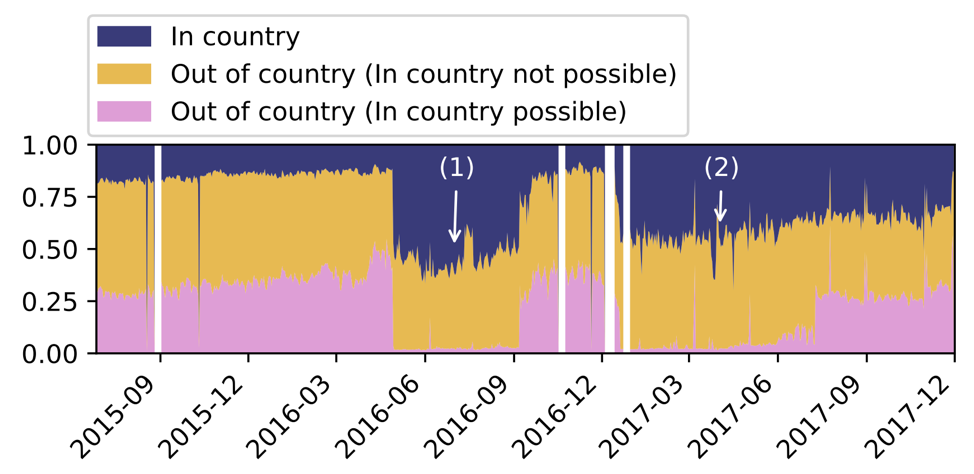

Figure 2 shows the queries from Google divided into three categories:

- Blue: queries served from the same country as the end-user.

- Yellow: queries served from outside the end-user’s country, where Google has no active location in that country.

- Pink: queries served from outside the end-user’s country, even though Google does have an active location in that country.

Figure 2 — Number of queries from Google divided into three categories: blue = in country; yellow = out of country; pink = out of country where Google has an active location.
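The three-way split in Figure 2 can be sketched as follows. The resolver-to-location table stands in for Google’s published list and uses hypothetical documentation prefixes rather than real Google ranges; the client’s country is assumed to come from geolocating the EDNS0 Client Subnet. The sketch also simplifies ‘active location’ to a static table, whereas the real list changes over time.

```python
import ipaddress
from collections import Counter
from typing import Dict, Iterable, Tuple

# Hypothetical excerpt of a resolver-IP -> country table (documentation
# prefixes only; NOT Google's real ranges).
RESOLVER_SITES: Dict[str, str] = {
    "198.51.100.0/24": "CL",  # e.g. a site in Santiago de Chile
    "203.0.113.0/24": "BR",   # e.g. a site in São Paulo
}


def resolver_country(resolver_ip: str) -> str:
    """Map the source IP that reached our nameserver to its site country."""
    ip = ipaddress.ip_address(resolver_ip)
    for prefix, country in RESOLVER_SITES.items():
        if ip in ipaddress.ip_network(prefix):
            return country
    raise KeyError(resolver_ip)


def classify(resolver_ip: str, client_country: str) -> str:
    served_from = resolver_country(resolver_ip)
    if served_from == client_country:
        return "blue"    # served from the end-user's own country
    if client_country in set(RESOLVER_SITES.values()):
        return "pink"    # out of country, although a site exists there
    return "yellow"      # out of country, and no site in that country


def tally(queries: Iterable[Tuple[str, str]]) -> Counter:
    """Count (resolver_ip, client_country) pairs per category."""
    return Counter(classify(r, c) for r, c in queries)
```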

It’s clear that a non-negligible fraction of queries is unnecessarily served from a location outside the end-user’s economy. Moreover, the end-user is likely unaware of this and might assume that, since there is a Google DNS instance in the same economy, they are being served from it.

Further investigation shows that queries are quite often served from countries that are far away. During periods 1 and 2 (marked in Figure 2), Google temporarily performed better in terms of ‘query locality’.
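One way to quantify ‘far away’ is the great-circle (haversine) distance between the resolver site and the client’s geolocated position. The post does not say which distance measure was used, so this is an assumption, and the city coordinates below are approximate values chosen for illustration.

```python
import math

EARTH_RADIUS_KM = 6371.0  # mean Earth radius


def great_circle_km(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Haversine distance in kilometres between two (lat, lon) points in degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = math.radians(lat2 - lat1)
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(a))


# Approximate coordinates (assumption, for illustration only)
SAO_PAULO = (-23.55, -46.63)
SANTIAGO = (-33.45, -70.67)
```

For the Figure 1 scenario, `great_circle_km(*SAO_PAULO, *SANTIAGO)` comes out at roughly 2,600 km, a detour a São Paulo user would not normally notice.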

Outages drive adoption

Given that DNS is probably a fairly foreign concept to the average user, we wondered what could drive users to configure Google Public DNS. We suspected that ISP DNS outages might be one such driver, and set out to verify this.

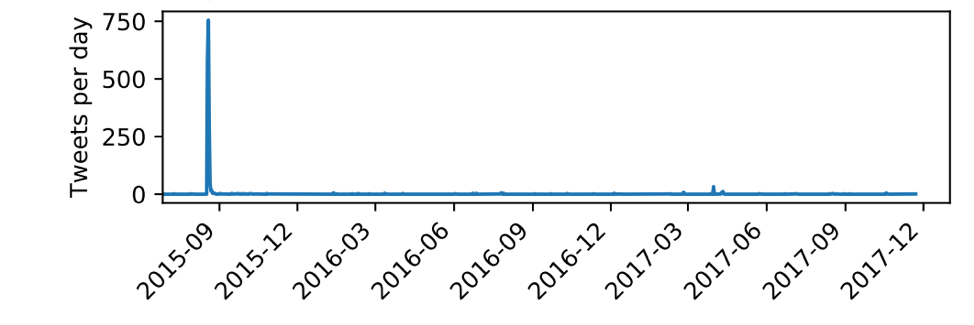

Back in 2015, Ziggo (one of the larger ISPs in the Netherlands) suffered a major DNS outage, caused by a DDoS attack, that lasted several days and prompted a lot of discussion on Twitter (Figure 3).

Figure 3 — Tweets per day mentioning DNS and Ziggo.

This is what happens when an ISP’s DNS resolvers go down…. re: Ziggo pic.twitter.com/RgbJSYbBR6

— Sajal Kayan (@sajal) August 18, 2015

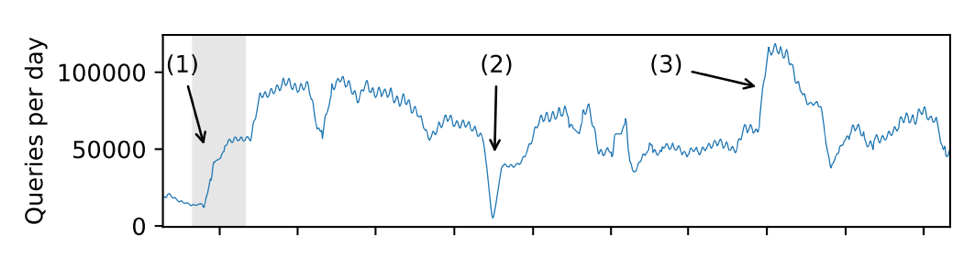

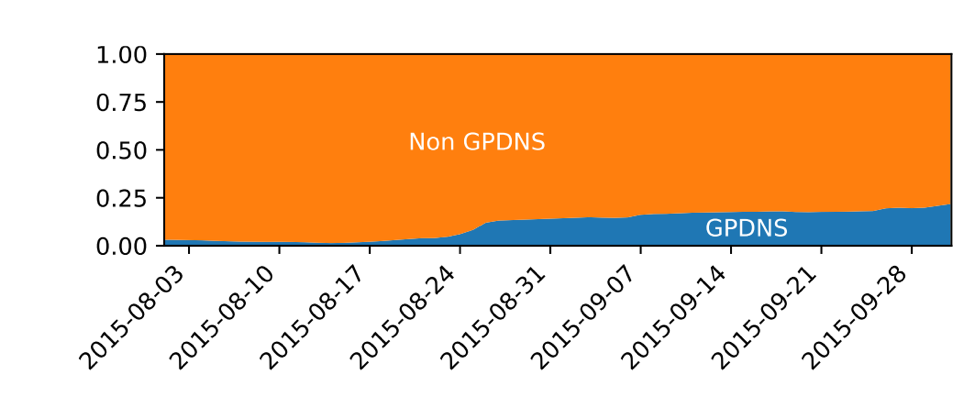

Figure 4 shows the number of queries from Ziggo end-users via Google rising rapidly shortly after the incident started (1). This was confirmed by the fraction of ‘Google DNS queries’ versus ‘Ziggo DNS queries’ (Figure 5).

Tip: All people in the Netherlands using ziggo: set your DNS to 8.8.8.8 and internet works again. #ghc_sec

— #GHC_sec Central (@Global_hackers) August 19, 2015

Interestingly, the number of queries per day never returned to its original level, apart from a dip caused by an outage in our data collection (Figure 4, (2)). Note that the increase marked (3) in Figure 4 was a DNS flood performed by a single user.

Figure 4 — Queries moving average over -5 and +5 days.

Figure 5 — Total queries (including non-Google) from Ziggo.
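The smoothing in Figure 4 and the share plotted in Figure 5 can be reproduced roughly as below. This is a sketch under assumptions: the function names are hypothetical, days whose 11-day window (5 before, 5 after) runs off either end of the series are left as `None` rather than averaged over a shorter window, and a day with no Ziggo queries at all gets a share of 0.

```python
from typing import List, Optional, Sequence


def centered_moving_average(daily: Sequence[float], half_window: int = 5) -> List[Optional[float]]:
    """Average over days t-half_window .. t+half_window (11 days by default).

    Days without a complete window are returned as None.
    """
    out: List[Optional[float]] = []
    for t in range(len(daily)):
        lo, hi = t - half_window, t + half_window
        if lo < 0 or hi >= len(daily):
            out.append(None)
        else:
            window = daily[lo:hi + 1]
            out.append(sum(window) / len(window))
    return out


def google_share(google_queries: Sequence[int], total_queries: Sequence[int]) -> List[float]:
    """Per-day fraction of a network's queries that arrive via Google Public DNS."""
    return [g / t if t else 0.0 for g, t in zip(google_queries, total_queries)]
```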

Anycast does not always behave as we think it should

Our results show that anycast does not always behave as one might expect, and users concerned about their privacy might want to think twice about using a public DNS resolver that relies on it: their packets may traverse far more of the Internet than they would expect.

We also see that users are willing to configure a different DNS resolver if their current one is unreliable, and tend not to switch back.

For a more in-depth analysis, we recommend that you take a look at our paper [PDF 780 KB]. Our dataset is also publicly available.

Wouter de Vries is a PhD student in the Design and Analysis of Communication Systems (DACS) group at the University of Twente.

The views expressed by the authors of this blog are their own and do not necessarily reflect the views of APNIC. Please note a Code of Conduct applies to this blog.

1. An additional reason for 8.8.8.8 adoption is that some residential gateways (particularly ADSL modems) are shipped with 8.8.8.8 configured as their default DNS resolver and they ignore the resolvers given to them via the ISP’s DHCP.

2. A particular concern with 8.8.8.8 is that AUTHs must apply to Google to receive the EDNS0 payload. By default, they do not send EDNS0 to auth servers.

Hi MarkD,

We did attempt to find proof of your point 1, as we also suspect this to be the case, but we weren’t able to find any; admittedly, the data set is a pretty big haystack.

Concerning point 2, Google will automatically detect and enable EDNS0 Client Subnet for your authoritative server once you start supporting it; this may take a few hours to fully ramp up. A figure in the original paper shows a timeline of this.

I have seen modems with 8.8.8.8 pre-configured, but as you suggest, finding them at the moment is non-trivial and quantifying their coverage is hard.

But something is going on, as in my experience around 10% of all auth queries originate from 8.8.8.8, which is way more than one would expect from a few savvy individuals responding to ISP issues.

As for Google automatically doing EDNS0 probes, that is news to me and a welcome change. Initially they were concerned that sending unknown EDNS options carried a non-zero risk of triggering bugs in auth servers, hence their opt-in approach.

A big reason to use 8.8.8.8 in the US is that your ISP is now allowed to sell your browsing data. The law was changed under Trump.

I trust Google to not sell this data.

Plus, ISPs in the US will inject ads for sites that do not exist, and Google does not do this.

An interesting MS Thesis on EDNS Client Subnet by Fajar Ujian Sudrajat at Case Western Reserve University not cited in the linked paper can be found at http://rave.ohiolink.edu/etdc/view?acc_num=case14870349076046

Also, although the Ziggo case makes an interesting argument, the effect is not nearly as permanent as it is made out to be. APNIC data for that AS (https://stats.labs.apnic.net/dnssec/AS9143) shows a far more complex (and less significant) effect, with use of Google Public DNS jumping up in the fall of 2015 but dropping back down a few months into 2016 and continuing to oscillate quite a bit.

More interestingly, the per-AS breakdown for the use of Google in the Netherlands (one of the highest in Europe) shows that a great deal of that comes from hosting sites and data centers, many of the largest of which send anywhere from 50% to almost 100% of their traffic through Google Public DNS.

This is particularly notable given that the APNIC measurement technique uses micro-advertisements that might be expected to provide better coverage of homes and offices than of data centers (which one would not generally expect to attempt to display ad content on web pages).

Hi Alexander,

Thanks for pointing out the thesis! We did not exhaustively cite every publication that touches ECS because it’s not a survey, and space for references was very limited.

Concerning Ziggo, it does not really surprise me that different datasets show different results. As you say, both the distribution of clients and the sample sizes differ between our data and what APNIC sees.

As I understand it, there are only ~92k samples for Ziggo over the entire measurement period, with 4.25% using Google Public DNS, resulting in a very small sample (roughly 4k). As you can see, we record somewhere between 20k and 100k queries per *day* from the Ziggo AS using Google Public DNS.

Everything here is suspect after you used example-domain.com, which is on Yahoo. There’s RFC 2606 for this.

Hi Darkdot,

You are of course right, we should’ve used the domain names intended for documentation. We will take care to do this in the future, and thanks for pointing this out!

Hopefully you still find the rest of the blog interesting.

Hi Jack,

Privacy is always a concern, and unfortunately it is very hard to be sure that no one is getting their hands on your data somewhere between you typing in a domain name and the web page showing up on your screen.

Fairly recently some public resolvers (at least Google and Cloudflare that I know of, but certainly there will be others) started offering DNS-over-TLS and/or DNS-over-HTTPS services, which may offer greater privacy. It might be interesting for you to have a look at that!

Isn’t 8.8.8.8 a *caching* resolver? If so, then CDNs aren’t going to get EDNS0 information for every client, only for the ones which can’t be served from Google’s DNS cache.

Google’s cache keys for EDNS0 queries include the client subnet, so cached answers are only reused if the querying client comes from the same subnet.

This means that CDNs do get EDNS0 information for every subnet.
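A toy model of what this means for the authoritative side: if the cache key includes the client subnet, two clients in the same /24 share one upstream query, while a client in a different /24 triggers a fresh one. This is a simplified, hypothetical sketch (no TTLs, fixed /24 and /48 keys); RFC 7871 actually has resolvers cache according to the SCOPE PREFIX-LENGTH returned by the authoritative server, which may differ from the subnet sent.

```python
import ipaddress
from typing import Callable, Dict, Tuple

CacheKey = Tuple[str, str, str]  # (qname, qtype, client subnet)


class SubnetAwareCache:
    """Hypothetical resolver cache keyed on the client subnet (no TTL handling)."""

    def __init__(self, resolve_upstream: Callable[[str, str, str], str]):
        self._store: Dict[CacheKey, str] = {}
        self._resolve = resolve_upstream
        self.upstream_queries = 0  # how many queries reach the authoritative server

    @staticmethod
    def client_subnet(client_ip: str) -> str:
        addr = ipaddress.ip_address(client_ip)
        prefix = 24 if addr.version == 4 else 48
        return str(ipaddress.ip_network(f"{addr}/{prefix}", strict=False))

    def lookup(self, qname: str, qtype: str, client_ip: str) -> str:
        key = (qname.lower(), qtype, self.client_subnet(client_ip))
        if key not in self._store:
            # Cache miss for this subnet: the authoritative server sees a query
            self.upstream_queries += 1
            self._store[key] = self._resolve(qname, qtype, key[2])
        return self._store[key]
```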

In any event, with respect to DNS-over-TLS, the privacy aspect is protection against traffic snoopers, not against what the end cache sees. In other words, it’s solving a different problem.

Hi MarkD and Kevin,

MarkD is right: the CDNs will see a request for each unique EDNS0 Client Subnet that Google sees. In essence, Google keeps a separate cache for each subnet (typically /24 or /48 subnets).

A stub resolver (e.g. the “forwarding” resolver on your system) can ask a recursive resolver not to include EDNS0 Client Subnet information in its recursive chain. It does this by adding an EDNS0 Client Subnet option with a source prefix length of zero to the initial query. For more information, see section 7.1.2 (Stub Resolvers) of RFC 7871, which states: “A SOURCE PREFIX-LENGTH value of 0 means that the Recursive Resolver MUST NOT add the client’s address information to its queries.”
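As an illustration, the opt-out option is tiny: with SOURCE PREFIX-LENGTH 0, no ADDRESS octets follow, so the payload is just FAMILY plus the two prefix-length bytes. The helper names below are hypothetical; the sketch covers only the ECS option bytes, not a full DNS message with an OPT record.

```python
import struct

ECS_OPTION_CODE = 8  # EDNS0 Client Subnet (RFC 7871)


def opt_out_ecs_option(family: int = 1) -> bytes:
    """ECS option a stub can send to forbid the recursive resolver from adding its address.

    SOURCE PREFIX-LENGTH = 0 means zero ADDRESS octets follow.
    """
    payload = struct.pack("!HBB", family, 0, 0)  # FAMILY, SOURCE=0, SCOPE=0
    return struct.pack("!HH", ECS_OPTION_CODE, len(payload)) + payload


def forbids_client_address(option: bytes) -> bool:
    """True if `option` is an ECS option whose source prefix length is 0."""
    code, _length = struct.unpack("!HH", option[:4])
    if code != ECS_OPTION_CODE:
        return False  # not a Client Subnet option at all
    _family, source_prefix, _scope = struct.unpack("!HBB", option[4:8])
    return source_prefix == 0
```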

The increase in usage of 8.8.8.8 as a DNS resolver is simply explained by the fact that the Chrome browser configures it on install, just as the next Firefox version may set 1.1.1.1.

Hi JoeSix,

Are you sure about this? I can’t find any reference to Chrome setting their DNS settings as default. It certainly hasn’t done that on my system.

Firefox may indeed set its default DNS-over-HTTPS resolver to 1.1.1.1; I suspect (and hope) they will offer a wider variety of options, though.