When browsing the web, chances are that your requests and responses flow through one (or more) proxy server(s). This could be due to various reasons, one of which is load balancing. With load balancing, the (reverse) proxy server will pick one backend server (out of a pool of available servers) to forward incoming requests to. This enables the system administrator to distribute the load of incoming requests over multiple servers.

A fundamental shortcoming of this configuration is that the backend server becomes oblivious to who is actually visiting the website. From the backend server’s perspective, each request originates from the proxy server, making it difficult, for example, to enforce IP-based access controls or maintain an accurate log. In HTTP, this is trivially solved by including the X-Forwarded-For header, which allows the proxy server to communicate the IP address of the client to the backend server. But what about other protocols?

Most protocols do not have the equivalent of an X-Forwarded-For header. To solve this, HAProxy came up with the PROXY protocol, which is a Layer 4 protocol that allows a proxy server to communicate client information to a backend server. Although the protocol has been around for several years, little is known about its usage in the wild. As such, my colleagues and I from the University of California, Santa Barbara did a large-scale measurement study of the PROXY protocol. In particular, we studied the PROXY protocol in a security context and looked at potential ways in which adversaries can abuse it in the wild.

Dissecting the PROXY protocol

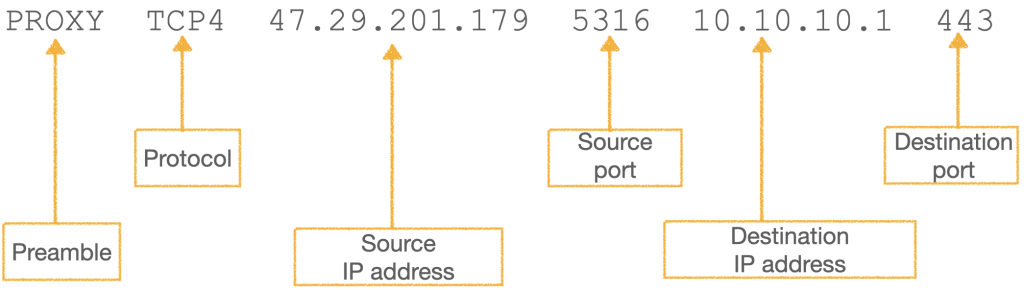

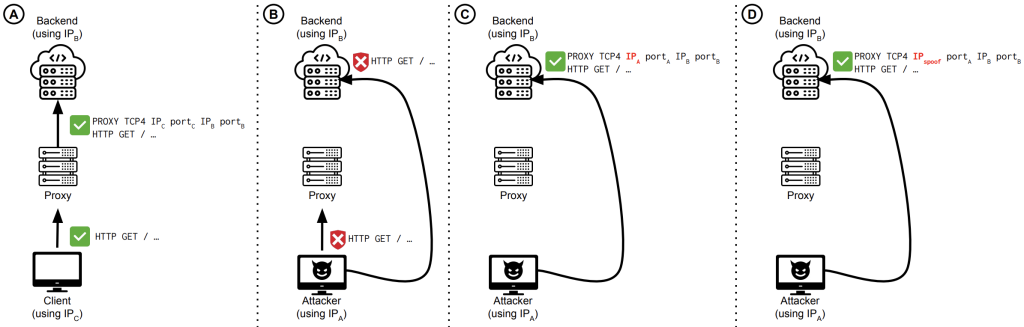

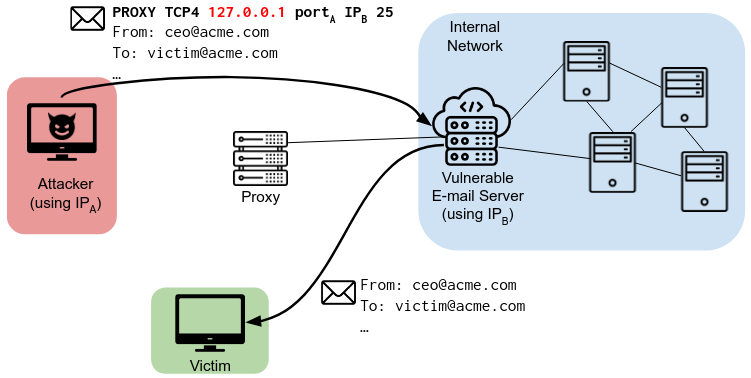

The PROXY protocol works by providing a PROXY header during the connection setup from the proxy server to the backend server. In this header (illustrated in Figure 1), the proxy server includes client information such as the IP address and the source port from which the connection originates. After parsing this information, the backend server can now attribute this connection to the IP address included in the header.
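To make the header concrete, here is a minimal Python sketch of building and parsing one. It assumes the human-readable version 1 format from the HAProxy specification (a single line of the form PROXY TCP4 src dst sport dport terminated by CRLF); the binary version 2 format is not covered, and the function names build_proxy_v1 and parse_proxy_v1 are illustrative, not part of any library.

```python
import ipaddress


def build_proxy_v1(src_ip: str, dst_ip: str, src_port: int, dst_port: int) -> bytes:
    """Build a version 1 (text) PROXY header line for a TCP connection."""
    src = ipaddress.ip_address(src_ip)
    dst = ipaddress.ip_address(dst_ip)
    if src.version != dst.version:
        raise ValueError("source and destination must use the same IP version")
    family = "TCP4" if src.version == 4 else "TCP6"
    return f"PROXY {family} {src} {dst} {src_port} {dst_port}\r\n".encode("ascii")


def parse_proxy_v1(data: bytes):
    """Split a v1 PROXY header off the front of `data`.

    Returns (info, rest): `info` is a dict with the client details (or None
    for the UNKNOWN family) and `rest` is the payload following the header.
    """
    line, sep, rest = data.partition(b"\r\n")
    if not sep or not line.startswith(b"PROXY "):
        raise ValueError("no PROXY v1 header found")
    fields = line.decode("ascii").split(" ")
    if fields[1] == "UNKNOWN":
        return None, rest
    if len(fields) != 6 or fields[1] not in ("TCP4", "TCP6"):
        raise ValueError("malformed PROXY v1 header")
    _, family, src, dst, sport, dport = fields
    info = {
        "family": family,
        "src_ip": str(ipaddress.ip_address(src)),  # raises ValueError if invalid
        "dst_ip": str(ipaddress.ip_address(dst)),
        "src_port": int(sport),
        "dst_port": int(dport),
    }
    return info, rest
```

A backend would call parse_proxy_v1 on the first bytes of a new connection and attribute the connection to info["src_ip"], then hand the remaining bytes to the application protocol.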

Many server software packages support the PROXY protocol, and enabling it is often a matter of adding a keyword to the server’s configuration file. For example, in Apache, this is done with the RemoteIPProxyProtocol On directive, and in NGINX, you can simply add proxy_protocol at the end of your listen directive (HAProxy provides a list of supported software).

Security model

Besides load balancing, reverse proxies can also enforce security checks before a packet reaches the backend infrastructure. Enabling the PROXY protocol adds a further layer of security to such a setup, since backend servers now expect the PROXY header to be present during connection setup.

Scenarios A and B in Figure 2 illustrate this concept. In scenario A, we see a legitimate (and typical) interaction between a client and the infrastructure, where the client connects to the proxy, which then forwards the request to the backend server and includes the PROXY header. When an adversary tries to connect to the same proxy server, its access will be denied due to, for example, IP-based access control enforced by the proxy. If the adversary were to bypass the on-path proxy and directly connect to the backend server, the server would not proceed with the connection since it requires the presence of a PROXY header during connection setup.

Unfortunately, the protocol provides no mechanism to prevent an adversary from injecting a PROXY header themselves when connecting to the backend server (this is depicted in scenario C). Rather, it requires a proactive system administrator to maintain a list of trusted proxy servers from which to accept PROXY protocol information. If no such list is present, any adversary can send PROXY headers to the backend server. Moreover, since the PROXY header is now under the attacker’s control, they can easily spoof the IP address value in the header (scenario D), which has further security implications as we will discuss below.
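The trusted-proxy list described here can be sketched in a few lines of Python. This is an illustration under stated assumptions, not how any particular server implements it: the addresses in TRUSTED_PROXIES are made up, the function names are hypothetical, and the policy for connections without a header (fall back to the TCP peer address) is just one possible choice.

```python
import ipaddress

# Hypothetical allowlist: the only peers allowed to send PROXY headers.
TRUSTED_PROXIES = [
    ipaddress.ip_network("10.0.0.5/32"),
    ipaddress.ip_network("10.0.1.0/24"),
]


def is_trusted_proxy(peer_ip: str) -> bool:
    """True if the TCP peer address belongs to a trusted proxy network."""
    peer = ipaddress.ip_address(peer_ip)
    return any(peer in net for net in TRUSTED_PROXIES)


def resolve_client_ip(peer_ip: str, claimed_src_ip=None) -> str:
    """Decide which IP address a connection is attributed to.

    `peer_ip` is the address of the TCP peer; `claimed_src_ip` is the source
    address taken from a PROXY header, or None if no header was sent.
    """
    if claimed_src_ip is None:
        # No header: attribute the connection to the peer itself.
        return peer_ip
    if not is_trusted_proxy(peer_ip):
        # Scenarios C and D: an arbitrary host injected a PROXY header.
        raise PermissionError("PROXY header received from untrusted peer")
    return claimed_src_ip
```

With this check in place, a header claiming 127.0.0.1 is only honoured when it arrives from one of the listed proxies; the same header sent by an arbitrary Internet host is rejected instead of being believed.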

Large-scale measurement study of the PROXY protocol

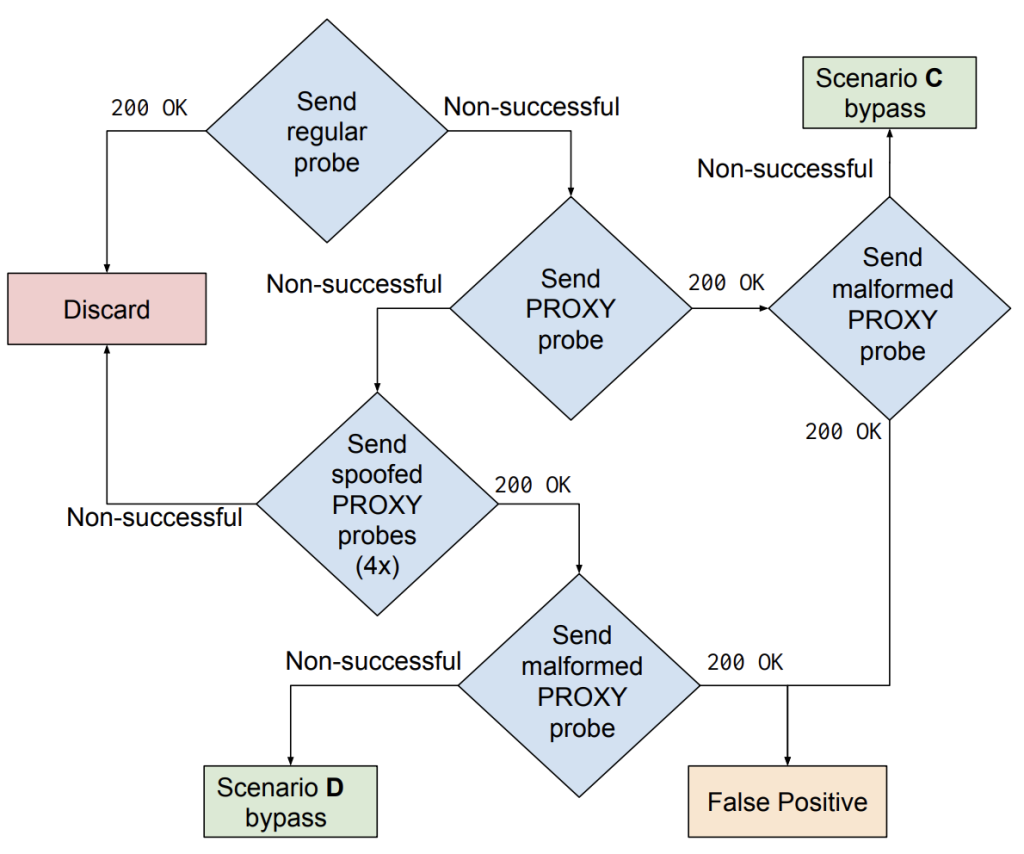

In our work, we measured how servers in the wild react to unsolicited PROXY headers. We did so across the full IPv4 address range for the HTTP, SMTP and SSH protocols. For each protocol, we launched six different tests on each target IP address (see Figure 3). Our first test functioned as a baseline and consisted of a regular probe without an injected PROXY header. For the second test, we injected into each packet a PROXY header that contains the IP address of our scanning machine in the source IP address field of the header. This gave us an initial idea of how servers react when receiving a PROXY header from an arbitrary source on the Internet.

If the baseline probe was unsuccessful, but we received a 200 OK status code with the injected header, we assumed that we potentially bypassed an on-path proxy (scenario C) and obtained access to a resource otherwise only reachable by the on-path proxy server. If this attempt was unsuccessful, we tried again using a spoofed value for the source IP address in the PROXY header.

Specifically, we launched four tests, each with a different IP address in the PROXY header: 127.0.0.1, 10.0.0.10, 172.16.0.10 and 192.168.0.10. By using the localhost loopback address, along with three common internal network addresses, we tested whether the receiving server believed that the initial request originated from within the server’s internal network (scenario D). If a target server returned an unsuccessful response to the first two probes, but responded successfully to a probe with a spoofed IP address, we concluded that we had potentially bypassed the server’s access control checks using the PROXY protocol. Note that we also sent a malformed PROXY header to ascertain whether the observed effect indeed stemmed from the PROXY header (see our paper for more details on this methodology).
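The six tests and the decision logic described above can be summarized in a short Python sketch. It is not the scanner used in the study: it assumes version 1 (text) PROXY headers, the destination fields placed in the header are a guess, the labels and function names are hypothetical, and the malformed-header control probe is left out.

```python
# The four spoofed source addresses listed in the text.
SPOOFED_SOURCES = ["127.0.0.1", "10.0.0.10", "172.16.0.10", "192.168.0.10"]


def probe_headers(scanner_ip: str, target_ip: str, src_port: int, dst_port: int):
    """Return (label, header_bytes) pairs for the six tests per target.

    The header bytes would be sent before the regular protocol payload;
    the baseline probe sends no header at all.
    """
    def header(src: str) -> bytes:
        # Assumed v1 header; which destination values to use is a guess.
        return f"PROXY TCP4 {src} {target_ip} {src_port} {dst_port}\r\n".encode("ascii")

    probes = [("baseline", b""), ("own_ip", header(scanner_ip))]
    probes += [(f"spoofed:{ip}", header(ip)) for ip in SPOOFED_SOURCES]
    return probes


def classify(results: dict) -> str:
    """Map per-probe success flags (label -> bool) to an outcome."""
    if results.get("baseline"):
        return "reachable without header"
    if results.get("own_ip"):
        return "scenario C"  # unsolicited header accepted, proxy bypassed
    if any(ok for label, ok in results.items() if label.startswith("spoofed:")):
        return "scenario D"  # only a spoofed internal address was accepted
    return "no effect"
```

For HTTP, "success" would correspond to a 200 OK response; what counts as success for SMTP and SSH is defined in the paper and not modelled here.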

Findings

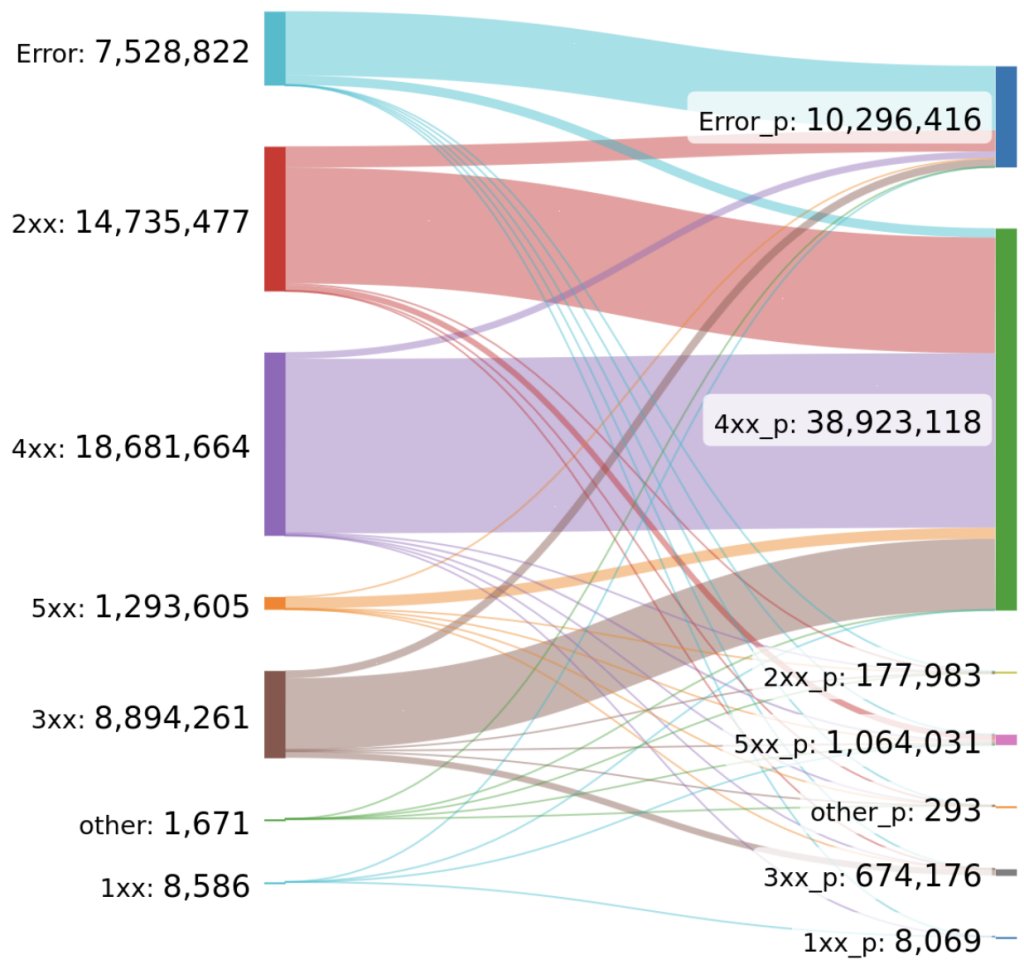

Over HTTP, we found that 177,983 hosts happily accepted our unsolicited PROXY header. For SMTP and SSH, the numbers were 2,332,377 and 2,343,420, respectively.

Figure 4 shows how the same hosts react with different HTTP status codes depending on whether a PROXY header is included or not.

Sensitive web pages

For all 177,983 web pages returned over HTTP, we applied a clustering algorithm to categorize the type of web page hosted on these endpoints. To contrast our findings, we ran the same clustering algorithm on a random set of 177,983 pages that are accessible using regular GET requests (without a PROXY header). The majority of the web pages in our experiment group are either boilerplate pages (pages with a website template but no actual content) or login pages.

About 12,000 pages from our experiment group could not be classified by our algorithm. As such, we sampled 200 web pages from this uncategorized group for a manual analysis. From this analysis, we found that 36% of these web pages provide access to sensitive portals such as home automation systems, temperature sensors, electric vehicle charging station diagnostics, Internet of Things (IoT) sensors, and intrusion alarm monitoring. By contrast, in our control group, no such sensitive pages were present, showing that the PROXY protocol can be abused to obtain access to sensitive control portals.

Access bypass through spoofed PROXY headers

Recall that we also sent a series of probes containing a PROXY header with a spoofed source IP address value. Our results show that 10,089 hosts initially responded negatively to a regular probe, yet returned a 200 (OK) status code when receiving one of our ‘spoofed probes’. That is, by using a spoofed value, we fooled the receiving host and obtained access to resources that would otherwise be hidden. This indicates that some system administrators rely on PROXY header values to perform access control.

Through our SMTP experiment, we confirmed that the PROXY header can also expose the internal infrastructure of a network. When establishing an SMTP connection, the host might include its domain during the initial handshake. In our experiments, we encountered 268 hosts advertising a .home Top-Level Domain (TLD). In 2018, ICANN decided not to delegate this TLD because it collides with internal domain names (many organizations use it for their internal network). As a result, no public domain can be hosted on the .home TLD today. Therefore, these 268 instances must be within an internal network.

SMTP open relays

We also found that spoofed PROXY headers can turn email servers into open relays. This is, in part, due to the forwarding policies set by email servers. Postfix, for example, will by default forward any email stemming from the localhost address, since it assumes that authentication has already taken place. By using the localhost address in our PROXY header, we can therefore bypass email authentication and send emails from any email address on the Internet. In our study, we found at least 373 SMTP servers vulnerable to this attack.

More worryingly, while ‘regular’ open relays get taken down rather quickly, the open relays found in our study are persistent since current scanners do not use PROXY headers to search for open relays in the wild.

Conclusion

The PROXY protocol is a useful protocol that can strengthen load-balancing setups. However, system administrators need to be aware of the security issues found in our study. The most effective way to mitigate these issues is to maintain a list of trusted proxy servers from which to accept PROXY headers. Although this mitigation is trivial, our study shows that many hosts on the Internet do not apply it.

If you want more details on this work, including the ethics of our research, please read the full paper or see the presentation of this work at NDSS’25.

Stijn Pletinckx is a PhD student in the SecLab at the University of California, Santa Barbara. His research focuses on the intersection of network security and Internet measurements, often incorporating concepts of web security as well. His work aims to empirically study the Internet landscape within a security context.

Christopher Kruegel and Giovanni Vigna contributed to this work.

