Cloud storage services like Amazon S3 and Google Cloud Storage offer reliable, scalable, and easy-to-deploy storage to anyone who can pay. Clients simply name a storage ‘bucket’ and set its access control, making the service widely popular — Amazon S3 hosted over 100 trillion files in 2021. However, this flexibility introduces risks. The confidentiality of each bucket is not governed by traditional enterprise security mechanisms, but by proper access control configuration by the bucket operator.

Although many breaches have been publicly linked to misconfigured cloud storage, none of the public reports documents in detail how the attacks were carried out or how attackers identified the vulnerable buckets. Furthermore, no public database of AWS S3 bucket names exists, so attackers have to guess the name of a bucket exactly.

To determine the extent to which insecure cloud storage is actively targeted for attack, my colleagues and I at UC San Diego and UC Los Angeles deployed hundreds of AWS S3 honeybuckets with different names and content to lure and measure different scanning strategies.

Key points

- Unsolicited visitors find AWS S3 public buckets by guessing their names exactly, not by finding their URLs.

- Buckets named after companies with vulnerability disclosure programs received more unsolicited traffic than buckets named after companies without vulnerability disclosure programs.

- Humans with malicious intent read and interpret sensitive files in public buckets.

Pilot study: How buckets are targeted

We considered multiple methods for finding public buckets, including finding a URL to a bucket (such as URLs in GitHub repositories or URLs in Pastes on Pastebin) or guessing bucket names by searching for key terms of interest (for example, Bitcoin, or Tesla). To discern how buckets were found, we created over 100 public AWS S3 buckets, modulating their naming schemes and whether we leaked their URLs.

To ensure the leaked buckets could only be found via a link, and not by guessing the name of the bucket, we gave them random alphanumeric names (such as ‘q81osr2ba5wnid4g’). Random alphanumeric names make it improbable for a visitor to guess the name exactly, giving us high certainty that any visit to such a bucket came through its leaked link.
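To give a sense of why such names are hard to guess, here is a minimal sketch of how a random 16-character name could be generated and how large the resulting search space is. The function names and the choice of Python's `secrets` module are assumptions for illustration; the study does not say how its names were produced.

```python
import math
import secrets
import string

# Lowercase letters and digits: 36 symbols, all valid in S3 bucket names.
ALPHABET = string.ascii_lowercase + string.digits


def random_bucket_name(length: int = 16) -> str:
    """Return a random alphanumeric name (hypothetical helper)."""
    return "".join(secrets.choice(ALPHABET) for _ in range(length))


def keyspace_bits(length: int = 16) -> float:
    """Size of the search space in bits: length * log2(alphabet size)."""
    return length * math.log2(len(ALPHABET))


if __name__ == "__main__":
    print(random_bucket_name())
    print(f"{keyspace_bits():.1f} bits of search space")
```

With 36 symbols and 16 positions there are 36^16 possible names, so blind guessing of one specific name is impractical.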

We named other buckets using various keywords, including terms related to cryptocurrencies and companies like Tesla and Walmart. To ensure these buckets could be discovered only by guessing their names, we did not leak their URLs.

Ultimately, our buckets were found by guessing their names exactly, not via their URLs.

Specifically, buckets named after companies were scanned with the highest number of operations (such as listing the bucket directory and downloading a file) and by the most IP addresses. Company buckets were targeted by more IPs and Autonomous Systems (ASes) per day than all other bucket types, a statistically significant difference.

Our pilot study also answered how bucket-finders interacted with the buckets after discovering them. Once a user lists the bucket directory, they might choose to download only a subset of the files. We uploaded files with a variety of enticing names and contents to each honeybucket to test for file-download preferences among scanning actors. For example, each honeybucket included a ‘Client list’ file to lure scanning actors searching for sensitive client information. The file included fake names, home addresses, and social security numbers. We also uploaded files named after sensitive email folder names, SSH private keys, and Google Takeout backups.

Once our buckets were found, they were actively interacted with. Over 700 unique IP addresses (of 6,567 total IPs) attempted to download a file from a bucket. The client list file was downloaded the most, with 160 total downloads across 88 unique IP addresses.

Other visitors used the public bucket as an opportunity to upload their own files. Over 100 unique IP addresses (of 6,567 total IPs) uploaded at least one file to a bucket, for a total of 206 files. Through manual investigation, we identified four unique files that hosted malicious content. Only two files, ‘upload.png’ and ‘s3sec.txt’, contained a warning that our bucket was public.

While the pilot experiment revealed key insights into scanning activity, several questions remained unanswered. It was unclear why the actors targeted companies or how they might have exploited the downloaded sensitive data. Additionally, actors could have used multiple IP addresses to mask their activity, making it difficult to determine whether multiple interactions originated from the same actor.

To address these issues, we deployed a new, refined honeybucket experiment.

Exploitation of company-named buckets

There were two main changes in our second study — how we named the buckets and their contents. We named this round of buckets only after two categories of Fortune 500 companies, those with vulnerability disclosure programs and those without.

To attract actors to interact with our honeybuckets, we created a directory containing fake financial data. The directory name was generated using an hourly-updated hashed timestamp, such as ‘update_2022 chargeback_{unix time}’. As a result, an actor who used multiple IP addresses across multiple hours to list bucket contents and download individual files could be identified using the hashed timestamp.
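As a rough illustration of an hourly-updated hashed timestamp, the sketch below derives a short tag from the start of the current hour and embeds it in a directory name. The salt, the use of SHA-256, the tag length, and the `chargeback` prefix are all assumptions for illustration, not the scheme used in the study.

```python
import hashlib

SECONDS_PER_HOUR = 3600


def hourly_tag(unix_time: int, salt: str = "demo-salt", length: int = 12) -> str:
    """Hash the start of the hour so the tag changes once per hour.

    The salt (hypothetical) keeps visitors from recomputing tags themselves.
    """
    hour_start = unix_time - (unix_time % SECONDS_PER_HOUR)
    digest = hashlib.sha256(f"{salt}:{hour_start}".encode()).hexdigest()
    return digest[:length]


def directory_name(unix_time: int, prefix: str = "chargeback") -> str:
    """Build a directory name carrying the hourly tag (hypothetical format)."""
    return f"{prefix}_{hourly_tag(unix_time)}"


if __name__ == "__main__":
    import time

    print(directory_name(int(time.time())))
```

Because the tag is stable within an hour and different across hours, a request for an old name reveals roughly when the requester, or someone working with them, last saw the directory listing.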

We also wanted to infer whether actors who downloaded sensitive data had malicious intentions, such as using the downloaded information for nefarious purposes. To this end, we hosted an informative document that included an SSH username, password, and IP address for a honeypot server that we hosted, stating that the encryption key to the sensitive files in the bucket could be found in our SSH honeypot. Notably, we told actors to concatenate their ‘3-digit token’ to the password, knowing that we neither provided them with such a token nor would any username-password combination really grant them entry to the server. We also stated that these SSH credentials belonged to a third-party contractor, making clear that the server was not owned by the company in the bucket name. We monitored login attempts to see if any attempted SSH credentials matched the credentials provided in the honeybucket.

We tracked the downloads of the informative document and monitored our SSH honeypot for six months. A total of 182 unique IP addresses downloaded at least one bucket’s informative document, which contained an email address (a direct method to contact the bucket owner) and leaked SSH credentials.

Eight unique IPs, traced to eight unique actors, collectively performed over 3,000 login attempts using the leaked SSH credentials. Five unique actors attempted at most eight passwords against our SSH honeypot (in particular, they did not exhaustively enumerate all possible passwords). In one case, a single actor used two IP addresses to find a financial institution bucket, downloaded the informative document, and attempted to log in to the SSH honeypot — all within three minutes.

Both IP addresses resolve to a domain that belongs to a penetration testing, threat and attack surface management company. Even if the financial institution were a customer of the threat management company and had authorized it to act on its behalf, neither the credentials we provided nor the domain accessed belonged to the financial institution. Indeed, the downloaded informative document clearly stated that the SSH credentials belonged to a third-party contractor, and not to the financial institution. Notably, the threat management company did not append a 3-digit token to the SSH password, as the informative document instructed. While it is conceivable that the threat management company understood that no access would be gained, their attempt at unauthorized access violated ethical norms.

Three unique actors attempted all one thousand variations of the password leaked in the informative document. In one instance, an actor used multiple IP addresses to find a company bucket, download the informative document, and attempt to log into the SSH honeypot across 24 hours. However, unlike the above SSH abuse attempt, this actor clearly understood the requirement to append a 3-digit token to the SSH password, as they iterated through all 1,000 possibilities while attempting to log in. It is difficult to construct a credible scenario in which the actor could have believed these actions were authorized.

Identifying colluding IPs using VPNs

In the pilot experiment, thousands of unique IP addresses interacted with our buckets. To identify individual actors who may have used multiple IP addresses to search for buckets and attempted to log into our honeypot, our second experiment updated the SSH password in the informative document every hour.

Specifically, the document stated that a unique 3-digit token should be concatenated with a secure numeric key, where the ‘secure numeric key’ was just a hash of the current timestamp (hence, it is updated frequently). We then used the SSH password as a link between the IP address that obtained the password and the IP address that used it. Similarly, we updated the name of the text document to trace actors who may have used different IP addresses to list directory contents and download individual files.
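The linking idea can be sketched as follows: derive a numeric key from each hour's timestamp, then check whether a password seen at the SSH honeypot matches a key that was published in some hour, with or without a 3-digit token appended. The hash function, key length, and function names are assumptions for illustration only.

```python
import hashlib
from typing import Dict, Optional, Tuple


def numeric_key(hour_start: int, digits: int = 10) -> str:
    """Derive a fixed-length numeric key from an hour's timestamp (assumed scheme)."""
    digest = hashlib.sha256(str(hour_start).encode()).hexdigest()
    return str(int(digest, 16) % 10**digits).zfill(digits)


def classify_attempt(
    password: str, issued: Dict[str, int]
) -> Optional[Tuple[int, str]]:
    """Map an attempted password back to the hour whose document leaked it.

    `issued` maps each published key to the hour it was published in.
    Returns (hour_start, "no_token" | "with_token"), or None if unrelated.
    """
    for key, hour_start in issued.items():
        if password == key:
            return hour_start, "no_token"
        suffix = password[len(key):]
        if (
            password.startswith(key)
            and len(suffix) == 3
            and suffix.isascii()
            and suffix.isdigit()
        ):
            return hour_start, "with_token"
    return None


if __name__ == "__main__":
    issued = {numeric_key(h): h for h in (0, 3600, 7200)}
    print(classify_attempt(numeric_key(3600) + "042", issued))
```

A match identifies the hour in which the document was downloaded, which narrows down the IP addresses that could have supplied the password to the one attempting the login.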

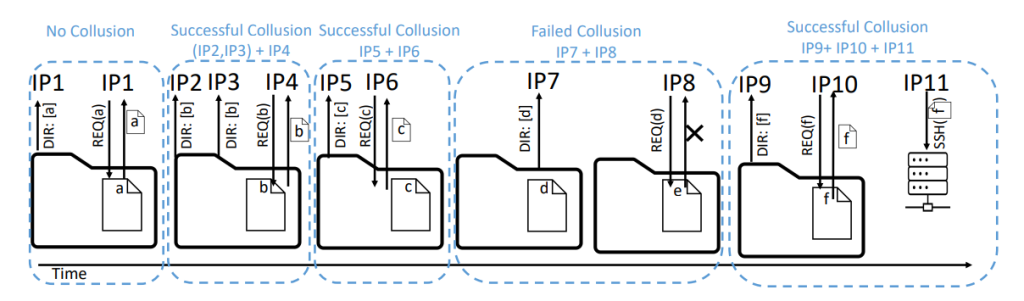

To identify colluding IP addresses, we relied on the hourly updates of unique identifiers in the bucket filenames. These identifiers provided a link between colluding IP addresses that executed operations on behalf of each other.

Concretely, three cases of operations revealed colluding IPs:

- Failure: Without having ever listed the directory, an IP attempted to download a file that once existed but no longer does. For example, in Figure 1, IP9 never listed the directory but attempted to download file d, which no longer existed. IP8 was the only IP that listed the directory during file d’s existence, thus IP8 and IP9 must have been colluding IPs.

- Success: Without having ever listed the directory, there was a successful download of a file. For example, in Figure 1, IP6 never listed the directory, but successfully downloaded file c. IP5 was the only IP address that listed the directory after file c was uploaded, but before IP6 downloaded file c. Thus, IP6 must have been colluding with IP5.

- SSH: Without having ever downloaded the informative document, there was an attempt to log in to the SSH honeypot. For example, in Figure 1, IP12 never listed the directory but attempted to log in to the SSH honeypot using the credentials only found in file f. IP11 was the only IP address that successfully downloaded file f, thus IP12 and IP11 must have been colluding IPs. We used the success case above to determine that IP10 and IP11 must have been the same actor as well.
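The three cases above can be sketched as a small linking routine over a log of operations. This is a simplified illustration under stated assumptions, not the authors' implementation: the event tuple format, the `file_windows` mapping of each file to its existence interval, and the use of a union-find structure to merge linked IPs are all hypothetical.

```python
from typing import Dict, List, Optional, Tuple

Event = Tuple[int, str, str, Optional[str]]  # (time, ip, op, file)


class UnionFind:
    """Minimal union-find used to merge IPs into actors."""

    def __init__(self) -> None:
        self.parent: Dict[str, str] = {}

    def find(self, x: str) -> str:
        self.parent.setdefault(x, x)
        while self.parent[x] != x:
            self.parent[x] = self.parent[self.parent[x]]  # path halving
            x = self.parent[x]
        return x

    def union(self, a: str, b: str) -> None:
        self.parent[self.find(a)] = self.find(b)


def link_colluding_ips(
    events: List[Event], file_windows: Dict[str, Tuple[int, int]]
) -> List[List[str]]:
    """Group IPs into actors; op is "list", "download", or "ssh".

    For "ssh", `file` names the document whose credentials were used.
    """
    uf = UnionFind()
    listings = [(t, ip) for t, ip, op, _ in events if op == "list"]
    listers = {ip for _, ip in listings}
    downloads = [(t, ip, f) for t, ip, op, f in events if op == "download"]

    for t, ip, op, f in events:
        uf.find(ip)  # register every IP, even if it is never linked
        if f is None:
            continue
        start, end = file_windows[f]
        candidates = set()
        if op == "download" and ip not in listers:
            # Failure and success cases: who listed while the file was visible
            # and before this download?
            candidates = {lip for lt, lip in listings if start <= lt < min(end, t)}
        elif op == "ssh" and all(not (dip == ip and df == f) for _, dip, df in downloads):
            # SSH case: who successfully downloaded the document beforehand?
            candidates = {
                dip for dt, dip, df in downloads
                if df == f and start <= dt < end and dt <= t
            }
        if len(candidates) == 1:  # link only when the source is unambiguous
            uf.union(ip, candidates.pop())

    groups: Dict[str, List[str]] = {}
    for ip in uf.parent:
        groups.setdefault(uf.find(ip), []).append(ip)
    return sorted(sorted(g) for g in groups.values())


if __name__ == "__main__":
    windows = {"d": (0, 10), "c": (20, 30), "f": (40, 50)}
    log = [
        (5, "IP8", "list", None), (12, "IP9", "download", "d"),
        (22, "IP5", "list", None), (25, "IP6", "download", "c"),
        (42, "IP10", "list", None), (45, "IP11", "download", "f"),
        (47, "IP12", "ssh", "f"),
    ]
    print(link_colluding_ips(log, windows))
```

The sketch links two IPs only when exactly one candidate could have supplied the filename or credentials, mirroring the "only IP that listed" and "only IP that downloaded" reasoning in the cases above.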

Applying this algorithm across all logged bucket operations, the vast majority (94.6%) of actors used only one IP address. In the most extreme case, one actor used 45 unique IPs — a subset of which map to known VPN products and Tor exit nodes — to download files.

Recommendations

Our work demonstrates that buckets named after commercial entities are actively targeted and exploited and that defending against cloud storage attackers is simple — configure buckets with sensitive information to be private. Nevertheless, we propose the following recommendations for decreasing the risk of bucket exploitation.

- We recommend that organizations consistently scan for both known and unknown cloud storage assets to immediately detect misconfigurations. To scan for known assets, organizations can maintain a bucket bookkeeping system that periodically scans all buckets. To scan for unknown assets, organizations should scan for easy-to-guess buckets named after the organization itself.

- Weigh risk according to organization type. Companies with a Vulnerability Disclosure Program (VDP) are more likely to be at risk than universities. We recommend that security services that exist to help organizations trace stray and unknown assets weigh the risk of exposed bucket exploitation according to the organization type. Such services can more aggressively scan and push to patch according to the organization type.
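As a starting point for scanning for unknown assets, an organization could generate easy-to-guess names derived from its own name and check whether each one exists. The sketch below is illustrative only: the suffix list and helper names are hypothetical, and the status-code interpretation reflects the commonly observed behavior of anonymous requests to S3's virtual-hosted endpoint (200, 403, 404), which should be verified against current AWS documentation. It is intended only for checking names tied to your own organization.

```python
import re
import urllib.error
import urllib.request
from typing import List, Sequence

# S3 naming rules: 3-63 chars of lowercase letters, digits, dots, hyphens,
# beginning and ending with a letter or digit.
VALID_NAME = re.compile(r"^[a-z0-9][a-z0-9.-]{1,61}[a-z0-9]$")


def candidate_bucket_names(
    org: str, suffixes: Sequence[str] = ("backup", "data", "dev", "prod")
) -> List[str]:
    """Generate guessable bucket names from an organization name."""
    base = re.sub(r"[^a-z0-9]+", "-", org.lower()).strip("-")
    names = [base] + [f"{base}{sep}{s}" for s in suffixes for sep in ("-", "")]
    return [n for n in names if VALID_NAME.match(n)]


def interpret_status(status: int) -> str:
    """Translate an HTTP status from an anonymous bucket request."""
    return {
        200: "exists, publicly listable",
        403: "exists, access denied",
        404: "no such bucket",
    }.get(status, "inconclusive")


def probe(name: str, timeout: float = 5.0) -> str:
    """Send an anonymous HEAD request for the bucket (requires network access)."""
    request = urllib.request.Request(f"https://{name}.s3.amazonaws.com/", method="HEAD")
    try:
        with urllib.request.urlopen(request, timeout=timeout) as response:
            return interpret_status(response.status)
    except urllib.error.HTTPError as err:
        return interpret_status(err.code)
    except urllib.error.URLError:
        return "inconclusive"


if __name__ == "__main__":
    for name in candidate_bucket_names("Example Corp"):
        print(name, "->", probe(name))
```

Any name reported as publicly listable that the organization does not recognize is a candidate for immediate review.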

All the data from our Honeybuckets can be found on GitHub. This work was presented at EuroS&P 2024. For more information, read the full paper.

Katherine Izhikevich (UC San Diego B.S. ’22, M.S. ’24) is a Stephen L. Squires Scholar, Gary C Reynolds Scholar, and Computer Science PhD Department Fellow at UC San Diego. Her research interests are at the intersection of networking and security, primarily studying how to detect attackers in enterprise networks.
