Earlier this year, the IETF standardized HTTP/3, the latest version of the Hypertext Transfer Protocol (HTTP). While it shares many similarities with its predecessor, HTTP/2, the most notable difference is that it uses QUIC instead of TCP as its transport protocol.

While the performance of these two transport protocols has been compared before, their influence on web performance is still not entirely clear, as details such as HTTP prioritization can now shift. This led my colleagues and me to analyse how prioritization influences head-of-line (HOL) blocking and, in turn, web performance; we presented the results in our TMA’22 paper.

The following post provides some background on the changes between HTTP/2 and HTTP/3 and presents some key outcomes of our study:

- QUIC relieves HOL blocking during loss as it is stream-aware such that it knows which specific streams have been affected.

- In high (random) loss and low-bandwidth scenarios, where retransmissions occur often, parallel prioritization strategies can more often leverage the performance benefits of QUIC’s reduced HOL blocking.

- Moving streams from the application layer to the transport layer with HTTP/3/QUIC opens a new parameter space for optimization.

HTTP/2 to HTTP/3 — what’s changed?

All HTTP versions rely on a request-response pattern, for example, to request web resources such as images, videos and stylesheets when loading a web page. However, the versions differ in how they load these resources. For instance, HTTP/1.1 transfers only one resource at a time over a connection, while HTTP/2 and HTTP/3 use resource streams to multiplex multiple resource transfers over a single connection.

HTTP/2 implements its own streams on top of a single TCP connection, while HTTP/3 directly leverages the streams that QUIC provides at the transport layer. While this may sound like simply moving functionality between layers, switching to transport-layer streams tackles a major issue of HTTP/2: transport HOL blocking.

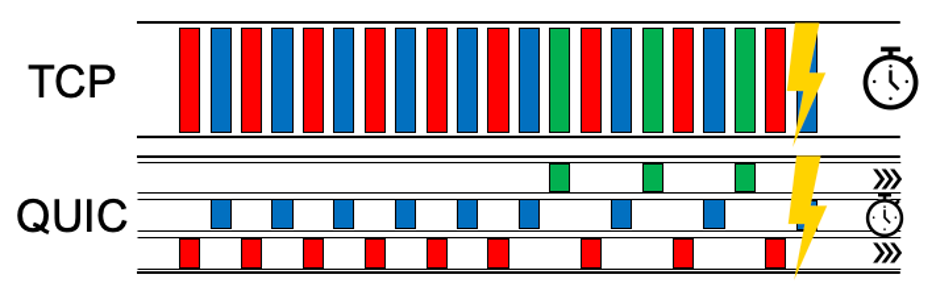

TCP handles a single opaque byte stream and is unaware of the resource streams on top of it. As a result, a lost packet on an HTTP/2 connection stalls not only the affected resource stream but all of the connection’s current transfers, as TCP waits for the retransmission before delivering any subsequent data.

QUIC relieves this HOL blocking because it is stream-aware and knows which specific streams are affected. As shown in Figure 1, QUIC knows that only the blue stream is affected and can forward the remaining streams, for example, so that the browser can already render images, while TCP would halt all resources until the retransmission arrives. However, for this idea to work, multiple streams need to be active.
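The difference can be sketched with a toy model. The Python below is our own illustration for this post, not code from the study; `Packet` and `hol_blocked_bytes` are hypothetical names, and the model assumes a single lost packet and that every later packet in flight arrives before its retransmission.

```python
from dataclasses import dataclass


@dataclass
class Packet:
    stream_id: int  # which HTTP resource stream the payload belongs to
    size: int       # payload bytes


def hol_blocked_bytes(in_flight: list[Packet], lost_index: int, stream_aware: bool) -> int:
    """Bytes that arrive after the lost packet but must wait for its retransmission.

    stream_aware=False models TCP: one ordered byte stream, so everything after
    the gap waits. stream_aware=True models QUIC: ordering is only enforced per
    stream, so only later packets of the affected stream wait.
    Hypothetical assumption: all later packets arrive before the retransmission.
    """
    lost_stream = in_flight[lost_index].stream_id
    return sum(
        pkt.size
        for pkt in in_flight[lost_index + 1:]
        if not stream_aware or pkt.stream_id == lost_stream
    )


# Nine 1200-byte packets in flight; the second one (index 1) is lost.
one_resource = [Packet(0, 1200) for _ in range(9)]         # a single active stream
three_resources = [Packet(i % 3, 1200) for i in range(9)]  # three interleaved streams

print(hol_blocked_bytes(one_resource, 1, stream_aware=False))     # 8400 (TCP)
print(hol_blocked_bytes(one_resource, 1, stream_aware=True))      # 8400 (QUIC, one stream)
print(hol_blocked_bytes(three_resources, 1, stream_aware=False))  # 8400 (TCP)
print(hol_blocked_bytes(three_resources, 1, stream_aware=True))   # 2400 (QUIC, three streams)
```

With only one resource in flight, QUIC blocks as many bytes as TCP; the saving only appears when several streams share the in-flight window.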

If you are interested in further information, Robin Marx (who also describes other HTTP/3 and QUIC intricacies) wrote a blog post that goes into much more detail on the theoretical background of HOL blocking and describes its possible outcomes.

HTTP prioritization influencing QUIC

The actual implementation of QUIC and the HTTP/3 server influences the number of active QUIC streams. Moreover, browsers can signal resource priorities to the server to announce which resources are likely to be most useful. For example, sending a website’s stylesheet first is more important than sending an image outside the viewport.

Browsers can also decide whether resources should be sent in parallel, for example, to render multiple images progressively, or sequentially, for resources that cannot be used progressively. In this way, the browser also influences how HOL blocking affects the QUIC connection: more parallel streams, possibly even sent round-robin, could mean less HOL blocking. However, related work generally finds that round-robin scheduling of web resources performs worst. Yet, it does not consider the interaction between loss and browser performance.
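To see why round robin tends to hurt resources that are only useful once complete, consider the two extremes below. This is a simplified sketch under our own assumptions (equal-sized packets, no loss, time counted in packet slots); the function names are hypothetical and do not correspond to any browser’s or server’s code.

```python
from collections import deque


def sequential_schedule(sizes: list[int]) -> list[int]:
    """Send each resource to completion before starting the next one."""
    order: list[int] = []
    for stream_id, n_packets in enumerate(sizes):
        order.extend([stream_id] * n_packets)
    return order


def round_robin_schedule(sizes: list[int]) -> list[int]:
    """Send one packet of each unfinished resource in turn."""
    queue = deque((sid, n) for sid, n in enumerate(sizes) if n > 0)
    order: list[int] = []
    while queue:
        sid, remaining = queue.popleft()
        order.append(sid)
        if remaining > 1:
            queue.append((sid, remaining - 1))  # re-queue at the back
    return order


def completion_slots(order: list[int]) -> dict[int, int]:
    """Packet slot (1-based) in which each resource's last packet is sent."""
    done: dict[int, int] = {}
    for slot, sid in enumerate(order, start=1):
        done[sid] = slot
    return done


sizes = [3, 2, 2]  # packets per resource, e.g. a stylesheet followed by two images
print(completion_slots(sequential_schedule(sizes)))   # {0: 3, 1: 5, 2: 7}
print(completion_slots(round_robin_schedule(sizes)))  # {0: 7, 1: 5, 2: 6}
```

In this example, round robin delays the first resource from slot 3 to slot 7, while the last resource finishes only one slot earlier (6 instead of 7). Only progressive resources, such as images that render partially, gain from receiving data early.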

This means there may be loss and retransmission scenarios where round-robin scheduling, combined with QUIC’s stream-awareness, allows the browser to render the website faster. However, it is unknown when this is the case, or whether round-robin scheduling is always detrimental. Thus, we set out to investigate this interplay.

Analysing the influence of prioritization on HOL blocking and real-world performance

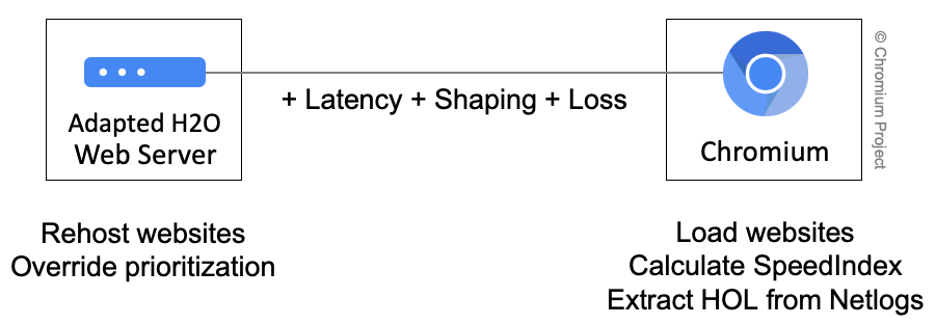

To analyse the interplay between prioritization, HOL blocking and web performance, we replayed 35 websites in a custom testbed (shown in Figure 2). We adapted web resource prioritization and scheduling on the one side, added link effects such as rate shaping, latency or packet loss during transmission in the middle, and loaded the website using Chromium on the other side.

We then measured performance using the SpeedIndex, which captures how quickly a page becomes visually complete (lower is better), and additionally calculated how many bytes were HOL blocked, that is, had to wait for a retransmission.
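As a worked example of the metric: SpeedIndex integrates the visually incomplete fraction of the page over time, that is, the integral of (1 - VC(t)) from the start of the load until the page is visually complete, so a page that shows most of its content early scores lower. The sketch below assumes visual completeness VC is already available as samples and stays constant between them; in practice it is derived from screen recordings. `speed_index` is a hypothetical helper, not the tooling used in the study.

```python
def speed_index(samples: list[tuple[float, float]]) -> float:
    """Approximate SpeedIndex in milliseconds.

    samples: (time_ms, visual_completeness) pairs sorted by time, with
    completeness in [0, 1] and the last sample at 1.0. Assumes the page is
    blank at t=0 and completeness is constant between samples (step function).
    """
    total = 0.0
    prev_time, prev_vc = 0.0, 0.0
    for time_ms, vc in samples:
        # Area of the still-incomplete part since the previous sample.
        total += (1.0 - prev_vc) * (time_ms - prev_time)
        prev_time, prev_vc = time_ms, vc
    return total


# Two hypothetical page loads that both finish at 2 s.
early_render = [(500.0, 0.25), (1000.0, 0.75), (2000.0, 1.0)]
late_render = [(1500.0, 0.25), (2000.0, 1.0)]
print(speed_index(early_render))  # 1125.0
print(speed_index(late_render))   # 1875.0
```

Both loads complete at the same time, but the one that renders earlier has the lower SpeedIndex, which is why the metric reacts to how prioritization orders visible resources.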

For scheduling, we based our strategies on those of today’s browsers, namely Chrome’s sequential strategy, Firefox’s parallel strategy (in two variants: one for HTTP/2 and one for the new Extensible Prioritization Scheme (EPS) signalling), and weighted and normal round robin. Since we essentially compare sequential with more parallel strategies, all results are shown relative to Chrome’s sequential strategy.
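For reference, EPS (RFC 9218) signals priorities through the `Priority` header field (or a PRIORITY_UPDATE frame) with two parameters: urgency `u` from 0 (most urgent) to 7, defaulting to 3, and the boolean `i`, which marks a response as incremental, i.e. usable progressively. The Python sketch below is a deliberately simplified parser (it does not implement full Structured Fields parsing) plus a grouping that loosely follows the RFC’s guidance for servers: serve lower urgency values first, send non-incremental responses one after another, and let incremental ones share bandwidth. The helper names and header values are hypothetical and are not the configurations used in the study.

```python
def parse_priority(value: str) -> tuple[int, bool]:
    """Simplified parse of an RFC 9218 Priority field value such as "u=2, i".

    Returns (urgency, incremental). Defaults are u=3 and i=false; malformed or
    out-of-range parameters are ignored. Not a full Structured Fields parser.
    """
    urgency, incremental = 3, False
    for member in value.split(","):
        key, _, param = member.strip().partition("=")
        if key == "u" and param.isdigit() and 0 <= int(param) <= 7:
            urgency = int(param)
        elif key == "i" and param in ("", "?1"):
            incremental = True  # bare "i" or "i=?1"
        elif key == "i" and param == "?0":
            incremental = False


    return urgency, incremental


def group_by_urgency(requests: dict[int, str]) -> list[tuple[int, list[int], list[int]]]:
    """Per urgency level, most urgent first: (urgency, non-incremental stream IDs
    to send one at a time in ID order, incremental stream IDs that share bandwidth)."""
    levels: dict[int, tuple[list[int], list[int]]] = {}
    for stream_id in sorted(requests):
        urgency, incremental = parse_priority(requests[stream_id])
        one_at_a_time, shared = levels.setdefault(urgency, ([], []))
        (shared if incremental else one_at_a_time).append(stream_id)
    return [(u, seq, inc) for u, (seq, inc) in sorted(levels.items())]


# Hypothetical requests; client-initiated bidirectional QUIC stream IDs are 0, 4, 8, ...
requests = {0: "u=0", 4: "u=3, i", 8: "u=3, i", 12: "u=3"}
print(group_by_urgency(requests))  # [(0, [0], []), (3, [12], [4, 8])]
```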

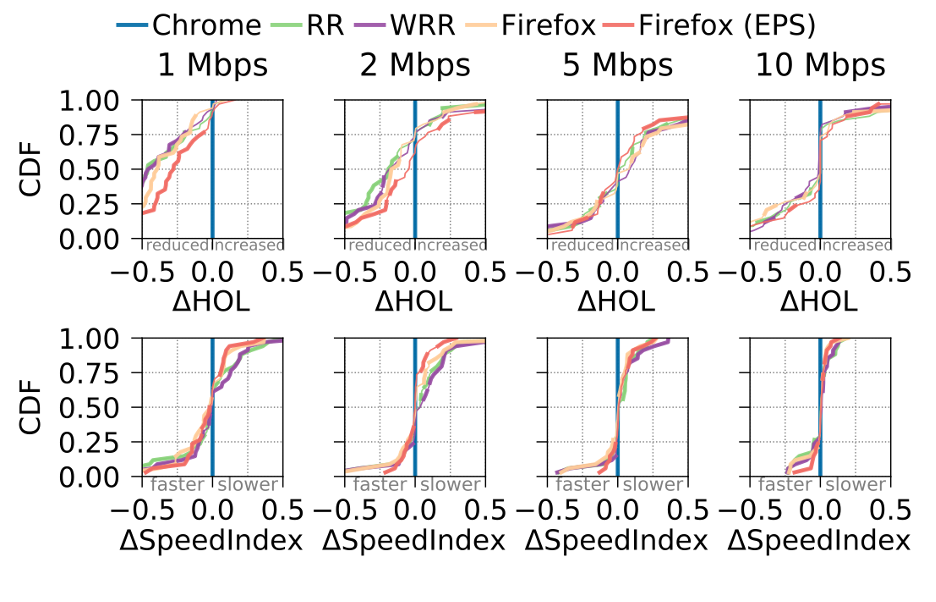

First, we examined how bandwidth influences the performance of HTTP/3 and its interplay with different resource prioritization schemes. To this end, we varied the bandwidth from 1 to 10 Mbps, added 50 ms of latency in each direction and applied no artificial packet loss (Figure 3).

For higher bandwidths, we saw less HOL blocking, and the performance differences between the strategies vanished. Here, many resources were already transferred during the connection’s slow start, so the browser had already received enough resources to begin rendering the page.

At 10 Mbps, several websites were even transferred completely during slow start, so no congestion loss occurred at all. Thus, for more than a quarter of all websites, barely any difference is visible.
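A back-of-the-envelope calculation shows why higher bandwidth lets whole pages fit into slow start. With 50 ms of latency in each direction, the round-trip time is 100 ms, so the bandwidth-delay product (BDP) is 10 Mbps × 0.1 s = 125,000 bytes at 10 Mbps but only 12,500 bytes at 1 Mbps. The sketch below assumes 1200-byte packets, an initial window of 10 packets and a congestion window that doubles every round trip, and it ignores bottleneck buffers; these parameters, the helper name and the page sizes are our assumptions, not values from the study.

```python
def slow_start_fits(page_bytes: int, bandwidth_mbps: float, rtt_ms: float,
                    packet_bytes: int = 1200, initial_packets: int = 10) -> tuple[int, bool]:
    """Simulate loss-free slow start round by round.

    Returns (round trips completed, finished), where finished is True if the
    whole page was sent before the congestion window outgrew the BDP, i.e.
    before the bottleneck would start queueing (and, with small buffers, dropping).
    """
    bdp_bytes = bandwidth_mbps * 1e6 / 8 * rtt_ms / 1e3
    cwnd = initial_packets * packet_bytes
    sent, rounds = 0, 0
    while sent < page_bytes:
        if cwnd > bdp_bytes:
            return rounds, False  # window now exceeds what the link carries per RTT
        sent += cwnd
        cwnd *= 2  # slow start: double the window every round trip
        rounds += 1
    return rounds, True


print(slow_start_fits(150_000, bandwidth_mbps=10, rtt_ms=100))  # (4, True)
print(slow_start_fits(500_000, bandwidth_mbps=10, rtt_ms=100))  # (4, False)
print(slow_start_fits(150_000, bandwidth_mbps=1, rtt_ms=100))   # (1, False)
```

Under these assumptions, a 150 KB page completes within four round trips at 10 Mbps without the window exceeding the BDP, whereas at 1 Mbps the window outgrows the link after the first round trip, which is where congestion loss, and thus HOL blocking, starts to matter.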

For lower bandwidths, we saw more HOL blocking and larger performance differences between the strategies. The parallel strategies reduced HOL blocking and achieved a small SpeedIndex improvement in the median case, but they also caused performance degradations, which were dampened when not using round robin. That is, in low-bandwidth scenarios, for example, wireless links with poor coverage, a carefully tuned switch to a parallel strategy can improve performance. Since poor coverage also comes with a high chance of loss, we additionally added artificial, random loss on the link.

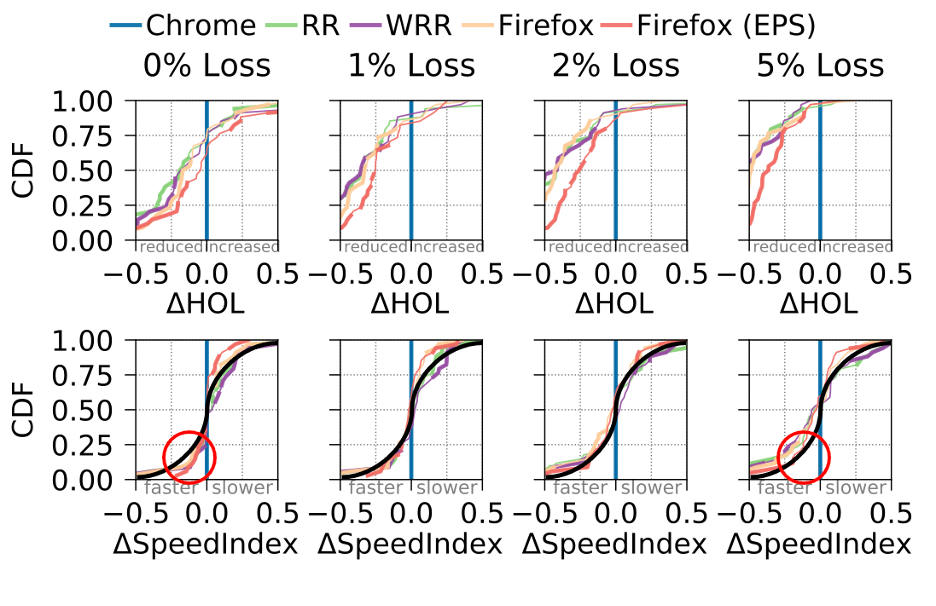

Figure 4 shows that parallel prioritization strategies strongly reduce HOL blocking at higher packet loss rates. The SpeedIndex differences are affected as well: for the red circles, for example, more websites benefit from parallelism as loss increases. However, these changes are more subtle than those in HOL blocking (which is why we added the black S-line for comparison).

We found that these improvements mainly occur for larger websites, which experienced more lost packets and thus benefitted more strongly from parallel scheduling during random loss, an effect that HTTP/3 newly enables.

Note: The picture changes when loss becomes more bursty, as Robin Marx had already anticipated in his blog post. Moving from random loss to bursts of five lost packets was already enough to push the performance improvements below the S-line.
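One possible intuition for this effect (our own illustration, not an analysis from the paper): when streams are interleaved packet by packet, a burst of consecutive losses hits every active stream at once, so QUIC has to stall all of them, just as TCP would, whereas an isolated loss stalls only one stream. The helper names below are hypothetical, and the model ignores timing and retransmission details.

```python
def interleaved_order(n_streams: int, packets_per_stream: int) -> list[int]:
    """Round-robin packet order: stream IDs 0, 1, ..., n-1, 0, 1, ..."""
    return [i % n_streams for i in range(n_streams * packets_per_stream)]


def sequential_order(n_streams: int, packets_per_stream: int) -> list[int]:
    """Sequential packet order: all packets of stream 0, then stream 1, ..."""
    return [i // packets_per_stream for i in range(n_streams * packets_per_stream)]


def streams_stalled(order: list[int], first_lost: int, burst_len: int) -> set[int]:
    """Streams with at least one packet in the lost burst; with QUIC, these
    are the streams whose later data must wait for a retransmission."""
    return set(order[first_lost:first_lost + burst_len])


rr = interleaved_order(5, 10)
seq = sequential_order(5, 10)
print(streams_stalled(rr, 12, 1))   # {2}: an isolated loss stalls one stream
print(streams_stalled(rr, 12, 5))   # {0, 1, 2, 3, 4}: a burst of 5 stalls all of them
print(streams_stalled(seq, 12, 5))  # {1}: sequential confines the burst to one stream
```

Under round robin, a burst removes the advantage of stream-awareness, which is consistent with, though not a proof of, the burst-loss observation in the note.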

QUIC HOL blocking can influence HTTP/3 performance

We see that in high (random) loss and low-bandwidth scenarios, where retransmissions occur often, parallel scheduling can more often leverage the performance benefits of QUIC’s reduced HOL blocking.

However, performance still depends on the website, for example, on the size of its resources. For typical low-loss, low-RTT, high-bandwidth scenarios, sequential scheduling is therefore still fine.

For all other scenarios, we can see that conceptually simple changes, such as moving streams from the application layer to the transport layer with HTTP/3/QUIC, can open a new parameter space for optimization. This space, however, needs to be explored carefully, as new interactions, for example with website size, determine where improvements can be found.

Read our TMA’22 paper for further details and results.

Constantin Sander is a PhD student at the Chair of Communication and Distributed Systems at RWTH Aachen University in Germany.

The views expressed by the authors of this blog are their own and do not necessarily reflect the views of APNIC. Please note a Code of Conduct applies to this blog.