A rather depressing, but sobering, piece of writing came up in my feed this week: Programming in the Apocalypse by Matthew Duggan.

The premise of this blog post is climate change, specifically human-made climate change, and it focuses on ‘what’s the impact in our sector’.

Some things are almost out of the 2020-2022 playbook, because of the pervasive supply-shock consequences of COVID-19, or even the single-event consequence of the Suez Canal blockage (the long-tail effects of intermodal container ship logistics, combined with the pandemic, have been a two-year story of ‘sitting on the dock of the bay’ for almost every product worldwide).

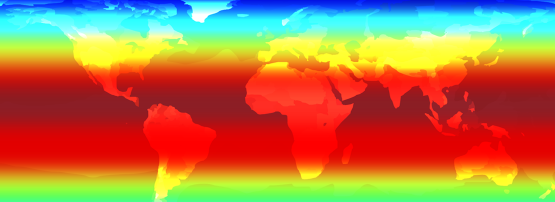

But others are forward-looking imaginings of the risk side of things, such as a less reliable electricity supply market in the face of rising temperatures. It may not be immediately apparent, but the efficiency of a steam-driven generator is directly related to the temperature difference between the high-pressure steam it produces and the final temperature on the other side of the turbines, where the exhaust heat is shed to the atmosphere. If global warming increases ambient temperatures, then irrespective of any other consequence, electricity generation will drop in efficiency if it depends on steam and air cooling.
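The temperature-difference argument can be made concrete with the Carnot limit, which is the theoretical ceiling for any heat engine; real steam plants run well below it, so this is an illustration of the direction of the effect rather than a prediction. Here $T_h$ is the absolute temperature of the steam and $T_c$ is the absolute temperature at which heat is rejected. The numbers are my own illustrative assumptions, not figures from Matthew's post.

$$
\eta_{\max} = 1 - \frac{T_c}{T_h}
$$

$$
T_h = 823\,\mathrm{K},\; T_c = 293\,\mathrm{K}: \quad \eta_{\max} = 1 - \frac{293}{823} \approx 0.644
$$

$$
T_h = 823\,\mathrm{K},\; T_c = 298\,\mathrm{K}: \quad \eta_{\max} = 1 - \frac{298}{823} \approx 0.638
$$

Under these assumed values, a 5 K rise on the cold side trims the ceiling by roughly 0.6 percentage points. That is small for one plant, but it applies to every steam-based generator at once, and it bites hardest on the hottest days when demand for cooling peaks.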

Aside from generation, electricity supply lines are built to thermal profiles that assume a certain amount of ‘sag’ caused by thermal expansion of the cables, and those profiles were not necessarily designed for a 2050 climate, or for the fires that are becoming more common. So, while it’s 30 years out, Matthew’s argument that a 2050 planet has less reliable electricity seems reasonable; pessimistic, but also realistic.

Every data centre worldwide depends on electricity, both to power the computers, routers and switches, and to cool them. There’s that word again: cooling. Data centre cooling efficiency will be hit just as hard as electricity generation, with a perverse effect: data centres will need more power to stay cool, under conditions of less stable supply.

Matthew considers the finance and risk side — how likely is it that we will be able to fund the technical infrastructure if construction finance capital is now being redirected to sea walls and the structural integrity of the city and roads to deal with mass migration?

The reaction of an industry built around ‘just-in-time’ supply models, as the supply shocks hit home (for memory, disks and CPUs, and things as trivial as surface-mount resistors), has already been seen. Many manufacturers are having to warehouse more parts, driving costs up overall as the limited stock of parts has global consequences. The supply of vehicles dependent on chips for engine management systems is competing with the supply of routers and switches for data centre expansion.

So again, a 2050 world with more risk probably has more costs. We’ve been used to the idea of getting more CPU, GPU, memory, and disks for the same (or even lower) prices, but this model may not be sustainable long term; the cost of building and running the Internet may have to rise.

Matthew thinks it’s possible we may have to adapt to situations and actually prefer offline and asynchronous modes of communication, to allow disparate (home? work? international?) teams to manage information flows against unreliable power and data. The predicted ‘death of email’ may turn out to be a premature signal, because this kind of store-and-forward communication is well suited to a less fully online world. We can periodically resynchronize against mail more easily than we can keep instant messages working. And the upside might even be ‘fewer online meetings’, which will please many.
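To show why store-and-forward copes with an unreliable link, here is a minimal sketch of the idea. It is not how any real mail system is implemented; the `Outbox` class and its `send` and `flush` methods are hypothetical names I have made up for illustration, and the `link_up` flag stands in for whatever connectivity check a real system would use.

```python
from collections import deque
from dataclasses import dataclass, field


@dataclass
class Outbox:
    """Hypothetical store-and-forward outbox (illustrative only)."""

    pending: deque = field(default_factory=deque)   # messages waiting for a link
    delivered: list = field(default_factory=list)   # stand-in for the remote side

    def send(self, message: str) -> None:
        # Store first: sending never fails just because the link is down.
        self.pending.append(message)

    def flush(self, link_up: bool) -> int:
        """Forward queued messages in order; return how many were delivered."""
        if not link_up:
            return 0  # nothing is lost, the queue simply waits
        count = 0
        while self.pending:
            self.delivered.append(self.pending.popleft())
            count += 1
        return count
```

Calling `send` twice and then `flush(link_up=False)` leaves both messages queued; a later `flush(link_up=True)` forwards them in their original order. An instant-messaging session, by contrast, generally assumes the link is there at the moment you type.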

Where I think the story breaks down is in his assumptions about the future of programming language choices. I don’t think these choices are defined by the same things as the structural dependency side of what a fully online world requires, and I don’t think we’ll see big shifts in coding languages. We may see big shifts in coding styles caused by reduced power budgets (perhaps fewer online queries or more use of cached or precalculated data), and we may well see a shift in the dependency on the network behind things (that is, a retreat from the cloud into more certain costs inside a company data centre operated with its own power and air conditioning).

However, Matthew’s article made me sit up and think about the long-tail consequences of decisions taken long ago, and decisions not taken long ago. We’re now on a path to change that will include effects on the shoreline that every Internet sub-oceanic cable passes through to reach our homes. Even if the homes we live in are not at risk from the rising tide (mine is; I already had a 10-day flood this year!), our data-driven economy certainly is.

A sobering piece of writing. I urge you to examine and think about it.

The views expressed by the authors of this blog are their own and do not necessarily reflect the views of APNIC. Please note a Code of Conduct applies to this blog.