Japan Registry Services Co., Ltd. (JPRS) is the Country Code Top-Level Domain (ccTLD) registry for .jp and also operates the Generic Top-Level Domain (gTLD) ‘.jprs’ as a research and design platform. JPRS has conducted research experiments with .jprs in collaboration with Japanese ISPs. More details on these activities are available here.

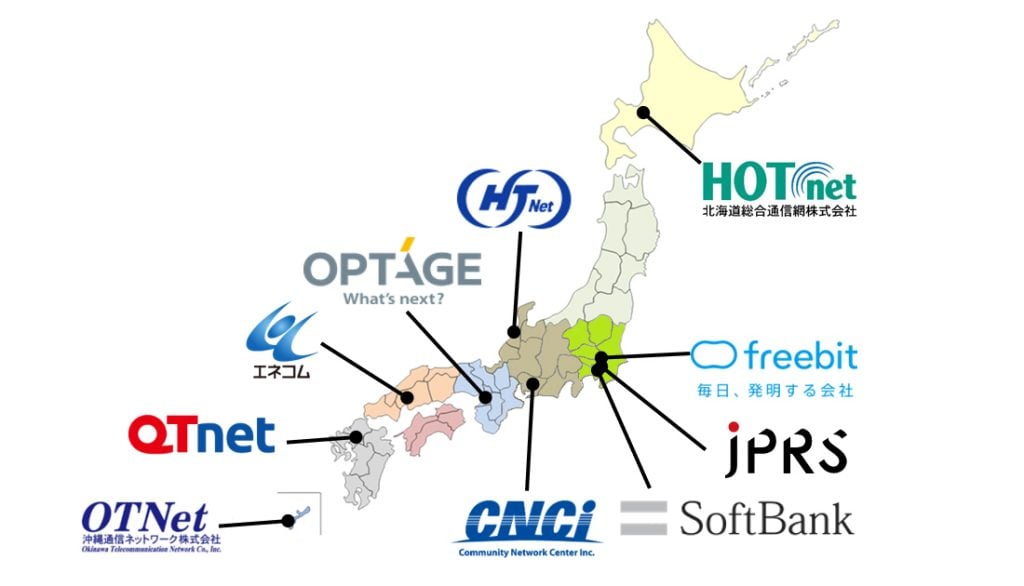

From 2019 to 2020, we tested the anti-DDoS features of full-service resolver implementations together with nine Japanese Internet Service Providers (ISPs). Each full-service resolver was configured with both fetch-limit and serve-stale enabled. This post explains the outcomes.

Anti-DDoS functionalities in full-service resolvers

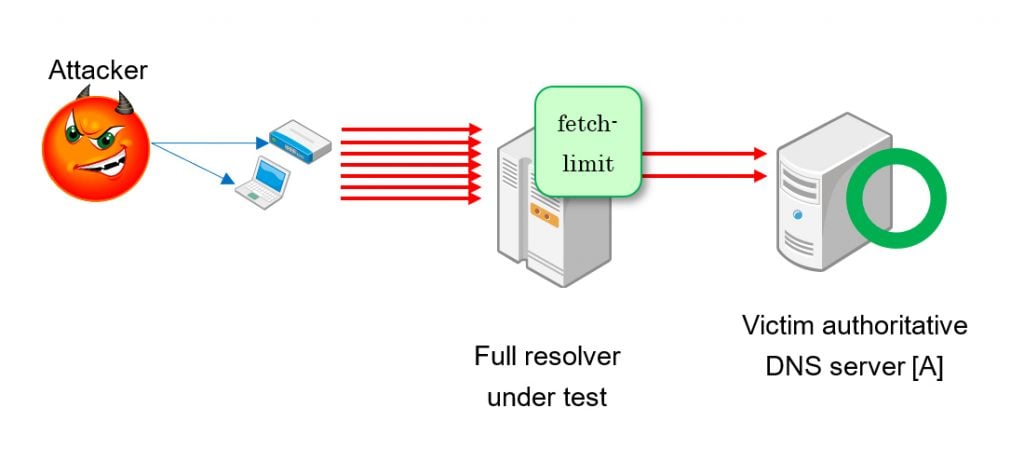

Anti-DDoS functionalities in full-service resolvers exist to absorb overwhelming floods of queries and to keep the resolver from contributing to massive traffic towards an authoritative server. If a botnet hides in your network and sends DNS queries for a target domain with random labels prepended, every query misses the cache and your full-service resolver passes all of them through to the victim. Fetch-limit throttles simultaneous iterative queries to an authoritative server. Serve-stale lets the resolver keep answering from expired cache entries while the authoritative server cannot be reached.
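To make the fetch-limit idea concrete, here is a minimal sketch of a per-server cap on outstanding upstream queries. It is a simplified model, not how any particular resolver implements it; the class name `FetchLimiter`, the parameter `max_in_flight`, and the address `192.0.2.53` are assumptions made for illustration.

```python
from collections import defaultdict


class FetchLimiter:
    """Hypothetical model: cap outstanding queries per authoritative server."""

    def __init__(self, max_in_flight: int) -> None:
        self.max_in_flight = max_in_flight
        # server address -> number of upstream queries still awaiting a reply
        self.in_flight = defaultdict(int)

    def try_acquire(self, server: str) -> bool:
        """Return True if one more upstream query may be sent to `server`."""
        if self.in_flight[server] >= self.max_in_flight:
            return False  # over the cap: do not forward this query
        self.in_flight[server] += 1
        return True

    def release(self, server: str) -> None:
        """Call when an upstream query is answered or times out."""
        if self.in_flight[server] > 0:
            self.in_flight[server] -= 1


def simulate_burst(limiter: FetchLimiter, server: str, burst_size: int) -> int:
    """Count how many queries of a burst get forwarded while none has completed."""
    return sum(1 for _ in range(burst_size) if limiter.try_acquire(server))


limiter = FetchLimiter(max_in_flight=3)
forwarded = simulate_burst(limiter, "192.0.2.53", 10)
print(forwarded)  # only 3 of the 10 queries reach the authoritative server
```

With an unresponsive server, the outstanding queries are only released on timeout, so the cap keeps the resolver from piling more traffic onto a server that is already struggling.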

We decided against aggressive use of NSEC and DNS cookies at the time. The .jprs zone is signed with NSEC3 opt-out, and aggressive use of NSEC does not work with opt-out zones because an opt-out record cannot prove non-existence. It only proves that there is no secure delegation (with a DS RRset) in the covered range; an insecure delegation (without a DS RRset) may still exist.
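The opt-out reasoning can be expressed as a small decision function. This is a deliberately simplified sketch: a real validator also has to check signatures and, for NSEC3, the closest-encloser proof. The type `CoveringProof` and the function name are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CoveringProof:
    """Hypothetical summary of a validated record that covers the queried name."""
    record_type: str  # "NSEC" or "NSEC3"
    opt_out: bool     # NSEC3 Opt-Out flag; always False for plain NSEC


def may_synthesize_nxdomain(proof: CoveringProof) -> bool:
    """Decide whether a cached covering record may be reused to answer NXDOMAIN."""
    if proof.record_type == "NSEC":
        return True
    if proof.record_type == "NSEC3":
        # With opt-out, an insecure delegation may exist inside the covered range,
        # so the record does not prove that the name is absent.
        return not proof.opt_out
    return False


print(may_synthesize_nxdomain(CoveringProof("NSEC3", opt_out=True)))   # False
print(may_synthesize_nxdomain(CoveringProof("NSEC3", opt_out=False)))  # True
```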

For DNS cookies, a revision of RFC 7873 was still in progress in the DNS Operations Working Group (DNSOP) at the time because of open issues such as mixing multiple implementations behind one anycast service and secret rollover.

Pilot scenario

We used dnsperf to send a large number of queries, simulating a DDoS attack. Source IP addresses did not matter because we were not testing DNS Response Rate Limiting (RRL). The pilot worked well. We also learned about the configuration knobs in each implementation and gathered operational findings, such as the log messages and statistics counters that the features produce.
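As an illustration of how such a query load could be prepared, the sketch below generates a dnsperf input file, which lists one query per line as a name followed by a query type. The random-label pattern, the zone `example.test`, and the function names are assumptions for this example; the post does not state which names the pilot actually queried.

```python
import random
import string


def random_label(rng: random.Random, length: int = 12) -> str:
    """Build one random DNS label from lowercase letters and digits."""
    alphabet = string.ascii_lowercase + string.digits
    return "".join(rng.choice(alphabet) for _ in range(length))


def build_query_lines(zone: str, count: int, seed: int = 0, qtype: str = "A") -> list[str]:
    """Return `count` lines of the form '<random-label>.<zone> <qtype>'."""
    rng = random.Random(seed)  # seeded so a test run can be reproduced
    return [f"{random_label(rng)}.{zone} {qtype}" for _ in range(count)]


lines = build_query_lines("example.test", 5)
print("\n".join(lines))
```

Written to a file, these lines can be handed to dnsperf through its `-d` option. Because every name is unique, each query misses the resolver cache and has to be resolved upstream.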

Evaluation scenario

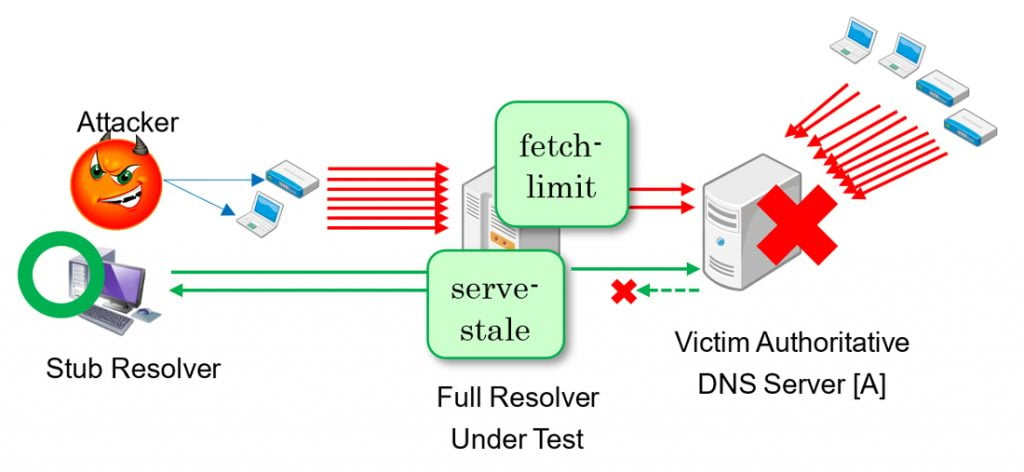

We created a scenario to evaluate the features. It assumes that both fetch-limit and serve-stale are enabled, and we expect both to take effect under that configuration.

Step 1: We created a situation in which a domain name, rd2020-theme2.jprs, is under DDoS attack. Yes, this is a real domain name; it was resolvable at the time of the experiment. We then set up victim authoritative servers [A] and [B] on the Internet.

Step 2: Server [A] stops responding due to the DDoS attack. While queries from our network can be absorbed thanks to fetch-limit, the botnet is spread across the Internet and sends a large number of queries. Server [A] is not large enough to handle the traffic and goes down. We simulated this by dropping inbound queries with a network filter on server [A]. Our full-service resolver cannot get an answer from server [A], so it has no fresh answer to serve to the legitimate stub resolver.

After a cache entry expires, the full-service resolver sends a query to server [A]. If the server is unable to respond, the full-service resolver uses the cache entry, even if the TTL is expired.
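The serve-stale behaviour described above can be sketched as a cache lookup with a fallback. This is a minimal model under stated assumptions: the class `StaleCache`, the `max_stale` window, and the `fetch` callback are hypothetical and do not mirror any resolver's internals.

```python
from dataclasses import dataclass
from typing import Callable, Dict, Optional, Tuple


@dataclass
class CacheEntry:
    answer: str
    expires_at: float  # absolute time at which the TTL runs out


class StaleCache:
    """Hypothetical resolver cache that may serve expired entries."""

    def __init__(self, max_stale: float) -> None:
        self.max_stale = max_stale  # how long past expiry an entry may still be served
        self.entries: Dict[str, CacheEntry] = {}

    def resolve(
        self,
        name: str,
        now: float,
        fetch: Callable[[str], Optional[Tuple[str, float]]],
    ) -> Tuple[Optional[str], str]:
        entry = self.entries.get(name)
        if entry is not None and now < entry.expires_at:
            return entry.answer, "fresh"
        result = fetch(name)  # (answer, ttl) on success, None if upstream is silent
        if result is not None:
            answer, ttl = result
            self.entries[name] = CacheEntry(answer, now + ttl)
            return answer, "fetched"
        if entry is not None and now < entry.expires_at + self.max_stale:
            return entry.answer, "stale"  # TTL expired, but better than no answer
        return None, "servfail"


def upstream_ok(name: str) -> Optional[Tuple[str, float]]:
    return ("192.0.2.1", 60.0)


def upstream_down(name: str) -> Optional[Tuple[str, float]]:
    return None  # models server [A] dropping all inbound queries


cache = StaleCache(max_stale=3600.0)
first = cache.resolve("www.example.test", 0.0, upstream_ok)
during_attack = cache.resolve("www.example.test", 90.0, upstream_down)
much_later = cache.resolve("www.example.test", 5000.0, upstream_down)
print(first, during_attack, much_later)
```

In this model the stub resolver keeps receiving the last known answer for up to `max_stale` seconds after expiry, and only gets a failure once that window has passed as well.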

Step 3: We simulate the victim switching its authoritative DNS service to another provider that is strong enough to absorb the DDoS traffic. To achieve this, we need to switch the NS RRset in the parent zone. However, it is hard to run such a test with all participants at the same time. We could not fix a schedule because they are real operators and some of them work in shifts.

![Figure 4 — The victim switches to another DNS provider [B] but the stale cache entry is still used.](https://blog.apnic.net/wp-content/uploads/2021/10/Figure-4-—-The-victim-switches-to-another-DNS-provider-B-but-the-stale-cache-entry-is-still-used.-1024x472.jpg)

Instead, we prepared an authoritative server with zone content that changes periodically. Participants took the experiment when it was convenient (and I didn’t have to wake up at midnight 😊).
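One way to picture zone content that changes periodically is to derive the published value from the current time slot, so that anyone can tell whether an answer belongs to the current slot or is left over from an earlier one. The sketch below is purely illustrative: the slot scheme, the 600-second period, and the function names are assumptions, not the mechanism used in the experiment.

```python
def slot_index(now: int, period: int) -> int:
    """Number of whole periods elapsed since time zero."""
    return now // period


def expected_value(now: int, period: int) -> str:
    """Value the authoritative server would publish during the slot containing `now`."""
    return f"slot-{slot_index(now, period)}"


def classify_answer(answer: str, now: int, period: int) -> str:
    """Label an answer as 'fresh' if it matches the current slot, else 'stale'."""
    return "fresh" if answer == expected_value(now, period) else "stale"


PERIOD = 600  # hypothetical: content changes every 10 minutes
cached = expected_value(now=1000, period=PERIOD)
same_slot = classify_answer(cached, now=1100, period=PERIOD)
next_slot = classify_answer(cached, now=1300, period=PERIOD)
print(cached, same_slot, next_slot)
```

A scheme like this lets each participant run the test whenever it suits them, since the expected answer depends only on the clock and not on a coordinated change.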

Results

Unbound worked as expected. Its ratelimit feature regulated iterative queries to the authoritative server. When server [A] was not responding, Unbound answered with a cache entry whose TTL had expired, thanks to the serve-expired functionality.

BIND 9 behaved differently than expected. With fetch-limit, BIND 9 restricted the number of simultaneous iterative queries to the authoritative server as expected. However, it responded with SERVFAIL after the authoritative server became unresponsive, which shows that serve-stale did not take effect, even though we had observed serve-stale working in an earlier experiment.

With help from our participants, we found that rndc dumpdb showed a stale cache entry for the domain name. Since a cache entry with an expired TTL existed, the server should have answered with it. Why didn’t it?

Feedback to the implementers

We found that an older version of BIND 9 didn’t work as expected if configured with both fetches-per-{server,zone} and stale-answer-enable. I contacted the developer, Internet Systems Consortium (ISC), to explain our findings. ISC generously responded very quickly and confirmed there is a problem with the serve-stale implementation. They also said it is a known issue that was tracked as Issue #1712 in ISC’s GitLab and fixed in version 9.16.13 (ChangeLog 5573).
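For operators who want to check their own deployment against the fixed release, a small helper like the following could be used. It is a sketch that only reasons about the 9.16 branch named above; the function name is hypothetical, and for any other branch it returns `None` because this post does not say where else the fix landed.

```python
import re
from typing import Optional

FIXED_BRANCH = (9, 16)  # branch named in the post
FIXED_PATCH = 13        # first 9.16 release that contains the fix


def has_serve_stale_fix(version_text: str) -> Optional[bool]:
    """Return True/False for 9.16.x versions, None for any other branch."""
    match = re.search(r"(\d+)\.(\d+)\.(\d+)", version_text)
    if match is None:
        raise ValueError(f"no version number found in {version_text!r}")
    major, minor, patch = (int(part) for part in match.groups())
    if (major, minor) != FIXED_BRANCH:
        return None  # unknown here: consult the release notes for that branch
    return patch >= FIXED_PATCH


for text in ("9.16.12", "9.16.13", "BIND 9.16.27-Debian", "9.11.36"):
    print(text, has_serve_stale_fix(text))
```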

What happened next?

We ran an experiment on fetch-limit and serve-stale. We found that an older version of one implementation didn’t work as we expected when both were enabled. We reported it to the developer, and it has been fixed in the latest version.

I would like to express thanks to ISC and NLnet Labs for handling feedback from us. Also, I would like to express thanks to participating ISPs: CNCI, ENECOM, Freebit, HOTnet, HTNet, OPTAGE, OTNet, Qtnet and Softbank.

Yoshitaka Aharen is a System Engineer at Japan Registry Services Co., Ltd. (JPRS), which carries out registration and management of the .jp ccTLD.

The views expressed by the authors of this blog are their own and do not necessarily reflect the views of APNIC. Please note a Code of Conduct applies to this blog.