This article was co-authored by Ratul Mahajan.

The question: “Is my system going to operate correctly?” is an age-old problem in computer science. Perhaps the most common way to ascertain whether a system is operating correctly today is through testing and monitoring. For example, for networks, we frequently use tools such as traceroute and ping to check whether two endpoints have connectivity. Unfortunately, such testing and monitoring is both incomplete and reactive — one can never fully test all inputs/packets and can often only find problems after they have already affected the live network and, by extension, its users.

Can we do better than testing?

Reliability through network verification

Verification, the field of study on how to formally prove the absence of bugs in a computer system, has a long history of being applied to guarantee the correctness of both hardware and safety-critical software. Recently network verification has emerged in the form of powerful tools that can proactively check the correctness of a network before deployment. Compared to testing, network verification offers several benefits:

- Exhaustiveness: Unlike common tools such as ping and traceroute, which provide visibility into the experience of individual inputs (packets), verification tools can check the behaviour for all (possibly billions of) inputs.

- Proactiveness: Because network verifiers build a model of the expected behaviour of the network, they can check for errors or issues before impacting live traffic in the network.

Today, many large cloud providers are using network verification to ensure high availability and security. A few well-known examples include:

- Microsoft uses network verification to check that data centre packet forwarding is correct.

- Amazon can verify reachability in virtual private clouds (VPCs) hosted on AWS.

- Alibaba uses network verification to check the correctness of changes to their internal access control and wide-area network policies.

However, outside of these specific settings, the vast majority of networks are still being built and operated without automated reasoning and with all of the availability and security risks that go with such an operation.

Can we make network verification tools as prevalent as ping and traceroute?

The challenge of extending verification tools

One reason why network verification tools have seen use limited to such specific settings is due to the high engineering cost of building such tools. Large providers can justify this cost as the alternative — a large network outage — is extraordinarily expensive at cloud scale. Unfortunately, it is also challenging to reuse existing verification tools in new networks as well since, if key functionality is even slightly different from the ones targeted by an existing tool, this can require non-trivial expertise to extend or adapt the existing tool.

Network verification tools are challenging to build in the first place since they must maintain a model of how all the devices and protocols in a network will operate in order to have predictive power. This includes functionality such as middleboxes, access control, tunnelling, routing, and more.

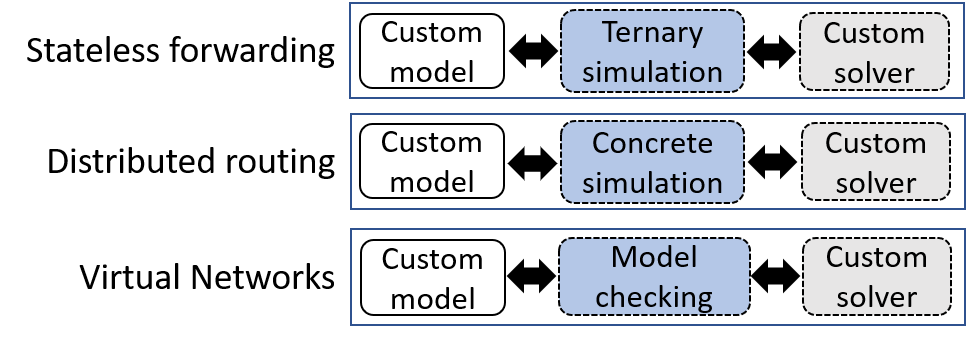

In addition, network verifiers are engineered as monoliths today. Depicted below, they have a custom model of the target network functionality (for example, IPv4 packet forwarding) and a ‘what-if’ analysis engine that is intimately tied to this model.

This coupling makes it difficult to extend network verification to more functionality that we may know how to verify analytically but the tool does not support (for example, packet forwarding with a new type of middlebox or virtual networks with underlay and overlay forwarding). Such extensions require one to develop or modify both the model of network functionality and the analysis engine. This is a tough task because of the amount of work and the broad expertise needed in networking as well as formal methods. This difficulty makes verification hard to employ in networks where key functionality is different from that targeted by an existing tool.

We have witnessed this difficulty first hand with tools that we have helped develop. At Microsoft, our engineers have developed tools to verify the safety of changes to access control lists, network security groups, and forwarding table rules. Each time we had to develop a new tool from scratch despite the similarity of these functionalities because extending the prior tool was no easier than building a new one. Similarly, extending Batfish over the years to support more network functionality or additional router vendors with slightly different semantics has required significant changes to the analysis pipeline.

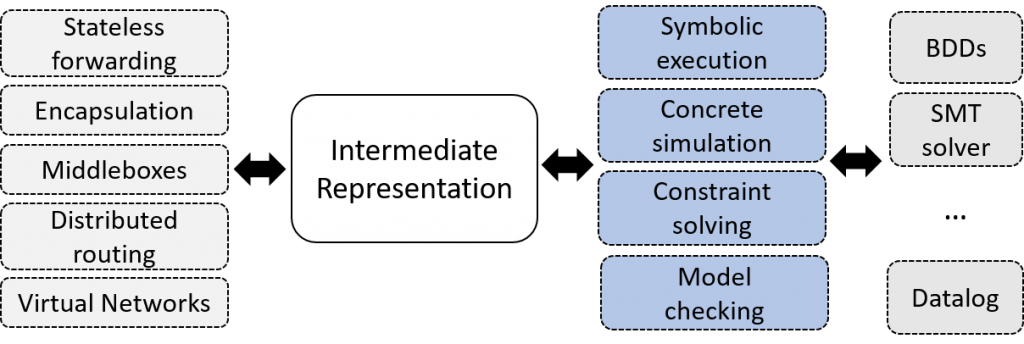

Zen: A linguistic approach

The monolithic approach was helpful for the first generation of network verification tools because the tight integration between network functionality and analysis enabled faster iteration. However, this tight coupling is no longer necessary and we can decouple network modelling from analysis. Depicted below, network functionality can be modelled in a common language and analysis engines can target the language, instead of specific functionality.

This way, extending verification to new functionality only requires modelling that functionality. Once that is done, one or more analysis engines become available to analyse that functionality.

This approach is inspired by the software analysis domain. There is a vibrant ecosystem around intermediate representations such as LLVM that can encode the semantics of programs in multiple source languages. A variety of analysis and optimization tools are then available for this intermediate representation, which benefits all source languages.

We have been building such a general purpose intermediate representation in the form of a library called Zen. Zen makes it possible for tool authors to quickly build and combine models of network functionality together. Our preliminary experiences with Zen have been promising; we have been able to reimplement various network verification tools and analyses by writing orders of magnitude less code and without sacrificing the performance of such tools (you can find more details in our paper).

An example model for network ACLs

To demonstrate this idea, we show a simple example of a model built in Zen to reason about access control lists (ACLs) such as those configured on routers. The small code snippet below shows the whole model in Zen using C#. An ACL consists of a sequence of prioritized lines that either permit or deny some packets. We describe how packets are processed by an ACL by writing a simple function that takes C# objects wrapped within a Zen type.

public class Acl

{

public AclLine[] Lines { get; set; }

public Zen<bool> Process(Zen<IpHeader> hdr, int i)

{

if (i == this.Lines.Length)

return false;

var line = this.Lines[i];

return If(line.Matches(hdr), line.Permitted, Process(hdr, i + 1));

}

}In the example, we process the ACL by going through each line in order and checking if the given packet header matches the line. If so, then we can determine if the packet is permitted based on the rule, and otherwise we must continue to the next line. An individual ACL line is implemented in a similar way:

public class AclLine

{

public bool Permitted { get; set; }

public ushort DstPortLow { get; set; }

public ushort DstPortHigh { get; set; }

public ushort SrcPortLow { get; set; }

public ushort SrcPortHigh { get; set; }

...

public Zen<bool> Matches(Zen<IpHeader> hdr)

{

return And(

hdr.GetDstPort() >= this.DstPortLow,

hdr.GetDstPort() <= this.DstPortHigh,

hdr.GetSrcPort() >= this.SrcPortLow,

hdr.GetSrcPort() <= this.SrcPortHigh,

...);

}

}While this may seem like writing normal code, going through the Zen library provides new functionality that is useful for verification:

- Simulation: As with normal code, we can ask the library to execute a test packet against the ACL to determine if the packet would be dropped or allowed. Since this is just a model, we can ensure the ACL is correctly handling packets of interest before deploying the ACL.

- Search: We can also ask Zen to exhaustively search over the model. For example, we can tell it to find a packet that is either permitted or dropped on a certain line. We can similarly ask other questions such as whether there is any packet that is dropped by one ACL but not another.

- Test generation: Zen can generate test packets for the ACL that will exercise every unique ‘path’ through the model. For instance, this would generate one test packet per ACL rule and can be used to identify software implementation and hardware implementation bugs where the ACL behaviour deviates from what is expected.

Network verification has emerged as a powerful technique to guarantee the safety of changes to networks. However, due to the high engineering cost associated with building and maintaining network verification tools, its use remains largely limited to large cloud providers such as Microsoft, Amazon, and Alibaba.

We are proposing an alternative approach to making the tools more accessible to engineers by leveraging an intermediate language in the same way LLVM is used for compilers. Hopefully solving this challenge will improve the security and reliability of our critical networking infrastructure.

Ryan Beckett is a networking and programming languages researcher at Microsoft who received his PhD in computer science from Princeton University in 2018. His research interests lie primarily at the intersection of networks and formal methods, and his work focuses on improving network reliability.

The views expressed by the authors of this blog are their own and do not necessarily reflect the views of APNIC. Please note a Code of Conduct applies to this blog.