QUIC is a new Internet transport protocol and the foundation of HTTP/3. It promises to improve on the historically evolved TCP+TLS+HTTP web stack: by combining these functions on top of UDP, QUIC can, by design, overcome issues that are irresolvable within TCP, such as head-of-line blocking.
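To make head-of-line blocking concrete, here is a toy model that is not taken from the study. Packets for three hypothetical resources (`css`, `js`, `img`) share one connection, and one packet is lost and arrives late after retransmission. TCP hands data to the application strictly in order across the whole connection, while QUIC enforces ordering only within each stream. The timing model (one packet per time unit, a fixed retransmission delay) is an assumption chosen for simplicity.

```python
def delivery_times(packets, lost_index, retransmit_delay, per_stream_ordering):
    """Time at which each stream's data has been fully delivered to the application.

    packets: stream ids in sending order; packet i arrives at time i.
    lost_index: position of the one lost packet, which arrives retransmit_delay late.
    per_stream_ordering=False models TCP (one ordered byte stream for everything);
    per_stream_ordering=True models QUIC (ordering enforced only within a stream).
    """
    arrival = [float(i) for i in range(len(packets))]
    arrival[lost_index] += retransmit_delay

    ready = {}  # latest in-order delivery time per ordering domain
    done = {}   # delivery time of each stream's last packet
    for i, stream in enumerate(packets):
        domain = stream if per_stream_ordering else "connection"
        # A packet can only be delivered after everything ahead of it in its domain.
        ready[domain] = max(ready.get(domain, 0.0), arrival[i])
        done[stream] = ready[domain]
    return done


packets = ["css", "js", "img", "css", "js", "img"]
tcp = delivery_times(packets, lost_index=0, retransmit_delay=10, per_stream_ordering=False)
quic = delivery_times(packets, lost_index=0, retransmit_delay=10, per_stream_ordering=True)
print("TCP: ", tcp)   # every stream waits for the lost css packet
print("QUIC:", quic)  # only the css stream waits
```

In the TCP case all three resources finish at time 10, whereas with per-stream ordering only `css` is delayed.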

Moreover, features that have been hard to deploy Internet-wide because of middleboxes, such as TCP Fast Open, become available right out of the box. QUIC prevents such ossification mainly by fully encrypting its protocol headers, which leaves middleboxes no means to tamper with the transport layer.

One of QUIC’s main selling points is that it promises to drastically increase web performance. But do the features added in QUIC really improve speed that much? And conversely, does the TCP stack really leave that much performance on the wire?

Since large content providers are known to operate highly optimized TCP network stacks, we think that past measurements neglected this fact. We therefore compare QUIC, configured for the web, against both commodity and web-optimized TCP stacks.


So, we at RWTH Aachen University in Germany set out to compare the web performance of TCP+TLS 1.3+HTTP/2 against Google QUIC on an equal footing. We replayed 38 websites with a modified version of the Mahimahi framework (Mahimahi replicates the multi-server nature of today’s websites in a reproducible testbed) to a Chrome browser under different network conditions (Table 1).

Our TCP web stack required the usual two round-trip times (RTTs) for connection establishment (TCP and TLS handshakes), whereas QUIC always required one RTT in our testbed. We think this best reflects current and future deployments, since TCP Fast Open is still hindered by middleboxes in some networks and TLS early data was not implemented at the time of measurement.

| Network | Uplink (Mbps) | Downlink (Mbps) | Delay (ms) | Loss (%) |
|---|---|---|---|---|
| DSL | 5 | 25 | 24 | 0 |
| LTE | 2.8 | 10.5 | 74 | 0 |
| MSS | 1.89 | 1.89 | 760 | 6 |
| DA2GC | 0.468 | 0.468 | 262 | 3.3 |
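The handshake difference translates into a per-connection cost using the delays in Table 1. This sketch reads the Delay column as the round-trip time (an assumption, though one consistent with the ms-to-RTT conversion in Table 2) and counts 2 setup RTTs for TCP+TLS 1.3 and 1 for QUIC, as configured in the testbed. `RTT_MS`, `SETUP_RTTS` and `setup_cost_ms` are illustrative names.

```python
# Delay column of Table 1, interpreted here as the round-trip time (assumption).
RTT_MS = {"DSL": 24, "LTE": 74, "MSS": 760, "DA2GC": 262}

# Round trips before the first HTTP request can be sent, as configured in the testbed
# (no TCP Fast Open, no TLS 1.3 early data, QUIC with a 1-RTT handshake).
SETUP_RTTS = {"TCP+TLS1.3": 2, "QUIC": 1}


def setup_cost_ms(stack, network):
    """Time spent establishing one connection before any request goes out."""
    return SETUP_RTTS[stack] * RTT_MS[network]


for network in RTT_MS:
    tcp = setup_cost_ms("TCP+TLS1.3", network)
    quic = setup_cost_ms("QUIC", network)
    print(f"{network:6s} TCP+TLS1.3 {tcp:5d} ms  QUIC {quic:5d} ms  saved {tcp - quic:4d} ms")
```

The saving is one RTT per new connection. On multi-server pages it can add up, depending on how many connection setups lie on the critical path of the page load.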

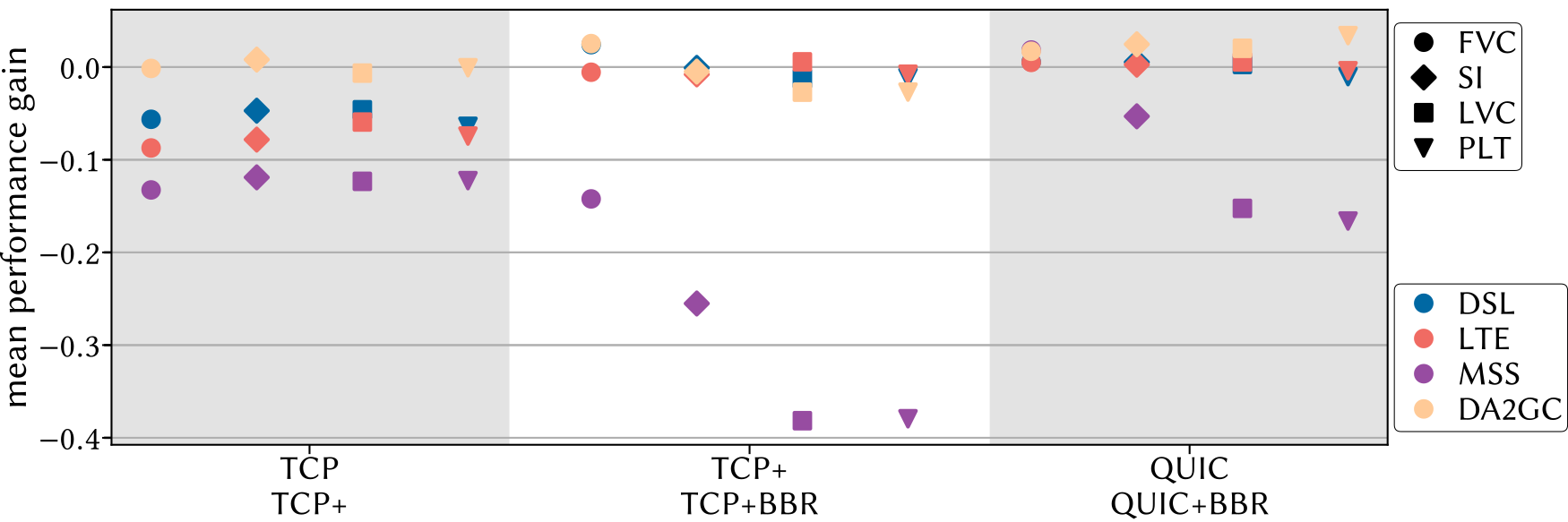

As a first step, we tested whether tuning the TCP network stack alone already improves performance: we increased the Initial Window (IW) from 10 to 32 segments, enabled packet pacing, increased kernel buffers, and disabled slow start after idle. Figure 1 shows, as an example, the performance gain of our tuned TCP (TCP+) over stock TCP, calculated as TCP+/TCP - 1. For a user-centred evaluation, we included visual metrics in addition to the widely used page load time (PLT), since PLT is known to be a poor predictor of user perception.

Figure 1 — Average performance gain over all 38 websites for First Visual Change (FVC), Speed Index (SI), Last Visual Change (LVC) and Page Load Time (PLT). Negative performance gains indicate that the protocol written at the bottom is faster.
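The gain formula can be written out as follows. All four metrics are "lower is better", so a negative value means the protocol in the numerator (the one written at the bottom of the figure) is faster. The PLT values are hypothetical, and averaging the per-site gains is our assumption about how the average over the 38 websites is formed.

```python
from statistics import mean


def performance_gain(candidate, baseline):
    """Relative gain as defined for Figure 1: candidate / baseline - 1.

    Works for FVC, SI, LVC and PLT alike; a negative result means the
    candidate (e.g. TCP+) finished faster than the baseline (e.g. TCP).
    """
    return candidate / baseline - 1


# Hypothetical PLT measurements (ms) for three websites: (TCP+, TCP).
plt = [(1500, 2000), (900, 1000), (2200, 2000)]
gains = [performance_gain(tuned, stock) for tuned, stock in plt]
avg = mean(gains)
print(gains, avg)
```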

Clearly, tuning is beneficial (see TCP vs. TCP+). Thus, for web stack performance comparisons, the precise configuration cannot be left out of scope.
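The post does not say how the four TCP+ tweaks were applied. On a Linux server, one plausible way is sketched below. The function only builds the command strings (running them requires root); the interface name, the gateway address and the 16 MiB buffer limit are hypothetical placeholders, not values from the study.

```python
def tcp_plus_commands(dev, gateway, initcwnd=32, max_buf_bytes=16 * 1024 * 1024):
    """Return Linux shell commands approximating the TCP+ tuning (not executed here)."""
    return [
        # 1. Initial window: raise the route's initial congestion window (Linux default: 10).
        f"ip route change default via {gateway} dev {dev} initcwnd {initcwnd}",
        # 2. Packet pacing: the fq queueing discipline paces outgoing TCP flows.
        f"tc qdisc replace dev {dev} root fq",
        # 3. Larger kernel socket buffers (global maximums and TCP autotuning limits).
        f"sysctl -w net.core.rmem_max={max_buf_bytes}",
        f"sysctl -w net.core.wmem_max={max_buf_bytes}",
        f"sysctl -w net.ipv4.tcp_rmem='4096 131072 {max_buf_bytes}'",
        f"sysctl -w net.ipv4.tcp_wmem='4096 16384 {max_buf_bytes}'",
        # 4. Do not fall back to slow start after the connection has been idle.
        "sysctl -w net.ipv4.tcp_slow_start_after_idle=0",
    ]


for cmd in tcp_plus_commands(dev="eth0", gateway="192.0.2.1"):
    print(cmd)
```

The `initcwnd` route attribute affects the sending side, so in a web setting it matters on the servers that deliver the content.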

Still, for the low-bandwidth DA2GC network, our tuning does not seem to be beneficial. This might be because the larger IW leads to early losses. We are aware that there are plenty of further techniques for tuning TCP.
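A quick bandwidth-delay product (BDP) check makes the early-loss explanation plausible. The sketch assumes 1460-byte segments, treats the downlink as the bottleneck, and reads the Table 1 delay as the RTT; the testbed's buffer sizes are unknown here, so this is a plausibility check only.

```python
MSS_BYTES = 1460  # assumed TCP segment size

# Downlink rate (Mbps) and delay (ms) from Table 1; the delay is read as the RTT.
NETWORKS = {
    "DSL": (25, 24),
    "LTE": (10.5, 74),
    "MSS": (1.89, 760),
    "DA2GC": (0.468, 262),
}


def bdp_bytes(rate_mbps, rtt_ms):
    """Bandwidth-delay product: how many bytes the path can hold in flight per RTT."""
    return rate_mbps * 1e6 * (rtt_ms / 1000) / 8


def iw_bytes(segments):
    """Bytes the sender may transmit in its first round trip."""
    return segments * MSS_BYTES


for name, (rate, rtt) in NETWORKS.items():
    bdp = bdp_bytes(rate, rtt)
    print(f"{name:6s} BDP ~ {bdp / MSS_BYTES:5.1f} segments, "
          f"IW10 fits: {iw_bytes(10) <= bdp}, IW32 fits: {iw_bytes(32) <= bdp}")
```

Only DA2GC has a BDP below 32 segments (about 10.5 segments), so the default IW of 10 roughly fills its pipe while an IW of 32 sends about three times that. The excess has to queue at the bottleneck and is dropped if the buffer is small.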

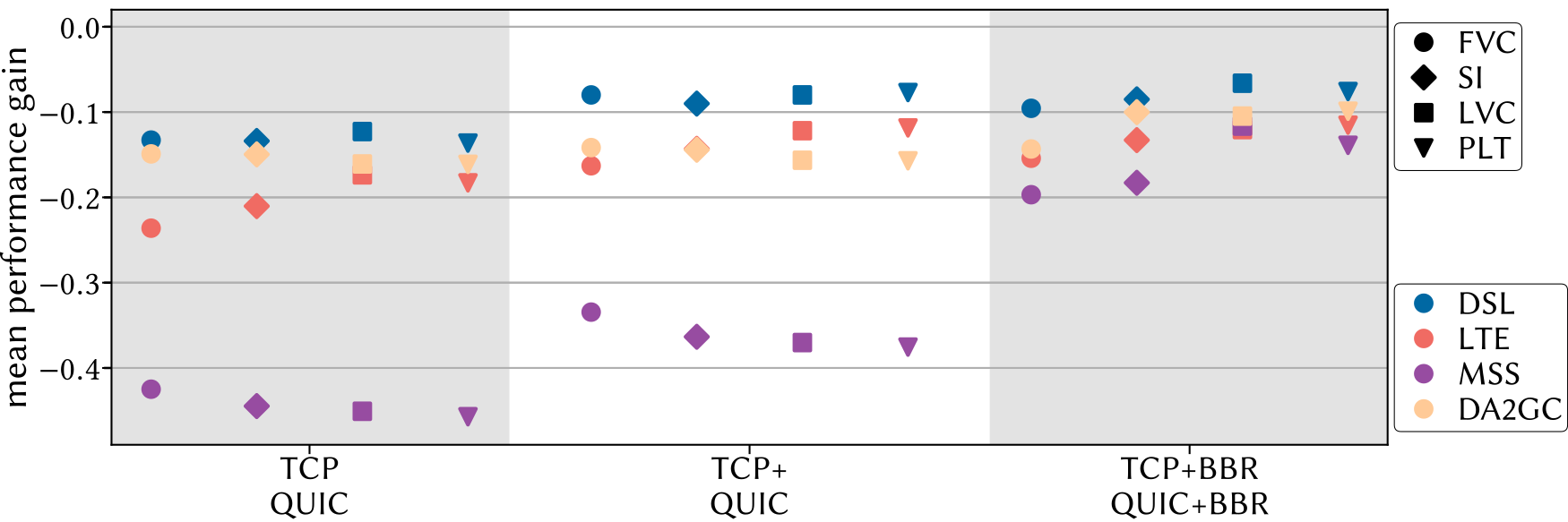

Since we expected congestion control to play a major role in overall performance as well, we also tested BBR (a recent congestion control algorithm contributed by Google) instead of CUBIC. We observed that, for both TCP and QUIC, results differed only in the highly lossy MSS network, where BBR seems better suited because, unlike CUBIC, it does not treat random loss as a signal of congestion.
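To see why a loss-based algorithm struggles on MSS, the classic Mathis et al. approximation for Reno-style TCP gives a rough throughput ceiling under random loss. It does not model CUBIC or BBR exactly and only illustrates the order of magnitude; the 1460-byte segment size and reading the delay as the RTT are assumptions.

```python
import math


def mathis_throughput_bps(mss_bytes, rtt_s, loss_rate):
    """Mathis et al. approximation for loss-based (Reno-style) TCP throughput:
    rate ~ (MSS / RTT) * sqrt(3/2) / sqrt(p)."""
    return (mss_bytes * 8 / rtt_s) * math.sqrt(1.5 / loss_rate)


# MSS network from Table 1: 1.89 Mbps, 760 ms (read as RTT), 6% loss; 1460-byte segments assumed.
rate = mathis_throughput_bps(1460, 0.760, 0.06)
print(f"{rate / 1e3:.0f} kbit/s, {rate / 1.89e6:.1%} of the link rate")
```

The result is roughly 77 kbit/s, about 4% of the 1.89 Mbps link. A model-based algorithm such as BBR, which estimates bottleneck bandwidth and RTT instead of backing off on every loss, is not bound by this ceiling.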

Figure 2 — Average performance gain over all 38 websites for First Visual Change (FVC), Speed Index (SI), Last Visual Change (LVC) and Page Load Time (PLT). Negative performance gains indicate that the protocol written at the very bottom is faster.

How does QUIC compare? Against stock TCP, QUIC’s performance advantage was large, but tuning TCP reduced this gap. For the MSS network, QUIC with CUBIC still achieved a large speed-up compared with TCP+.


Under current conditions, end users still benefit from QUIC. But it is not clear whether this gap stems solely from the one-RTT difference in connection establishment between the two protocols in our measurements. We therefore selected two websites that load all resources from a single server. For these, we can subtract one RTT from TCP+’s results, because only one connection needs to be established. This levels the connection-establishment cost of both protocols.

| Network | Website | Difference (ms) | Difference (RTTs) |
|---|---|---|---|
| DSL | gnu.org | 1.6 | 0.066 |
| DSL | wikipedia.org | -3.1 | -0.128 |
| LTE | gnu.org | -30 | -0.412 |
| LTE | wikipedia.org | -13 | -0.175 |
| DA2GC | gnu.org | 39 | 0.15 |
| DA2GC | wikipedia.org | -1005 | -3.834 |
| MSS | gnu.org | -1100 | -1.447 |
| MSS | wikipedia.org | -529 | -0.696 |
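The RTT column of Table 2 appears to be the millisecond difference divided by the delay of the respective network in Table 1. The sketch below recomputes it from the reported values and counts how many differences stay below one RTT. Reading the delay as the RTT is our assumption; small mismatches (e.g. LTE/gnu.org) presumably come from rounding of the millisecond values.

```python
# Delay column of Table 1, read as the RTT of each network (assumption).
RTT_MS = {"DSL": 24, "LTE": 74, "MSS": 760, "DA2GC": 262}

# Table 2 as reported: (network, website, difference in ms, difference in RTTs).
TABLE2 = [
    ("DSL", "gnu.org", 1.6, 0.066),
    ("DSL", "wikipedia.org", -3.1, -0.128),
    ("LTE", "gnu.org", -30, -0.412),
    ("LTE", "wikipedia.org", -13, -0.175),
    ("DA2GC", "gnu.org", 39, 0.15),
    ("DA2GC", "wikipedia.org", -1005, -3.834),
    ("MSS", "gnu.org", -1100, -1.447),
    ("MSS", "wikipedia.org", -529, -0.696),
]


def to_rtts(diff_ms, network):
    """Express a time difference in multiples of the network's RTT."""
    return diff_ms / RTT_MS[network]


for network, site, diff_ms, reported in TABLE2:
    print(f"{network:6s} {site:14s} computed {to_rtts(diff_ms, network):+.3f} RTT, "
          f"reported {reported:+.3f}")

below_one_rtt = sum(abs(to_rtts(d, n)) < 1 for n, _, d, _ in TABLE2)
print(f"{below_one_rtt} of {len(TABLE2)} differences are below one RTT")
```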

When doing so (see Table 2), the difference between QUIC and TCP+ usually falls below one RTT. There are also cases where TCP+ is now slightly faster. We attribute QUIC’s remaining advantage to its ability to circumvent head-of-line blocking and to its larger acknowledgement ranges compared with TCP’s Selective Acknowledgement (SACK), especially in slow or lossy networks.
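The SACK limitation follows from simple header arithmetic. Following the option layout in RFC 2018, the sketch computes how many loss gaps a TCP receiver can report in a single ACK; QUIC’s ACK frames are not squeezed into the 40-byte TCP option space and can carry many more ranges. The constant names are our own.

```python
TCP_OPTION_SPACE = 40   # bytes: maximum TCP header (60) minus fixed header (20)
SACK_OPTION_HEADER = 2  # kind and length bytes
SACK_BLOCK_SIZE = 8     # left and right edge, 32 bits each


def max_sack_blocks(other_option_bytes=0):
    """Number of SACK blocks that fit next to other options in one TCP header."""
    return (TCP_OPTION_SPACE - other_option_bytes - SACK_OPTION_HEADER) // SACK_BLOCK_SIZE


print(max_sack_blocks())    # SACK alone -> 4
print(max_sack_blocks(12))  # with the 10-byte timestamp option plus 2 bytes of padding -> 3
```

With timestamps enabled, as is common, a TCP receiver can therefore describe at most three missing ranges per ACK, which limits how quickly the sender learns about multiple losses.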

Regarding human perception, QUIC’s benefits are minimal, especially in fast networks; the rendering order of the individual website elements matters more than the choice of protocol.

Video 1 shows the loading processes of TCP+ and QUIC side by side. Depending on which elements one focuses on, it is not obvious which version loads faster. For example, with QUIC the final font appears late, whereas with TCP+ the banner takes longer to load completely.

Video 1 — Loading process of TCP+ and QUIC side-by-side for the etsy.com website in the DA2GC network.

In the past, QUIC’s web performance might have been exaggerated. We showed that a TCP web stack with simple adjustments can keep pace with QUIC. Thus, adopting QUIC need not be a top priority for speeding up websites; applying well-established methods for increasing web performance might be more profitable.

Nonetheless, QUIC paves the way for a fully encrypted transport protocol, stays evolvable by circumventing ossification, and is the most appropriate option for future protocol development. And, especially in bad networks, QUIC’s features seem to provide a significant advantage over TCP.

This work is based on our paper and was presented at the ANRW’19 (Applied Network Research Workshop).

Konrad Wolsing is studying for a Master of Computer Science degree at RWTH Aachen University in Germany.

The views expressed by the authors of this blog are their own and do not necessarily reflect the views of APNIC. Please note a Code of Conduct applies to this blog.