Many of us have held a vision of the Internet as the ultimate distributed platform that allows communication, the provision of services, and competition from any corner of the world. But as the Internet has matured, it seems to have fed the creation of large, centralized entities that provide most Internet services.

The most visible aspects of this involve well-recognized Internet services, but it is important to recognize that the Internet is a complex ecosystem. There are many underlying services whose diversity, or lack thereof, is as important as that of, say, consumer-visible social networks.

Consolidation and centralization are topics that can be looked at from many different angles, but as technologists, we like to think about why these trends exist, and particularly how available technology and architecture drive different market directions.

Consolidation is of course driven by economic factors relating to scale, the ability to reach a large market over the Internet, and first-mover advantages. But the network effect has an even more pronounced impact: each additional user adds to the value of the network for all of its users. In some applications, such as the open web, this value grows for everyone, as the web is a globally connected, interoperable service that anyone with a browser can use.

Another example of such a connected service is email: anyone with an account at any email server can use it globally. However, we have again seen much consolidation in this field, partly because of innovative, high-quality services but also because running an email service is becoming difficult for small entities, among other things due to spam prevention practices that tend to recognize only the largest entities well.

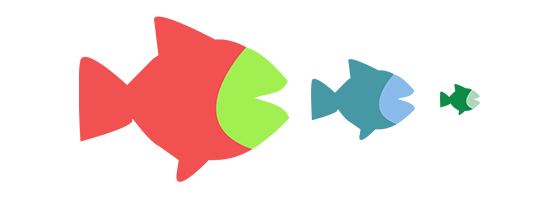

In some other applications, such as social media, the services have a more closed nature. The value of being a customer of one social media service depends highly on how many other customers that particular service has. Hence, the larger the service, the more valuable it is. And the bigger the value difference to the customers, the less practical choice they have in selecting a service.

Due to this, and the efficiency of the Internet as a marketplace, winners tend to take large market shares. This also allows asymmetric relationships to form, with the customers having less ability to affect the service than they would perhaps wish.
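The difference between open and closed services can be sketched with a toy calculation. The snippet below is only an illustration under a strong simplifying assumption that is not from the article: a user's value is taken to be the number of other users they can reach. The function name and the example sizes are hypothetical.

```python
def reachable_contacts(service_sizes, interoperable):
    """Return, for each service, how many other users one of its users can reach.

    Assumption (toy model): value to a user equals the number of reachable users.
    """
    total = sum(service_sizes)
    if interoperable:
        # Open, email-like case: every user can reach every other user,
        # regardless of which provider they picked.
        return [total - 1 for _ in service_sizes]
    # Closed case: a user can only reach others on the same service.
    return [n - 1 for n in service_sizes]


sizes = [900, 100]  # hypothetical user counts of two competing services
closed = reachable_contacts(sizes, interoperable=False)
federated = reachable_contacts(sizes, interoperable=True)
print("closed:   ", closed)
print("federated:", federated)
```

In the closed case the gap between the two services is what pushes new users towards the larger one; in the interoperable case the choice of provider does not change whom a user can reach.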

The winner’s advantage is only getting larger with the advent of AI and machine-learning-based technologies. The more users a service has, the more data is available for training machine learning models, and the better the service becomes, again bringing more users. This feedback loop and the general capital-intensive nature of the technology (data and processing at scale) make it likely that the largest companies are ahead in the use of these technologies.

The good and bad of permissionless innovation

The email vs. social media example also highlights the interesting roles of interoperability and the ‘permissionless innovation’ principle — the idea that a network can be simple but still powerful enough that essentially any application could be built on top of it without needing any special support from anyone else.

Permissionless innovation has brought us all the innovative applications that we enjoy today, on top of a highly interoperable underlying network, along with advances in video coding and other techniques used by applications. Paradoxically, if the underlying network is sufficiently powerful, the applications on top can evolve without similar pressures for interoperability, leading to the closed but highly valuable services discussed above.

There are also security tradeoffs. Large entities are generally better equipped to move to more recent and more secure technology. For instance, the Domain Name System (DNS) shows signs of ageing but due to the legacy of deployed systems, has changed very slowly. Newer technology developed at the IETF enables DNS queries to be performed confidentially, but its deployment is happening mostly in browsers that use global DNS resolver services, such as Cloudflare’s 1.1.1.1 or Google’s 8.8.8.8. This results in faster evolution and better security for end users.
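As a rough illustration of what such a confidential query looks like, here is a minimal sketch of a DNS over HTTPS (RFC 8484) GET request using only the Python standard library. The resolver URL `https://cloudflare-dns.com/dns-query` and all function names are assumptions made for this example and are not taken from the article; the response is returned as raw DNS wire format and is not parsed.

```python
import base64
import struct
import urllib.request

# Assumed DoH endpoint for illustration; any RFC 8484 resolver URL would do.
RESOLVER_URL = "https://cloudflare-dns.com/dns-query"


def build_dns_query(name: str, qtype: int = 1) -> bytes:
    """Build a minimal DNS query in wire format (RFC 1035). qtype 1 is an A record."""
    # Header: ID=0 (suggested by RFC 8484 for cache friendliness), flags=0x0100
    # (recursion desired), one question, no other records.
    header = struct.pack("!HHHHHH", 0, 0x0100, 1, 0, 0, 0)
    # QNAME: each label is prefixed by its length, terminated by a zero byte.
    qname = b"".join(
        bytes([len(label)]) + label.encode("ascii")
        for label in name.rstrip(".").split(".")
    ) + b"\x00"
    return header + qname + struct.pack("!HH", qtype, 1)  # QCLASS 1 = IN


def doh_get_url(resolver_url: str, name: str, qtype: int = 1) -> str:
    """Encode the query as unpadded base64url in the 'dns' parameter."""
    wire = build_dns_query(name, qtype)
    encoded = base64.urlsafe_b64encode(wire).rstrip(b"=").decode("ascii")
    return f"{resolver_url}?dns={encoded}"


def doh_query(resolver_url: str, name: str, qtype: int = 1, timeout: float = 5.0) -> bytes:
    """Send the query over HTTPS and return the raw DNS response bytes."""
    request = urllib.request.Request(
        doh_get_url(resolver_url, name, qtype),
        headers={"Accept": "application/dns-message"},
    )
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return response.read()
```

Calling `doh_query(RESOLVER_URL, "www.example.com")` needs network access. The query travels inside an ordinary HTTPS connection, so an on-path observer sees traffic to the resolver but not the name being looked up.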

Read Geoff Huston’s post discussing the potential benefits of new DNS technologies being developed, including DNS over HTTPS and DNS over TLS.

However, if one steps back and considers the overall security effects of these developments, the resulting effects can be very different. While the security of the actual protocol exchanges improves with the introduction of this new technology, at the same time this implies a move from using a worldwide distributed set of DNS resolvers into, again, more centralized global resolvers. While these resolvers are very well maintained (and a great service), they are potentially high-value targets for pervasive monitoring and Denial-of-Service (DoS) attacks. In 2016, for example, DoS attacks were launched against Dyn, one of the largest DNS providers, leading to some outages.

There are also fundamental issues with regard to who can afford to deliver such services: due to the speed of light, any low-latency service fundamentally needs to be distributed throughout the globe in data centre networks that only the largest providers can build. Similarly, the biggest entities can deal with DoS attacks in ways that small entities cannot.
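The speed-of-light constraint can be made concrete with a back-of-the-envelope calculation. The sketch below assumes that signals in optical fibre travel at about two thirds of the speed of light in a vacuum and ignores routing detours, queuing and processing, so it gives a lower bound rather than a realistic measurement. The function name and the example distances are illustrative.

```python
SPEED_OF_LIGHT_KM_S = 299_792.458  # speed of light in a vacuum
FIBRE_VELOCITY_FACTOR = 2 / 3      # assumption: typical for optical fibre


def min_rtt_ms(distance_km: float, velocity_factor: float = FIBRE_VELOCITY_FACTOR) -> float:
    """Lower bound on round-trip time in milliseconds over a straight fibre path."""
    one_way_seconds = distance_km / (SPEED_OF_LIGHT_KM_S * velocity_factor)
    return 2 * one_way_seconds * 1000


for distance_km in (100, 1_000, 10_000):
    print(f"{distance_km:>6} km: at least {min_rtt_ms(distance_km):.1f} ms round trip")
```

Under these assumptions a server 10,000 km away cannot answer in less than roughly 100 ms, while one 100 km away can respond in about 1 ms, which is why low-latency services end up needing a presence close to their users.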

And then there are other factors that depend on external effects. Assuming that one does not wish for regulation, technologies that support distributed architectures, provide open source implementations of currently centralized network functions, or help increase users’ control can be beneficial. Federation, for example, would help enable distributed services in situations where smaller entities would like to collaborate.

Similarly, in an asymmetric power balance between users and services, tools that enable the user to control what information is provided to a particular service can be very helpful. Such tools exist, for instance, in the privacy and tracking-prevention modes of popular browsers, but why are these modes not the default, and could we develop them further?

It is also surprising that in the age of software-defined everything, we can program almost anything except the globally provided, packaged services. Opening up interfaces would allow the building of additional, innovative services that better match users’ needs.

Silver bullets are rare, of course. Internet service markets sometimes fragment rather than cooperate through federation. And asymmetric power balances are easiest to change when the data is in your control, and much harder to change when someone else holds it. Nevertheless, exploring solutions that keep the Internet open for new innovations and in the control of users is very important.

Co-authored by Brian Trammell, ETH Zurich, Switzerland

Jari Arkko is an expert on Internet Architecture with Ericsson Research in Jorvas, Finland and former Chair of the IETF.

The views expressed by the authors of this blog are their own and do not necessarily reflect the views of APNIC. Please note a Code of Conduct applies to this blog.

I would like to thank Jari for bringing out this excellent blog highlighting the new trends in the Internet ecosystem. The centralisation and consolidation of platforms for all kinds of services (search, financial transactions, etc.) brings forth an important issue: whether the future Internet will be confined to a few global players, with others marginalised by the sheer strength of technology, data capacity and the standards helping them to monopolise the system.

Here comes the critical role of the IETF, IAB, IEEE and ISOC: to work together and see to it that the Internet remains open and that whatever standards are made continue to encourage permissionless innovation.

So, Jari (and Brian), how could serverless computing and services like Cloudflare Workers affect the trends you describe?

1) Coming from the old school of traditional telecom, I have found Internet issues rather perplexing. They seem to be unnecessarily intertwined with one another to the point of being convoluted. After venturing into the IPv4 address pool exhaustion challenge, we came across a solution that can expand each IPv4 address by 256M (million) fold without perturbing the existing setup, which makes it possible for regionalized Internet services to coexist with the current global version. We have submitted to the IETF a proposal called EzIP (phonetic for Easy IPv4) about the scheme:

https://tools.ietf.org/html/draft-chen-ati-adaptive-ipv4-address-space-03

2) A follow-up derivation from the above led us to believe that the Internet is more similar to the PSTN than different from it. Page 12 of the following whitepaper presents this intriguing parallelism.

https://www.avinta.com/phoenix-1/home/EzIPenhancedInternet.pdf

3) If we review Internet issues from this architectural perspective, a more concise frame of reference may be used to deal with the difficulties that we are facing today.