TCP’s congestion control architecture is notorious for poor performance in many real-world scenarios. In this post, we present a recently proposed online-learning approach to congestion control: Performance-oriented Congestion Control (PCC).

PCC’s goal is to infer which rate control actions improve performance based on live empirical evidence, avoiding TCP’s preconceptions about the network.

We discuss the limitations of traditional TCP and present the advantages of online learning with PCC.

What’s wrong with TCP?

Real-world experiments reveal that, in many cases, the performance of specially engineered TCP variants is far from optimal [PDF 690KB], even in the environments they were designed for.

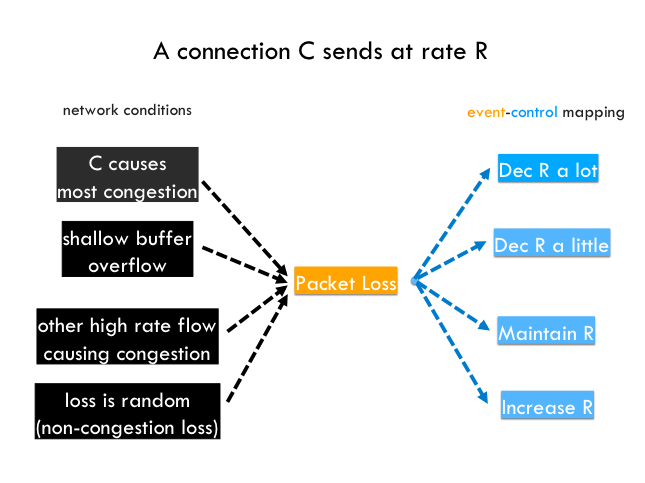

We argue that this unfortunate state of affairs is an inherent consequence of TCP’s architecture and, specifically, of hardwiring predefined packet-level events to predefined control responses. Consider, for example, how a TCP connection behaves when it experiences packet loss. Figure 1 depicts four potential reasons why packet loss might occur and the four corresponding ‘appropriate’ control reactions.

Figure 1: Diagram depicting four potential reasons why packet loss might occur (black) and the four corresponding ‘appropriate’ control reactions (blue).

While TCP variants may react differently to packet loss, any given TCP sender is bound to react to all four scenarios in precisely the same manner (for example, by halving its congestion window), which results in poor performance whenever its hardwired reaction does not match the actual network conditions.
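To make the point concrete, here is a minimal Python sketch, assuming a Reno-style sender that halves its congestion window on loss and never goes below two segments (the floor RFC 5681 applies to ssthresh). The `LossCause` enum and `tcp_on_loss` function are hypothetical names for illustration, and the cause labels are taken from the scenarios discussed later in this post rather than from Figure 1 itself. The cause is passed in but never used; a real TCP sender cannot even observe it.

```python
from enum import Enum, auto


class LossCause(Enum):
    """Hypothetical labels for why a packet was lost (a real sender never sees these)."""
    CONGESTION = auto()      # sending rate overshoots the bottleneck capacity
    PHY_CORRUPTION = auto()  # bit errors on a noisy link
    HANDOVER = auto()        # mobile-network handover
    ROUTING_CHANGE = auto()  # path change in the network


MIN_CWND_SEGMENTS = 2.0  # assumption: floor mirroring RFC 5681's 2*SMSS ssthresh minimum


def tcp_on_loss(cwnd: float, cause: LossCause) -> float:
    """Reno-style multiplicative decrease, with the window measured in segments.

    `cause` is deliberately ignored: the reaction is hardwired to the loss
    event itself, whatever actually produced it.
    """
    return max(cwnd / 2, MIN_CWND_SEGMENTS)


if __name__ == "__main__":
    for cause in LossCause:
        print(f"{cause.name:<15} cwnd 40 -> {tcp_on_loss(40.0, cause)}")
```

Every cause maps to the same new window of 20 segments, which is exactly the mismatch Figure 1 illustrates.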

Congestion control as online learning

PCC addresses this failure by associating each control action (a change of sending rate) directly with its effect on actual performance.

Under PCC, time is divided into consecutive intervals, called Monitor Intervals (MIs), each devoted to testing how sending at a certain rate affects performance. Specifically, when a PCC sender tests rate ‘r’, it sends at this rate for ‘sufficiently long’ (say, one RTT) to receive Selective Acknowledgements (SACKs), and aggregates these into meaningful performance metrics (for example, throughput, loss rate, and average latency). These metrics are then combined into a single numerical value ‘u’ via a ‘utility function’ that captures objectives such as high throughput and a low loss rate.
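As an illustration of the bookkeeping described above, the Python sketch below aggregates one Monitor Interval’s counters into throughput, loss rate, and average latency, then scores the interval. The `MonitorInterval` class, its fields, and the `utility` formula (throughput minus a loss penalty weighted by an arbitrary `loss_penalty` of 10) are assumptions made for this example; the PCC papers define their own utility functions, and this is not one of them.

```python
from dataclasses import dataclass


@dataclass
class MonitorInterval:
    """Counters a sender might collect during one MI (hypothetical layout)."""
    rate_mbps: float             # sending rate being tested in this MI
    duration_s: float            # MI length, e.g. roughly one RTT
    bytes_sent: int
    bytes_acked: int             # bytes reported as delivered via SACKs
    rtt_samples_ms: list[float]  # RTT samples taken from the SACKs

    def throughput_mbps(self) -> float:
        return self.bytes_acked * 8 / self.duration_s / 1e6

    def loss_rate(self) -> float:
        if self.bytes_sent == 0:
            return 0.0
        return 1 - self.bytes_acked / self.bytes_sent

    def avg_latency_ms(self) -> float:
        if not self.rtt_samples_ms:
            return 0.0
        return sum(self.rtt_samples_ms) / len(self.rtt_samples_ms)


def utility(mi: MonitorInterval, loss_penalty: float = 10.0) -> float:
    """Toy utility: reward delivered throughput, penalise lost traffic.

    `loss_penalty` is an illustrative weight, not a value from the PCC papers.
    Latency is measured above but, for simplicity, not scored here.
    """
    return mi.throughput_mbps() - loss_penalty * mi.rate_mbps * mi.loss_rate()


if __name__ == "__main__":
    mi = MonitorInterval(rate_mbps=10.0, duration_s=0.1,
                         bytes_sent=125_000, bytes_acked=122_500,
                         rtt_samples_ms=[40.0, 42.0])
    print(mi.throughput_mbps(), mi.loss_rate(), mi.avg_latency_ms(), utility(mi))
```

For example, an MI that sends at 10 Mbps for 100 ms and has 98% of its bytes acknowledged yields 9.8 Mbps of throughput, a 2% loss rate, and a utility of 7.8 under this toy formula.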

By comparing utility values resulting from sending at different rates, the PCC sender learns how to adjust its rate to track the empirically optimal sending rate. To understand how this is accomplished, let’s revisit Figure 1.

- When packet loss occurs because the connection is overshooting the bottleneck link’s capacity by a wide margin, sending at a higher rate is expected to yield the same throughput but a higher loss rate, and thus lower overall utility.

- When packet loss is not induced by congestion (for example, when it results from PHY-layer corruption, handover in mobile networks, or routing changes), sending at a higher rate might even yield better goodput with the same or a lower loss rate, and thus higher overall utility.

Thus, a PCC sender will reach different decisions in these two scenarios (decreasing and increasing the rate, respectively), illustrating PCC’s ability to learn the ‘right’ control response in an online manner.
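The two scenarios above can be reproduced with a toy model. The Python sketch below assumes an idealised link in each case (traffic above a 10 Mbps bottleneck is dropped, versus a fixed 2% random loss well below capacity) and a decision rule that probes r(1+ε) and r(1−ε), one MI each, and moves toward whichever scores higher. This is only in the spirit of PCC’s trial-based learning; the published algorithms (PCC’s randomized controlled trials and Vivace’s gradient ascent) are more elaborate. Every function name, link model, and constant here is an assumption, and the utility is a toy one (throughput minus a weighted loss penalty), not one from the PCC papers.

```python
from typing import Callable

Link = Callable[[float], tuple[float, float]]  # rate -> (throughput, loss rate)


def congested_link(rate: float, capacity: float = 10.0) -> tuple[float, float]:
    """Overshoot scenario: traffic above the bottleneck capacity is dropped."""
    throughput = min(rate, capacity)
    loss = max(0.0, (rate - capacity) / rate)
    return throughput, loss


def lossy_link(rate: float, random_loss: float = 0.02) -> tuple[float, float]:
    """Non-congestion scenario: a fixed fraction of packets is corrupted,
    and the rate is assumed to stay well below capacity."""
    return rate * (1 - random_loss), random_loss


def utility(rate: float, throughput: float, loss: float, penalty: float = 10.0) -> float:
    """Toy utility: delivered throughput minus a weighted loss penalty."""
    return throughput - penalty * rate * loss


def decide(rate: float, link: Link, eps: float = 0.05) -> str:
    """Probe rate*(1+eps) and rate*(1-eps) and compare their utilities."""
    hi, lo = rate * (1 + eps), rate * (1 - eps)
    u_hi = utility(hi, *link(hi))
    u_lo = utility(lo, *link(lo))
    return "increase" if u_hi > u_lo else "decrease"


def run(rate: float, link: Link, steps: int, eps: float = 0.05) -> float:
    """Repeatedly probe and move the rate in the winning direction."""
    for _ in range(steps):
        rate *= (1 + eps) if decide(rate, link, eps) == "increase" else (1 - eps)
    return rate


if __name__ == "__main__":
    # Both links show loss at 15 Mbps, yet the learned reactions differ.
    print("overshooting link:", decide(15.0, congested_link))  # decrease
    print("lossy link:       ", decide(15.0, lossy_link))      # increase
    # Starting below capacity, the sender climbs and then hovers around 10 Mbps.
    print(f"rate after 40 rounds: {run(5.0, congested_link, 40):.2f} Mbps")
```

A Reno-style sender would cut its rate in both cases. This sender instead looks at how utility responds to its own rate changes, so the two kinds of loss lead to opposite decisions.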

PCC’s learning control is selfish in nature but, surprisingly, when they use a widely applicable utility function, competing PCC senders provably converge to a fair and efficient equilibrium.

PCC in practice

In our research, we evaluated a PCC implementation in QUIC in large-scale real-world networks.

Without any tweaks to its control algorithm, PCC outperformed specially engineered TCP variants by a factor of 5 to 10 and beyond. We are currently developing a PCC Linux kernel module.

See our PCCproject GitHub space for an implementation of PCC in QUIC.

We also compared PCC with Google’s BBR in controlled environments, real residential Internet scenarios, and video-streaming applications. We found that PCC performs better under rapidly changing conditions and converges faster and more stably, which ultimately results in lower video buffering time (watch a video stream comparison between TCP, UDP, and PCC below).

Beyond improved performance, PCC is able to achieve fairness, stability, and friendliness towards TCP.

Check out our papers, PCC: Re-architecting Congestion Control for Consistent High Performance [PDF 1.5 MB] and PCC Vivace: Online-Learning Congestion Control [PDF 800 KB], and watch our presentation at IETF 101 (below) to learn more about our research, or ask us questions below in the comments.

Also, stay tuned for our upcoming kernel module presentation at NetDev 0x12.

Contributor: Brighten Godfrey (University of Illinois at Urbana-Champaign)

Michael Schapira is an Associate Professor at the School of Computer Science and Engineering, Hebrew University of Jerusalem.

The views expressed by the authors of this blog are their own and do not necessarily reflect the views of APNIC. Please note a Code of Conduct applies to this blog.
