On the night of 9 April 2018, DE-CIX Frankfurt experienced an outage. As this is one of the largest Internet Exchange Points (IXPs) in Europe, this is an interesting case to study in more depth to see what we can learn about the Internet’s robustness. We plan to update this article if new information/corrections flow in.

The information we received (via news and Twitter) about the DE-CIX Frankfurt outage indicates that a component of this IXP lost power. We analyzed an outage at another very large IXP in 2015, and repeated that analysis here as another case study for “How the Internet routes around damage”.

Dear @decix customers, a massive power outage occurred at Interxion which also affects our operations (e.g. DE-CIX6/FRA5). Some DE-CIX systems for communication are currently also affected (e.g. mail). We are working hard to get them back to work. #decix #interxion #powerouttage

— DE-CIX (@DECIX) April 9, 2018

How we performed our analysis

In short, we repeated what we did for the 2015 AMS-IX outage.

First, we looked for traceroute paths in RIPE Atlas that successfully traversed the DE-CIX Frankfurt peering LAN on the day before the outage (see breakout box for the technical details). In the data, we found a total of 43,143 source-destination pairs where the paths between them were stable and successfully traversed DE-CIX Frankfurt [1].

From all of the RIPE Atlas traceroute data on 8 April 2018, we selected the source-destination pairs that stably saw the DE-CIX Frankfurt peering LANs (as derived from PeeringDB). We define 'stably' as follows: the path between source and destination was measured at least once every hour, the destination always sent packets back, and the peering LAN was always seen.
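The selection criteria in the breakout box can be sketched roughly as follows. This is a minimal illustration, not the code used for the analysis: the record layout `(source, destination, hour, hops, reached)`, the function names, and the peering LAN prefixes (documentation ranges standing in for the real prefixes from PeeringDB) are all assumptions made for the example.

```python
from collections import defaultdict
from ipaddress import ip_address, ip_network

# Placeholder prefixes (documentation ranges), NOT the real DE-CIX peering LANs.
# In practice these would be looked up in PeeringDB.
PEERING_LANS = [ip_network("192.0.2.0/24"), ip_network("2001:db8::/64")]


def traverses_ixp(hops):
    """Return True if any responding hop address lies in a peering LAN."""
    for hop in hops:
        if hop is None:  # hop that did not respond
            continue
        addr = ip_address(hop)
        if any(addr in lan for lan in PEERING_LANS):
            return True
    return False


def stable_pairs(records, hours=range(24)):
    """Select (source, destination) pairs that meet all three criteria.

    records: iterable of (source, destination, hour, hops, reached), where
    hour is the hour of day of the measurement, hops is a list of IP address
    strings (None for a non-responding hop), and reached says whether the
    destination answered.
    """
    seen_hours = defaultdict(set)
    disqualified = set()
    for src, dst, hour, hops, reached in records:
        pair = (src, dst)
        seen_hours[pair].add(hour)
        # A single failure or a single path without the IXP disqualifies the pair.
        if not reached or not traverses_ixp(hops):
            disqualified.add(pair)
    wanted = set(hours)
    return {
        pair
        for pair, hrs in seen_hours.items()
        if pair not in disqualified and wanted <= hrs
    }
```

The strictness is deliberate in this sketch: one missed hour, one unanswered traceroute, or one path that avoids the peering LAN removes the pair from the baseline, so that any later change is more likely to reflect the outage than ordinary path churn.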

[1] This is to the extent that one can detect this in the traceroute data we collect.

To give some impression of the diversity of these pairs: there are 5,108 unique sources (RIPE Atlas probes), and 1,051 unique destinations in this data.

An important caveat is that, in this study, we can only report on what RIPE Atlas data shows. It is hard, if not impossible, to extrapolate from this to the severity of the event for individual networks, which likely differs between networks in different parts of the Internet topology.

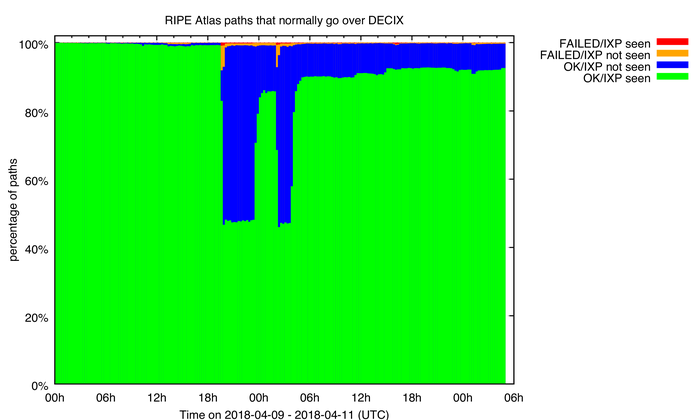

With this set of source-destination pairs, we looked at the period of the reported outage starting on 9 April 2018. The results are shown in Figure 1:

Figure 1: RIPE Atlas paths that normally reliably traverse the DE-CIX Frankfurt infrastructure.

What you see in Figure 1 is how the characteristics of the paths that RIPE Atlas monitors changed during and after the DE-CIX Frankfurt outage. Normally these paths traverse DE-CIX Frankfurt and they ‘work’, that is, the destination is reliably reached, which is what we see at the beginning of 9 April as indicated in green.

Starting at around 19:30 UTC we see, in blue, that a large fraction of paths still ‘work’, but we don’t see DE-CIX Frankfurt in the path anymore. This indicates rerouting took place at that time. For a small fraction of paths (in orange and red) we see that the destination was not reached anymore, indicating a problem in Internet connectivity for these specific paths.
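To make the categories in Figure 1 concrete, here is a rough sketch of how each traceroute result could be labelled and turned into per-time-bin fractions. The label strings, function names and input layout are assumptions for illustration. The text does not say what distinguishes orange from red, so the sketch folds both into a single "destination not reached" category.

```python
from collections import Counter


def classify(reached, via_ixp):
    """Label one traceroute result in the spirit of the Figure 1 colours."""
    if not reached:
        return "destination not reached"  # orange/red in Figure 1
    if via_ixp:
        return "reached via IXP"          # green
    return "reached, IXP not seen"        # blue: rerouted


def summarize(results):
    """Compute the fraction of results per category for each time bin.

    results: iterable of (time_bin, reached, via_ixp) tuples.
    """
    counts = {}
    for time_bin, reached, via_ixp in results:
        counts.setdefault(time_bin, Counter())[classify(reached, via_ixp)] += 1
    return {
        time_bin: {label: n / sum(c.values()) for label, n in c.items()}
        for time_bin, c in counts.items()
    }
```

Plotting these fractions per time bin as a stacked area gives a picture of the same kind as Figure 1.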

Our data indicates that there were two separate outage events: one between 19:30 and 23:30 on 9 April, the other between 02:00 and 04:00 on 10 April (all times UTC). During these events, the paths mostly switched away from DE-CIX, but the data also indicates that the source-destination pairs still had working bi-directional connectivity, that is to say, ‘the Internet routed around the damage’. It would be interesting to see if other data (maybe internal netflow data from individual networks?) reveals anomalies that correlate with the two outage intervals we detected.

From the information we gathered, we think these are the times the DE-CIX route-servers went offline. What’s interesting to note is that between these two outage events, many paths switched back to being routed over DE-CIX.

The other interesting thing about the graph is that more than a day after the events, 10% of paths hadn’t switched back to DE-CIX. This could be due to network operators keeping their peerings at DE-CIX Frankfurt down, or it could be the automatic fail-over in Internet routing protocols at work. For network techies, this is an example of the BGP path-selection process at work. If all of the other BGP metrics (local pref, AS path length, and so forth) are equal, the oldest path (or currently active path) is selected. So, if the DE-CIX peering session was down for a while, and another path with similar BGP metrics was available, then the BGP path selection process would select this other path and keep it even after the DE-CIX session came back.
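The 'oldest path wins' effect can be illustrated with a toy model. This is a deliberately simplified sketch: it compares only local preference, AS path length and route age, and leaves out the other steps of real BGP best-path selection (origin, MED, eBGP versus iBGP, IGP cost, router ID). Whether route age is used as a tie-breaker at all depends on the router implementation and configuration. The `Route` class and its field names are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Route:
    via: str           # label for where the route was learned
    local_pref: int    # higher is preferred
    as_path: tuple     # shorter is preferred
    learned_at: float  # seconds since some epoch; lower means older


def best_route(routes):
    """Pick the best route: highest local pref, then shortest AS path,
    then the oldest route as the final tie-breaker."""
    return min(routes, key=lambda r: (-r.local_pref, len(r.as_path), r.learned_at))


# Before the outage: both routes are otherwise equal, the IXP route is older.
ixp_before = Route("ixp", 100, (64500, 64501), learned_at=0.0)
transit = Route("transit", 100, (64502, 64501), learned_at=100.0)
before = best_route([ixp_before, transit])

# During the outage the IXP session is down, so only the transit route remains.
during = best_route([transit])

# After the outage the IXP route is re-learned, which makes it the newer one.
ixp_after = Route("ixp", 100, (64500, 64501), learned_at=5000.0)
after = best_route([ixp_after, transit])
```

In this model `before.via` is `"ixp"`, while `during.via` and `after.via` are both `"transit"`: once the session has flapped, the alternative path is the older one and stays selected until something else changes.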

Validation

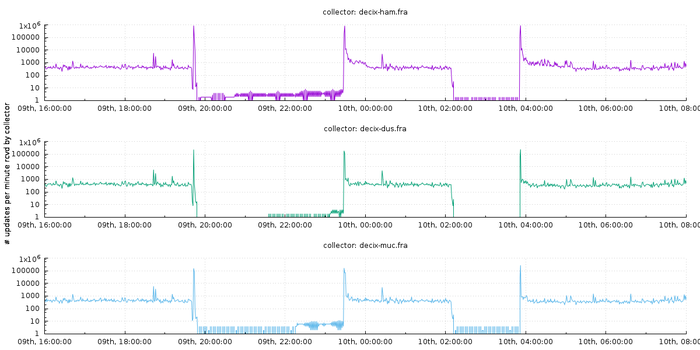

Packet Clearing House operates BGP route collectors at various IXPs. They release routing table updates from those collectors. The rate of advertisements and withdrawals around network reconfiguration is important — as routers drop connections, they will propagate updated states to their neighbours in an attempt to locate alternative paths, or cease propagating an advertisement they can no longer carry.

Without digging deep at all, the rate of updates helps us pin down exactly when the DE-CIX outage took place:

Figure 2: BGP update rates from PCH route collectors at DE-CIX infrastructure in Germany.

The signal is extremely clear: there are two periods where the collectors stop observing any updates at all: between 19:43 and 23:28 on 9 April, and between 02:08 and 03:51 on 10 April (all times UTC).
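Finding such silent periods in a stream of update timestamps is straightforward. The sketch below assumes the timestamps of BGP updates seen by a collector have already been extracted as `datetime` objects; parsing the routing update files themselves is out of scope here, and the 30-minute threshold is an arbitrary choice for illustration.

```python
from datetime import datetime, timedelta


def silent_periods(timestamps, min_gap=timedelta(minutes=30)):
    """Return (last_seen, next_seen) pairs wherever consecutive updates
    are at least min_gap apart."""
    ordered = sorted(timestamps)
    return [
        (earlier, later)
        for earlier, later in zip(ordered, ordered[1:])
        if later - earlier >= min_gap
    ]
```

The resulting intervals can then be compared with the windows in which the RIPE Atlas paths moved away from the peering LAN, to check that two independent data sources agree on the timing.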

What we’d love to explore…

We intend to make data from this analysis available, so others can dig deeper into what happened here and what can be learned from it. If you are interested, please let us know by email at labs@ripe.net.

Some specific questions of interest:

- How much of this is anycast networks being rerouted?

- Can this be correlated to other datasets?

So does it route around damage?

Back to the larger question: does the Internet route around damage? As with the 2015 event, our case study indicates it mostly does.

Feedback and comments are, as always, appreciated in the comments section — we’ll try to incorporate them into updates of this post.

Contributors: Stephen Strowes

Original post appeared on RIPE Labs.

Emile Aben is a system architect/research coordinator at the RIPE NCC.

The views expressed by the authors of this blog are their own and do not necessarily reflect the views of APNIC. Please note a Code of Conduct applies to this blog.