Low latency is coming to a network near you. In fact, it’s probably coming to your network, whether or not you realize it.

While bandwidth has always been the primary measure of a network, and cross-sectional or non-contending bandwidth for data centre fabrics, further research and reflection has taught large-scale network operators that latency is actually much more of a killer for application performance than lack of bandwidth—and not only latency but its close cousin, jitter. Why is this?

To understand, it is useful to return to an example given by Tanenbaum in his book Computer Networks. He includes a humorous example of calculating the bandwidth of a station wagon full of VHS tapes, with each tape containing the maximum amount of data possible.

For those young folks out there who didn’t understand a single word in that last sentence, think of an overnight delivery box from your favourite shipping service. Now stuff the box full of high-density solid-state storage of some kind, and ship it.

You can calculate the bandwidth of the box by multiplying the number of devices you can stuff in there by the capacity of each device, and then dividing by roughly 86,400 (the number of seconds in 24 hours).

What you will find, if you do this little exercise, is that the bandwidth of the box is greater than any link you can buy today. In fact, it’s probably greater than the bandwidth of every link available across your nation, region, or favourite ocean.

So why don’t we use boxes to ship data?

Because networks are, in the final analysis, a concession to human impatience. Until we reach the speed of human impatience, networks will always be able to improve in terms of delay.

To translate this into more practical terms, latency causes applications running on the network to run slow, and humans do not like slow applications.

Jitter, the close cousin of latency, can be even worse. Applications cannot run at the fastest speed available in the network; rather, they must run to the slowest speed available in the network. If an end-to-end path is very jittery, meaning it exhibits a wide range of delays, the application will be forced to adjust by running at the slowest round trip time. Or worse, the application will be constantly trying to guess what the next round trip time is going to be, and guessing wrong. This can cause dropped packets, out of order packet delivery, and a host of other problems.

You might think that the world of the Internet of Things (IoT) and mobile networks would be different than all of this; applications should know there is a delay, and probably jitter, so they should learn to work around it, right? If you think this, you are missing a fundamental point from above: networks are a concession to human impatience, and humans are infinitely impatient. Humans do not much care what is between them and their data; they just want their data. Now!

The problem, as noted in The Twelve Networking Truths, is:

“No matter how hard you push and no matter what the priority, you can’t increase the speed of light. No matter how hard you try, you can’t make a baby in much less than 9 months. Trying to speed this up *might* make it slower, but it won’t make it happen any quicker.”

There has been a good bit of work in reducing latency in the last several years, however. Once hyperscalers started digging into the latency problem, it quickly became obvious to other kinds of operators that they needed to take a much harder look at latency and jitter.

One result is a set of standards and ideas designed to help combat latency in mobile and fixed networks. Delivering Latency Critical Communication over the Internet catalogues and explains some of these efforts in a handy IETF draft form.

Section 3 considers the components of latency, specifically:

- processing delays

- buffer delays

- transmission delays

- packet loss

- propagation delays

The difference between propagation delays and transmission delays is this: one describes the speed of light through the cable or optical fibre, while the other describes the time required to clock a packet, which is held in a parallel buffer, onto a wire, which is (generally) a serial signalling channel.

Section 3 of this document also explains some of the reasons why low latency networking is needed; some of these might be quite surprising. For instance, massive data transfers from the IoT — essentially machine-to-machine data transfer on a large scale — seems to require low latency support. If IoT is being used for near real-time sensing, however, or unlocking the door to your house, this begins to make a little more sense. After all, when a bear is chasing you, there is some comfort in knowing there is a low latency path to the server that opens your house door.

Or… Perhaps this is another of those reasons just to stick with old-fashioned keys.

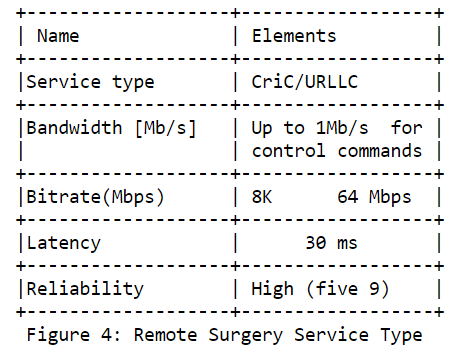

The document goes on to describe the Key Performance Indicators (KPIs) and Key Quality Indicators (KQIs) for a number of different kinds of applications that might be running over a network. For instance, figure 4 in the draft document describes the performance requirements for remote surgery:

Finally, in section 5, the document describes how Path Computation (PCE) might be used to resolve some of these problems. This is an interesting use of PCE, as it would require a complete rethinking of the way telemetry is gathered off the network and some modifications to the SPF calculation normally performed by PCE controllers. Since two metric calculations have been proven to be impossible to calculate (they are technically order-complete computations); careful design work needs to go into thinking through the problems of managing latency and bandwidth constraints at the same time.

This is a solid draft providing a good introduction into the problems and solutions around low latency networking.

Original post appeared on Rule 11.

Russ White is a Network Architect at LinkedIn.

The views expressed by the authors of this blog are their own and do not necessarily reflect the views of APNIC. Please note a Code of Conduct applies to this blog.

There are yet, a very large ultra low latency and cheap network in the world.

This network has only the electo-optical latency (200000km/s).

An know is very easy to run an IP/SIP solution over this network, this is a narrow band network,

but know is very useful becouse H.264 codec put until 4CIF30 in 128kbit/s (FULL DUPPLEX) this network

is the old TDM – ISDN

hi,

Thank you for sharing this information with us Because in your blog you have explained about low latency network very well, which is very useful for us.

yes, Latency is the amount of time it takes for a signal or packet to travel from one source to the destination and back again. Often referred to as latency, the amount of delay can be high, low, or somewhere in between. thanks for sharing the very informative information.