Distributed Denial of Service (DDoS) attacks remain an ongoing pain for the Internet community. Cisco projects a 14% compound annual growth rate in the number of attacks, starting from an already high level in 2022. Of the DDoS traffic peaks reported in the media, the highest to date is a 3.5 Tbps attack on Microsoft Azure in 2022. While attacks of such spectacular size are rare, the average attack size of 1 Gbps is more worrisome, as this is enough to take most online services offline. The increasing adoption of concepts like the Internet of Things (IoT) continues to make the situation worse.

While DDoS mitigation solutions using scrubbing centres or mitigation appliances exist, no large Internet Exchange Point (IXP) provides DDoS analytics or mitigation to date. However, such a service is highly desirable. In a study from 2021, my colleagues and I from DE-CIX quantified the potential of such a solution and found that 55% of the attack traffic could be dropped two or more Autonomous System (AS) hops earlier compared to filtering at the AS hosting the victim system; specifically, dropping at IXPs would reduce the stress on the edge infrastructure. Moreover, an IXP-based DDoS solution could enable DDoS analytics for many small and mid-sized Internet Service Providers (ISPs) that do not have the staff or budget to implement it on their own.

In this research, we look at designing a DDoS mitigation solution for IXPs using a Machine Learning (ML) approach.

Main idea

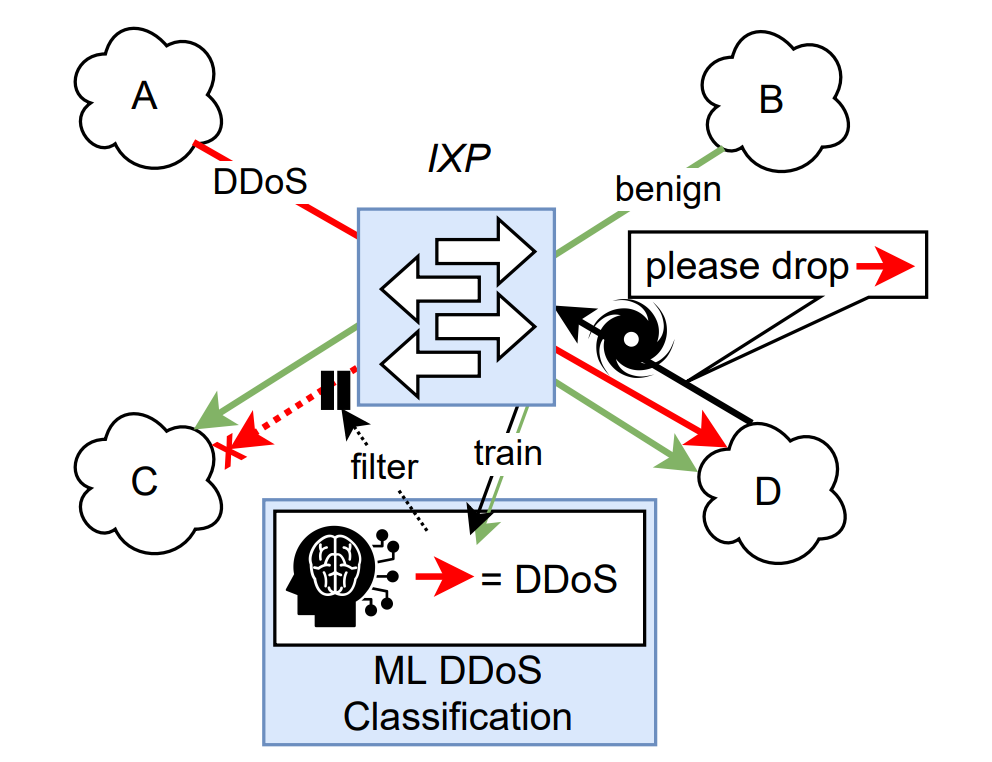

The main idea of our ML-method is depicted in Figure 1. Many IXPs implement an operational practice called Border Gateway Protocol (BGP) blackholing (RFC 7999), which allows ASes to mark traffic for a BGP route as unwanted (such as DDoS traffic). As IXPs can see both the unwanted traffic and the blackholing BGP announcements, they can correlate the two to generate DDoS training sets. The training set size is only limited by the amount of available traffic data (usually as NetFlow, IPFIX or similar) and BGP data (from IXP route servers). Using this method, we have generated between 3 and 24 months of DDoS training data from five IXPs in Europe and the US. We use this data to train and evaluate the IXP Scrubber ML-model, which can be applied to classify the traffic on an IXP’s platform as a service.
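To make the correlation step more concrete, here is a minimal sketch of how flows could be labelled using blackholing announcements. It is an illustration under assumptions, not our production pipeline: the `Blackhole` and `Flow` records, their field names, and the idea that each announcement has a known start and end time are all hypothetical simplifications of real BGP and flow export data.

```python
from dataclasses import dataclass
from ipaddress import ip_address, ip_network


@dataclass(frozen=True)
class Blackhole:
    """Hypothetical record of one blackholing announcement."""
    prefix: str  # prefix announced as blackholed
    start: int   # announcement time (epoch seconds)
    end: int     # withdrawal time (epoch seconds)


@dataclass(frozen=True)
class Flow:
    """Hypothetical, heavily reduced flow record."""
    ts: int
    dst_ip: str
    src_port: int
    packet_size: int


def label_flows(flows, blackholes):
    """Return (flow, is_blackhole) pairs.

    A flow is labelled as blackhole traffic if its destination lies in a
    blackholed prefix while the announcement was active.
    """
    nets = [(ip_network(b.prefix), b.start, b.end) for b in blackholes]
    labelled = []
    for f in flows:
        dst = ip_address(f.dst_ip)
        hit = any(dst in net and start <= f.ts < end for net, start, end in nets)
        labelled.append((f, hit))
    return labelled


blackholes = [Blackhole("192.0.2.10/32", start=1000, end=2000)]
flows = [
    Flow(1500, "192.0.2.10", 123, 468),   # inside prefix, announcement active
    Flow(1500, "192.0.2.11", 443, 1400),  # different destination
    Flow(2500, "192.0.2.10", 123, 468),   # after the withdrawal
]
labels = [hit for _, hit in label_flows(flows, blackholes)]
```

The linear scan over all announcements keeps the sketch short; at IXP scale, a prefix trie or a time-indexed lookup would be a more realistic choice.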

For IXP operators, this model has a number of advantages over existing solutions:

- Low cost: No need to buy appliances; works with given data exports and Access Control Lists (ACLs).

- Low maintenance: No manual definition of rules and triggers, self-learning.

- Member driven: No need for IXPs to provide a definition of DDoS; the IXP members express their wishes via BGP blackholing.

- Controllable: Through means of ML-design, we can limit the damage of false positives and understand the classification performance.

Data challenges

While the idea of sampling DDoS traffic from blackholed routes sounds simple, the resulting data sets pose a number of challenges. First, we found that the overall export of traffic data only contains a small fraction of blackholing traffic (far below 1%). This is an issue because an ML-model learning a classification from the data will learn to favour the overrepresented class (non-blackhole, benign) over the underrepresented one (blackhole, DDoS), as predicting a non-blackhole label is correct with overwhelming probability. We balance the data set by subsampling the non-blackholing part of the data until both classes have an equal share.
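The balancing step can be sketched in a few lines. This is a simplified illustration that assumes the data is a list of `(record, is_blackhole)` pairs; the function name `balance_by_subsampling` and the fixed random seed are our own choices for the example.

```python
import random


def balance_by_subsampling(labelled, seed=0):
    """Subsample the non-blackhole class down to the size of the blackhole class.

    `labelled` is a list of (record, is_blackhole) pairs. If there are fewer
    non-blackhole records than blackhole records, all of them are kept.
    """
    rng = random.Random(seed)
    positives = [pair for pair in labelled if pair[1]]
    negatives = [pair for pair in labelled if not pair[1]]
    keep = min(len(positives), len(negatives))
    balanced = positives + rng.sample(negatives, keep)
    rng.shuffle(balanced)
    return balanced


# Toy data: 5 blackhole records among 1,000, i.e. 0.5% of the export.
toy = [(i, i < 5) for i in range(1000)]
balanced = balance_by_subsampling(toy)
```

Subsampling throws away benign records rather than duplicating attack records, which keeps the training set small and avoids repeating the same attack flows many times.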

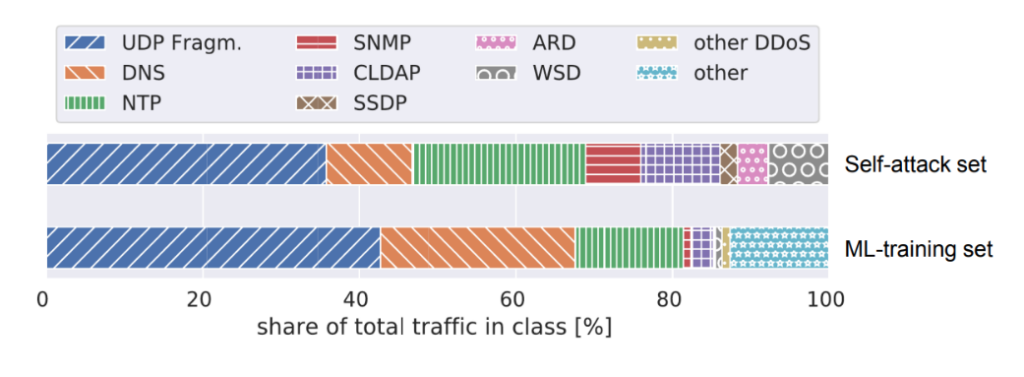

The second issue becomes evident when investigating the distribution of sending protocols as depicted in Figure 2.

Figure 2 compares two data sets: one from previous work, generated by attacking ourselves with DDoS-for-hire services (self-attack set), and the ML-training set generated from blackholing traffic. Both data sets contain a large amount of traffic from DDoS-susceptible protocols, such as DNS, NTP, SNMP, and so on; however, roughly 15% of the ML-training set is traffic from other protocols (blue on the bottom right). This is because blackholing is a prefix-based mechanism. It removes all traffic to the IP(s) announced for the route, regardless of whether the victim host receives benign traffic in addition to DDoS. An ML pipeline design needs to be able to handle this type of impure training data.

ML-model design

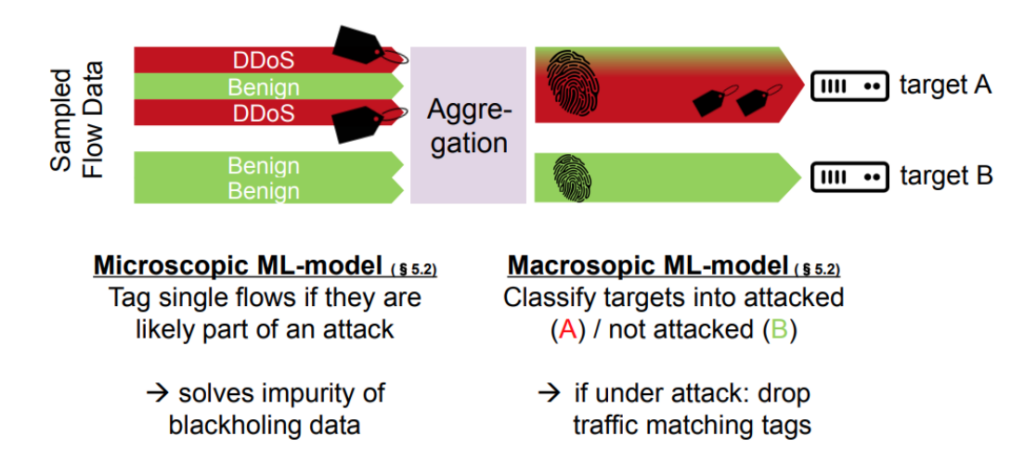

Figure 3 shows the classification process of the ML-model. The model consists of two parts, the microscopic ML-model and the macroscopic ML-model.

- The microscopic model works on the incoming traffic data. The goal of this model is to classify single flows according to their likelihood of being part of a DDoS attack. To do so, we use algorithms for Association Rule Mining (ARM). ARM is frequently used to generate recommendations in online stores based on items that are already in the shopping cart (‘customers who bought milk also bought bread’). In our case, we simply reverse the question: instead of asking for a recommendation for a certain combination of packet headers, we ask which packet headers lead to a recommendation of a blackhole. By doing so, we can come up with a set of association rules in the form of, for example, {src_port=123;packet_size=(400;500]} -> {blackhole}. The left part of this association rule (in this case NTP monlist packets) is matched against all incoming flows. If the rule matches, the flow is annotated with a tag. Because benign flows to a blackholed target do not match such rules, ARM helps us to solve the problem of impure blackholing data.

- In an intermediate step, we aggregate data on a per-target basis. That is, we aggregate all flows pointing to a certain target into a fingerprint representing all relevant flows to that target. On top of the fingerprint, we apply the macroscopic ML-model to classify targets into attacked (A in the figure above) or not attacked (B). If the macroscopic model classifies a target as under attack, we only drop the traffic that was tagged by the microscopic model.
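The two stages can be sketched as follows. This is a toy illustration under several assumptions: flows are plain dictionaries with hypothetical field names, the single rule is the example rule from the text rather than a mined rule set, the fingerprint only contains byte counts, and a fixed threshold in `is_attacked` stands in for the trained macroscopic classifier.

```python
from collections import defaultdict

# Example rule from the text: {src_port=123; packet_size=(400;500]} -> {blackhole}
RULES = [{"src_port": 123, "size_gt": 400, "size_le": 500}]


def matches(rule, flow):
    """True if the flow matches the left-hand side of the rule."""
    return (flow["src_port"] == rule["src_port"]
            and rule["size_gt"] < flow["packet_size"] <= rule["size_le"])


def confidence(rule, labelled_flows):
    """Rule confidence: share of rule-matching flows that were blackholed."""
    hits = [blackholed for flow, blackholed in labelled_flows if matches(rule, flow)]
    return sum(hits) / len(hits) if hits else 0.0


def tag_flows(flows, rules):
    """Microscopic step: annotate each flow with whether any rule matches."""
    return [dict(flow, tagged=any(matches(r, flow) for r in rules)) for flow in flows]


def fingerprints(tagged_flows):
    """Intermediate step: aggregate byte counts per target IP."""
    agg = defaultdict(lambda: {"bytes": 0, "tagged_bytes": 0})
    for flow in tagged_flows:
        fp = agg[flow["dst_ip"]]
        fp["bytes"] += flow["bytes"]
        if flow["tagged"]:
            fp["tagged_bytes"] += flow["bytes"]
    return dict(agg)


def is_attacked(fp, threshold=0.8):
    """Placeholder for the trained macroscopic classifier (assumed threshold)."""
    return fp["bytes"] > 0 and fp["tagged_bytes"] / fp["bytes"] >= threshold


def flows_to_drop(flows, rules):
    """Drop only tagged flows, and only for targets classified as attacked."""
    tagged = tag_flows(flows, rules)
    attacked = {ip for ip, fp in fingerprints(tagged).items() if is_attacked(fp)}
    return [f for f in tagged if f["tagged"] and f["dst_ip"] in attacked]


def flow(dst_ip, src_port, packet_size, nbytes):
    return {"dst_ip": dst_ip, "src_port": src_port,
            "packet_size": packet_size, "bytes": nbytes}


victim, other = "192.0.2.10", "192.0.2.20"
flows = [flow(victim, 123, 468, 46800) for _ in range(9)]      # NTP flood
flows += [flow(victim, 443, 1400, 14000)]                      # benign HTTPS to victim
flows += [flow(other, 123, 468, 468)]                          # stray NTP reply
flows += [flow(other, 443, 1400, 14000) for _ in range(5)]     # benign HTTPS
dropped = flows_to_drop(flows, RULES)

labelled = [(flow(victim, 123, 468, 46800), True) for _ in range(3)]
labelled += [(flow(other, 123, 468, 468), False)]
labelled += [(flow(victim, 443, 1400, 14000), True)]
rule_confidence = confidence(RULES[0], labelled)
```

In this toy run, only the nine NTP flows towards the victim are dropped. The HTTPS flow to the victim survives because it carries no tag, and the single NTP flow to the other target survives because that target is not classified as attacked. This split is what limits the damage of a false positive in either stage.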

Evaluation

We benchmarked five ML-classifiers for the macroscopic model, all of which showed consistently high performance (F1-scores of 0.950 to 0.988). The top-performing algorithm achieved false negative and false positive rates of only 1.2% each. Six of the seven top attack vectors are classified correctly with a probability of 99% or higher.
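For readers less familiar with these metrics, the following sketch shows how they are computed from a confusion matrix. The counts in the example are invented for illustration and are not results from the paper; they are merely chosen so that both error rates come out at 1.2% on a balanced set.

```python
def rates(tp, fp, tn, fn):
    """Standard binary classification metrics from confusion matrix counts."""
    return {
        "false_positive_rate": fp / (fp + tn),  # benign targets flagged as attacked
        "false_negative_rate": fn / (fn + tp),  # attacked targets that were missed
        "precision": tp / (tp + fp),
        "recall": tp / (tp + fn),
        "f1": 2 * tp / (2 * tp + fp + fn),      # harmonic mean of precision and recall
    }


# Invented counts: 1,000 attacked and 1,000 non-attacked targets.
example = rates(tp=988, fp=12, tn=988, fn=12)
```

On a balanced data set with equal false positive and false negative rates, precision, recall, and F1-score coincide, which is why the error rates and the F1-score above tell a consistent story.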

To the best of our knowledge, this work is also the first to be able to quantify DDoS model drift over long time frames and across geographically diverse vantage points due to the large training data sets that can be generated from blackholing traffic at IXPs. Model drift refers to the phenomenon that a model may exhibit poor classification performance when the underlying data changes. For example, a model trained on day one may perform badly on day 60 and even worse later as the underlying attack patterns have changed over time. Model drift is a frequent and well-researched problem in the ML community.

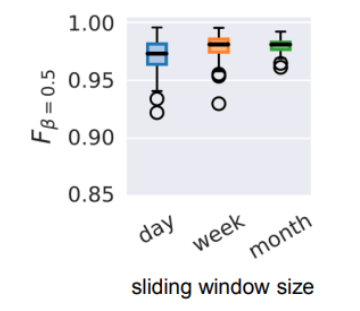

Figure 4 shows the performance of daily retraining with a sliding window size of a day, a week, and a month. One interesting finding here is that the median performance is remarkably stable, but a longer sliding window helps to mitigate negative outliers, that is, days on which classification performance drops because the sliding window used for training lacked a similar attack pattern.
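The retraining scheme can be sketched as follows. The windowing logic reflects the description above, while the 'model' is a deliberately crude stand-in: it merely remembers which attack patterns appeared in the training window and scores a day by the share of that day's patterns it has seen before. The pattern names and the eight-day history are invented for the example.

```python
def sliding_windows(num_days, window):
    """Yield (training_days, test_day) pairs for daily retraining."""
    for test_day in range(window, num_days):
        yield list(range(test_day - window, test_day)), test_day


def daily_scores(patterns_per_day, window):
    """Toy score per day: share of the day's patterns seen in the training window.

    Assumes every day has at least one pattern and window >= 1.
    """
    scores = []
    for train_days, test_day in sliding_windows(len(patterns_per_day), window):
        known = set().union(*(patterns_per_day[d] for d in train_days))
        todays = patterns_per_day[test_day]
        scores.append(len(todays & known) / len(todays))
    return scores


# Invented history: mostly NTP attacks, with DNS attacks on two days.
history = [{"ntp"}, {"ntp"}, {"dns"}, {"ntp"}, {"ntp"}, {"ntp"}, {"dns"}, {"ntp"}]
short_window = daily_scores(history, window=1)
long_window = daily_scores(history, window=5)
```

With a one-day window, the toy score collapses whenever the attack pattern differs from the previous day's; with a five-day window, the rarer DNS pattern is still inside the training window when it reappears, so the outliers vanish.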

For the evaluation of model drift across geographies (for example, training in Europe and classifying in the US) and a lot more details on the method and results, learn more in our paper.

Watch Matthias’ colleague Christoph Dietzel discuss this topic at APNIC 54.

This blog article is a synopsis of a paper published at SIGCOMM’22 (Matthias Wichtlhuber, Eric Strehle, Daniel Kopp, Lars Prepens, Stefan Stegmueller, Alina Rubina, Christoph Dietzel, and Oliver Hohlfeld. IXP scrubber: learning from blackholing traffic for ML-driven DDoS detection at scale. ACM SIGCOMM 2022). You can download a free copy from DE-CIX.

Dr.-Ing. Matthias Wichtlhuber is a senior member of the DE-CIX research team and works on product development, system security, future network architectures for Internet exchange points, and large-scale network data analysis.

