This is the final post in a four-part series on LEO satellites by Ulrich Speidel.

Previous posts: Part one, part two, part three.

In this series, we have looked at what makes Low Earth Orbit (LEO) satellites interesting and what we can expect from the current systems based on constellations, gateway strategy, antennas, bandwidth, and inter-satellite routing. We’ve also posed questions we’d really like the LEO providers to answer. This instalment looks at the implications of the direct-to-site model used by at least two leading contenders, Starlink and OneWeb.

Why opt for a direct-to-site model, and will they support other models as well?

Starlink currently hawks its beta starter kits to individuals rather than organizations such as ISPs, building perhaps the sort of hipster cult that surrounds SpaceX’s equally famous sister company, Tesla. OneWeb’s publicity similarly addresses end users directly. While it’s possible (and in fact, likely) that some work with ISPs and large user organizations is indeed going on, there is very little information in the public domain about it. Commercial sensitivities, perhaps? But there are reasons for asking whether the direct-to-site model makes sense.

One of the reasons the Internet hasn’t completely buckled under YouTube, Netflix, Facebook, and friends so far is that practically all of their content now gets delivered via cloud-based Content Delivery Networks (CDNs) such as Cloudflare, Akamai, Amazon CloudFront, and Microsoft Azure CDN, to name a few. To put it simply: if your device requests content, it generally gets served from a server located close to you. This accelerates the TCP transfers involved and keeps them mostly to the bandwidth-rich links within your own metropolitan area or economy, including the more-or-less dedicated fibre path to your home, if you’re lucky enough to have one.

Such CDNs keep repeat content off the international links: If half a million New Zealanders watch the latest Netflix show, their video feed comes from a server in New Zealand (NZ) and, for almost all of the users here, travels via terrestrial networks to their device. Imagine the additional load on NZ’s international links if the feed had to come from, say, the United States.

Quite how much the Internet’s big sites have come to depend on CDNs became apparent a few days ago, of course, when CDN provider Fastly suffered a major outage. Users were unable to reach the likes of CNN, the White House, or (closer to my home) Radio New Zealand, among many others.

Read: No, the Internet was not ‘down’

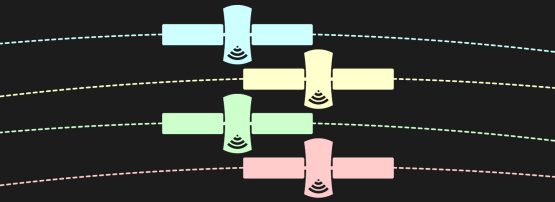

Now, put a user on a direct-to-site satellite link and it’s plainly obvious that the world’s CDN servers are on the other side of the link. So, if 1,000 satellite users want to watch the same show, it has to traverse the satellite 1,000 times.
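A rough sketch of that multiplication, assuming a hypothetical 3 GB show and comparing the direct-to-site case with a hypothetical shared cache on the users’ side of the link. The function name and the show size are illustrative assumptions, not figures from any provider:

```python
def satellite_traffic_gb(viewers: int, show_size_gb: float,
                         shared_local_cache: bool) -> float:
    """Return the data volume (in GB) that crosses the satellite link for one show."""
    if shared_local_cache:
        # A cache behind the link fetches the show once and serves it locally.
        copies_over_satellite = min(viewers, 1)
    else:
        # Direct-to-site: every viewer pulls a separate copy across the link.
        copies_over_satellite = viewers
    return copies_over_satellite * show_size_gb


SHOW_SIZE_GB = 3.0   # assumed size of one show, for illustration only
VIEWERS = 1000       # the 1,000 satellite users from the text

direct_to_site_gb = satellite_traffic_gb(VIEWERS, SHOW_SIZE_GB, shared_local_cache=False)
via_shared_cache_gb = satellite_traffic_gb(VIEWERS, SHOW_SIZE_GB, shared_local_cache=True)

print(f"Direct-to-site: {direct_to_site_gb:.0f} GB over the satellite")
print(f"Shared cache:   {via_shared_cache_gb:.0f} GB over the satellite")
```

Under these assumptions the satellite carries a thousand times more traffic for the same show than it would with a cache on the users’ side of the link.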

As we saw in part three of our series, the satellites remain likely bottlenecks. For comparison: the current lit capacity of the Southern Cross Cable network is upwards of 5.4 Tb/s, while the Hawaiki cable between NZ and Hawaii is 10 Tb/s. Together with upgrades planned soon, this comes awfully close to Starlink’s quoted worldwide system capacity of 23.7 Tb/s, or somewhere between 15 and 20 Gb/s per satellite. But Australia and New Zealand between them have a population of just over 30 million, and a lot of CDNs with a local presence, whereas Starlink’s capacity has to be shared among billions of unconnected and under-connected users out there waiting for service.
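The arithmetic behind this comparison can be sketched as follows. The capacity figures are the ones quoted above. The 5 Mb/s video stream rate is an assumption made purely for illustration, and the implied satellite count is simply the quoted total divided by the quoted per-satellite range, not a statement about the actual constellation:

```python
# Figures quoted in the text
SOUTHERN_CROSS_TBPS = 5.4             # lit capacity, "upwards of"
HAWAIKI_TBPS = 10.0
STARLINK_TOTAL_TBPS = 23.7            # quoted worldwide system capacity
STARLINK_PER_SAT_GBPS = (15.0, 20.0)  # quoted per-satellite range

# Assumption for illustration only: bit rate of a single video stream
ASSUMED_STREAM_MBPS = 5.0


def streams_per_satellite(capacity_gbps: float, stream_mbps: float) -> int:
    """Number of simultaneous streams that fit into one satellite's capacity."""
    return int(capacity_gbps * 1000 // stream_mbps)


# Two cables serving roughly 30 million people versus one worldwide system
cable_total_tbps = SOUTHERN_CROSS_TBPS + HAWAIKI_TBPS
cable_share = cable_total_tbps / STARLINK_TOTAL_TBPS

# Satellite count implied by dividing the quoted total by the per-satellite range
implied_satellites = tuple(
    round(STARLINK_TOTAL_TBPS * 1000 / gbps) for gbps in STARLINK_PER_SAT_GBPS
)

# Simultaneous streams per satellite at the assumed stream rate
streams = tuple(
    streams_per_satellite(gbps, ASSUMED_STREAM_MBPS) for gbps in STARLINK_PER_SAT_GBPS
)

print(f"Two cables: {cable_total_tbps:.1f} Tb/s ({cable_share:.0%} of the Starlink total)")
print(f"Implied satellite count: {implied_satellites}")
print(f"Streams per satellite at {ASSUMED_STREAM_MBPS:.0f} Mb/s: {streams}")
```

Even before the planned upgrades, the two cables alone add up to roughly two thirds of the quoted Starlink total, and at the assumed stream rate a single satellite would saturate with a few thousand simultaneous viewers in its footprint.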

Unfortunately, CDNs don’t normally establish a local point of presence unless the number of local users makes it worthwhile for them — something that is already keenly felt in many smaller Pacific Island economies. If CDNs can’t offer a server for an island with thousands of users, they certainly won’t put in a personal CDN server just for you!

Many economies in the region, from very small to giant, either operate monopoly ISPs or keep a tight rein on the Internet for reasons best discussed elsewhere. It seems likely that most of these economies will want to prohibit direct-to-site satellite solutions, even if this thwarts tangible connectivity relief to smaller and remote users. Will higher bandwidth services be offered to local ISPs where direct-to-site solutions are illegal?

Some might insist on local users being supplied via local and filtered gateways. So there’s a question to ask of LEO providers here as to what compromises they have been willing to enter into in order to sell into certain markets or to operate gateways there: Will I be able to do a Google search in the South China Sea if my cruise ship connects to your system from there, or post Facebook updates from my yacht in Papua New Guinean waters?

If a visiting Pacific Islander in New Zealand or Australia buys a kit online at the end of 2021 (travel restrictions notwithstanding), will it work in the Pacific Islands upon their return? Will local customs even allow the hardware to cross the border?

Past, present, and future

Satellite Internet in general has a history of unhappy end users and local ISPs who think that they pay too much for a service that doesn’t entirely meet their needs. There has been some progress over the years, from the introduction of Medium Earth Orbit systems to the recent generation of high-throughput Geosynchronous Equatorial Orbit satellites that offer more bandwidth at the same latency as before, but progress has not kept pace with that seen on fibre connections.

LEO systems are another big step forward. As of 2021, LEO Internet has clearly arrived and we will hear more about it as time progresses, technology matures, and new technology makes better use of the existing resource.

Currently, we have only one LEO provider offering service, and it isn’t offering its product universally yet. It rightly warns its beta users that there will be gaps in the service and that achievable data rates will vary. As Starlink’s constellation grows, the gaps should all but disappear across the service, but as its user base grows, what we know so far suggests that realistically achievable data rates may stagnate or even decline. It remains to be seen at which user density level there will be a meeting of price, user tolerance, and technical achievability. Similar questions and challenges await OneWeb and any competitors that may follow in Starlink’s footsteps.

The known parameters of the current and proposed systems indicate that there are limits to what LEO systems can deliver. On the one hand, they will no doubt be able to get service to some places that are currently hard to reach (hello Scott Base!). On the other hand, it’ll still take another quantum leap in LEO technology to get affordable Internet access to a significant portion of the two billion people in the Asia Pacific region that remain offline.

No doubt, the technology will continue to evolve, but will it be able to keep pace with the growth in data usage?

Dr Ulrich Speidel is a senior lecturer in Computer Science at the University of Auckland with research interests in fundamental and applied problems in data communications, information theory, signal processing and information measurement. The APNIC Foundation has supported his research through its ISIF Asia grants.

The views expressed by the authors of this blog are their own and do not necessarily reflect the views of APNIC. Please note a Code of Conduct applies to this blog.