A group of us within the Internet Engineering Task Force (IETF) think the time has come to give inter-domain multicast another try.

To that end, we’re proposing and prototyping an open standards-based architecture for how to make multicast traffic manageable and widely available, from any provider willing to stand up some content and some standardized services (like my employer, Akamai), delivered through any network willing to forward the traffic (running their own controlled ingest system), to any end-user viewing a web page or running an application that can make use of the traffic.

As such, we’re reaching out to the operator community to make sure we get it right, and that we build such open standards and their implementations into something that can actually be deployed to our common advantage. That’s what this post is about.

Why multicast?

When you do the maths, even at just a rough order-of-magnitude level, it gets pretty clear that today’s content delivery approach has a scaling problem, and that it’s getting worse.

Caching is great. It solves most of the problem, and it has made the bandwidth pinch into something we can live with for the last few decades. But physics is catching up with us. At some point, adding another 20% every year to the server footprint just isn’t the right answer anymore.

At Akamai, as of mid-2020, our latest publicly stated record for the peak traffic delivered is 167Tbps. (That number generally goes up every year, but that’s where we are today.) Maybe that sounds like a lot to some folk, but when you put that next to the total demand, you can see the mismatch. When we’re on the hook for delivering our share of 150GB to 35M people, it turns out to take a grand total of almost three days if you can do it at 167Tbps.
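As a rough check on that figure, the arithmetic can be reproduced in a few lines. This is only a back-of-the-envelope sketch: it assumes decimal units (1GB = 10^9 bytes, 1Tbps = 10^12 bits per second), ignores protocol overhead, and uses illustrative variable names.

```python
# Back-of-the-envelope check; decimal units and zero overhead are assumptions.
PAYLOAD_BYTES = 150e9           # 150GB per recipient
RECIPIENTS = 35e6               # 35M people
PEAK_BITS_PER_SECOND = 167e12   # 167Tbps

def delivery_days(payload_bytes, recipients, bits_per_second):
    """Days needed to unicast the payload to every recipient at a fixed rate."""
    total_bits = payload_bytes * 8 * recipients
    return total_bits / bits_per_second / 86400  # 86400 seconds per day

days = delivery_days(PAYLOAD_BYTES, RECIPIENTS, PEAK_BITS_PER_SECOND)
print(f"{days:.1f} days")  # prints "2.9 days"
```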

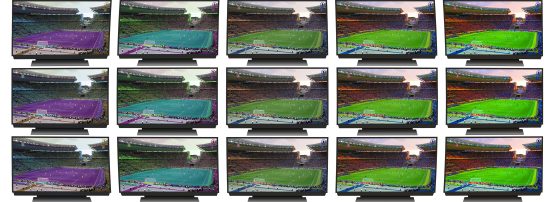

I’ll leave it as an exercise for the reader to calculate how the big broadcast video events will fare on 4K TVs as cord-cutting continues to get more popular. Scaling for these kinds of events is not just an Akamai problem, it’s an Internet delivery problem.

And these large peak events would generally be solvable if you could just get those bits replicated in the network. In many cases, like with Gigabit Passive Optical Networks (GPON) and cable, it would even mean fewer bits on the wire, because a single packet sent in the right way can reach a bunch of users at once.

Didn’t we try this before?

We think it’s possible to do it better this time around.

In the architecture we’re proposing, network operators set up limits that make sense for their networks, and then automated network processes keep their subscribers inside those limits.

There are no special per-provider peering arrangements needed. Discovery for tunnels and metadata provided by the sender is anchored on the DNS, so there’s no dependency on any new shared public infrastructure.

Providers can stand up content and have their consumers try to subscribe to it, and networks that want to take advantage of the shareable traffic footprint can enable transport for that traffic in the places where it makes sense for those networks.

Multicast delivery, in our view, is a supplemental add-on to unicast delivery that can make things more efficient where it’s available. Our plan at Akamai, and the one we recommend for other content providers, is to use unicast until you can see that some content is popular enough, and then have the receivers start trying to get that content with multicast. If the multicast gets through, great, use that instead while it lasts. If not, keep on using unicast.
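A minimal sketch of that receiver-side decision might look like the following. The function name, the `is_popular` signal (which we assume the content provider supplies somehow) and the `multicast_arriving` flag are all hypothetical, chosen only to illustrate the fallback logic.

```python
def choose_transport(is_popular: bool, multicast_arriving: bool) -> str:
    """Pick the transport for the next piece of content.

    is_popular: hypothetical hint that the content is worth trying over multicast.
    multicast_arriving: whether packets are actually showing up after the join.
    """
    if not is_popular:
        return "unicast"       # not popular enough to attempt a join
    if multicast_arriving:
        return "multicast"     # the join got through; use it while it lasts
    return "unicast"           # join attempted but nothing arrives; keep using unicast

# Walk through the three situations described above.
for popular, arriving in [(False, False), (True, True), (True, False)]:
    print(popular, arriving, choose_transport(popular, arriving))
```

The receiver never depends on multicast being available, which is what lets the network decide how much of it to allow.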

The point is that the network can make the choice on how much multicast traffic to allow through, and expect receivers to do something reasonable whether or not they can get that traffic. Multicast is a nice-to-have, but it can save you a lot of bandwidth in the places where you’re pinched for bandwidth, making the end user’s quality of experience better both for that content and for the competing content that’s maybe less shareable.

How does it work?

Of course, there’s success already with running multicast when you control all the pieces, especially for TV services.

But our approach is also trying to solve things like software and video game delivery, and to avoid the need for any new kinds of service from the network in order to support various vendors’ proprietary protocols for multicast adaptive bitrate (ABR) video delivered over the top. We’d even like it to extend into domains that we think might be on the horizon, such as point-cloud delivery for VR, where the transport protocols aren’t really nailed down yet. For this reason, the network would treat inter-domain multicast streams as generic multicast User Datagram Protocol (UDP) traffic.

But just because it’s generic UDP traffic doesn’t mean the networks won’t know anything about it. By standardizing the metadata, we hope to avoid the need for the encoding to happen within the network, so the network can focus on transparently (but safely and predictably) getting the packets delivered, without having to run software that encodes and decodes the application layer content.

It’s early days for some of these proposed standards, but we’ve got a work-in-progress Internet draft, Discovery Of Restconf Metadata for Source-specific multicast (DORMS), defining an extensible specification for publishing metadata. That metadata specifically includes the maximum bitrate and the maximum segment size (MSS) as part of Circuit-Breaker Assisted Congestion Control (CBACC), as well as per-packet content authentication and loss detection via Asymmetric Manifest-Based Integrity (AMBI).
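To illustrate why a published maximum bitrate is useful to an operator, here is a toy admission check. It is not the algorithm from the CBACC draft; the greedy policy, the function name and the example addresses and rates are all assumptions made for illustration.

```python
def admit_flows(advertised_max_bps, capacity_bps):
    """Greedily admit flows, in the order given, while the sum of their
    advertised maximum bitrates stays within the operator's limit."""
    admitted, used = [], 0
    for flow, max_bps in advertised_max_bps.items():
        if used + max_bps <= capacity_bps:
            admitted.append(flow)
            used += max_bps
    return admitted

# Hypothetical (S,G) flows with sender-advertised maximum bitrates.
flows = {
    ("198.51.100.10", "232.1.1.1"): 8_000_000,
    ("198.51.100.10", "232.1.1.2"): 25_000_000,
    ("203.0.113.7", "232.1.1.1"): 4_000_000,
}
print(admit_flows(flows, capacity_bps=15_000_000))
```

With a 15Mbps limit, the 25Mbps flow is left out and its receivers simply carry on with unicast.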

This approach also offloads content discovery to the endpoints. That happens at the application layer, and the app comes up with an answer in the form of a (Source IP, Group IP) pair, also called an (S,G), in the global address space, which the app then communicates to the network by issuing a standard Internet Group Management Protocol (IGMP) or Multicast Listener Discovery (MLD) membership report. (PS: We’re also working on getting this capability into browsers for use by web pages.)
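For a native application, issuing that membership report usually comes down to a source-specific join on a UDP socket. The sketch below is Linux-specific and IPv4-only: the option number 39 and the field order of `struct ip_mreq_source` are the Linux values and differ on other platforms, and the addresses and port in the usage note are placeholders.

```python
import socket

# Linux option number; some Python builds also expose this constant directly.
IP_ADD_SOURCE_MEMBERSHIP = getattr(socket, "IP_ADD_SOURCE_MEMBERSHIP", 39)

def build_mreq_source(group: str, source: str, interface: str = "0.0.0.0") -> bytes:
    """Pack a Linux-layout struct ip_mreq_source: group, interface, source."""
    return (socket.inet_aton(group)
            + socket.inet_aton(interface)
            + socket.inet_aton(source))

def join_ssm(source: str, group: str, port: int) -> socket.socket:
    """Open a UDP socket and join the (S,G); the kernel then sends the
    source-specific membership report on the application's behalf."""
    sock = socket.socket(socket.AF_INET, socket.SOCK_DGRAM, socket.IPPROTO_UDP)
    sock.setsockopt(socket.SOL_SOCKET, socket.SO_REUSEADDR, 1)
    sock.bind(("", port))
    sock.setsockopt(socket.IPPROTO_IP, IP_ADD_SOURCE_MEMBERSHIP,
                    build_mreq_source(group, source))
    return sock
```

A receiver would call something like `join_ssm("198.51.100.10", "232.1.1.1", 5001)` and then read datagrams from the returned socket, falling back to unicast if none arrive.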

To support inter-domain multicast, this architecture relies on source-specific multicast, so that no coordination between senders is needed to get unique stream assignment; choosing a group is purely a sender-owned decision. All the groups in 232.0.1.0 through to 232.255.255.255 and FF3E::8000:0 through to FF3E::FFFF:FFFF from different sources don’t collide with each other at the routing level. (Any-source multicast was one of the big reasons that multicast wound up with a false start last time around, since it doesn’t scale well for inter-domain operation.)
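As a small illustration, a receiver or a network element could sanity-check that a group address falls inside those ranges before acting on it. This sketch uses only Python's standard `ipaddress` module; the function name is made up for the example.

```python
import ipaddress

# Range boundaries taken from the text above.
SSM_V4_FIRST = ipaddress.IPv4Address("232.0.1.0")
SSM_V4_LAST = ipaddress.IPv4Address("232.255.255.255")
SSM_V6_FIRST = ipaddress.IPv6Address("ff3e::8000:0")
SSM_V6_LAST = ipaddress.IPv6Address("ff3e::ffff:ffff")

def in_global_ssm_range(group: str) -> bool:
    """True if the group address lies in the IPv4 or IPv6 range quoted above."""
    addr = ipaddress.ip_address(group)
    if addr.version == 4:
        return SSM_V4_FIRST <= addr <= SSM_V4_LAST
    return SSM_V6_FIRST <= addr <= SSM_V6_LAST

# Two senders picking the same group still form two distinct (S,G) channels.
channels = {("198.51.100.10", "232.1.1.1"), ("203.0.113.7", "232.1.1.1")}
print(in_global_ssm_range("232.1.1.1"), len(channels))
```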

Based on the source IP in the (S,G), the network can, using DNS Reverse IP AMT Discovery (DRIAD), look up where to connect an Automatic Multicast Tunneling (AMT) tunnel for ingesting the traffic, so that it comes into the network at that point as normal unicast traffic, and then gets forwarded from there as multicast. (The source IP is also used by the receiver and optionally the network to get per-packet authentication of the content.)
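The first step of that lookup is just turning the source IP into its reverse-DNS name, which is where the AMTRELAY record (DNS record type 260) is published. The sketch below only builds the query name with the standard library; actually sending the query would need a DNS library, which is left out here, and the function name and example addresses are illustrative.

```python
import ipaddress

AMTRELAY_RRTYPE = 260  # DNS resource record type used for AMT relay discovery

def driad_query_name(source_ip: str) -> str:
    """Return the reverse-DNS name to query for the sender's AMTRELAY record."""
    return ipaddress.ip_address(source_ip).reverse_pointer

print(driad_query_name("198.51.100.10"))  # prints "10.100.51.198.in-addr.arpa"
print(driad_query_name("2001:db8::1"))    # an ip6.arpa name, one label per nibble
```

Because the sender controls that part of the reverse DNS tree, it can point networks at its relays without any new shared infrastructure.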

Get involved

We’ve reached the stage where we’re seeking involvement from network operators, to make sure we end up with something that will work well for everybody. There are a couple of main things:

- We’re soliciting feedback on our specs. Mostly we’d love to get comments sent to the mboned mailing list at the IETF for our three active drafts in that working group (DORMS, AMBI, and CBACC), or in the issues list of our proposed W3C multicast receiver API.

- We’ll be doing some trials this year to try some of this work out and we’d love to get more partners involved. We have a prototype for the basic DRIAD ingest path, but we’d very much like to give it a spin with some operators who might want to get this stuff working, and make sure it’ll meet their needs.

So if this sounds interesting and you’re operating a network, please reach out to my colleague, James Taylor, or me. We’d love to answer any questions you have, and to join forces to make this vision a reality.

Jake Holland is a Principal Architect at Akamai.

The views expressed by the authors of this blog are their own and do not necessarily reflect the views of APNIC.