Latency is a critical determinant of the quality of experience for many Internet applications. Google and Bing report that a few hundred milliseconds of additional latency in delivering search results causes a significant reduction in search volume and, hence, revenue. In online gaming, tens of milliseconds make a huge difference, driving gaming companies to build specialized networks aimed at reducing latency.

Present efforts at reducing latency, however, fall far short of the lower bound dictated by the speed of light in vacuum[1]. What if the Internet worked at the speed of light? Setting aside, for the moment, the technical challenges and cost of designing for that goal, let us briefly consider its implications.

A speed-of-light Internet would not only dramatically enhance Web browsing, gaming, and various forms of “tele-immersion”, but could also open the door for new, creative applications. We therefore set out to understand and quantify the gap between the latencies we typically observe today and what is theoretically achievable.

Our largest set of measurements was performed between popular Web servers and PlanetLab nodes, a set of generally well-connected machines in academic and research institutions across the world[2]. We evaluated our measured latencies against the c-latency lower bound, that is, the time needed to traverse the geodesic distance between the two endpoints at the speed of light in vacuum.
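As a rough illustration of the c-latency definition, the sketch below computes the one-way time for light in vacuum to cover the distance between two endpoints given as latitude/longitude. It assumes a spherical Earth (mean radius 6371 km) and the haversine great-circle formula as a stand-in for the geodesic; an ellipsoidal geodesic would differ slightly. The function names are hypothetical, and whether one compares against one-way or round-trip c-latency depends on the metric being inflated.

```python
import math

# Speed of light in vacuum in km/s (exact by SI definition).
C_VACUUM_KM_PER_S = 299_792.458
# Assumption: spherical Earth with the mean radius, not an ellipsoid.
EARTH_RADIUS_KM = 6371.0


def great_circle_km(lat1, lon1, lat2, lon2):
    """Haversine great-circle distance between two points given in degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    # Clamp to guard against floating-point values slightly above 1 near antipodes.
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(min(1.0, a)))


def c_latency_ms(lat1, lon1, lat2, lon2):
    """One-way time (ms) for light in vacuum to cover the great-circle distance."""
    return great_circle_km(lat1, lon1, lat2, lon2) / C_VACUUM_KM_PER_S * 1000.0
```

For example, a quarter of the equator, `c_latency_ms(0, 0, 0, 90)`, comes to roughly 33.4 ms one way.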

Our measurements reveal that the Internet is much, much slower than it could be: fetching just the HTML of the landing pages of popular websites is (in the median) ~37 times worse than c-latency. Note that this HTML is typically only tens of kilobytes, so bandwidth constraints are largely irrelevant in this context.
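The "~37 times worse" figure is an inflation ratio: the measured latency for a client-server pair divided by that pair's c-latency, summarized here by the median across pairs. A minimal sketch of that summary follows, assuming each sample is a hypothetical `(measured_ms, c_latency_ms)` pair; the helper names are illustrative, not taken from the paper's tooling.

```python
from statistics import median


def inflation(measured_ms, c_latency_ms):
    """Ratio of an observed latency to the c-latency lower bound for the same pair."""
    if c_latency_ms <= 0:
        raise ValueError("c-latency must be positive (endpoints must not coincide)")
    return measured_ms / c_latency_ms


def median_inflation(samples):
    """Median inflation over an iterable of (measured_ms, c_latency_ms) pairs."""
    return median(inflation(m, c) for m, c in samples)
```

With made-up samples `[(370.0, 10.0), (50.0, 10.0), (1000.0, 10.0)]`, the per-pair ratios are 37, 5, and 100, so the median inflation is 37.0; the median keeps a few extreme pairs from dominating the summary.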

Where does this huge slowdown come from?

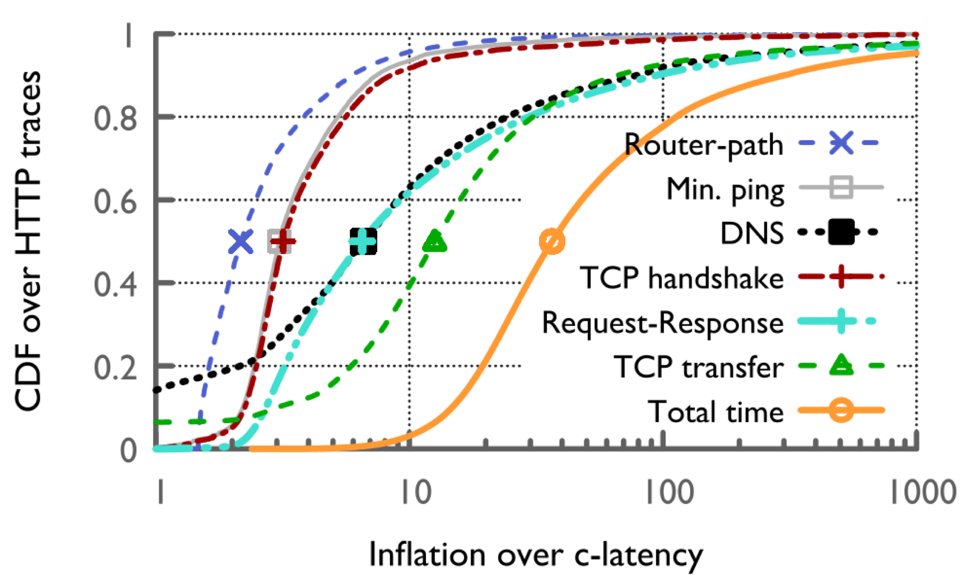

The figure above shows a breakdown of the latency inflation of HTTP connections. As expected, the network protocol stack (DNS, TCP handshake, and TCP slow-start) contributes to the Internet’s latency inflation. Note, however, that the infrastructure itself is also much slower than it could be: the ping time is more than 3x inflated[3].
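To see why these protocol components multiply the infrastructure's inflation, it helps to count round trips. The sketch below is a deliberately simplified model, not the paper's methodology: it assumes one RTT for DNS, one for the TCP handshake, and then slow-start rounds in which the congestion window starts at 10 segments (the initial window from RFC 6928) of 1460 bytes and doubles every RTT. It also assumes every round trip, including the DNS lookup, has the same RTT, and ignores TLS, server think time, delayed ACKs, and loss. All names are hypothetical.

```python
def slow_start_rounds(size_bytes, init_cwnd_segments=10, mss_bytes=1460):
    """Round trips to deliver size_bytes if cwnd doubles each RTT (no loss)."""
    cwnd = init_cwnd_segments
    delivered = cwnd * mss_bytes  # the first flight answers the HTTP request
    rounds = 1
    while delivered < size_bytes:
        cwnd *= 2
        delivered += cwnd * mss_bytes
        rounds += 1
    return rounds


def fetch_time_ms(rtt_ms, size_bytes, dns_rtts=1, init_cwnd_segments=10, mss_bytes=1460):
    """Modeled fetch time: DNS + TCP handshake (1 RTT) + request/slow-start rounds."""
    handshake_rtts = 1
    data_rtts = slow_start_rounds(size_bytes, init_cwnd_segments, mss_bytes)
    return rtt_ms * (dns_rtts + handshake_rtts + data_rtts)
```

Under these assumptions, a 50,000-byte page needs three data rounds, so `fetch_time_ms(10.0, 50_000)` gives 50.0 ms. Because every term in the model is a multiple of `rtt_ms`, lowering the RTT by some factor lowers the modeled fetch time by the same factor.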

In light of these measurements, how should the networking research community reduce the Internet’s large latency inflation? Improvements to the protocol stack are certainly necessary, and are addressed by many efforts across industry and academia. What is often ignored, however, is the infrastructural factor.

If the 3x slowdown from the infrastructure were eliminated, every round trip would be 3x faster. Since all the protocols above run over these round trips, we could immediately cut the latency inflation from ~37x to around 10x, without any protocol modifications.

Further, for applications such as gaming, infrastructural improvements are the only way to reduce the network’s contribution to large latencies. Hence, we believe reducing latency at the lowest layer is of utmost importance towards the goal of a speed-of-light Internet.

We encourage interested readers to read our paper to understand the details of our measurement work and results, and visit our website to learn more about our ongoing work towards building a speed-of-light Internet.

1. The same is true even when we use the speed of light in fibre as the baseline.

2. For measurements involving actual end-users, please refer to our paper. In general, results from those data sets showed even greater latency inflation.

3. These results are robust against various factors including geolocation errors, transfer sizes, client and server distances, and congestion. Please refer to the paper for more details.

The views expressed by the authors of this blog are their own and do not necessarily reflect the views of APNIC. Please note a Code of Conduct applies to this blog.

The concern highlighted here has a major impact on trust in the Internet. As life depends more and more on the Internet, any slowness will start to affect quality of life. Thanks to the research team for this paper. I would be interested to know whether, from the data sources you collected, it is possible to identify the country each website primarily targets and analyze how slow it is from a country perspective.

We have performed experiments from over 103 PlanetLab nodes located in different countries, and we also identified where each Web server is located. Every website in our URL list is tested from all of our vantage points. Section 3.5 of the paper examines how our results differ across geographies, i.e., countries. We also have data from real users distributed across the globe, but this dataset is smaller and some countries have very few users in it, so we haven’t yet used it to draw conclusions about latencies in different countries. We will, however, try to enlarge this real-user dataset.

Even if we had a c-speed Internet, browsing would still suck. There is so much other cruft going on with most public webpages: ads that need to be downloaded, beacons that fire for analytics, and silly things like the mediation services where different companies bid for your ad slot. The faster we make it, the more they will clog it. I think the only solution is to have the browser abort any page that is not rendered within 1.5 seconds. Android does something like this for slow apps.

Your own site takes over 6 seconds to load…

105 requests just to load this article? I think we know why it is slow.

Note that in our measurements we fetched just the HTML of the landing pages of the Web sites. So our analysis shows the Internet is slow even when you’re downloading a single object! On top of that, Web sites load many more objects with complex dependencies, as you pointed out.

The main problem with most webpages is all the tracking and ads, which cause lots of requests to different servers, accumulating all their latencies and being bottlenecked by the slowest one.

Were these measurements performed in an IPv4 environment? I would like to know how latency contributors such as DNS, the TCP handshake, and router paths behave in an IPv6-enabled network. Do they show the same results?

Yes, these results are obtained in an IPv4 environment.

Section 5 of the paper analyzes inflation in minimum ping times over both IPv4 and IPv6 using RIPE Atlas probes. You can also see my colleague Bala’s answer to a similar question here: https://labs.ripe.net/Members/mirjam/why-is-the-internet-so-slow/