Modern data centres increasingly rely on the extended Berkeley Packet Filter (eBPF) for fine-grained control over networking, security, and diagnostics. However, deploying and managing eBPF programs at scale comes with challenges, from lifecycle coupling with the kernel to inconsistent interfaces across versions.

A recent SIGCOMM paper from Meta, in collaboration with Carnegie Mellon University, chronicles how Meta addresses these challenges and tackles the question: how can eBPF programs be developed easily and quickly at scale?

In this post, I’ll discuss NetEdit, a framework that orchestrates eBPF programs across millions of servers without compromising performance or availability, along with the mechanisms we proposed as part of the study and how they performed in testing.

Why eBPF is valuable

This shift towards eBPF is driven by the benefits that eBPF’s specialization enables. Table 1 highlights several of the benefits we have observed over our five-year deployment:

| Tuning feature | Infra win | Service win |
|---|---|---|
| Initial CWND per connection | 30% faster image generation; 30% drop in cross-region P99 latency for storage app | |
| TCP receiver window per packet | 90% drop in retransmits for storage app | 100% faster storage reads |
| Jumbo MSS tuning | 6% lower network utilization for ads | 6% CPU saving for ads |
| BPF-based DCTCP for intra-region connections | 75% drop in network retransmits | 40% improvement in P99 latency for commerce and search apps |

Although such benefits are impressive, there are many significant challenges in developing these programs, such as poor documentation, inconsistent eBPF behaviour, and constantly changing APIs.

Challenges in scaling eBPF

Ideally, eBPF development would reuse the same well-vetted software engineering practices already used in data centres and clouds: eBPF programs should be highly configurable to adapt to a large set of services, and should be continuously integrated and continuously deployed into production infrastructure.

Unfortunately, the lifecycle of eBPF programs is configured through kernel-exposed interfaces and managed using kernel heuristics — this fate-sharing relationship restricts developer flexibility and agility.

NetEdit design goals

For any large-scale online service, development is agile, flexible, and rapidly evolving. Consequently, infrastructure optimizations that support these services must also evolve rapidly to meet changing demands.

The current eBPF ecosystem, while enabling the design of infrastructure optimizations, does not easily lend itself to agility, flexibility, and rapid evolution. In particular, the very property that makes eBPF attractive — direct access to the kernel — also makes it challenging to use at scale, for these reasons:

- Kernel-Heuristics: Since eBPF programs are directly embedded within the kernel, they are subject to the kernel’s lifecycle management heuristics, such as deleting programs that appear unused. However, some eBPF programs may appear unused from the kernel’s perspective, even though they are still needed by services.

- Kernel-BPF Interface: As the kernel evolves rapidly, the interfaces change frequently and differ across kernel versions. At Meta, we run over 10 kernel versions, which makes eBPF management especially challenging at scale.

- BPF-BPF Interface: Support for combining eBPF programs inherits the kernel’s inconsistency and frequent changes.

Decoupling eBPF from the Kernel with BPFAdapter

To spare developers from having to understand the nuances of the BPF-kernel interface, which differ across attach points and kernel versions, we devised BPFAdapter.

Fundamentally, BPFAdapter is an abstraction that standardizes the management of eBPF program operations, such as attaching or configuring across different hookpoints, while hiding the complexity of each attach type. It provides a uniform interface through which the control plane can manage the lifecycle of arbitrary eBPF programs without needing to understand hookpoint-specific details.
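To make the idea of a uniform interface concrete, here is a minimal Python sketch of the control-plane side, assuming a hypothetical design in which each attach type gets its own adapter class behind one common interface. The class names (`HookAdapter`, `CgroupSockOpsAdapter`, `TcAdapter`), the `kernel_supports_links` flag, and the mechanism labels are illustrative only; they are not NetEdit's API, and a real adapter would call into libbpf or the bpf() syscall instead of updating a dictionary.

```python
from abc import ABC, abstractmethod
from dataclasses import dataclass


@dataclass
class AttachRecord:
    """What the control plane learns about one attachment (hypothetical)."""
    program: str
    target: str
    mechanism: str  # hookpoint-specific detail, recorded but never branched on


class HookAdapter(ABC):
    """Uniform interface the control plane uses for every hookpoint."""

    def __init__(self) -> None:
        self.attached: dict[tuple[str, str], AttachRecord] = {}

    @abstractmethod
    def _mechanism(self) -> str:
        """Return the attach path for this hookpoint (hidden from callers)."""

    def attach(self, program: str, target: str) -> AttachRecord:
        key = (program, target)
        if key not in self.attached:  # idempotent: re-attaching is a no-op
            self.attached[key] = AttachRecord(program, target, self._mechanism())
        return self.attached[key]

    def detach(self, program: str, target: str) -> bool:
        return self.attached.pop((program, target), None) is not None


class CgroupSockOpsAdapter(HookAdapter):
    def __init__(self, kernel_supports_links: bool) -> None:
        super().__init__()
        self.kernel_supports_links = kernel_supports_links

    def _mechanism(self) -> str:
        # Hypothetical: choose whichever attach path the running kernel supports.
        return "cgroup-link" if self.kernel_supports_links else "cgroup-legacy-attach"


class TcAdapter(HookAdapter):
    def _mechanism(self) -> str:
        return "tc-filter"


ADAPTERS: dict[str, HookAdapter] = {
    "sockops": CgroupSockOpsAdapter(kernel_supports_links=True),
    "tc": TcAdapter(),
}


def attach(hook: str, program: str, target: str) -> AttachRecord:
    """Control-plane entry point: contains no hookpoint-specific logic."""
    return ADAPTERS[hook].attach(program, target)
```

The point of this shape is that `attach()` never inspects the hookpoint type; supporting a new hookpoint or kernel version means adding or changing an adapter, not touching control-plane code.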

BPFAdapter provides:

- Explicit garbage collection — freeing programs from kernel object heuristics

- Retroactive policy enforcement (for example, bpf-iter) — triggering programs during restart

- Key primitives for efficiency (lazy loading) and storage (shared maps)
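The contrast between the kernel's implicit lifetime rules and explicit garbage collection can be sketched as follows. The kernel frees a BPF object once its last reference (such as a file descriptor, link, or bpffs pin) goes away, which is how a program that a service still needs can disappear. In the hypothetical registry below, the control plane instead keeps each program until it explicitly decides the program is no longer desired; programs are loaded only when first requested (lazy loading), and a map shared by several programs lives until its last user is collected. All names are illustrative and are not NetEdit's implementation.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class SharedMap:
    name: str
    users: set[str] = field(default_factory=set)


class ProgramRegistry:
    """Hypothetical control-plane registry with explicit GC and lazy loading."""

    def __init__(self, loader: Callable[[str], str]) -> None:
        self._loader = loader              # would wrap a real BPF loader
        self.loaded: dict[str, str] = {}   # program name -> handle
        self.desired: set[str] = set()
        self.maps: dict[str, SharedMap] = {}

    def request(self, prog: str, shared_maps: tuple[str, ...] = ()) -> str:
        """Mark a program as desired; load it only on first request."""
        self.desired.add(prog)
        if prog not in self.loaded:        # lazy loading
            self.loaded[prog] = self._loader(prog)
        for m in shared_maps:              # one map object, many programs
            self.maps.setdefault(m, SharedMap(m)).users.add(prog)
        return self.loaded[prog]

    def release(self, prog: str) -> None:
        """The control plane, not a kernel heuristic, decides a program is unneeded."""
        self.desired.discard(prog)

    def collect(self) -> list[str]:
        """Explicit garbage-collection pass: unload anything no longer desired."""
        garbage = [p for p in self.loaded if p not in self.desired]
        for prog in garbage:
            del self.loaded[prog]
            for name in list(self.maps):
                self.maps[name].users.discard(prog)
                if not self.maps[name].users:
                    del self.maps[name]    # last user gone: free the shared map
        return garbage
```

Nothing is unloaded as a side effect of a process exiting or a handle going out of scope; removal happens only when `collect()` runs, which makes program lifetime an explicit, auditable control-plane decision.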

Dynamic policy control with PolicyEngine

NetEdit’s PolicyEngine provides a general and flexible approach to specifying configuration and policy that dictate when and how to tune the network for different services. The PolicyEngine operates at the granularity of tuningFeature, which is an abstraction that encapsulates the set of eBPF programs that collectively achieve a specific high-level network function.

The PolicyEngine dynamically evaluates each configuration policy to determine whether to load the eBPF programs associated with a tuningFeature, and populates a BPF map with the appropriate configuration. In particular, the PolicyEngine reacts to changes in both the set of deployed services and the set of active connections. In our design, we prioritize stability and debuggability over optimality.

Loading programs dynamically based on the services that are actually running sets NetEdit apart from other eBPF managers such as Cilium.
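The PolicyEngine's evaluation step can be sketched as a pure function, assuming a hypothetical policy format: each tuningFeature lists the eBPF programs it needs and a per-service configuration value, and the engine recomputes which programs to load and what the BPF map should contain whenever the deployed services or active connections change. The BPF map is modelled as a plain dictionary keyed by (feature, connection), and the feature, program, and service names in this sketch are invented; NetEdit's actual policy language is not described in this post.

```python
from dataclasses import dataclass


@dataclass
class TuningFeature:
    """Hypothetical: a named bundle of eBPF programs plus per-service config."""
    name: str
    programs: tuple[str, ...]
    config_by_service: dict[str, int]  # service -> value written into the map


@dataclass
class Connection:
    conn_id: int
    service: str


def evaluate(features, deployed_services, connections):
    """Recompute which programs to load and what the BPF map should hold."""
    to_load: set[str] = set()
    bpf_map: dict[tuple[str, int], int] = {}  # stand-in for a real BPF map
    for feat in features:
        active = set(feat.config_by_service) & set(deployed_services)
        if not active:
            continue  # no deployed service wants this feature: leave it unloaded
        to_load.update(feat.programs)
        for conn in connections:
            if conn.service in active:
                bpf_map[(feat.name, conn.conn_id)] = feat.config_by_service[conn.service]
    return to_load, bpf_map
```

Because `evaluate` depends only on its inputs, it can be rerun on every change and its output diffed against the current state, which is one simple way to favour stability and debuggability over optimality.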

Rapid eBPF development with NetEdit’s capability store

To simplify and expedite the development, testing, and deployment of BPF programs, NetEdit provides a library of modular and reusable capabilities.

Over five years, we developed more than eight capabilities that simplify debugging, policy consumption, and resource sharing.

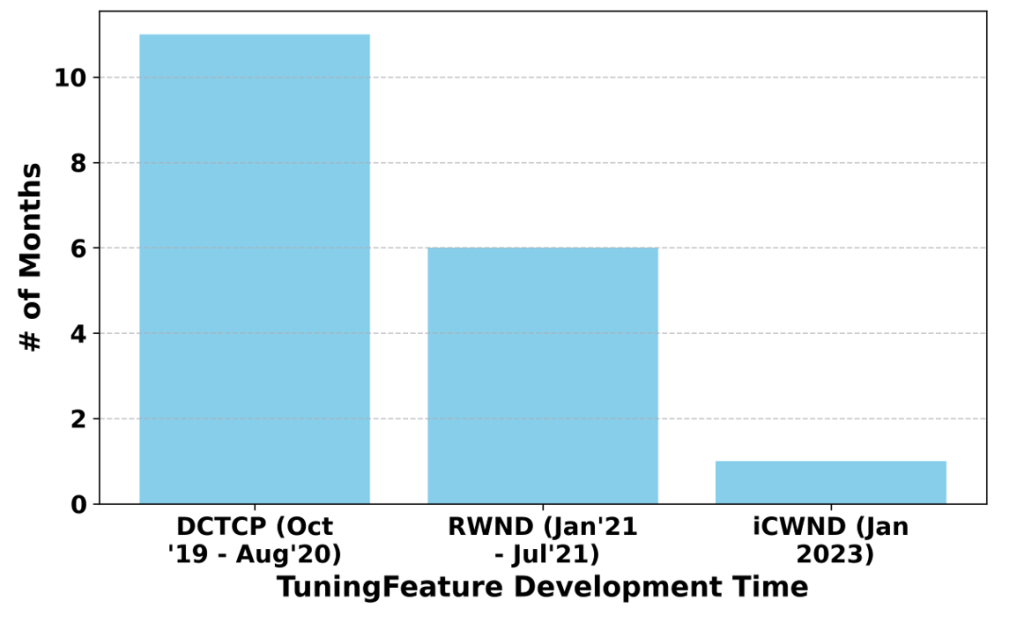

We compared development and deployment times for three tuningFeatures (Figure 1) and observed a drop from approximately six months to just a few weeks.

This improvement comes from:

- Rich yet simple abstractions and runtime frameworks that enable faster development of the core tuningFeature

- Reuse of utilities such as connection scope resolvers, which further reduces development time and lets developers focus on the core business logic

- Automated testing and rollout mechanisms that provide higher confidence and faster deployment
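As an example of a reusable utility, a connection scope resolver can be sketched as a longest-prefix match from a peer address to a region, so that a feature such as BPF-based DCTCP for intra-region connections only has to ask whether a peer is intra-region. The class, prefixes, and region labels below are hypothetical; in practice such a lookup would more likely live in a BPF map (for example, an LPM trie) populated by the control plane, and this Python version only shows the logic.

```python
import ipaddress
from typing import Optional


class ScopeResolver:
    """Hypothetical connection scope resolver using longest-prefix match."""

    def __init__(self, local_region: str, prefixes: dict[str, str]) -> None:
        self.local_region = local_region
        # Sort longest prefix first so the most specific match wins.
        self.table = sorted(
            ((ipaddress.ip_network(p), region) for p, region in prefixes.items()),
            key=lambda entry: entry[0].prefixlen,
            reverse=True,
        )

    def region_of(self, addr: str) -> Optional[str]:
        ip = ipaddress.ip_address(addr)
        for net, region in self.table:
            if ip.version == net.version and ip in net:
                return region
        return None

    def scope(self, remote: str) -> str:
        region = self.region_of(remote)
        if region is None:
            return "external"
        return "intra-region" if region == self.local_region else "cross-region"
```

A tuningFeature would then call something like `resolver.scope(peer) == "intra-region"` instead of embedding its own address-matching logic.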

Lessons learned and open research challenges

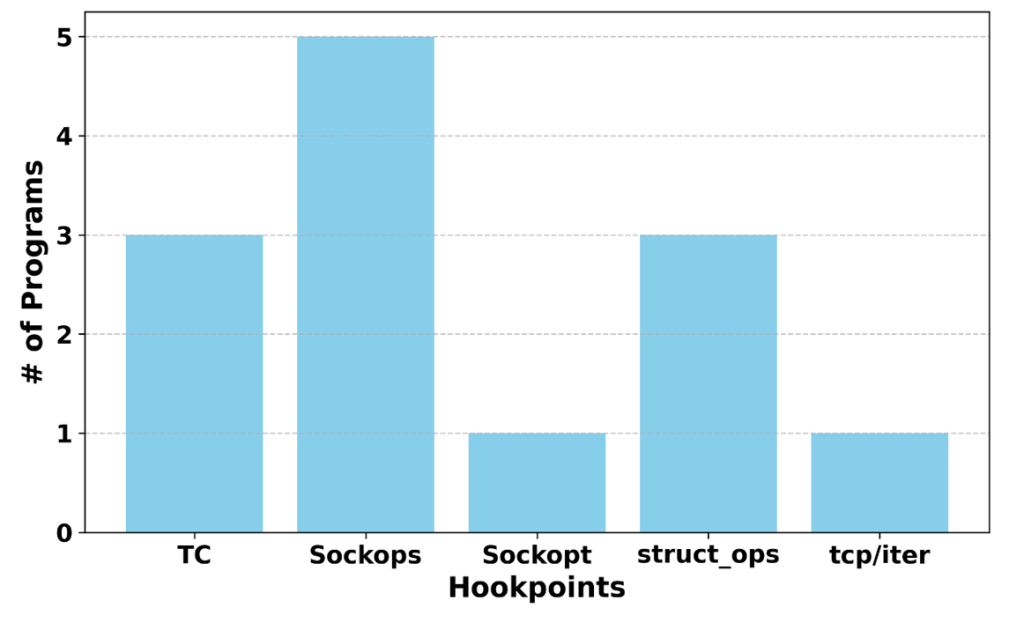

Over five years of developing and deploying more than 12 tuningFeatures, we faced several challenges and identified promising research opportunities. Figure 2 shows the number of programs running at each hookpoint (see the corresponding paper for details).

Hookpoint selection

As Figure 2 shows, tuningFeatures run at various hookpoints. Concerns for hookpoint selection include:

- Meeting correctness and functionality requirements

- Characterizing performance overhead via stress tests

- Understanding kernel functionality dependencies

At Meta, we’ve had to revisit and migrate programs across hookpoints due to CPU overhead or richer tuning needs.

Program composition

Running multiple tuningFeatures at the same hookpoint, which we call program composition, can lead to bugs caused by interactions between programs. We have observed several classes of such bugs: inconsistent behaviour, programs overriding each other’s actions (which creates further inconsistencies), and required eBPF programs failing to run. This highlights the need for composition-aware frameworks and operators that can reliably compose large features consisting of multiple eBPF programs.
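One simple form of composition awareness is a pre-deployment check for programs at the same hookpoint that write the same connection attribute, since that is exactly where one program can silently override another. The sketch assumes each program declares its hookpoint and the attributes it writes; that declaration format (`ProgramSpec`) and the example names are hypothetical, as eBPF programs carry no such metadata unless a framework requires it.

```python
from collections import defaultdict
from dataclasses import dataclass
from itertools import combinations


@dataclass(frozen=True)
class ProgramSpec:
    """Hypothetical metadata a composition-aware framework could require."""
    name: str
    hookpoint: str
    writes: frozenset  # connection attributes this program modifies


def find_conflicts(specs):
    """Return (hookpoint, prog_a, prog_b, shared_fields) for overlapping writers."""
    by_hook = defaultdict(list)
    for spec in specs:
        by_hook[spec.hookpoint].append(spec)
    conflicts = []
    for hook, progs in sorted(by_hook.items()):
        # Only programs sharing a hookpoint can override each other here.
        for a, b in combinations(progs, 2):
            shared = a.writes & b.writes
            if shared:
                conflicts.append((hook, a.name, b.name, sorted(shared)))
    return conflicts
```

A check like this catches only write-write overlaps; the other bug classes above (for example, a required program failing to run) would need ordering and liveness checks as well.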

eBPF testing frameworks

There is a need for rigorous testing frameworks, such as fuzzing-based ones, to expose hidden bugs in the eBPF-kernel interfaces. Unlike the scenarios targeted by prior work on kernel testing, our eBPF programs, because of their tight coupling with the kernel, either explored uncommon kernel paths or introduced new interactions that did not exist before. This highlights the need for more frameworks that analyse and test the eBPF interfaces.

Debug auto-instrumentation

Testing frameworks also need dynamic instrumentation tools that log automatically, capturing enough information to validate eBPF programs.
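In user space, the idea of automatic logging can be illustrated with a decorator that records every call to a handler, including arguments, result, or error, without the handler's author writing any logging code. This is only an analogy: instrumenting eBPF programs themselves would require rewriting them or attaching tracing programs, which this sketch does not attempt. `handle_established` and its CWND rule are hypothetical stand-ins for a tuningFeature callback.

```python
import functools
import logging

log = logging.getLogger("autoinstrument")
TRACE: list[dict] = []  # captured records, available for later validation


def auto_instrument(fn):
    """Record arguments, result, and any exception of every call to fn."""
    @functools.wraps(fn)
    def wrapper(*args, **kwargs):
        record = {"fn": fn.__name__, "args": args, "kwargs": kwargs}
        try:
            record["result"] = fn(*args, **kwargs)
            return record["result"]
        except Exception as exc:
            record["error"] = repr(exc)
            raise
        finally:
            TRACE.append(record)  # runs on both success and failure
            log.debug("%s", record)
    return wrapper


@auto_instrument
def handle_established(conn_id: int, rtt_us: int) -> int:
    """Hypothetical callback: pick an initial CWND from the measured RTT."""
    if rtt_us <= 0:
        raise ValueError("rtt must be positive")
    return 40 if rtt_us > 10_000 else 10
```

The captured `TRACE` records give a test harness enough context to check each decision after the fact, which is the kind of information the paragraph above argues validation needs.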

Conclusion and broader applicability

Finding the right balance in network tuning is particularly difficult at data centre scale, given the wide range of services and devices. By separating policy configuration from enforcement, NetEdit enables flexible tuning without being tightly coupled to services or assuming specific physical network setups.

While NetEdit’s main focus is network tuning, its design, interfaces, and underlying principles may also benefit other domains that use eBPF, such as storage and scheduling.

Theophilus A. Benson is a professor in the Electrical and Computer Engineering Department at Carnegie Mellon University and Carnegie Mellon University-Africa, specializing in models, algorithms, and frameworks to improve computer network performance and availability.

Prashanth Kannan, Prankur Gupta, Srikanth Sundaresan, Neil Spring, and Ying Zhang co-authored this work.

The views expressed by the authors of this blog are their own and do not necessarily reflect the views of APNIC. Please note a Code of Conduct applies to this blog.
