Computing and communications have made astounding improvements in capability, cost, and efficiency over the past eighty years. If the same efficiency improvements had been made in the automobile industry, cars would cost a couple of dollars, would cost fractions of a cent to use for trips, and would be capable of travelling at speeds probably approaching the speed of light!

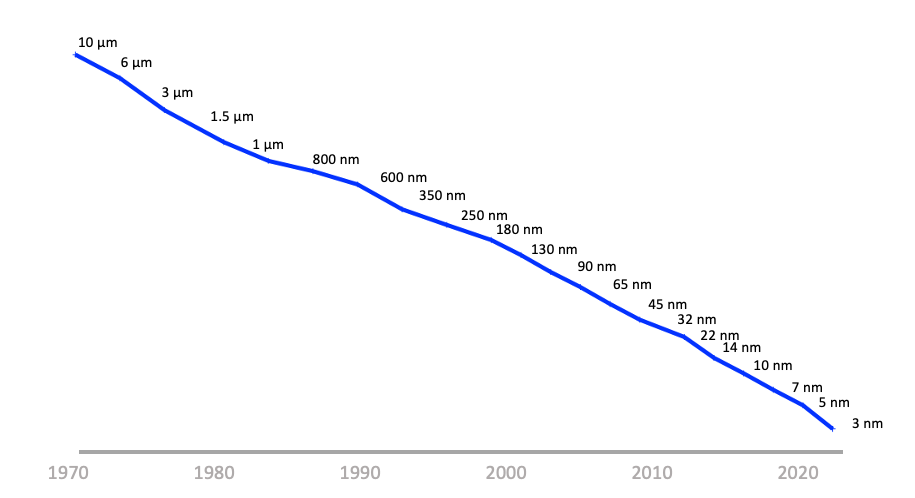

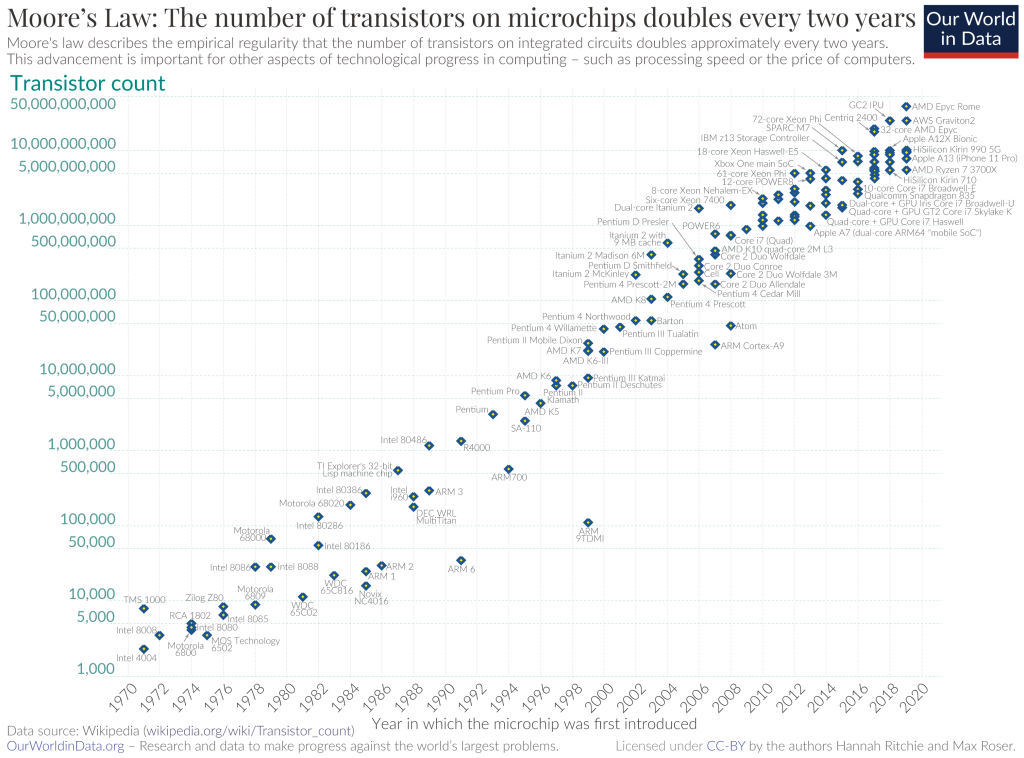

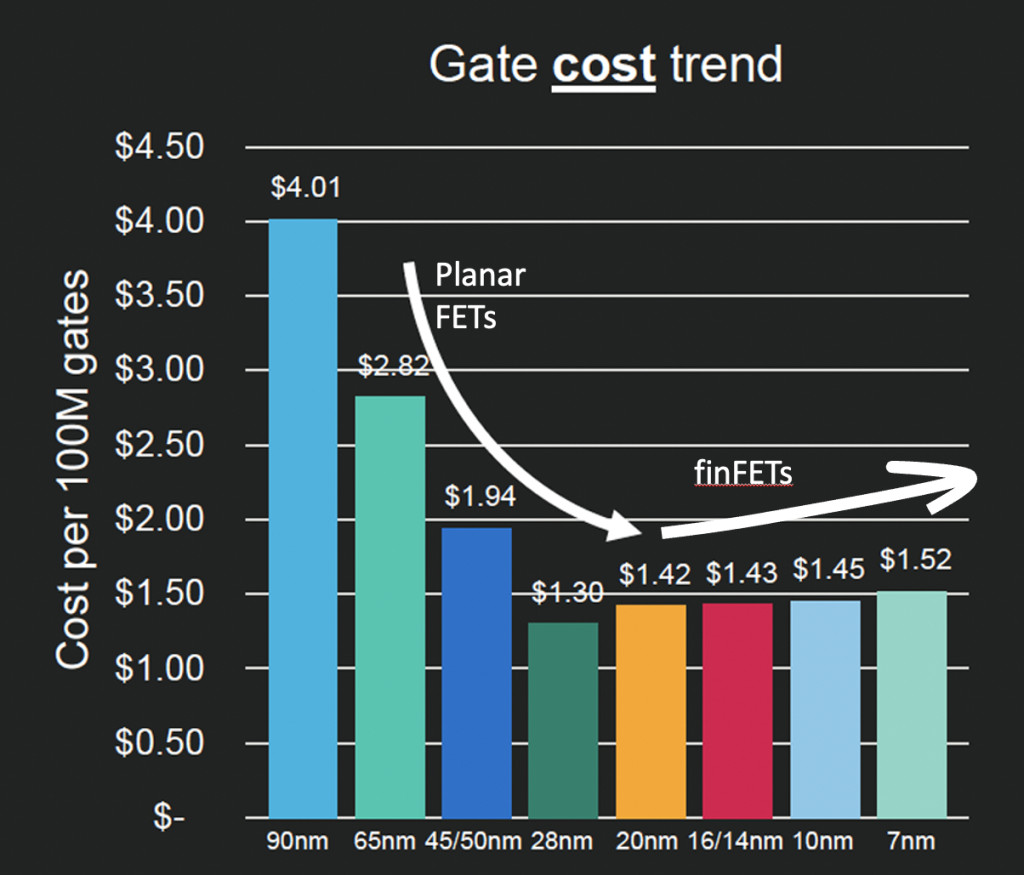

For most of this period we’ve been the beneficiaries of the bounty generated by Moore’s Law in the evolution of chip fabrication techniques: since the late 1950s, the transistor density of integrated circuits has been doubling every 18 months or so (Figures 1, 2). At the same time, the fabrication cost per transistor has fallen over much of this period (Figure 3). It’s only in recent years, as the chip industry has moved to feature sizes smaller than 7nm, that the per-gate cost has stabilized. Even so, the number of transistors per chip has continued to climb, and is rapidly approaching 1 trillion.

It has been about more than gate density. Over the past two decades, the mobile device market has forced chip designers to create processor chips that enable the device to run all day on a single battery charge. At the same time, they’ve had to ensure the chips are energy-efficient enough to prevent them from overheating. The aim was to increase the processing capability of the chip, while at the same time keeping the total energy consumption of the chip roughly constant!

How does all this relate to the Jevons Paradox?

William Stanley Jevons

William Stanley Jevons was one of the founders of neoclassical economics in the mid-nineteenth century. He witnessed the period of railway mania in the 1840s, which saw a huge speculative bubble fund the construction of track, engines, carriages, and the expansion of coal mining.

Steam locomotion made the transportation of goods and people cheaper and faster. One of the impacts of this was the rapid expansion of coal production in Britain, where pre-railway production volumes of 20M tonnes of coal per year rose to 120M tonnes per year by 1860 and were on a trajectory to further increase in the coming years.

In 1865, Jevons published The Coal Question, which speculated on the likelihood of exhaustion of British coal deposits as a result of the rise of coal-fired steam engines. One of the key observations in his book was that, paradoxically, the consumption of coal actually increased when technological progress improved the efficiency of steam engines.

We tend to believe that improvements in the efficiency with which a resource is used will naturally lead to a drop in the level of resource consumption, as the same level of activity would consume less of the resource. When a resource has a production limit, efficiency improvements should reduce total consumption enough to keep usage within sustainable production levels.

The Jevons Paradox offers a diametrically opposite view, where improvements in the efficiency of resource use act as a positive incentive to use more of the resource. Efficiency lowers costs, which lowers prices, which increases demand. Sometimes, this increase in demand is disproportionately large, with the result that overall consumption actually grows. The pursuit of increased efficiency does not inevitably lead to sustainability.

Jevons observed that ways to increase the efficiency of coal-fired steam engines would not necessarily suppress the demand for coal, but, paradoxically, may well stimulate even greater levels of demand for coal.

As it turned out, Britain did not in fact run out of coal reserves. Coal production peaked in 1913 at some 280M tonnes per year, and then declined over the ensuing decades to total production levels not seen since the 1700s.

The answer to this seeming paradox lies in a concept termed the price elasticity of demand. When more efficient coal engines lowered the cost of coal-powered transportation of people and goods, the total demand for transportation increased by far more than the gains to be made from increased efficiency. In more general terms, in a price-elastic scenario, greater efficiency lowers the unit cost of a good or service, which can in turn increase demand by enough to raise total consumption of the underlying resource.
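To make the elasticity argument concrete, here is a minimal sketch in Python. It rests on two simplifying assumptions that are mine rather than Jevons’: the whole efficiency gain is passed through as a lower price for the service, and demand follows a constant-elasticity curve. The function name `resource_use_ratio` and its parameters are purely illustrative.

```python
def resource_use_ratio(efficiency_gain: float, elasticity: float) -> float:
    """Ratio of new to old resource consumption after an efficiency gain.

    efficiency_gain: factor by which output per unit of resource improves
                     (2.0 means each tonne of coal does twice the work).
    elasticity:      magnitude of the price elasticity of demand for the
                     service (assumed constant, which is a simplification).
    """
    # Assumption: the cost saving is passed on in full, so the price of
    # the service falls in proportion to the efficiency gain.
    price_ratio = 1.0 / efficiency_gain
    # Constant-elasticity demand: quantity scales as price ** (-elasticity).
    demand_ratio = price_ratio ** (-elasticity)
    # Resource consumed = service demanded / efficiency.
    return demand_ratio / efficiency_gain


if __name__ == "__main__":
    for eps in (0.5, 1.0, 1.5):
        ratio = resource_use_ratio(2.0, eps)
        print(f"elasticity {eps}: resource use changes by a factor of {ratio:.2f}")
```

Under these assumptions, doubling efficiency cuts resource use to about 0.71 of its former level when the elasticity is 0.5, leaves it unchanged at an elasticity of 1.0, and raises it by a factor of about 1.41 at an elasticity of 1.5. The paradox is the last case: once demand is elastic enough, the efficiency gain is more than consumed by the extra demand it unlocks.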

Computers and communications

There is a similar story in the evolutionary path of computers and communications systems.

One of the earliest electronic computers, ENIAC, was constructed in 1945. The 30 tonnes of equipment housed 18,000 vacuum tubes and consumed 150kW of power. The inventions of the transistor in 1947, and the integrated circuit in 1959, did not result in a smaller, more efficient version of ENIAC. Instead, these advances facilitated the development of vastly more capable processing systems that operated at significantly greater levels of efficiency, yet in absolute terms consumed far more energy than the 150kW of ENIAC.

For comparison, Nvidia’s A100 chip has a rated power consumption of 400W, and the newer H100 consumes 700W. A large AI-capable data centre with one million of these GPUs would be looking at a power consumption of around 1.5GW. Yes, they are far more energy efficient per single operation than ENIAC, but this increased efficiency has led to a vastly increased number of operations per second and a far greater level of energy consumption.
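The arithmetic behind that comparison is simple enough to check. The sketch below uses only the figures quoted above. The "overhead" factor it derives is my own reading of the gap between the GPU-only total and the 1.5GW figure (presumably host servers, networking and cooling), not a number taken from any data centre specification.

```python
GPU_COUNT = 1_000_000          # GPUs in the hypothetical data centre
H100_WATTS = 700               # per-GPU power figure quoted above
ENIAC_WATTS = 150_000          # ENIAC's 150kW
QUOTED_SITE_WATTS = 1.5e9      # the 1.5GW whole-site figure quoted above

# Power drawn by the GPUs alone, before anything else on the site.
gpu_only_watts = GPU_COUNT * H100_WATTS

# Implied multiplier between GPU-only power and the quoted site power.
# Assumption: the gap is non-GPU load such as hosts, network and cooling.
implied_overhead = QUOTED_SITE_WATTS / gpu_only_watts

# How many ENIACs' worth of power the quoted site figure represents.
eniac_equivalents = QUOTED_SITE_WATTS / ENIAC_WATTS

print(f"GPUs alone: {gpu_only_watts / 1e6:.0f} MW")
print(f"Implied site overhead factor: {implied_overhead:.2f}")
print(f"Equivalent number of ENIACs: {eniac_equivalents:,.0f}")
```

The GPUs on their own account for 700MW, so the 1.5GW figure implies a little over twice that once everything else is included. In absolute terms, that is the power draw of some 10,000 ENIACs.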

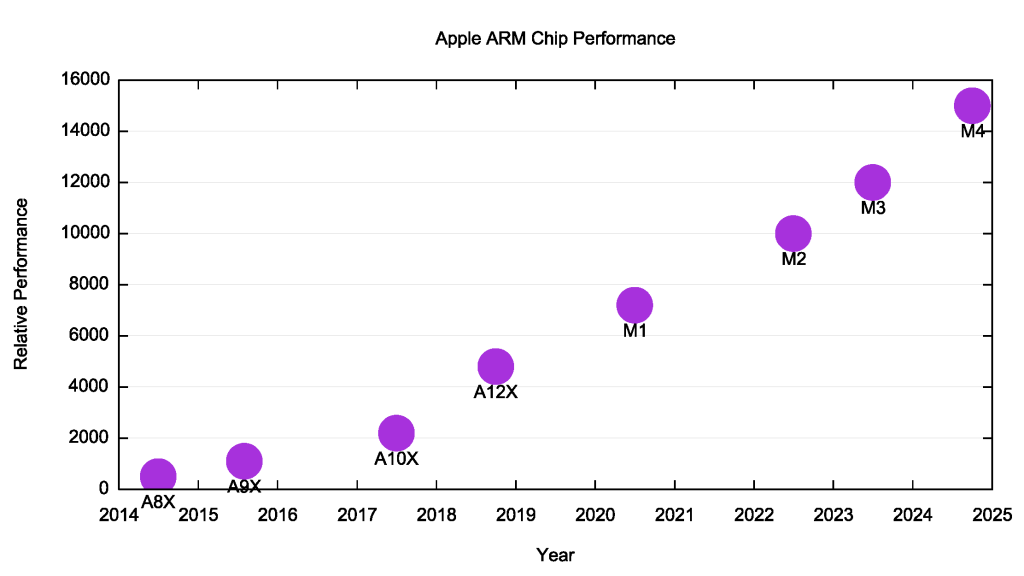

A similar picture can be seen in the evolution of Apple’s iPhone. The first iPhone, released in 2007, featured a Samsung 32-bit ARM microprocessor with a 412MHz clock, 128MB memory and a 3.7V 1,400mAh lithium-ion battery. Seventeen years later, the iPhone 16 uses a pair of 64-bit ARM processors (using 3nm fabrication processes) with a 4GHz clock, 8GB of memory and a 3.89V 4,685mAh lithium-ion battery. That’s approximately three times the battery capacity for well over 100 times the performance.
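Since the two batteries run at slightly different voltages, the fairer comparison is stored energy rather than charge. A quick sketch, using only the battery figures quoted above and treating the stated voltage as constant over the discharge cycle (a simplification), might look like this. The helper name `watt_hours` is illustrative.

```python
def watt_hours(volts: float, milliamp_hours: float) -> float:
    """Stored energy in watt-hours, assuming a constant nominal voltage."""
    return volts * milliamp_hours / 1000.0


first_iphone_wh = watt_hours(3.7, 1400)    # 2007 model
iphone_16_wh = watt_hours(3.89, 4685)      # figures quoted for the iPhone 16

charge_ratio = 4685 / 1400
energy_ratio = iphone_16_wh / first_iphone_wh

print(f"First iPhone: {first_iphone_wh:.2f} Wh")
print(f"iPhone 16:    {iphone_16_wh:.2f} Wh")
print(f"Charge ratio: {charge_ratio:.2f}, energy ratio: {energy_ratio:.2f}")
```

Measured as charge the newer battery is about 3.3 times larger, and measured as energy about 3.5 times larger. Either way, the energy budget has grown by a small single-digit factor while the processing capability has grown by two orders of magnitude.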

These ARM processors have been used across a range of Apple devices, and the relative performance of these processors is shown in Figure 4. All these improvements in the hardware of the device, including processing power, battery technology and energy efficiency, have not resulted in a cheaper device with roughly constant performance parameters, but in significantly greater levels of capability that are intended to render superseded models obsolete and stimulate demand for the current product.

On a more general level, the efforts of the silicon chip designers and foundries to produce better chips, with progressively increased performance and greater energy efficiencies, have not reduced the size and energy requirements of devices. Instead, they have increased device capabilities and stimulated higher levels of overall demand. The same appears to be the case at the macro level when we look at the requirements of data centres, in terms of their processing capability, energy requirements, cooling capacity and footprint.

This is also evident in the application space. An example lies in the adoption of blockchain-based digital artifacts, such as Bitcoin and its derivatives, Non-Fungible Tokens (NFTs) and blockchain-based name systems. The increase in power and efficiency of computing platforms makes these services viable, but the ensuing service uptake may well increase total levels of resource consumption well beyond any potential gains that could be made through increased efficiency.

It has been estimated that Bitcoin mining in Iceland uses around 120MW of power, or around 85% of the 140MW of power used by Icelandic data centres in 2022. The abundance of renewable energy and cheap power has had both data centres and Bitcoin mining operations flocking to Iceland in recent years to set up shop.

Iceland’s cool climate is another benefit, as data centres produce a lot of heat that would require additional energy to cool if located in a warmer climate. Both politicians and environmental activists have questioned the benefit of Bitcoin and digital currency mining operations for the Icelandic economy as well as their impact on the environment. The article that reported this estimate also carried the comment that: “This is a waste of energy that should not be happening in a society like the one we live in today.”

The Jevons Paradox and centrality

The Jevons Paradox is counterintuitive because, other things being equal, we would normally expect higher efficiency to cause lower resource use. The Jevons Paradox occurs, however, precisely because other things are not equal. What is different is the demand. Although Jevons himself could not have known about the concept of price elasticity of demand, an economic concept whose study still lay decades in the future at the time he raised the issue, he did anticipate the essence of that idea. He also recognized that as energy became cheaper, the total rate of economic growth across society would increase as well, because energy is an input to virtually all other goods and services.

In a narrow technical sense, the Jevons Paradox only happens if a technology makes an existing process more efficient, and only if demand is highly price-sensitive. But new technology often obviates the old way of doing things entirely. The Jevons Paradox might be taken to mean that new technologies always create new problems of their own. For example, combustion engine vehicles might have solved the environmental problem of copious quantities of horse manure in metropolitan environments, but they created their own problems of air pollution and greenhouse gas emissions. (Never mind the fact that we would need more than 10 trillion horses to move freight and passengers as many miles as we now do with vehicles!)

But can we explain today’s headlong rush into AI using the Jevons Paradox alone? Is it the outcome of technological evolution crossing a threshold of price and capability that has unleashed formerly pent-up user demand? Did we always want AI-based tools in our IT environment, but could not afford to access such tools until now? Or are other pressures at play?

The disturbing characteristic of today’s digital environment is the dominant position of a small clique of digital behemoths whose collective agenda appears to be based on the ruthless exploitation of everyone else in the singular pursuit of unprecedented quantities of capital and social power.

The continual pressures of technical evolution through the application of Moore’s Law to chip fabrication have a lot to do with this. The underlying platform of every digital service will likely be twice as powerful, and, paradoxically, half the price of the current platform in as little as two years. This would conventionally force providers to constantly upgrade their platforms to stay ahead of their potential competition.

There is another path, however, that is based on complete market domination. Such a position allows the dominant incumbent to shape the market according to their own desires, and push products and services to consumers at price points that are inaccessible to any competitors. This ‘winner take all’ form of supply-side push in markets is one that reinforces the position of dominant incumbents.

Are the large digital service entities engaged in a huge investment program to build AI data centres simply responding to unprecedented levels of demand for AI-generated content and services? Or are they motivated by a desire to occupy this space before any potential competitor can amass sufficient volumes of capital and momentum to effectively challenge the position of the incumbents?

I strongly suspect we are in the latter situation. The Jevons Paradox appears to apply in demand-pull markets, which are price elastic. Marginal efficiencies gained in the production of the good or service are reflected in unit price drops, which unleash a disproportionate level of further demand. What we appear to have in today’s highly centralized digital space is supply-push dynamics, where the current incumbents push novel goods and services onto the market at a price point that bars any new market entrant from competing.

These supply-side push markets work not only to pre-empt future demand by anticipating it far in advance of any potential competitor, but also to shape the nature of that demand, so that consumers are conditioned to desire the service being offered. This circularity works to further reinforce the position of the incumbent providers.

It’s challenging to see a way through the current situation. Regulatory efforts to disrupt the operation of these large providers are often either ineffectual or risk voter displeasure, while a lenient stance on the part of public regulatory agencies achieves little other than ensuring that the current incumbents maintain their entrenched position for many decades to come.

But this is not a stable situation. Physics has a lot to say when the feature size of elements in a silicon wafer gets down to around 1nm. Further reductions in the size of what is essentially a planar process can’t be achieved due to physical behaviours such as electron tunnelling. Our current efforts to address this have been to introduce three-dimensional structures onto the chip, such as FinFETs, to explore other materials instead of silicon as the chip substrate, including Gallium Nitride, and to pursue the somewhat hazy prospect of quantum computing. However, further radical improvements in the size, complexity and energy efficiency of computer chips are by no means assured. If we cannot keep on doubling down every two years or so in the production of processing chips, then the technological innovation pressures on the industry will inevitably weaken.

It’s a bit like the childhood game of musical chairs, where the aim is to claim your seat when the music stops. The music of technical innovation in chip fabrication is still playing, but it’s clear that its tempo is slowing down. What happens after the music stops remains anyone’s guess, but the conventional thinking is that at such a time, you really should’ve claimed your seat!

The views expressed by the authors of this blog are their own and do not necessarily reflect the views of APNIC. Please note a Code of Conduct applies to this blog.