I wrote an article in May 2022, asking ‘Are we there yet?’ about the transition to IPv6. At the time, I concluded the article on an optimistic note, observing that we may not be ending the transition just yet, but we are closing in. I thought at the time that we wouldn’t reach the end of this transition to IPv6 with a bang but with a whimper. A couple of years later, I’d like to revise these conclusions with some different thoughts about where we are heading and why.

The state of the transition to IPv6 within the public Internet continues to confound us. RFC 2460, the first complete specification of the IPv6 protocol, was published in December 1998, over 25 years ago. The entire point of IPv6 was to specify a successor protocol to IPv4 due to the prospect of depleting the IPv4 address pool. We depleted the pool of available IPv4 addresses more than a decade ago, yet the Internet is largely sustained through the use of IPv4. The transition to IPv6 has been underway for 25 years, and while the exhaustion of IPv4 addresses should have created a sense of urgency, we’ve been living with it for so long that we’ve become desensitized to the issue. It’s probably time to ask the question again: How much longer is this transition to IPv6 going to take?

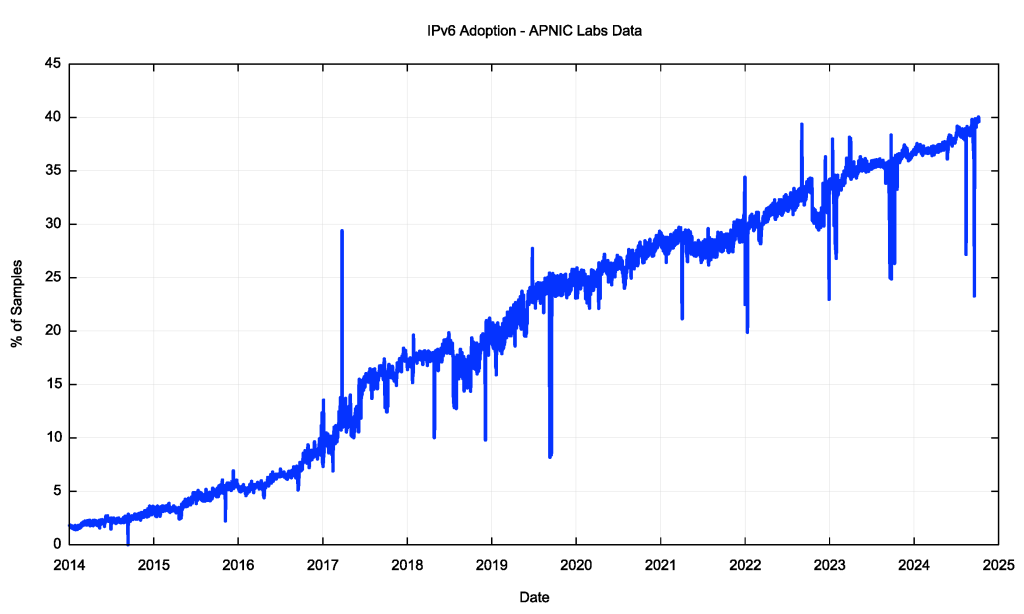

At APNIC Labs, we’ve been measuring the uptake of IPv6 for more than a decade now. We use a measurement approach that looks at the network from the perspective of the Internet’s user base. What we measure is the proportion of users who can reach a published service when the only means to do so is by using IPv6. The data is gathered using a measurement script embedded in an online ad, and the ad placements are configured to sample a diverse collection of end users on an ongoing basis. The IPv6 adoption report, showing our measurements of IPv6 adoption across the Internet’s user base from 2014 to the present, is shown in Figure 1.

On the one hand, Figure 1 is one of those classic ‘up and to the right’ Internet curves that show continual growth in the adoption of IPv6. The problem is in the values in the Y-axis scale. The issue here is that in 2024 we are only at a level where slightly more than one-third of the Internet’s user base can access an IPv6-only service. Everyone else is still on an IPv4-only Internet.

This seems to be a completely anomalous situation. It’s been over a decade since the supply of ‘new’ IPv4 addresses was exhausted, and the Internet has not only been running on empty but has also been tasked with spanning an ever-increasing collection of connected devices without collapsing. In late 2024 it’s variously estimated that some 20B devices use the Internet, yet the Internet’s IPv4 routing table only encompasses some 3.03B unique IPv4 addresses.

The original ‘end-to-end’ architecture of the Internet assumed that every device was uniquely addressed with its own IP address, yet the Internet is now sharing each individual IPv4 address across an average of seven devices, and apparently, it all seems to be working! If end-to-end was the sustaining principle of the Internet architecture, then as far as the users of IPv4-based access and services are concerned, it’s all over!
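The ‘seven devices’ figure is simply the ratio of the two estimates quoted above. As a quick check, using only those two numbers (both are rough estimates, so the result is indicative at best):

```python
# Both inputs are the estimates quoted in the text, not measurements made here.
connected_devices = 20e9         # "some 20B devices use the Internet"
routed_ipv4_addresses = 3.03e9   # unique IPv4 addresses in the routing table

devices_per_address = connected_devices / routed_ipv4_addresses
print(f"about {devices_per_address:.1f} devices per routed IPv4 address")
```

The ratio comes out between six and seven, which rounds to the seven devices per address mentioned above.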

IPv6 was meant to address these issues, and the 128-bit wide address fields in the protocol have sufficient address space to allow every connected device to use its own unique address. The design of IPv6 was intentionally very conservative. At a basic level, IPv6 is simply ‘IPv4 with bigger addresses’. There are also some changes to fragmentation controls, changes to the address resolution protocols (ARP vs Neighbor Discovery), and changes to the IP Options fields, but the upper-level transport protocols are unchanged. IPv6 was intended to be a largely invisible change to a single level in the protocol stack, and definitely not intended to be a massive shift to an entirely novel networking paradigm.

In the sense of representing a very modest incremental change to IPv4, the IPv6 design achieved its objective, but in so doing it necessarily provided little in the way of any marginal improvement in protocol use and performance. IPv6 was no faster, no more versatile, no more secure than IPv4. The major benefit of IPv6 was to mitigate the future risk of IPv4 pool depletion.

In most markets, including the Internet, future risks are often heavily discounted. The result is that the level of motivation to undertake this transition is highly variable given that the expenditure to deploy this second protocol does not realize tangible benefits in terms of lower cost, greater revenue or greater market share. In a networking context, where market-based coordination of individual actions is essential, this level of diversity of views on the value of running a dual-stack network leads to reluctance on the part of individual actors and sluggish progress of the common outcome of the transition. As a result, there is no common sense of urgency.

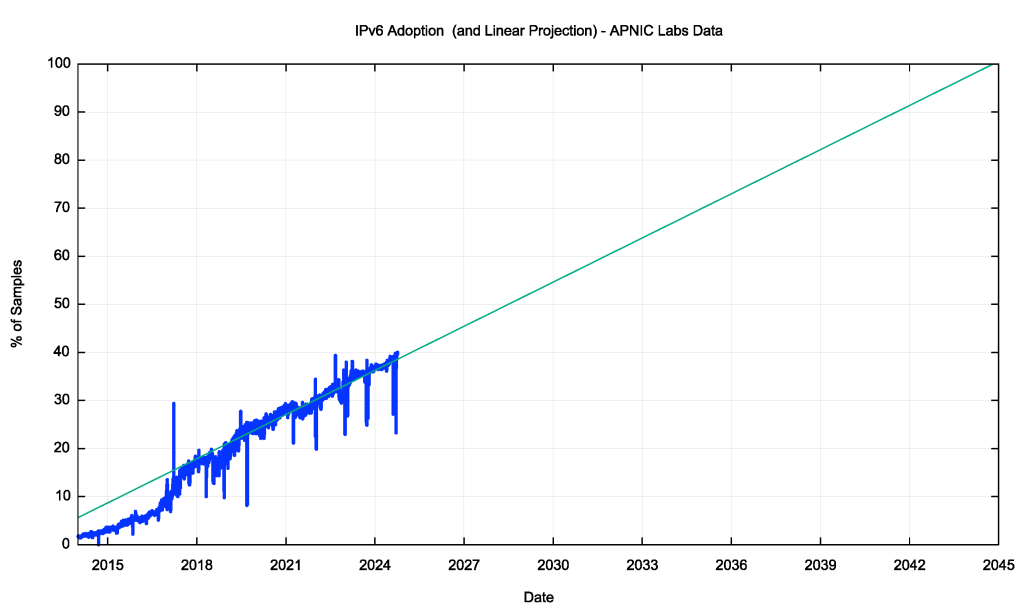

To illustrate this, we can look at the time series shown in Figure 1 and ask the question: ‘If the growth trend of IPv6 adoption continues at its current rate, how long will it take for every device to be IPv6 capable?’ This is the same as looking at a linear trend line placed over the data series used in Figure 1, looking for the date when this trend line reaches 100%. Using a least-squares best fit for this data set from January 2020 to the present day, and using a linear trend line, we can come up with Figure 2.

This exercise predicts that we’ll see completion of this transition in late 2045, or some 20 years into the future. It must be noted that there is no deep modelling of the actions of various service providers, consumers, and network entities behind this prediction. The only assumption that drives this prediction is that the forces that shaped the immediate recent past are unaltered when looking into the future. In other words, this exercise simply assumes that ‘tomorrow is going to be a lot like today.’
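The extrapolation described above can be sketched in a few lines. This is a minimal illustration of a least-squares linear fit projected forward to 100%, not the code used to produce Figure 2. The `samples` values are hypothetical placeholders invented for the example, not APNIC Labs measurements, and the function names are likewise assumptions.

```python
def linear_fit(xs, ys):
    """Ordinary least-squares fit of y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


def year_reaching(target_pct, slope, intercept):
    """Year at which the fitted trend line crosses target_pct."""
    return (target_pct - intercept) / slope


# HYPOTHETICAL (year, percent of users with IPv6) samples, for illustration only.
samples = [(2020.0, 25.0), (2021.0, 28.0), (2022.0, 31.0),
           (2023.0, 34.0), (2024.0, 37.0)]

years = [year for year, _ in samples]
adoption = [pct for _, pct in samples]
slope, intercept = linear_fit(years, adoption)
completion_year = year_reaching(100.0, slope, intercept)
print(f"trend: {slope:.2f} points per year, reaches 100% around {completion_year:.0f}")
```

The sketch bakes in the same assumption as the text: the slope observed over the fitting window is held constant indefinitely. Any change in that slope moves the projected date substantially.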

The projected date in Figure 2 is less of a concern than the observation that this model predicts a continuation of this transition for a further two decades. If the goal of IPv6 was to restore a unified address system for all Internet-connected devices, but this model of unique addressing is delayed for 30 years (from around 2015 to 2045), it raises questions about the relevance and value of such a framework in the first place! If we can operate a fully functional Internet without such a coherent end-device address architecture for three decades, then why would we feel the need to restore address coherence at some point in the future? What’s the point of IPv6 if it’s not address coherence?

Something has gone very wrong with this IPv6 transition, and that’s what I’d like to examine in this article.

A little bit of history

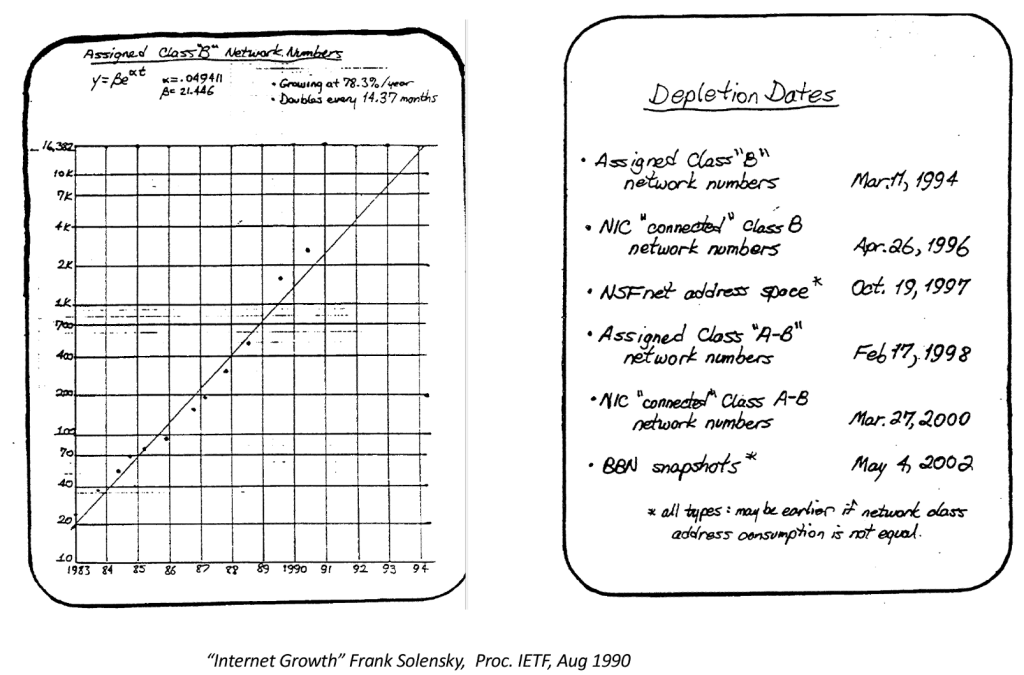

By 1990 it was clear that IP had a problem. It was still a tiny Internet at the time, but the growth patterns were exponential, doubling in size every 12 months. We were stressing out the pool of Class B IPv4 addresses and in the absence of any corrective measures this address pool would be fully depleted in 1994 (Figure 3).

We were also placing pressure on the routing system at the time. The deployed routers in 1992 only had enough memory to support a further 12 to 18 months of routing growth. The combination of these routing and addressing pressures was collectively addressed in the IETF at the time under the umbrella of the ROAD effort (RFC 1380).

There was a collection of short-, medium- and longer-term responses that were adopted in the IETF to address the problem. In the short term, the IETF dispensed with the class-based IPv4 address plan and instead adopted a variably sized address prefix model. Routing protocols, including BGP, were quickly modified to support these classless address prefixes. Variably sized address prefixes added additional burdens to the address allocation process, and in the medium term, the Internet community adopted the organizational measure of the Regional Internet Registry (RIR) structure to allow each region to resource the increasingly detailed operation of address allocation and registry functions for their region.
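To see why variably sized prefixes matter, consider a site that needs about 1,000 addresses. Under the class-based plan the choice was a Class C (256 addresses, too small) or a Class B (65,536 addresses, mostly wasted). The sketch below uses Python’s standard `ipaddress` module. The helper name `smallest_prefix_length` and the `10.1.0.0` base address are assumptions chosen for illustration.

```python
import ipaddress


def smallest_prefix_length(addresses_needed: int) -> int:
    """Longest IPv4 prefix length whose block holds at least addresses_needed."""
    host_bits = max(addresses_needed - 1, 0).bit_length()
    return 32 - host_bits


needed = 1000
class_b = ipaddress.ip_network("10.1.0.0/16")  # what a class-based plan would assign
classless = ipaddress.ip_network(f"10.1.0.0/{smallest_prefix_length(needed)}")

print(f"class-based: {class_b} holds {class_b.num_addresses} addresses")
print(f"classless:   {classless} holds {classless.num_addresses} addresses")
```

The classless block is sized to the requirement rather than to the nearest class boundary, which is where the gain in address utilization efficiency comes from.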

These measures increased the specificity of address allocations and aligned each allocation more exactly with the amount of address space that was actually needed, which permitted a more diligent application of relatively conservative address allocation practices. These measures realized a significant increase in address utilization efficiency. The concept of ‘address sharing’ using Network Address Translators (NATs) also gained some traction in the ISP world. Not only did this dramatically simplify the address administration processes in ISPs, but NATs also played a major role in reducing the pressures on overall address consumption.

The adoption of these measures across the early 1990s pushed a two-year imminent crisis into a more manageable decade-long scenario of depletion. However, they were not considered to be a stable long-term response. It was thought at the time that an effective long-term response really needed to extend the 32-bit address field used in IPv4. The transition from mainframe to laptop was well underway in the computing world, and the prospect of further reductions in size and expansion of deployment in smaller embedded devices was already clear. An address space of 4B was just not large enough for what was likely to occur in the coming years in the computing world.

But in looking at a new network protocol with a vastly increased address space, there was no way that any such change would be backward compatible with the installed base of IPv4 systems. As a result, there were a few divergent schools of thought as to what to do. One approach was to jump streams and switch over to use the Connectionless Transport profile of the Open Systems Interconnection (OSI) protocol suite and adopt OSI Network Service Access Point (NSAP) addresses along the way. Another was to change as little as possible in IP except the size of the address fields. And several ideas were being thrown about in proposing significant changes to the IP model.

By 1994 the IETF had managed to settle on the minimal change approach, which was IPv6. The address field was expanded to 128 bits, a Flow ID field was introduced, fragmentation behaviour was altered and pushed into an optional header and ARP was replaced with multicast.

The bottom line was that IPv6 did not offer any new functionality that was not already present in IPv4. It did not introduce any significant changes to the operation of IP. It was just IP, with larger addresses.

Transition

While the design of IPv6 consumed a lot of attention at the time, the concept of transition of the network from IPv4 to IPv6 did not.

Given the runaway adoption of IPv4, there was a naive expectation that IPv6 would similarly just take off, and there was no need to give the transition much thought. In the first phase, we would expect to see applications, hosts and networks adding support for IPv6 in addition to IPv4, transforming the Internet into a dual-stack environment. In the second phase, we could then phase out support for IPv4.

There were several problems with this plan. Perhaps the most serious of these was a resource allocation problem.

The Internet was growing extremely quickly, and most of our effort was devoted to keeping pace with demand. More users, more capacity, larger servers, more content and services, more responsive services, more security, better defence. All of these shared a common theme: scale. We could either concentrate our resources on meeting the incessant demands of scaling, or we could work on IPv6 deployment. The short and medium-term measures that we had already taken had addressed the immediacy of the problems of address pool depletion, so scaling was a higher priority for the industry than the IPv6 transition. Through the decade from 1995 to 2005, the case for IPv6 quietly slumbered in terms of mainstream industry attention. IPv4 addresses were still available, and the use of classless addressing (CIDR) and far more conservative address allocation practices had pushed the prospect of IPv4 depletion out by more than a couple of decades. Many more pressing operational and policy issues for the Internet absorbed the industry’s collective attention of the day.

However, this was merely a brief period of respite. The scaling problem accelerated by a whole new order of magnitude in the mid-2000s with the introduction of the iPhone and its brethren. Suddenly, this was not just a scale problem of the order of tens or even hundreds of millions of households and enterprises, but it transformed into a scale problem of billions of individuals and their personal devices and added mobility into the mix. As a taste of a near-term future, the production scale of these ‘smart’ devices quickly ramped up into annual volumes of hundreds of millions of units.

The entire reason why IPv6 was a necessity was coming to fruition. But at this stage, we were just not ready to deploy IPv6 in response. Instead, we rapidly increased our consumption of the remaining pools of IPv4 addresses and we supported the first wave of large-scale mobile services with IPv4. Dual-stack was not even an option in the mobile world at the time. The rather bizarre economics of financing 3G infrastructure meant that dual-stack infrastructure in a 3G platform was impractical, so IPv4 was used to support the first wave of mobile services. This quickly turned to IPv4 and NATs as the uptake of mobile services gathered momentum.

At the same time, the decentralized nature of the Internet was hampering IPv6 transition efforts. What point was there in developing application support for IPv6 services if no host had integrated IPv6 into its network stack? What point was there in adding IPv6 to a host networking stack if no ISP was providing IPv6 support? And what point was there in an ISP deploying IPv6 if no hosts and no applications would make use of it? In terms of IPv6 at this time, nothing happened.

The first effort to break this impasse of mutual dependence came from the operating system folk, and fully functional IPv6 stacks were added to the various flavours of Linux, Windows, and Mac OS, as well as in the mobile host stacks of iOS and Android.

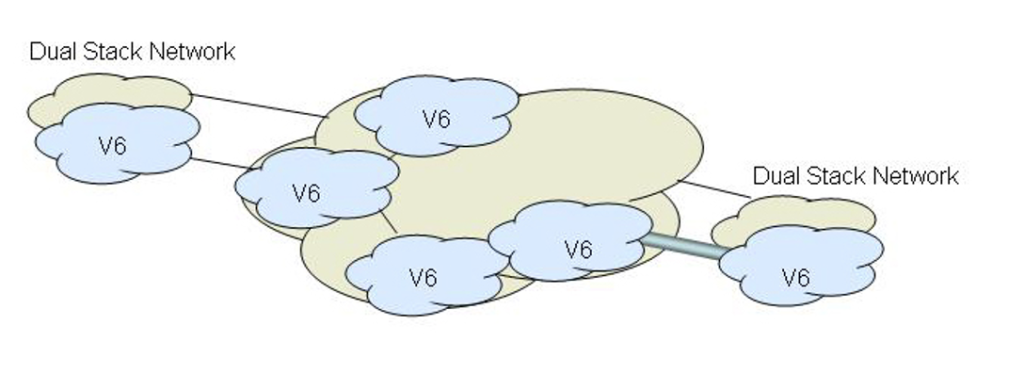

But even this was not enough to allow a transition to achieve critical momentum. It could be argued that this made the IPv6 situation worse and set back the transition by some years. The problem was that with IPv6-enabled hosts there was some desire to use IPv6. However, these hosts were isolated ‘islands’ of IPv6 sitting in an ocean of IPv4. The concentration of the transition effort then fixated on various tunnelling methods to tunnel IPv6 packets through the IPv4 networks (Figure 4). While this can be performed manually when you have control over both tunnel endpoints, this approach was not that useful. What we wanted was an automated tunnelling mechanism that took care of all these details.

The first such approach that gathered some momentum was 6to4. The initial problem with 6to4 was that it required public IPv4 addresses, so it could not provide services to IPv6 hosts that were behind a NAT. The more critical problem was that firewalls had no idea how to handle these 6to4 packets, and the default action when in doubt was to deny access. So 6to4 connections encountered an average failure rate of 20 to 30% on the public Internet, which made it all but unusable as a mainstream service. NAT traversal was also a problem, so a second auto-tunnel mechanism was devised that performed NAT sensing and traversal. This mechanism, Teredo, was even worse in terms of failure rates, and some 40% of Teredo connection attempts were observed to fail.
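The public-address requirement of 6to4 follows from how its addresses are built: the site’s IPv6 prefix is the well-known `2002::/16` prefix followed by the 32 bits of the site’s IPv4 address, so a remote relay can recover the tunnel endpoint directly from the IPv6 address. The sketch below shows that derivation using Python’s `ipaddress` module. The function name `sixtofour_prefix` and the RFC 1918 check are illustrative assumptions, and the addresses are documentation examples.

```python
import ipaddress

# Private (RFC 1918) space is not reachable from outside, so it cannot anchor a 6to4 tunnel.
RFC1918 = [ipaddress.ip_network(n)
           for n in ("10.0.0.0/8", "172.16.0.0/12", "192.168.0.0/16")]


def sixtofour_prefix(public_ipv4: str) -> ipaddress.IPv6Network:
    """Return the 6to4 /48 prefix derived from a public IPv4 address."""
    v4 = ipaddress.IPv4Address(public_ipv4)
    if any(v4 in net for net in RFC1918):
        raise ValueError(f"{v4} is a private address; 6to4 needs a public one")
    # 16 bits of 2002, then the 32-bit IPv4 address, then 80 bits of zero.
    value = (0x2002 << 112) | (int(v4) << 80)
    return ipaddress.IPv6Network((ipaddress.IPv6Address(value), 48))


prefix = sixtofour_prefix("192.0.2.1")
print(prefix)

# The standard library can recover the embedded IPv4 address from a 6to4 address.
print(ipaddress.IPv6Address("2002:c000:201::1").sixtofour)
```

A host behind a NAT only knows its private address, so the prefix it would derive points at an endpoint that nobody outside can reach.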

Not only were these Phase 1 IPv6 transition tools extremely poor performers, as they were so unreliable, but even when they worked the connection was both fragile and slower than IPv4. The result was perhaps predictable, even if unfair. It was not just the transition mechanisms that were viewed with disfavour, but IPv6 itself also attracted some criticism.

As a result, IPv6 was largely ignored in the mainstream of the public Internet up until around 2011. A small number of service providers tried to deploy IPv6, but in each case, they found themselves with a unique set of challenges that they and their vendors had to solve. Without a rich set of content and services on IPv6, the value of the entire exercise was highly dubious! So, nothing much happened.

Movement at last!

It wasn’t until the central IPv4 address pool managed by IANA was depleted at the start of 2011, and the first RIR, APNIC, ran down its general allocation pool in April of that year, that the ISP industry started to pay some more focused attention to this IPv6 transition.

At around the same time the mobile industry commenced its transition into 4G services. The essential difference between 3G and 4G was the removal of the Point-to-Point Protocol (PPP) tunnel through the radio access network from the gateway to the device and its replacement by an IP environment. This allowed a 4G mobile operator to support a dual-stack environment without an additional cost component, and this was a major enabler for IPv6. Mapping IPv4 into IPv6 (or the reverse) is fragile and inefficient for service providers compared to native dual-stack. In the six years from 2012 to the start of 2018, the level of IPv6 deployment rose from 0.5% to 17.4%. At this stage, IPv6 was no longer predominantly tunnelled, as many networks supported IPv6 in a native mode (Figure 5).

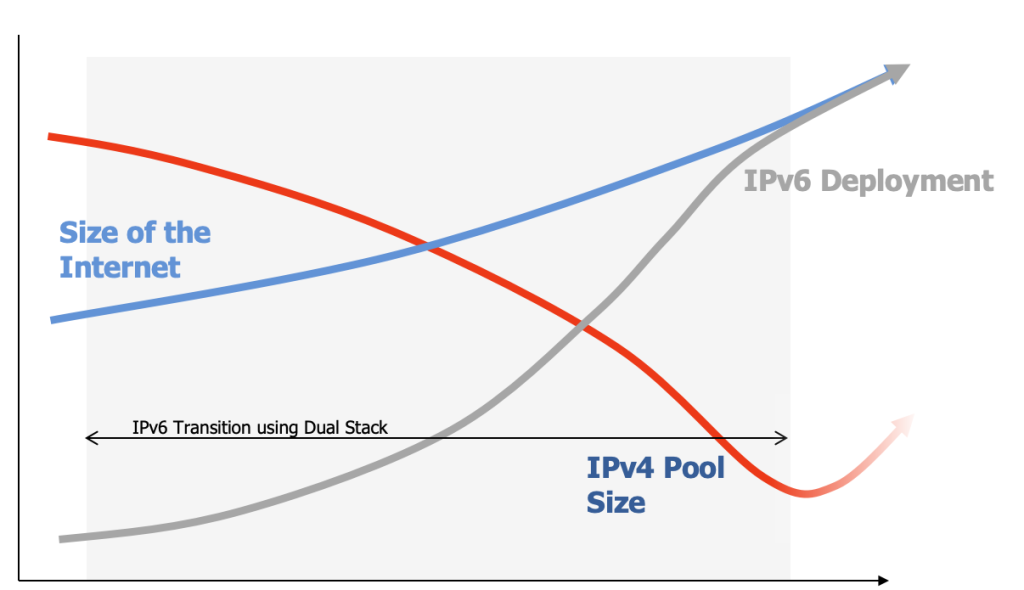

The issue was that this phase of the transition started too late. The intention of this transition was to complete the work and equip every network and host with IPv6 before we ran out of IPv4 addresses (Figure 6).

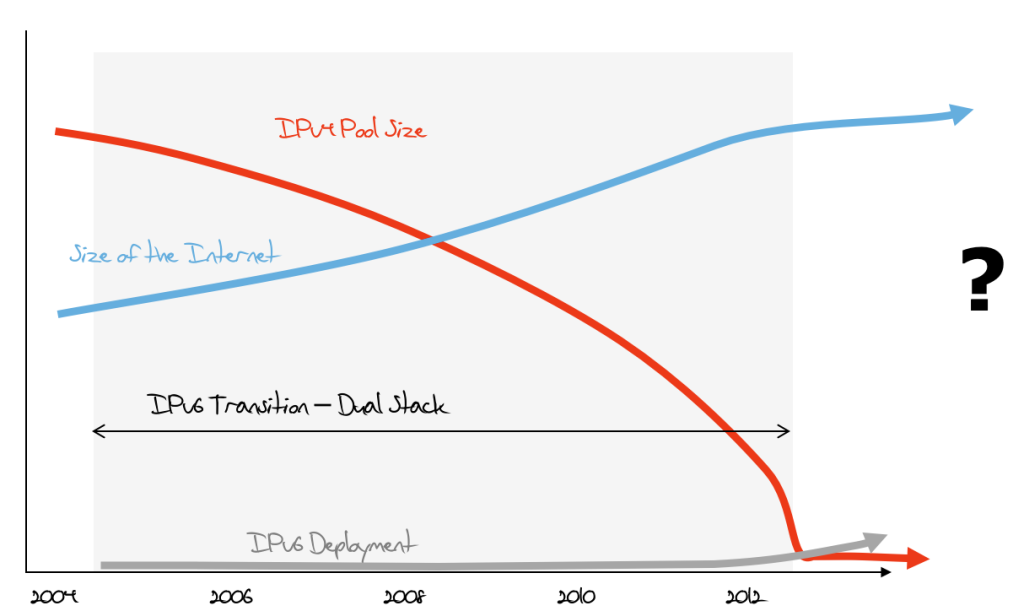

By 2012 we were in a far more challenging position. The pools of available IPv4 address space were rapidly depleting and the regional address policy communities were introducing highly conservative address allocation practices to eke out the remaining address pools. At the same time, the amount of IPv6 uptake was minimal. The transition plan for IPv6 was largely broken (Figure 7).

NATs

At this point, there was no choice for the Internet, and to sustain growth in the IPv4 network while we were waiting for IPv6 to gather momentum we turned to NATs. NATs were a challenging subject for the IETF. The entire concept of coherent end-to-end communications was to eschew active middleware in the network, such as NATs. NATs created a point of disruption in this model, creating a critical dependency upon network elements. They removed elements of flexibility from the network and at the same time reduced the set of transport options to TCP and UDP.

The IETF resisted any efforts to standardize the behaviour of NATs, fearing perhaps that standard specifications of NAT behaviour would bestow legitimacy on the use of NATs, an outcome that several IETF participants were very keen to avoid. This aversion did not reduce the level of impetus behind NAT deployment. We had run out of IPv4 addresses and IPv6 was still a distant prospect, so NATs were the most convenient solution. What this action did achieve was to create a large variance of NAT behaviours in various implementations, particularly concerning UDP behaviours. This has exacted a cost in software complexity where an application needs to dynamically discover the type of NAT (or NATs) in the network path if it wants to perform anything more complex than a simple two-party TCP connection.

Despite these issues, NATs were a low-friction response to IPv4 address depletion where individual deployment could be undertaken without incurring external dependencies. On the other hand, the deployment of IPv6 was dependent on other networks and servers also deploying IPv6. NATs made highly efficient use of address space for clients, as not only could a NAT use the 16-bit source port field, but by time-sharing the NAT binding, NATs achieved an even greater level of address efficiency. A major reason why we’ve been able to sustain an Internet with tens of billions of connected devices is the widespread use of NATs.
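The address-sharing mechanism can be sketched as a toy port translator: many private (address, port) pairs are mapped onto distinct source ports of a single public IPv4 address, and a port is returned to the pool when its binding expires. This is a simplified model under stated assumptions, not a description of any particular NAT implementation. The class name `PortTranslator`, its methods, and the addresses are all hypothetical.

```python
from collections import deque


class PortTranslator:
    """Toy NAPT: private (proto, address, port) tuples share one public IPv4 address."""

    def __init__(self, public_ip, first_port=1024, last_port=65535):
        self.public_ip = public_ip
        self._free_ports = deque(range(first_port, last_port + 1))
        self._bindings = {}

    def translate(self, proto, private_ip, private_port):
        """Return the public (address, port) used for this private flow."""
        key = (proto, private_ip, private_port)
        if key not in self._bindings:
            if not self._free_ports:
                raise RuntimeError("public port pool exhausted")
            self._bindings[key] = self._free_ports.popleft()
        return self.public_ip, self._bindings[key]

    def expire(self, proto, private_ip, private_port):
        """Drop an idle binding so its public port can be time-shared."""
        port = self._bindings.pop((proto, private_ip, private_port), None)
        if port is not None:
            self._free_ports.append(port)


nat = PortTranslator("203.0.113.7")
print(nat.translate("tcp", "192.168.1.10", 51000))
print(nat.translate("tcp", "192.168.1.11", 51000))
```

Two devices using the same private source port end up on different public ports of the same address, and expiring idle bindings lets the same public port serve different devices over time.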

Server architectures were evolving as well. With the introduction of Transport Layer Security (TLS) in web servers, a step was added during TLS session establishment where the client informs the server of the service name it intends to connect to. Not only did this allow TLS to validate the authenticity of the service point, but this also allowed a server platform to host an extremely large collection of services from a single platform (and a single platform IP address) and perform individual service selection via this TLS Server Name Indication (SNI). The result is that server platforms perform service selection by name-based distinguishers (DNS names) in the session handshake, allowing a single server platform to serve large numbers of individual services. The implications of the widespread use of NATs and the use of server sharing in service platforms have taken the pressure off the entire IPv4 address environment.
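The effect of name-based service selection can be modelled without any TLS machinery: one platform, one IP address, and a lookup keyed on the name the client presents in the handshake. The sketch below is only a model of that dispatch step. The class `SharedPlatform`, its methods, and the service names are hypothetical, and no real TLS handshake is performed.

```python
class SharedPlatform:
    """Model of one server address hosting many named services."""

    def __init__(self, ip_address):
        self.ip_address = ip_address
        self._services = {}

    def host(self, server_name, handler):
        """Register a service under the name clients will present."""
        self._services[server_name.lower()] = handler

    def handle(self, presented_name, request):
        """Select the service by the name from the handshake, not by address."""
        handler = self._services.get(presented_name.lower())
        if handler is None:
            return "unknown service name"
        return handler(request)


platform = SharedPlatform("203.0.113.7")
platform.host("shop.example", lambda req: f"shop handled {req}")
platform.host("news.example", lambda req: f"news handled {req}")

# Both requests arrive at the same IP address; only the presented name differs.
print(platform.handle("shop.example", "GET /"))
print(platform.handle("news.example", "GET /"))
```

In Python’s standard library the corresponding server-side hook is `ssl.SSLContext.sni_callback`, which receives the requested server name during the handshake. The dictionary lookup above stands in for whatever a real platform does at that point.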

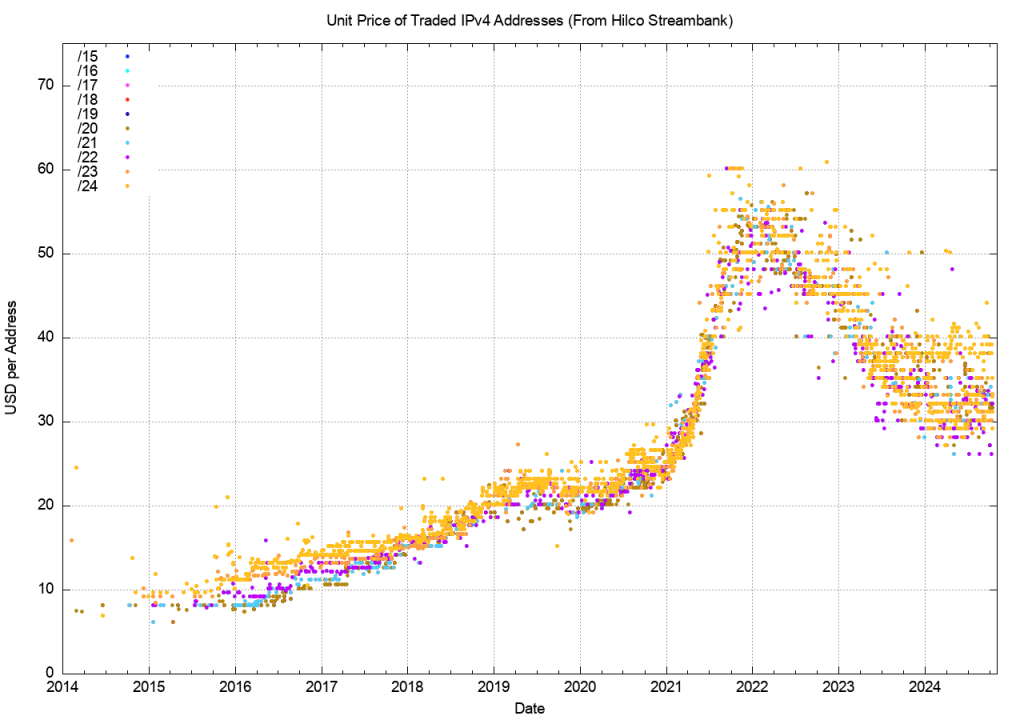

One of the best ways to illustrate the changing picture of address scarcity pressure in IPv4 is to look at the market price of address transfers over the past decade. Scarcity pressure is reflected in the market price. A time series of the price of traded IPv4 addresses is shown in Figure 8.

The COVID-19 period coincided with a rapid price escalation over 2021. Since then, prices have declined to a range of USD 30 to USD 40 per address. While fluctuations from USD 26 to USD 42 have been observed, the price has remained stable within this range in 2024. This price data indicates that IPv4 addresses are still in demand in 2024, but the level of demand appears to have equilibrated against available levels of supply, implying that there is no scarcity premium in evidence in the address market in 2024. This data points to the combination of the efficacy of NATs in extending the efficiency of IPv4 addresses by making use of the 16 bits of port address space plus the additional benefits of using shared address pools.

However, it’s not just NATs that have alleviated the scarcity pressure for IPv4 addresses. Figure 1 indicates that over the past decade, the level of IPv6 adoption has risen to encompass some 40% of the user base of the Internet. Most applications, including browsers, support Happy Eyeballs, which is a shorthand notation for preferring to use IPv6 over IPv4 if both protocols are available for use in support of a service transaction. As network providers roll out IPv6 support, the pressure on their IPv4 address pools for NAT use is relieved due to the applications’ preference to use IPv6 where available.
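The IPv6 preference in Happy Eyeballs can be illustrated by the order in which a client tries its candidate addresses. The sketch below shows only that ordering step: IPv6 first, with the two address families interleaved so that a broken IPv6 path does not stall the connection. A full implementation (as specified in RFC 8305) also staggers the connection attempts in time and uses whichever completes first, which is omitted here. The function name `order_candidates` is an assumption, and the addresses are documentation examples.

```python
import ipaddress
from itertools import zip_longest


def order_candidates(addresses):
    """Order resolved addresses IPv6-first, alternating address families."""
    v6 = [a for a in addresses if ipaddress.ip_address(a).version == 6]
    v4 = [a for a in addresses if ipaddress.ip_address(a).version == 4]
    ordered = []
    for six, four in zip_longest(v6, v4):
        if six is not None:
            ordered.append(six)
        if four is not None:
            ordered.append(four)
    return ordered


resolved = ["192.0.2.10", "2001:db8::10", "192.0.2.11"]
for candidate in order_candidates(resolved):
    print(candidate)
```

On a dual-stack network the first attempt goes to the IPv6 address, so a working IPv6 path carries the session and no IPv4 NAT binding is consumed for it.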

How much longer?

Now that we are somewhere in the middle of this transition, the question becomes: How much longer is this transition going to take?

This seems like a simple question, but it does need a little more explanation. What is the ‘endpoint’ when we can declare this transition to be ‘complete’? Is it a time when there is no more IPv4-based traffic on the Internet? Is it a time when there is no requirement for IPv4 in public services on the Internet? Or do we mean the point when IPv6-only services are viable? Or perhaps we should look at the market for IPv4 addresses and define the endpoint of this transition at the time when the price of IPv4 addresses completely collapses?

Perhaps we should take a more pragmatic approach and, instead of defining completion as the total elimination of IPv4, we could consider it complete when IPv4 is no longer necessary. This would imply that when a service provider can operate a viable Internet service using only IPv6 and having no supported IPv4 access mechanisms at all, then we would’ve completed this transition.

What does this imply? Certainly, the ISP needs to provide IPv6. But, as well, all the connected edge networks and the hosts in these networks also need to support IPv6. After all, the ISP has no IPv4 services at this point of completion of the transition. It also implies that all the services used by the clients of this ISP must be accessible over IPv6. Yes, this includes all the popular cloud services and cloud platforms, all the content streamers and all the content distribution platforms. It also includes specialized platforms such as Slack, Xero, Atlassian, and similar. The data published on Internet Society’s Pulse reports that only 47% of the top 1,000 websites are reachable over IPv6, and clearly a lot of service platforms have work to do, and this will take more time.

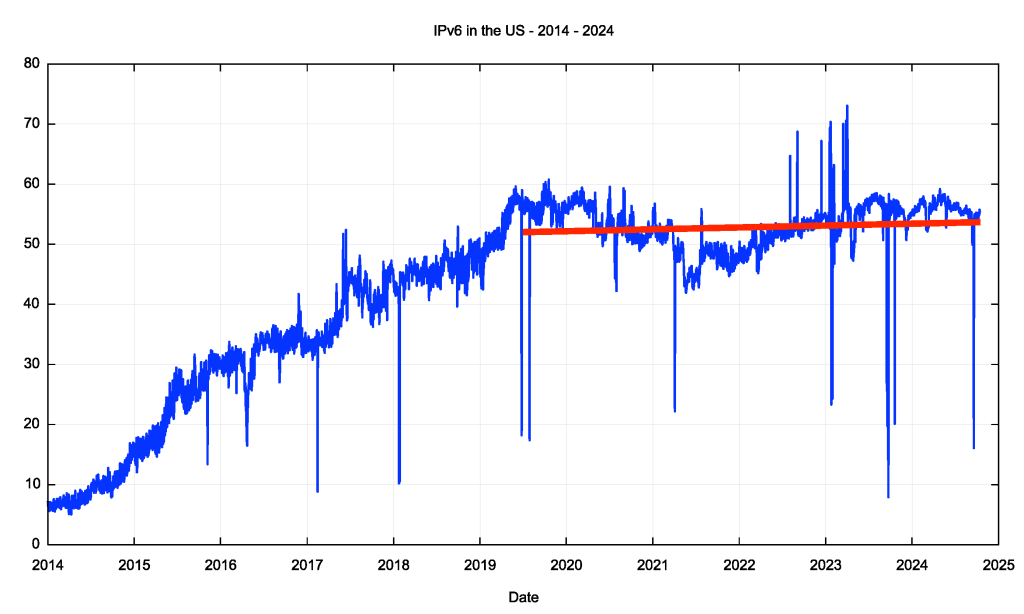

When we look at the IPv6 adoption data for the US there is another somewhat curious anomaly (Figure 9).

The data shows that the level of IPv6 use in the US has remained constant since mid-2019. Why is there no further momentum to continue with the transition to IPv6 in this part of the Internet? I would offer the explanation that the root cause is a fundamental change in the architecture of the Internet.

Changes to the Internet architecture

The major change to the Internet’s architecture is a shift away from a strict address-based architecture. Clients no longer need the use of a persistent unique public IP address to communicate with servers and services. And servers no longer need to use a persistent unique public IP address to provide clients with access to the service or content. Address scarcity takes on an entirely different dimension when unique public addresses are not required to number every client and every distinct service.

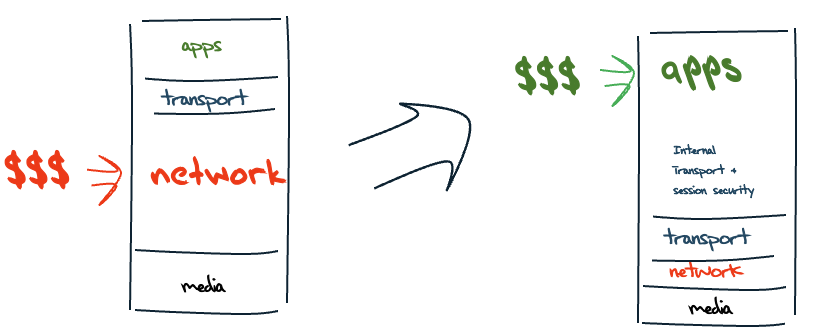

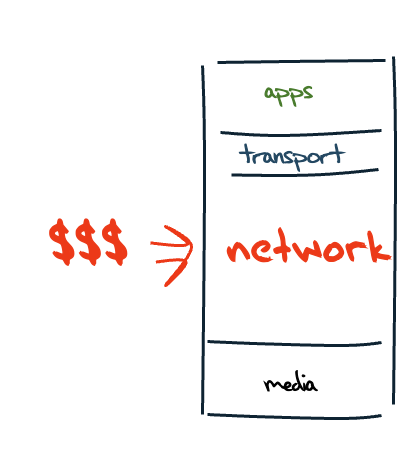

Some of the clues that show the implications of this architectural shift are evident when you look at the changes in the internal economy of the Internet. The original IP model was a network protocol that allowed attached devices to communicate with each other. Network providers supplied the critical resources to allow clients to consume content and access services. At the time, the costs of the network service dominated the entire cost of the operation of the Internet, and in the network domain distance was the dominant cost factor. Whoever provided distance services (so-called ‘transit providers’) were the dominant providers. It’s little wonder that we spent a lot of our time working through the issues of interconnection of network service providers, customer/provider relationships and various forms of peering and exchanges. The ISPs were, in effect, brokers rationing the scarce resource of distance capacity. This was a classic network economy (Figure 10).

For many years the demand for communications services outstripped available inventory, and price was used as a distribution function to moderate demand against available capacity. However, all of this changed due to the effects of Moore’s Law consistently changing the cost of computing and communications.

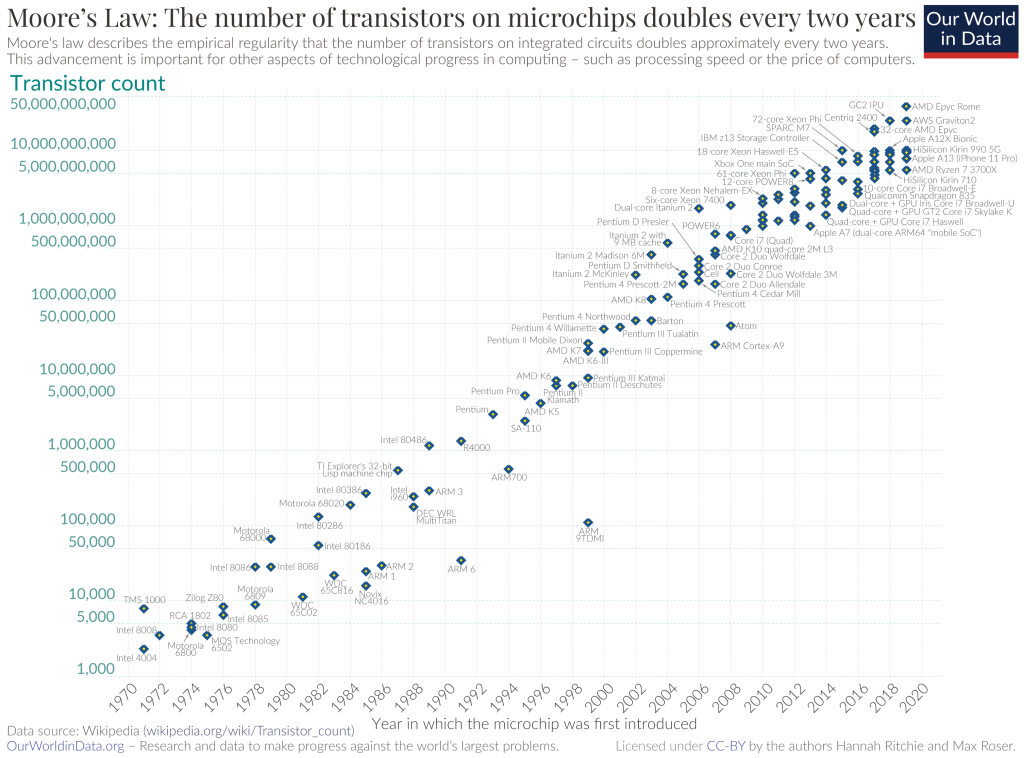

The most obvious change has been in the count of transistors in a single integrated circuit. Figure 11 shows the transistor count over time since 1970.

One of the latest production chips in 2024 is the Apple M3, a 3nm chip with up to 92B transistors. With the possible exception of powering AI infrastructure, these days processing capability is an abundant and cheap resource.

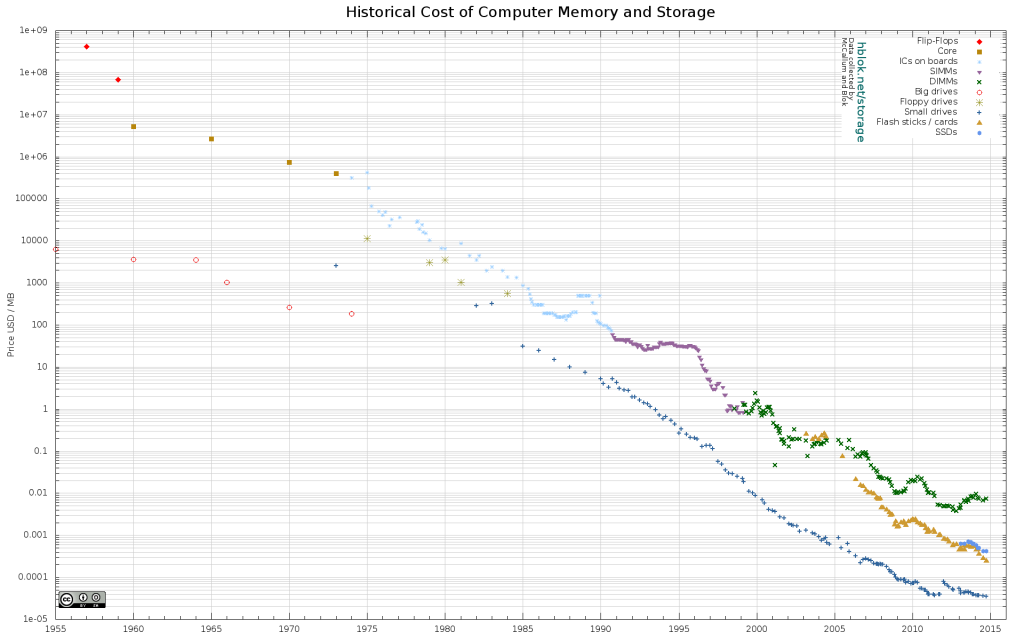

This continual refinement of integrated circuit production techniques has an impact on the size and unit cost of storage (Figure 12). While the speed of memory has been relatively constant for more than a decade, the unit cost of storage has been dropping exponentially for many decades. Storage is also an abundant resource.

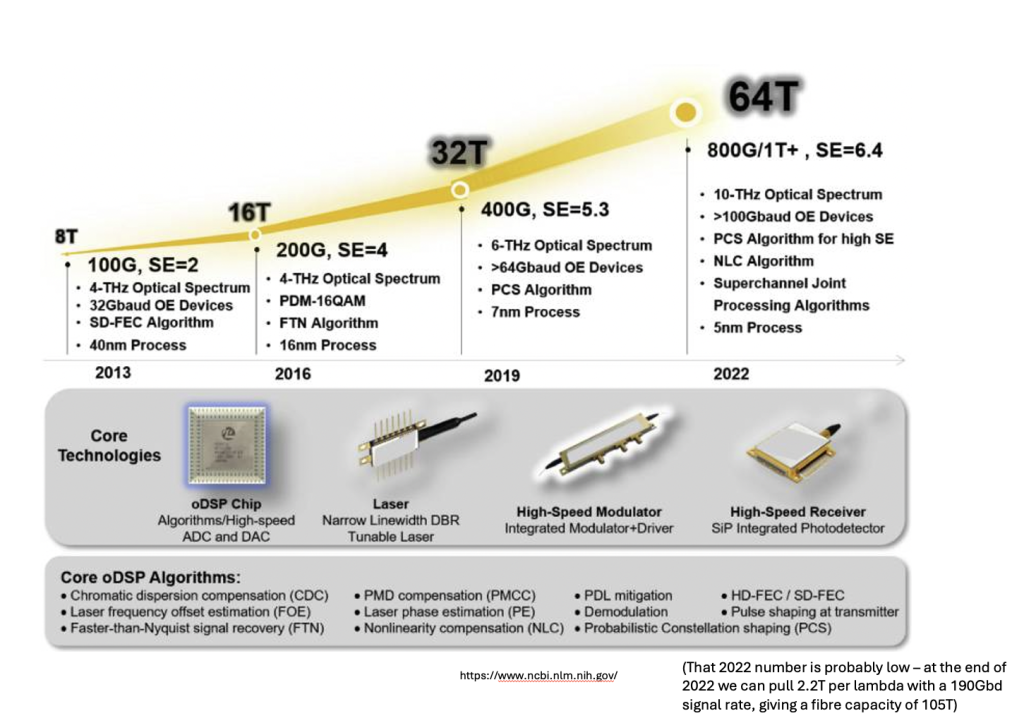

These changes in the capabilities of processing have also had a profound impact on communications costs and capacities. The constraining factor in fibre communications systems is the capabilities of the digital signal processors and the modulators. As silicon capabilities improve, it’s possible to improve the signal processing capabilities of transmitters and receivers, which allows for a greater capacity per wavelength on a fibre circuit (Figure 13).

The shift from scarcity to abundance in processing, storage, and transmission capacity has profoundly impacted the Internet service model. The model has changed from an ‘on-demand’ pull to a ‘just in case’ model of pre-provisioning. These days we load replicas of content and services close to the edge of the network where the users are located and attempt to deliver as much of the content and service as possible from these edge points of presence to the users in the adjacent access networks. These changes in the underlying costs of processing and storage have provided the impetus for the expansion of various forms of Content Distribution Networks (CDNs), which now serve almost the entirety of Internet content and services. In so doing, we’ve been able to eliminate the factor of distance from the network and most network transactions occur over short spans.

The overall result of these changes is the elimination of distance in pushing content and services to clients. We can exploit the potential capacity in 5G mobile networks without the inefficiencies of operating the transport protocol over a high-delay connection. Today’s access networks operate with greater aggregate capacity, and the proximity of the service delivery platform and client allows transport protocols to make use of this capacity, as transport sessions that operate over a low latency connection are also far more efficient. Service interactions across shorter distances using higher capacity circuitry result in a much faster Internet!

As well as being ‘bigger’ and ‘faster’, this environment of abundant communications, processing and storage capacity is operating in an industry where there are significant economies of scale. Much of this environment is funded by leveraging a shared asset, the advertising market, that can’t be effectively capitalized on by individuals alone. The outcome of these changes is that a former luxury service accessible to just a few has been transformed into an affordable mass-market commodity service available to all.

However, it’s more than that. This shift into an abundance of basic inputs for the digital environment has shifted the economics of the Internet as well. The role of the network as the arbiter of the scarce resource of communication capability has dissipated. In response, the economic focus of the Internet economy has shifted up the protocol stack to the level of applications and services (Figure 14).

Now, let’s return to the situation of the transition to IPv6. The responsibility falls on networks and network operators to invest in transitioning to a dual-stack platform initially, with the eventual goal of phasing out IPv4 support. But this change is not really visible, or even crucial, to the content or service world. If IPv4 and NATs perform the carriage function adequately, then there is no motivation for the content and service operators to pay a network a premium to have a dual-stack platform.

It’s domain names that operate as service identifiers, and it’s domain names that underpin the user tests of authenticity of the online service. It’s the DNS that increasingly is used to steer users to the ‘best’ service delivery point for content or service. From this perspective, addresses, IPv4 or IPv6, are not the critical resource for a service and its users. The ‘currency’ of this form of CDN network is names.

So where are we in 2024? Today’s public Internet is largely a service delivery network using CDNs to push content and service as close to the user as possible. The multiplexing of multiple services onto underlying service platforms is an application-level function tied largely to TLS and service selection using the SNI field of the TLS handshake. We use DNS for ‘closest match’ service platform selection, aiming for CDNs to connect directly to the access networks where users are located. This results in a CDN’s BGP routing table with an average AS-PATH length designed to converge to just 1! From this aspect the DNS has supplanted the role of routing! While we don’t route ‘names’ on today’s Internet, it functions in a way that is largely equivalent to a named data network.

There are a few additional implications of this architectural change for the Internet. TLS, like it or not (and there is much to criticize about the robustness of TLS), is the sole underpinning of authenticity in the Internet. DNSSEC has not gathered much momentum to date. DNSSEC is too complex, too fragile and just too slow to use for most services and their users. Some value its benefits highly enough that they are prepared to live with its shortcomings, but that’s not the case for most name holders and most users, and no amount of passionate exhortations about DNSSEC will change this! It supports the view that it’s not the mapping of a name to an IP address that’s critical. What is critical is that the named service can demonstrate that it is operated by the owner of the name. Secondly, RPKI, the framework for securing information being passed in the BGP routing protocol, is really not all that useful in a network where there is no routing!

The implication of these observations is that the transition to IPv6 is progressing very slowly not because this industry is chronically short-sighted. There is something else going on here. IPv6 alone is not critical to a large set of end-user service delivery environments. We’ve been able to take a 1980s address-based architecture and scale it more than a billion-fold by altering the core reliance on distinguisher tokens from addresses to names. There was no real lasting benefit in trying to leap across to just another 1980s address-based architecture (with only a few annoying stupid differences, apart from longer addresses!).

Where is this heading in the longer term? We are pushing everything out of the network and over to applications. Transmission infrastructure is becoming an abundant commodity. Network sharing technology (multiplexing) is decreasingly relevant. We have so many network and computing resources that we no longer must bring consumers to service delivery points. Instead, we are bringing services towards consumers and using the content frameworks to replicate servers and services. With so much computing and storage the application is becoming the service rather than just a window to a remotely operated service.

If that’s the case, then will networks matter anymore? The last couple of decades have seen us stripping out network-centric functionality and replacing this with an undistinguished commodity packet transport medium. It’s fast and cheap, but it’s up to applications to overlay this common basic service with its own requirements. As we push these additional functions out to the edge and ultimately off the network altogether, we are left with simple dumb pipes!

At this point, it’s useful to ask: what ‘defines’ the Internet? Is the classic response, namely, ‘a common shared transmission fabric, a common suite of protocols and a common protocol address pool’, still relevant these days? Or is today’s network more like ‘a disparate collection of services that share common referential mechanisms using a common namespace’?

When we think about what’s important to the Internet these days, is the choice of endpoint protocol addressing really important? Is universal unique endpoint addressing a 1980s concept whose time has come and gone? If network transactions are localized, then what is the residual role of unique global endpoint addressing for clients or services? And if we cannot find a role for unique endpoint addressing, then why should we bother? Who decides when to drop this concept? Is this a market function, so that a network that uses local addressing can operate from an even lower cost base and gain a competitive market edge? Or are carriage services so cheap already that the relative benefit in discarding the last vestiges of unique global addresses is so small that it’s just not worth bothering about?

And while we are pondering such questions, what is the role of referential frameworks in networks? Without a common referential space then how do we usefully communicate? What do we mean by ‘common’ when we think about referential frameworks? How can we join the ‘fuzzy’ human language spaces with the tightly constrained deterministic computer-based symbol spaces?

Certainly, there is much to think about here!

The views expressed by the authors of this blog are their own and do not necessarily reflect the views of APNIC. Please note a Code of Conduct applies to this blog.

There are definitely some costs for CDNs running on v4, in that they need at least a /24 for each metro they operate in. If they fully embrace anycast maybe that goes away, but they need to deal with a bunch of edge cases there.

Similarly for ISPs, ever denser CG-NAT boxes cost money, as do the v4 blocks they need to operate. They also introduce complexity in the topology.

The costs may not be enough to tip the world to the alternative, but they do exist.

Another cost to consider is handling users behind CGNAT. A flood of traffic from a single address could indicate CGNAT, or it could indicate a malicious bot and it’s difficult to differentiate short of aggressively tracking users (which brings up its own privacy concerns).

This results in extra costs for the CDN, and inconvenience for the users who find themselves banned from services or forced to complete a CAPTCHA.

The question about anycast and CDNs is an interesting one. If the anycast server constellation is dense enough then anycast is incredibly effective, and given that there are only some 6,000 transit ASes in the IPv4 network, it’s reasonable to assume that once a CDN deployment gets up to 1,500 individual points of presence, anycast will be highly effective. So the larger CDNs, paradoxically, need fewer unique addresses, as they can rely on local route propagation to perform “steering”. It’s only the smaller CDNs and those historically wedded to the concept (such as Akamai) that perform DNS steering.

As usual in the Internet these days, the bigger you get the lower the unit costs get.

> and apparently, it all seems to be working!

It’s *not* working.

It’s resulted in massive centralization. It’s made it nearly impossible for anything but the Web to work well, and the Web is not the Internet… or at least the Web is not what the Internet is supposed to be. CDNs fix the Web, not the Internet.

You can only shift away from an “address based architecture” if you’re willing to accept that delivering mostly HTTP traffic, basically entirely to-or-through huge centralized services, is the only important goal.

I’m not willing to accept that. The Internet is supposed to be a communication service, not a “content delivery” service. It’s only working correctly when any node can spontaneously communicate with any other node, without having to agree on some choke point.

I appreciate (and to some extent share) your frustration as to how all this has turned out. The aspirations that were behind “the open Internet” and the associated ideals of open societies built upon universally accessible communications have been largely abandoned these days. And that’s a deep disappointment to many who valued that original broad vision.

But you have to bear in mind that no one is “in charge” here, and the Internet is shaped by a set of commercial actors who are responding to their perceptions of user needs. The Internet in which we find ourselves is the cumulative result of individual actors responding to perceived needs of customers and at the same time attempting to establish a strong market position for themselves. The result of this is an environment which has become massively centralised, largely privatised, and in terms of technical diversity more and more of a monoculture. Yes, it’s the CDN-delivered Web and not much else.

But how could you or I change that outcome? Individually my purchasing decisions have absolutely no impact on these Internet behemoths. We end up relying solely on regulatory relief and the saga of the past decade of regulatory responses has done little other than to reveal the impotence of regulatory measures when pitched against such massive behemoths.

Yes, the Internet of the 1980s was a completely different place. Completely. And nostalgia for that earlier environment is fine and completely understandable. But it’s not where we are today, and no one seems to know if it’s even possible to take a path that leads back there and, more importantly, how the market would naturally dismantle all the power and influence that it has accreted to these large players over the past 2–3 decades to achieve such an outcome!

I’m seriously dissatisfied with the near-feudal political economy that’s taken hold in a now-major part of human affairs. I find I feel a little insulted to have that called “nostalgia”.

I think one point that was implied but glossed over is the niche of peer-to-peer connectivity. IPv6 simplifies connecting to your home NAS from your phone while on the road, with your home becoming a service provider. Your home IPv6 address will hopefully be relatively static and rarely change, if ever. But it doesn’t simplify the reverse. How do you connect to your phone to push a notification directly?

You still need a broker somewhere in the middle to keep track of what address your phone is currently available on as it roams across cellular and Wi-Fi networks. Dynamic DNS is one option but a bad one due to propagation time being measured potentially in hours. That puts the requirements back onto the application anyway to provide that pairing service and you are no longer truly P2P.

But you also want to ensure you are talking to who you think you are when establishing an encrypted tunnel. So that means your central pairing service also needs to be in charge of handing out keys or signing certificates for all the parties.

But now you’re just describing a peer-to-peer VPN with a central orchestrator. IPv6 solved everybody getting an IP address, but it didn’t end up solving any of the issues of peer-to-peer connectivity that really matter. And the solutions all involve a service that mostly obviates the need for end to end addressing.

Dynamic DNS, though imperfect, can work fine.

Set the TTLs to zero and there will be no “propagation” delay. Though I know Geoff’s not a fan of that either 🙂
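To make the TTL trade-off concrete, here is a toy Python sketch of a caching resolver. It is only an illustration under stated assumptions: the `CachingResolver` class, the name `phone.example` and the 2001:db8:: addresses are all hypothetical, and the cache is assumed to honour the published TTL exactly, which real resolvers do not always do.

```python
class CachingResolver:
    """Toy resolver cache that honours the TTL published with each record."""

    def __init__(self, authoritative: dict):
        # authoritative maps name -> (address, ttl_seconds); it stands in
        # for the dynamic DNS zone and may be updated at any time.
        self.authoritative = authoritative
        self.cache = {}  # name -> (address, expires_at)

    def lookup(self, name: str, now: float) -> str:
        cached = self.cache.get(name)
        if cached is not None and now < cached[1]:
            return cached[0]  # still within TTL: may be stale
        address, ttl = self.authoritative[name]
        if ttl > 0:
            self.cache[name] = (address, now + ttl)
        return address  # a TTL of zero is never cached


if __name__ == "__main__":
    zone = {"phone.example": ("2001:db8::1", 3600)}
    resolver = CachingResolver(zone)
    print(resolver.lookup("phone.example", now=0))     # first answer is cached
    zone["phone.example"] = ("2001:db8::2", 3600)      # the phone moves
    print(resolver.lookup("phone.example", now=60))    # old address until expiry
    print(resolver.lookup("phone.example", now=3600))  # refreshed after the TTL
```

With a one-hour TTL the cache keeps handing out the old address until the record expires. With a TTL of zero every lookup goes back to the authoritative data, at the cost of a query per lookup.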

To misquote Pirates of the Caribbean, TTLs are more what you’d call “guidelines” than actual rules! 🙂

For notifications…

Make a connection and leave the connection idle until something is received. If the address of the device changes, reopen the connection.

This is how MS ActiveSync works as well as other protocols. If there is no state tracking firewall or NAT gateway in the way to close the idle connection (because it occupies space in the finite size state table) then this approach works well.

That certainly would work but I’m not sure I would consider Microsoft Exchange ‘peer to peer’. You also don’t really have a need for IPv6 in such an arrangement.

Very interesting piece. I suppose a major sharp edge remaining is IPv4 identifier reputation when it comes to things like email transmission (which is now basically a closed shop for a handful of providers) and AAA/data retention requirements for ISPs and legal requests for identifying requests, which CGNAT and TLS muddy significantly.

IPv6 traffic will inevitably increase because of 2 factors:

First of all, we are at the very start of the Private Cloud Compute “AI for your phone without killing your battery but preserving privacy”, which means *much* more native v6 phone traffic: orders of magnitude more.

Secondly, the current model of NAT’d infrastructure behind a router kinda assumes that people and businesses have physical homes with power and inet which isn’t necessarily going to be the case in surprisingly few years once we go above 2C warming. (1.6C this year so probably before 2045)

IPv6 makes more sense when your eyeballs aren’t safely behind their fttp but are instead utilising emergency decentralised networking. So just give it time.

Meanwhile those of us that have been running in native v6 for the last few decades can continue to enjoy the lower latency and better responsiveness of the internets due to the majority of eyeballs being kept on v4 using increasingly torturous methods by telcos still running IOS 11.x on their core routers.

Daniel J. Bernstein (author of djbdns and qmail) wrote his article “The IPv6 mess” about 20 years ago. He was right. It’s a mess.

Thank you for the article Geoff.

I’m wondering if the same shift regarding the transformation of the network economy from network level to application level also applies to 3rd world countries, like countries in Africa?

I’m located in South Africa, and 46% of the population are not yet covered for Fibre access internet.

Does this perhaps mean the view of transition to IPv6 for 3rd world countries is different?

very nice article. there is a typo:

“the 32-bt address field” – 32-BIT 🙂

Thanks for catching that Reinhard. We’ve corrected it.

I always took IPv6 inevitability for granted. This article opened my eyes for a different perspective. Thanks.

IPv6 is a replacement for IPv4. Eventually IPv4 would no longer be needed. This is because of the hourglass model. IP is at the core at the network layer: there is only one network protocol. This is why the transition from IPv4 to IPv6 has been difficult. However, thought was given to how the two could coexist, at least temporarily. But those strategies have been so successful that they have favoured retaining IPv4 for too long.

“I would offer the explanation that the root cause is a fundamental change in the architecture of the Internet.”

That is not right. The architecture of IPv4 has remained the same. It is the way we use it that has changed.

The hourglass model itself is in the layered network architecture. The layered network architecture also came from OSI. OSI got the layered approach from X.25, which as far as I know was devised as a 3-layer system (hardware/physical, network protocol, application protocol) by Peter Linnington of University of Cambridge in Kent.

Now OSI detractors made the point that OSI was overblown with 7 layers, and TCP/IP is simpler with 5. However, the upper three layers of OSI are just the application layer of TCP/IP. IEEE is only interested in the lower 4 layers. W3C is one of the organisations interested in the application layer, and for most architectures, there are actually 3 sublayers in the TCP/IP application layer, as a session layer, presentation layer, and the layer for application protocols themselves.

IP is at the core in the neck of the hourglass (layer 3). To run an internet, things must be common and standard at this core. Layer 4 is the transport layer, in which several transport protocols can exist, UDP being the most basic and a thin connectionless layer on top of IP. TCP provides a connection-oriented layer for reliability. This is where religious wars between connection-oriented and connectionless people arose. It was all ridiculous and based on little understanding of the requirements for quickness with no guarantee of reliability, or for more time spent on reliability (often needed). There are also streaming protocols (for semi-reliability). On top at layer 5 (which itself is split into 5, 6, and 7) are the myriads of potential applications and distributed systems.

Below the hourglass neck is the layer of protocols like Ethernet and Wi-Fi. Layer 1 is the physical media of copper wire, fibre, wireless. Each layer provides an abstraction for the layer above. Protocols only relate between immediate layers. TCP has no interaction with Ethernet or Wi-Fi. This ensures flexibility and independence, being able to adopt different physical media and different protocols. That is except at layer 3, the network layer and hourglass neck.

This has been the fundamental architecture of the Internet. It has not changed.

“The bottom line was that IPv6 did not offer any new functionality that was not already present in IPv4. It did not introduce any significant changes to the operation of IP. It was just IP, with larger addresses,” Huston wrote.

That is not correct. IPv6 was more than just being IPv4 with larger addresses. It saw the adoption of better protocols (from OSI as it turns out). In fact, OSI did not die, it just became IPv6. Perhaps that was psychologically too much for some of those who disparaged OSI. This is rather typical of this industry where people feel they must join the cult of certain technologies, not see the shortcomings, but disparage the other contenders, even if superior. That has certainly been the case with the C/C++ cult.

IPv6 has not just the larger address space, but also new streamlined protocols and simpler headers that include a next header field to carry a processing agenda, which means we can insert extra functionality without changing the IP(v6) header, a very clever change. It also has support for flows, jumbograms, extra authentication and privacy, unicast, multicast, anycast, etc. (see Douglas Comer, ‘Internetworking with TCP/IP’).
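For readers who want to see the header layout being referred to, here is a small Python sketch that packs and parses the 40-byte fixed IPv6 header, including the next header and flow label fields. The function names and the sample values (addresses from the 2001:db8::/32 documentation prefix, an arbitrary flow label) are assumptions made for the example, not anything taken from the article.

```python
import ipaddress
import struct

# Fixed IPv6 header, 40 bytes in network byte order:
#   first 32 bits: version (4) | traffic class (8) | flow label (20)
#   payload length (16), next header (8), hop limit (8)
#   source address (128), destination address (128)
IPV6_HEADER = struct.Struct("!IHBB16s16s")


def parse_ipv6_header(packet: bytes) -> dict:
    """Decode the fixed header at the start of an IPv6 packet."""
    if len(packet) < IPV6_HEADER.size:
        raise ValueError("an IPv6 header needs at least 40 bytes")
    first_word, payload_length, next_header, hop_limit, src, dst = (
        IPV6_HEADER.unpack_from(packet)
    )
    return {
        "version": first_word >> 28,
        "traffic_class": (first_word >> 20) & 0xFF,
        "flow_label": first_word & 0xFFFFF,
        "payload_length": payload_length,
        # Identifies what follows: an extension header or the upper-layer
        # protocol (for example 6 for TCP, 17 for UDP).
        "next_header": next_header,
        "hop_limit": hop_limit,
        "src": ipaddress.IPv6Address(src),
        "dst": ipaddress.IPv6Address(dst),
    }


def build_example_header() -> bytes:
    """Build a sample header: version 6, flow label 0x12345, TCP payload."""
    first_word = (6 << 28) | (0 << 20) | 0x12345
    return IPV6_HEADER.pack(
        first_word,
        20,   # payload length in bytes (sample value)
        6,    # next header: TCP
        64,   # hop limit
        ipaddress.IPv6Address("2001:db8::1").packed,
        ipaddress.IPv6Address("2001:db8::2").packed,
    )


if __name__ == "__main__":
    for field, value in parse_ipv6_header(build_example_header()).items():
        print(f"{field}: {value}")
```

The fixed header stays the same size whatever follows it. Optional functions are chained in through the next header value rather than through variable-length options inside the header itself.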

Thus I think some of Huston’s assumptions and assertions are wrong. However, that does not necessarily disprove his assertion that IPv6 does not need to be adopted, although I think it should be, since it provides many advantages over-and-above expanded addressing.

Transition

“While the design of IPv6 consumed a lot of attention at the time, the concept of transition of the network from IPv4 to IPv6 did not.

“Given the runaway adoption of IPv4, there was a naive expectation that IPv6 would similarly just take off, and there was no need to give the transition much thought.”

I don’t believe that is true. I can’t say when the transition strategies were devised, but they are clear, involving the dual-stack approach, translation, and tunnelling. These have allowed there to be no clear transition date, and perhaps that is the problem: IPv4 can hang around far beyond when it should have been replaced by IPv6. The other explanations are the hourglass model as above, and simply the resistance of those who already have their IPv4 infrastructure and who don’t want any expense. So in the meantime the rest of the world must bear the expense of a slower transition.

While clever methods of extending IPv4, including NAT, are around, they really are just band-aid solutions. Eventually, we should go with the permanent fix, not only for the larger address space, but other advantages as well.

However, this is a strange industry. While the ‘soft’ software should make us adaptable to change, flexible, and able to embrace new ideas, it is often that last point that we fail on and computing people themselves are often the ones most resistant to adopting better technologies. One reason is the worse a technology, the tighter the lock in, both technically and psychologically.

This is why inferior products are often rushed to market in order to achieve lock-in. While IPv4 was not rushed to market, it has achieved lock-in due to the hourglass model.

Thank you Ian.

Very interesting.