This article is a repost from Pavel Odintsov’s blog and summarizes the decade-long experience accumulated during the development of FastNetMon, a high-performance DDoS detection tool with an open source core. Part 2 examines the eBPF implementation.

Detecting DDoS attacks

To detect DDoS attacks in a telecommunications network we need to see the traffic that traverses the network, and we have multiple protocols for that purpose:

- IPFIX

- NetFlow v9

- sFlow v5

- PSAMP

All these protocols are UDP-based and unidirectional. Each UDP packet may carry information about 1 to 20 packets or flows observed by the network equipment.

Fortunately, all these protocols use some kind of sampling (for instance, selecting 1 packet out of every 1,000 and sending it to the collector) or aggregation (collecting multiple packets that belong to the same 5-tuple and reporting them as a single multi-packet flow once per period, typically 15 to 30 seconds). As a result, even for huge networks with terabits of capacity, the collector typically receives only 10,000 to 100,000 packets per second of UDP traffic.
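To make that range concrete for the sampling case, here is a back-of-the-envelope estimate. It's a rough sketch, not a measurement: the link speed, average packet size, and samples per datagram are illustrative assumptions, the function name `estimate_sampled_load` is hypothetical, and flow aggregation (NetFlow/IPFIX) would need a different model.

```cpp
#include <cstdint>

// Result of a rough telemetry-load estimate for a sampling exporter.
struct TelemetryEstimate {
    double sampled_packets_per_second;  // samples the exporter generates
    double datagrams_per_second;        // UDP datagrams reaching the collector
};

// Hypothetical helper: all inputs are assumptions supplied by the caller.
//   link_bits_per_second  - traffic actually crossing the monitored links
//   average_packet_bytes  - assumed mean packet size on those links
//   sampling_rate         - N in "1-in-N" sampling
//   samples_per_datagram  - how many samples the exporter packs per UDP packet
TelemetryEstimate estimate_sampled_load(double link_bits_per_second,
                                        double average_packet_bytes,
                                        double sampling_rate,
                                        double samples_per_datagram) {
    const double packets_per_second = link_bits_per_second / (average_packet_bytes * 8.0);
    const double sampled = packets_per_second / sampling_rate;
    return {sampled, sampled / samples_per_datagram};
}
```

With illustrative inputs of 1 Tbit/s, 1,000-byte average packets, 1-in-1,000 sampling, and 10 samples per datagram, this gives 125,000 sampled packets per second packed into 12,500 datagrams per second, which sits inside the range quoted above.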

Scaling is hard with only one thread

Our initial UDP server implementation was very basic and used a single thread to process all traffic from the network equipment. I will use C++ for my examples, but all the capabilities described are part of the C system call API provided by Linux and can be used from any language that can make system calls. The complete source code for this example is available.

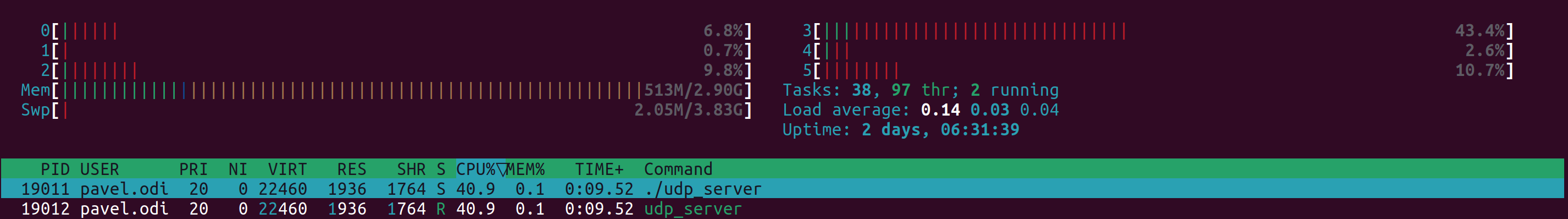
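The screenshot above shows the author’s code as an image. As a rough text equivalent, a minimal single-threaded receiver might look like the sketch below. It is our illustration rather than the repository’s code: the function names, the 64 KiB buffer, and the once-per-second reporting are assumptions.

```cpp
#include <arpa/inet.h>
#include <netinet/in.h>
#include <sys/socket.h>
#include <sys/types.h>
#include <unistd.h>

#include <chrono>
#include <cstdint>
#include <cstdio>

// Hypothetical helper: creates a UDP socket bound to 0.0.0.0:port.
// Returns the descriptor, or -1 on error.
int create_udp_server_socket(std::uint16_t port) {
    int fd = socket(AF_INET, SOCK_DGRAM, 0);
    if (fd < 0) {
        return -1;
    }
    sockaddr_in addr{};
    addr.sin_family = AF_INET;
    addr.sin_addr.s_addr = htonl(INADDR_ANY);
    addr.sin_port = htons(port);
    if (bind(fd, reinterpret_cast<sockaddr*>(&addr), sizeof(addr)) != 0) {
        close(fd);
        return -1;
    }
    return fd;
}

// Receives datagrams forever on the calling thread and prints the rate.
// The clock is checked after each datagram, so nothing prints while idle.
[[noreturn]] void run_capture_loop(int fd) {
    static char buffer[65536];  // large enough for any UDP payload
    std::uint64_t packets = 0;
    auto window_start = std::chrono::steady_clock::now();
    std::puts("Started capture");
    for (;;) {
        ssize_t received = recv(fd, buffer, sizeof(buffer), 0);
        if (received >= 0) {
            ++packets;  // no parsing at all: we only count datagrams
        }
        auto now = std::chrono::steady_clock::now();
        if (now - window_start >= std::chrono::seconds(1)) {
            std::printf("%llu packets / s\n", static_cast<unsigned long long>(packets));
            packets = 0;
            window_start = now;
        }
    }
}
```

Calling `run_capture_loop(create_udp_server_socket(2055))` from `main` would listen on UDP port 2055, the destination port that appears in the tcpdump output later in this article. Every datagram is handled by that one thread, which is exactly the bottleneck discussed below.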

This implementation handles all UDP traffic using a single thread:

Started capture

79903 packets / s

80942 packets / s

80880 packets / s

79562 packets / s

80228 packets / s

As you can see, even our example UDP server, which does no packet parsing or data processing, used half of a CPU core. Because of the single-threaded design, only the remaining 50% of that core is available for all other tasks, which is clearly not enough. These tests were run on an Intel Xeon E5-2697A v4.

First improvement — two threads and load balance by hand

What is the most obvious way to increase the CPU power available for our tasks? We can run another thread on another port and reconfigure part of the network equipment to send telemetry to that port. We used this approach for many years. It wasn’t very user friendly, as you need to guess the amount of telemetry each device sends and balance the load manually.
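A sketch of that manual approach: one plain socket and one thread per port, with each device configured by hand to export to one of the ports. The port numbers an operator would choose (for example 2055 and 2056) and the helper names `bind_udp_port`, `serve_port`, and `run_one_thread_per_port` are hypothetical.

```cpp
#include <arpa/inet.h>
#include <netinet/in.h>
#include <sys/socket.h>
#include <unistd.h>

#include <cstdint>
#include <cstdio>
#include <thread>
#include <vector>

// Plain UDP socket bound to 0.0.0.0:port (no SO_REUSEPORT); -1 on error.
int bind_udp_port(std::uint16_t port) {
    int fd = socket(AF_INET, SOCK_DGRAM, 0);
    if (fd < 0) {
        return -1;
    }
    sockaddr_in addr{};
    addr.sin_family = AF_INET;
    addr.sin_addr.s_addr = htonl(INADDR_ANY);
    addr.sin_port = htons(port);
    if (bind(fd, reinterpret_cast<sockaddr*>(&addr), sizeof(addr)) != 0) {
        close(fd);
        return -1;
    }
    return fd;
}

// Worker: drains one socket; only devices pointed at this port end up here.
void serve_port(int fd) {
    char buffer[65536];
    while (recv(fd, buffer, sizeof(buffer), 0) >= 0) {
        // parse telemetry from the devices assigned to this port
    }
    close(fd);
}

// Binds every port first, so a conflict is reported before any thread starts.
// Returns false on a bind failure; otherwise blocks while the workers run.
bool run_one_thread_per_port(const std::vector<std::uint16_t>& ports) {
    std::vector<int> fds;
    for (std::uint16_t port : ports) {
        int fd = bind_udp_port(port);
        if (fd < 0) {
            std::perror("bind");
            for (int open_fd : fds) {
                close(open_fd);
            }
            return false;
        }
        fds.push_back(fd);
    }
    std::vector<std::thread> threads;
    for (int fd : fds) {
        threads.emplace_back(serve_port, fd);
    }
    for (auto& t : threads) {
        t.join();
    }
    return true;
}
```

The load on each thread depends entirely on which devices were assigned to which port, which is why this approach required guessing per-device telemetry volumes.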

Second improvement — SO_REUSEPORT

What is the best way to improve the experience and process traffic arriving on the same port with multiple threads? We can use the SO_REUSEPORT socket option, which has been available in Linux since version 3.9.

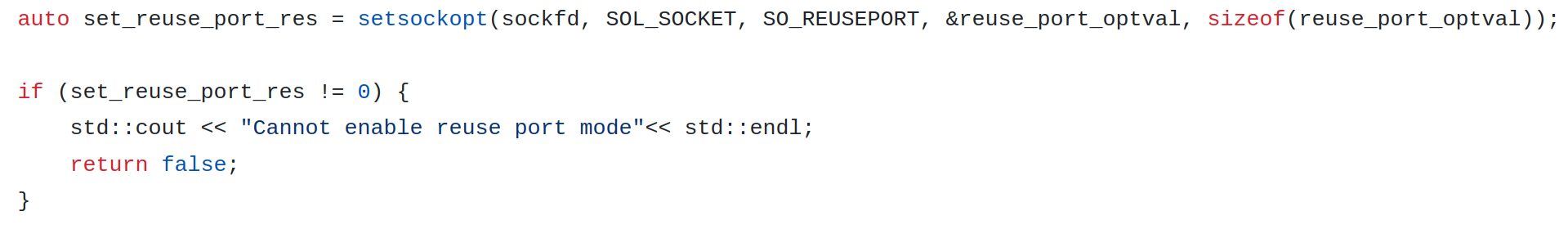

How does it work? It’s implemented in a very intuitive way. We spawn multiple threads, and in each of them we create a separate UDP server socket and bind it to the same host and port. The only difference is that we need to enable the SO_REUSEPORT socket option on every socket before calling bind(). Otherwise, Linux will reject the second bind with an error:

"errno:98 error: Address already in use"

The Linux kernel then distributes packets between these sockets using a hash of the 4-tuple (server IP and port plus client IP and port). When telemetry comes from several network devices, this works just fine.
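To see why a single busy exporter always lands on the same thread, here is a toy model of the selection step. The kernel computes a keyed hash of the 4-tuple whose secret is generated at runtime, so userspace cannot reproduce it. The sketch substitutes 32-bit FNV-1a purely for illustration, then maps the hash onto a socket index with the same multiply-and-shift as the kernel’s `reciprocal_scale()` helper. `FourTuple`, `toy_tuple_hash`, and `select_socket_index` are hypothetical names.

```cpp
#include <cstdint>

// Hypothetical 4-tuple of an incoming datagram (host byte order).
struct FourTuple {
    std::uint32_t src_ip;
    std::uint32_t dst_ip;
    std::uint16_t src_port;
    std::uint16_t dst_port;
};

// Stand-in for the kernel's keyed tuple hash: plain 32-bit FNV-1a.
// NOT the kernel's function; it only shares the "same input, same output" property.
std::uint32_t toy_tuple_hash(const FourTuple& t) {
    std::uint32_t h = 2166136261u;
    auto mix = [&h](std::uint32_t value, int bytes) {
        for (int i = 0; i < bytes; ++i) {
            h ^= (value >> (8 * i)) & 0xFFu;
            h *= 16777619u;
        }
    };
    mix(t.src_ip, 4);
    mix(t.dst_ip, 4);
    mix(t.src_port, 2);
    mix(t.dst_port, 2);
    return h;
}

// Same arithmetic as reciprocal_scale(): maps a 32-bit hash onto
// [0, num_sockets) with a multiply and a shift instead of a division.
std::uint32_t select_socket_index(const FourTuple& t, std::uint32_t num_sockets) {
    return static_cast<std::uint32_t>(
        (static_cast<std::uint64_t>(toy_tuple_hash(t)) * num_sockets) >> 32);
}
```

A tuple such as 192.168.1.105:50151 to 10.16.8.21:2055 maps to the same index on every call, however many packets it sends; only a population of distinct clients spreads across the sockets.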

You can find all source code for this SO_REUSEPORT example in this repository. Most of the changes compared with the single-threaded implementation add logic to spawn Linux threads and are not related to the network stack at all. The only change required in the socket logic was the following (Figure 2):

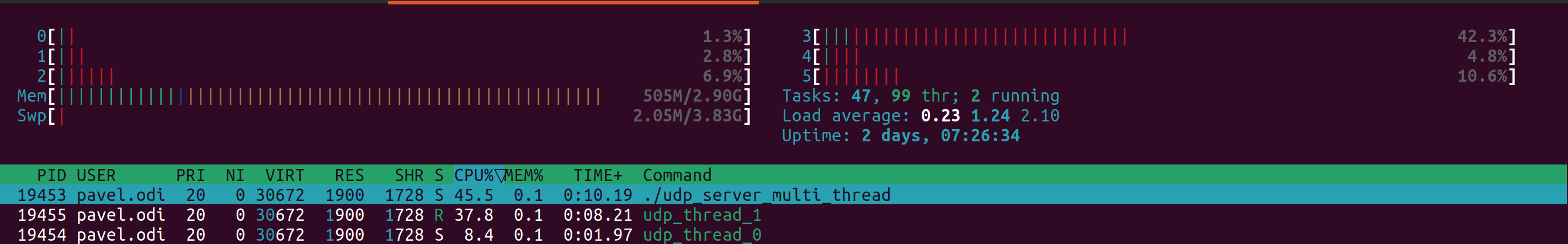
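The change above is shown as an image. A text sketch of the same idea follows: every thread opens its own socket, sets SO_REUSEPORT before bind(), and binds to the same host and port. The helper names, thread count, and buffer size are our assumptions rather than the repository’s code.

```cpp
#include <arpa/inet.h>
#include <netinet/in.h>
#include <sys/socket.h>
#include <unistd.h>

#include <cstdint>
#include <cstdio>
#include <thread>
#include <vector>

// Hypothetical helper: UDP socket on host:port that may share the port with
// other SO_REUSEPORT sockets. Returns the descriptor, or -1 on error.
int create_reuseport_udp_socket(const char* host, std::uint16_t port) {
    int fd = socket(AF_INET, SOCK_DGRAM, 0);
    if (fd < 0) {
        return -1;
    }
    int enable = 1;
    // Must be set before bind() on every socket in the group; otherwise the
    // second bind() fails with EADDRINUSE ("Address already in use").
    if (setsockopt(fd, SOL_SOCKET, SO_REUSEPORT, &enable, sizeof(enable)) != 0) {
        close(fd);
        return -1;
    }
    sockaddr_in addr{};
    addr.sin_family = AF_INET;
    addr.sin_port = htons(port);
    if (inet_pton(AF_INET, host, &addr.sin_addr) != 1 ||
        bind(fd, reinterpret_cast<sockaddr*>(&addr), sizeof(addr)) != 0) {
        close(fd);
        return -1;
    }
    return fd;
}

// Each thread owns its socket, so no locking is needed; the kernel decides
// which socket in the reuseport group receives each datagram.
void reuseport_worker(unsigned thread_id, const char* host, std::uint16_t port) {
    int fd = create_reuseport_udp_socket(host, port);
    if (fd < 0) {
        std::fprintf(stderr, "thread %u: socket setup failed\n", thread_id);
        return;
    }
    char buffer[65536];
    while (recv(fd, buffer, sizeof(buffer), 0) >= 0) {
        // parse telemetry here
    }
    close(fd);
}

// Spawns num_threads workers that all listen on host:port.
void run_reuseport_server(unsigned num_threads, const char* host, std::uint16_t port) {
    std::vector<std::thread> threads;
    for (unsigned i = 0; i < num_threads; ++i) {
        threads.emplace_back(reuseport_worker, i, host, port);
    }
    for (auto& t : threads) {
        t.join();
    }
}
```

For example, `run_reuseport_server(2, "0.0.0.0", 2055)` would start two threads sharing UDP port 2055, matching the two-thread test below.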

When we run this example multi-threaded server it will distribute traffic towards the same UDP port in the following way:

| Thread ID | UDP packets / second |
|-----------|----------------------|
| Thread 0  | 10412                |
| Thread 1  | 71115                |
| Thread 0  | 10344                |
| Thread 1  | 69908                |
| Thread 0  | 10268                |
| Thread 1  | 69760                |

Clearly, the distribution is far from even: thread 1 receives roughly seven times as much traffic as thread 0, so SO_REUSEPORT does not help much in our particular case. But the question is, will it help in your case?

Will this use of SO_REUSEPORT help? Yes!

Yes, it is very likely to help. The Linux kernel balances the load based on the IP addresses and source ports of clients. If your service has many distinct clients, traffic will be distributed fairly evenly across all available threads.

Why does it not work well in our particular case?

Let’s look at the tcpdump output:

22:15:45.039055 IP 10.0.0.17.50151 > 10.16.8.21.2055: UDP, length 158

22:15:45.039056 IP 192.168.1.105.50151 > 10.16.8.21.2055: UDP, length 158

22:15:45.039066 IP 192.168.1.105.50151 > 10.16.8.21.2055: UDP, length 122

22:15:45.039070 IP 192.168.1.105.50151 > 10.16.8.21.2055: UDP, length 98

22:15:45.039098 IP 192.168.1.105.50151 > 10.16.8.21.2055: UDP, length 156

What are the issues here?

One of the issues is that all the network devices use the same source port number to send telemetry. We even checked the vendor documentation but did not find an option to change it anywhere. Apparently it’s hardcoded, which is not great, but the unique device IP addresses still make the 4-tuple hash different for each device.

The second issue is that the majority of the traffic comes from a single router. Every packet from it carries the same 4-tuple and therefore produces the same hash, so the Linux kernel feeds all traffic from this router to one specific thread and overloads it.

Modern telecom equipment is often an example of ‘cutting edge’ technology: you may replace dozens of old routers or switches with a single device whose throughput is on the scale of tens of terabits. Thus, several discrete measurement points become one single ‘behemoth’ feed of UDP data.

That’s exactly what happened in this specific deployment. Can we do anything to spread traffic evenly across all available threads?

Can we do better? Yes, we can!

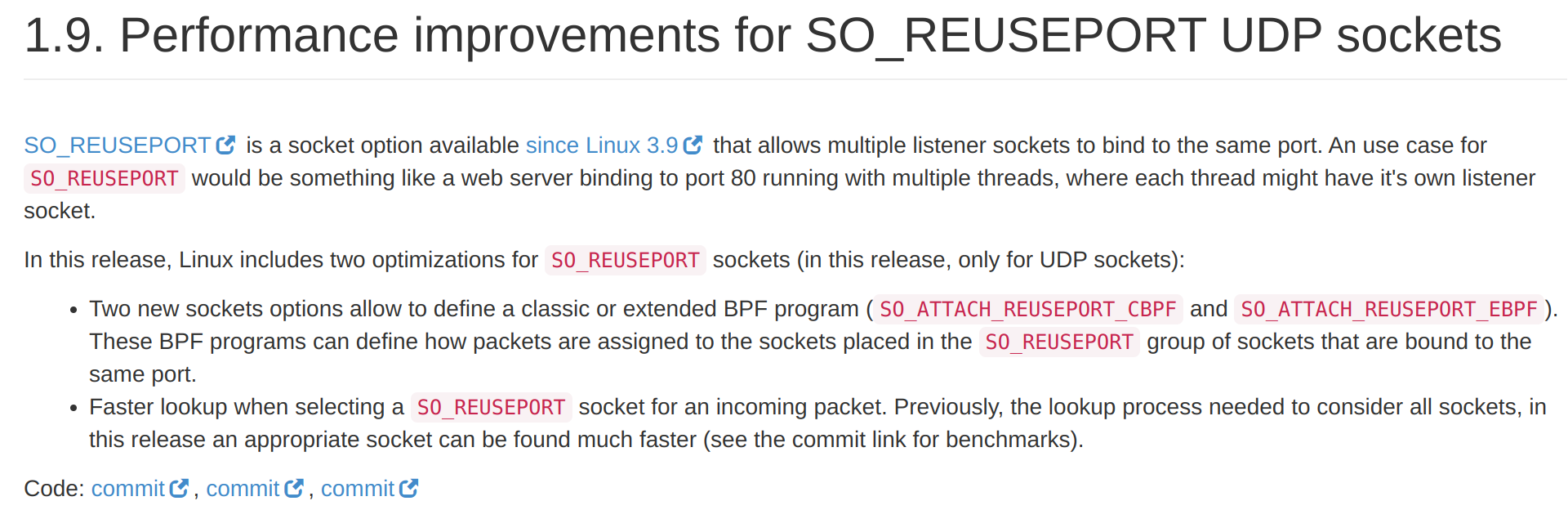

Linux kernel 4.5 introduced a new capability: we can replace the logic the kernel previously used to distribute packets between the sockets (and therefore threads) in a reuseport group with our own BPF-based microcode, using the socket options SO_ATTACH_REUSEPORT_CBPF (classic BPF) and SO_ATTACH_REUSEPORT_EBPF (eBPF).
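For a taste of the mechanism, here is a sketch that uses the classic-BPF option, SO_ATTACH_REUSEPORT_CBPF, rather than the eBPF program from Part 2. It is our illustration, not the author’s approach: the program ignores the 4-tuple, loads a kernel-supplied random number (the SKF_AD_RANDOM ancillary load), and returns it modulo the group size as the index of the socket that should receive the datagram. The helper names are hypothetical.

```cpp
#include <arpa/inet.h>
#include <linux/filter.h>
#include <netinet/in.h>
#include <sys/socket.h>
#include <unistd.h>

#include <cstdint>
#include <vector>

// Attaches a cBPF program to the reuseport group that fd belongs to.
// The program returns (random % num_sockets): an index into the group.
// Returns 0 on success, -1 on error.
int attach_random_reuseport_cbpf(int fd, std::uint32_t num_sockets) {
    if (num_sockets == 0) {
        return -1;  // modulo by zero; the kernel would reject it anyway
    }
    sock_filter code[] = {
        // A = pseudo-random 32-bit number supplied by the kernel
        {BPF_LD | BPF_W | BPF_ABS, 0, 0, static_cast<std::uint32_t>(SKF_AD_OFF + SKF_AD_RANDOM)},
        // A = A % num_sockets
        {BPF_ALU | BPF_MOD | BPF_K, 0, 0, num_sockets},
        // return A: index of the receiving socket within the group
        {BPF_RET | BPF_A, 0, 0, 0},
    };
    sock_fprog program{};
    program.len = static_cast<unsigned short>(sizeof(code) / sizeof(code[0]));
    program.filter = code;
    return setsockopt(fd, SOL_SOCKET, SO_ATTACH_REUSEPORT_CBPF, &program, sizeof(program));
}

// Opens num_sockets SO_REUSEPORT UDP sockets on host:port and attaches the
// program through the first one. Port 0 means "reuse the first ephemeral port".
// Returns the descriptors, or an empty vector on any failure.
std::vector<int> open_random_reuseport_group(const char* host, std::uint16_t port,
                                             std::uint32_t num_sockets) {
    std::vector<int> fds;
    sockaddr_in addr{};
    addr.sin_family = AF_INET;
    addr.sin_port = htons(port);
    if (inet_pton(AF_INET, host, &addr.sin_addr) != 1) {
        return fds;
    }
    for (std::uint32_t i = 0; i < num_sockets; ++i) {
        int fd = socket(AF_INET, SOCK_DGRAM, 0);
        int enable = 1;
        socklen_t len = sizeof(addr);
        if (fd < 0 ||
            setsockopt(fd, SOL_SOCKET, SO_REUSEPORT, &enable, sizeof(enable)) != 0 ||
            bind(fd, reinterpret_cast<sockaddr*>(&addr), sizeof(addr)) != 0 ||
            (i == 0 && getsockname(fd, reinterpret_cast<sockaddr*>(&addr), &len) != 0)) {
            if (fd >= 0) {
                close(fd);
            }
            for (int open_fd : fds) {
                close(open_fd);
            }
            return {};
        }
        fds.push_back(fd);
    }
    if (fds.empty() || attach_random_reuseport_cbpf(fds[0], num_sockets) != 0) {
        for (int fd : fds) {
            close(fd);
        }
        return {};
    }
    return fds;
}
```

Two design consequences are worth noting. If the program returns an index outside the group, the kernel ignores its choice and falls back to its own selection, so `num_sockets` must match the real group size. And since packets from one exporter now land on different threads, per-exporter state such as NetFlow v9 and IPFIX templates would have to be shared between threads.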

In the second part of this article, we will provide a complete example of how we can implement the eBPF microcode in the kernel, which will distribute our traffic evenly between the threads.

Pavel is the author of FastNetMon, an open source DDoS detection tool with a variety of traffic capture methods, and works in software development and community management.

The views expressed by the authors of this blog are their own and do not necessarily reflect the views of APNIC. Please note a Code of Conduct applies to this blog.