The IETF recently approved the TLS certificate compression extension, an optimization that promises to reduce the size of the Transport Layer Security (TLS) handshake by compressing its largest part.

At the same time, QUIC’s headline feature, its built-in low-latency handshake, is more reliant on small handshake sizes than is widely understood. QUIC implementations now face the decision of whether updating their embedded TLS code to support certificate compression is urgent.

In this post, I’ll use real (anonymous and aggregated) data from the Fastly network to illustrate how important compression is in practice for achieving the fast startup performance that QUIC promises.

The problem

QUIC’s low-latency handshake is its headline feature. It achieves its speed by overlapping the functionality of both the Transmission Control Protocol (TCP) and TLS into one exchange rather than doing them serially. It’s therefore expected to complete in half the time of TCP and TLS stacked on each other.

However, the amount of data transferred by those optimized handshakes is constrained due to security considerations. In many cases that limit may be too small to hold the complete handshake in the fast part. Handshakes that are too big won’t complete any faster than their TCP predecessors. Keeping the handshake small is essential to delivering on QUIC’s promise.

If you’re not familiar with the format, diagrams like this one can accidentally imply that there is one packet flowing from the server to the client with the Initial, Hello, Cert, and Fin. In reality, it is showing one flight of data in that direction. Depending on the amount of data, the flight can comprise multiple IP datagrams back to back. There are security rules, discussed below, that limit the amount of data a QUIC server can send in that flight without adding a roundtrip delay. TLS certificate chains are pretty big and if they exceed this limit it will screw up the promise of a fast handshake.

QUIC cannot rely on the results of client address validation (the property provided by the SYN handshake in TCP) while sending the first flight of TLS handshake data, because the two are integrated and performed at the same time. To mitigate the threat of an amplification attack, the QUIC server is limited to transmitting 3x the data received from the client before completing address validation. Handshakes that don’t fit must wait an extra round trip to complete address validation before proceeding, and therefore forfeit a major performance advantage of QUIC. Handshake performance is normally bound by latency, so the only controllable factor in how fast a handshake completes is the number of round trips it needs. Adding an extra round trip (or ‘flight’) is a big deal.

Prior to validating the client address, servers MUST NOT send more than three times as many bytes as the number of bytes they have received. This limits the magnitude of any amplification attack that can be mounted using spoofed source addresses. In determining this limit, servers only count the size of successfully processed packets.

Section 8.1 of the draft QUIC Specification
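
The quoted rule can be sketched as simple byte accounting on the server. This is a minimal illustration only: the `AmplificationLimiter` class and its method names are hypothetical and are not taken from any QUIC implementation.

```python
class AmplificationLimiter:
    """Tracks the pre-validation send budget for one connection (illustrative)."""

    FACTOR = 3  # from the quoted rule: at most three times the bytes received

    def __init__(self):
        self.bytes_received = 0
        self.bytes_sent = 0
        self.address_validated = False

    def on_datagram_received(self, size):
        # Call this only for successfully processed packets,
        # since only those count toward the limit.
        self.bytes_received += size

    def can_send(self, size):
        # Once the address is validated the limit no longer applies.
        if self.address_validated:
            return True
        return self.bytes_sent + size <= self.FACTOR * self.bytes_received

    def on_datagram_sent(self, size):
        self.bytes_sent += size


# A client opens with a single 1,200-byte datagram.
limiter = AmplificationLimiter()
limiter.on_datagram_received(1200)
print(limiter.can_send(3600))  # True: exactly 3x the bytes received
print(limiter.can_send(3601))  # False: one byte over the limit
```

A server whose first flight fails this check has to hold the remainder until the client’s next packets arrive, which is the extra round trip discussed above.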

Amplification attacks are designed to trick servers into sending large amounts of data to an unwitting third party victim. Simply put, the attacker pretends to be the victim on the first datagram they send to the server and then the server responds with a much bigger reply directly to the victim. The attack is called an amplification because the reply data is designed to be much larger than the attacker’s original data and thus allows the attacker to utilize more bandwidth through this reflection than if they sent data directly to the victim. The QUIC limitation of a 3x factor keeps the amplification threat modest. TLS handshakes over TCP do not have this limitation because the TLS handshake only happens after the TCP setup is complete. The address validation prevents reflection to a third party.

Most parts of the QUIC handshake are small and vary in size only across a narrow range depending on exactly which features are being used. The QUIC framing, the TLS Server Hello, Encrypted Extensions, and Finished make up most of these bytes. Together, these less variable parts of the handshake usually come to around 200 bytes.

There is one large source of variable-sized content that dominates the byte count in the QUIC Handshake: the TLS certificate chain. The certificate chain may be just a few hundred bytes or it might be over 10 kilobytes. The size of the included certificates largely determines whether or not the handshake can be sent in a single flight.

Certificate compression has the potential to ameliorate the problem of exceeding the 3x amplification factor by reducing the size of the handshakes to a size compatible with the security restriction. Indeed, the original (that is, pre-standardization) Google QUIC contained a similar mechanism and the introduction of standards-based handshake compression for TLS is designed to restore this property.

Simply reducing the bytes in the handshake is not sufficient; it is obvious that certificate compression will accomplish that. We need to see if the compression commonly impacts whether or not a handshake will fit within the 3x amplification budget. This raises two specific questions I want our Fastly data set to answer:

Q: How often are handshakes with uncompressed certificate chains too large to fit in the first QUIC flight?

Q: How often would handshakes with compressed certificate chains be too large to fit in the first QUIC flight?

The dataset

The content of a TLS certificate chain is the same over TCP and QUIC. That allowed me to take samples from our widely deployed TCP-based HTTP stack to determine the distribution of certificate chain sizes seen in the real world. It was important to survey actual usage in order to weight the data according to the impact on end-users. No secret or protected data is used in this data set.

In order to make sure the data represented different geographic usage patterns, about 125 thousand handshakes were sampled from 9 cities on 6 continents and were collected in two batches, 12 hours apart. These samples are exclusively full handshakes because resumed handshakes do not contain certificate chains, and therefore, are not susceptible to the problem being considered.

The draft QUIC specification defines how to encode a certificate chain in a handshake. If we make a few reasonable assumptions about the contents of the less variable parts of the handshake we can then determine how big each collected sample would have been expressed in QUIC.

The last step in preparing the data set is to compress the certificate chain for each sample and encode that result in QUIC format. This reveals the number of bytes saved through compression. The TLS certificate compression specification allows any of the deflate, brotli, or zstd formats for compression. Anecdotally, the differences between them were very small compared to the difference between compressed and uncompressed representations. My dataset used deflate compression at level 7.
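
As a rough sketch of that compression step, Python’s standard `zlib` module can deflate a byte string at level 7 and report the savings. The helper name `compression_savings` and the placeholder input are assumptions for illustration; `zlib.compress` emits deflate data in a zlib wrapper, which may not match the exact tooling used for the dataset.

```python
import zlib


def compression_savings(chain_der: bytes, level: int = 7) -> float:
    """Return the fraction of bytes saved by deflate-compressing chain_der."""
    compressed = zlib.compress(chain_der, level)
    return 1 - len(compressed) / len(chain_der)


# Placeholder input, NOT a real certificate chain: repetitive bytes like
# these compress far better than real DER-encoded certificates would.
fake_chain = b"example certificate bytes " * 100
savings = compression_savings(fake_chain)
print(f"saved {savings:.0%} of {len(fake_chain)} bytes")
```

With real chains the input would be the concatenated DER-encoded certificates, and the savings would be much more modest than this artificial example suggests.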

The analysis

Recall that the amplification mitigation rule restricts the first flight of handshake data to be 3x the number of bytes received from the client. There are several factors that complicate an exact analysis of which samples in our dataset comply with that rule because it is expressed in bytes of data relative to a variable amount of data the client may have sent. However, the approximate principle is intuitive: you receive one datagram so you may send three or fewer in response.

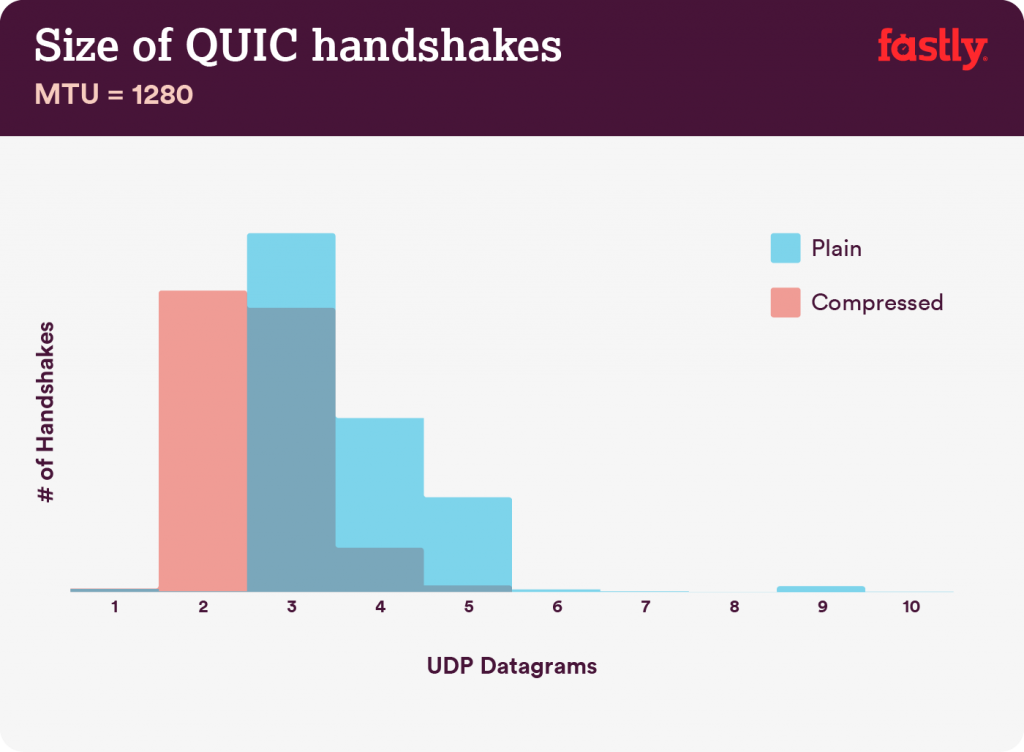

Let’s see how the data looks using this simplified datagram counting approach. I ran it first using a pessimistic datagram size of 1,280 bytes because all networks that support IPv6 must support a Maximum Transmission Unit (MTU) of at least 1,280 bytes, and the QUIC specification requires this as a minimum for the same reason. These charts show a histogram of the number of UDP datagrams that would be required for each handshake in our dataset both with and without compression.

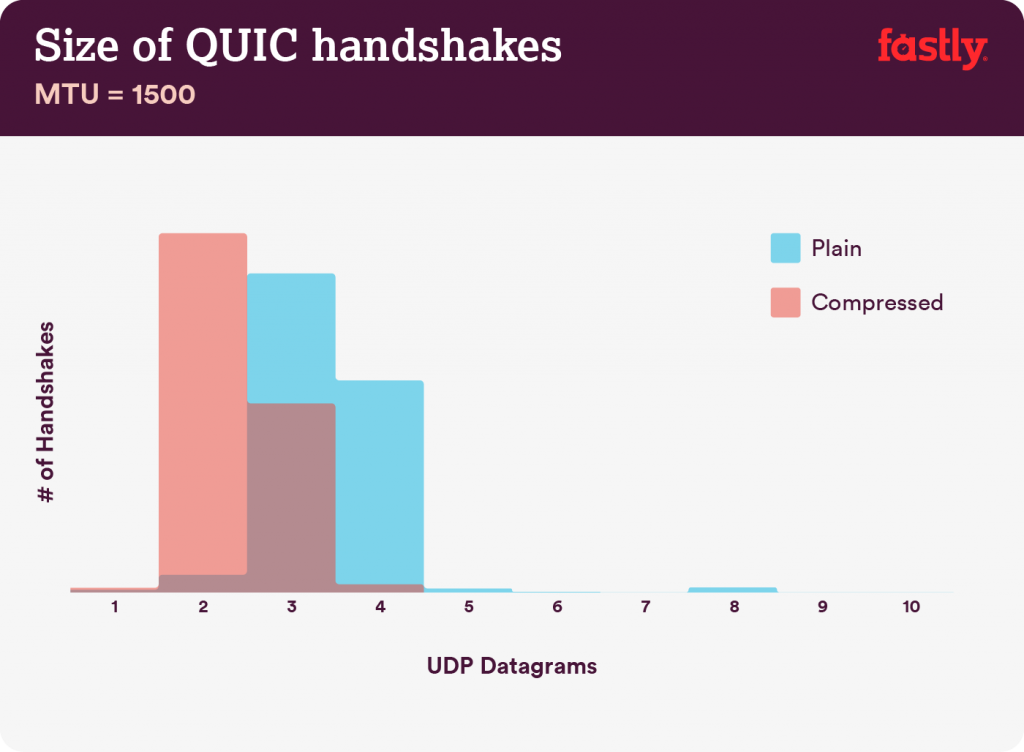

For an MTU of 1,280, a casual glance at that chart indicates that many more of the samples in the uncompressed (blue) certificate chain set exceeded the three-datagram budget than in the compressed (red) data set. Before breaking down the exact numbers, let’s look at the same dataset organized into 1,500-byte datagrams. Fifteen hundred bytes is the most common MTU on the Internet.

The overall distribution of datagram counts when using compression has clearly moved towards fewer datagrams in comparison with the uncompressed one. Obviously, using larger datagrams with a 1,500-byte MTU means you need to send fewer of them than with an MTU of 1,280, but the portion of handshakes that become compliant with the amplification rule when compression is added is similar for both MTUs.
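
The datagram-counting approximation used here can be sketched in a few lines. The function names are hypothetical, and the sketch simplifies by treating the whole datagram size as usable for handshake bytes, ignoring IP, UDP, and QUIC packet overhead.

```python
import math


def datagrams_needed(handshake_bytes: int, datagram_size: int) -> int:
    """Number of back-to-back datagrams needed to carry handshake_bytes."""
    return math.ceil(handshake_bytes / datagram_size)


def fits_in_first_flight(handshake_bytes: int, datagram_size: int,
                         budget: int = 3) -> bool:
    """True if the handshake fits within the three-datagram approximation."""
    return datagrams_needed(handshake_bytes, datagram_size) <= budget


# Hypothetical 4,000-byte handshake, not a sample from the dataset.
print(datagrams_needed(4000, 1280))  # 4
print(datagrams_needed(4000, 1500))  # 3
```

The same hypothetical handshake misses the budget at 1,280 bytes per datagram and fits at 1,500, which is why the analysis is run at both sizes.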

We can draw a couple of key conclusions at this stage of the investigation. First, between 40% and 43% (depending on MTU) of the observed uncompressed certificate chains are too large to fit in a single QUIC flight of three datagrams. That is a very large fraction of handshakes that will suffer a performance penalty because of their size.

Second, after applying compression to the certificate chains, only 1% to 8% of them (again depending on MTU) are too large to fit within the three-datagram budget. Compression shows an impressively meaningful improvement through this lens.

This is extremely promising, but we should still look at the data based on the amplification limit expressed in bytes rather than datagrams, as the specification requires. This time we can focus specifically on the key threshold implied by the amplification factor rather than the entire distribution of sizes.

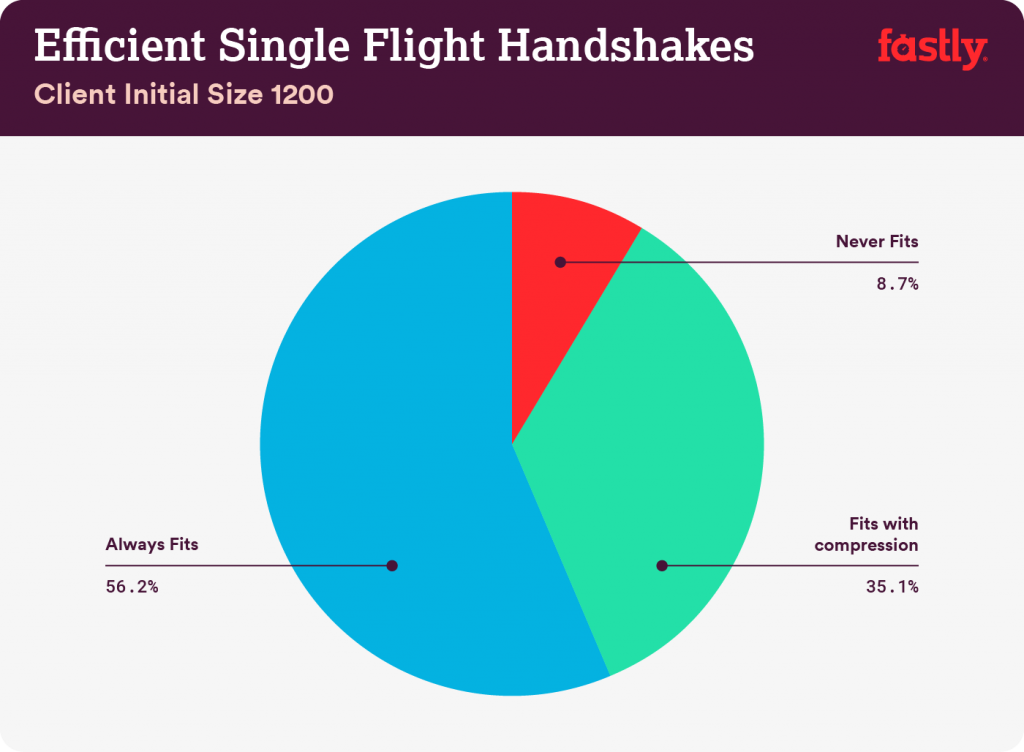

Unfortunately, this introduces a variable that is outside the control of the server implementation: the number of bytes in the initial client datagram. While the client reliably sends only one datagram, its exact size is up to the client’s implementation.

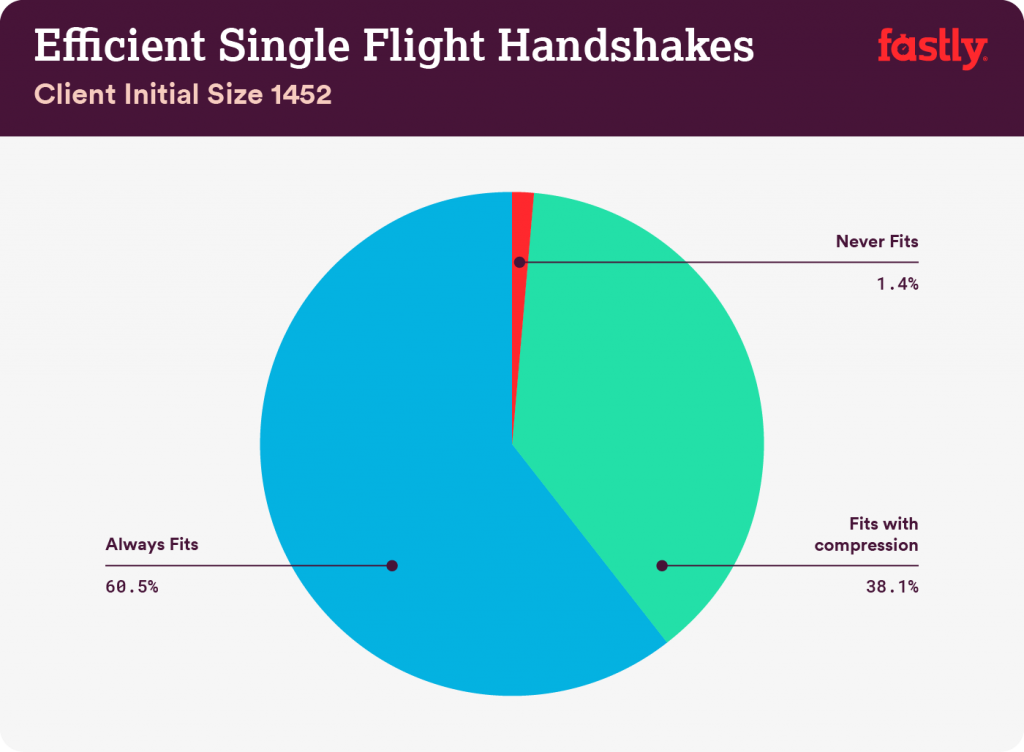

The minimum size a client’s initial datagram can be is 1,200 bytes (on top of UDP/IP) and realistically the maximum would be based on the client’s MTU. So, let’s use 1,452 as the top end based on a full 1,500-byte IPv6 datagram. Google’s Chrome has been using a 1,350-byte MTU in the middle of the range. I laid out the QUIC packets using these datagram sizes and the same 200 bytes of non-certificate handshake data plus QUIC framing bytes that we used previously. The result was a certificate chain budget for an efficient handshake between 3,333 and 4,356 bytes depending on whether the initial client packet size was at the low or high end of the range.

Based on those thresholds I placed each sample into one of three categories: fits under budget even without compression, needs compression to fit within the budget, or never fits within the budget. For samples that did not fit under the threshold without compression, the median compression savings was 34% and that was often enough of a reduction to change the state of the sample to be ‘needs compression to fit within budget’.

Clients that use 1,452-byte initial packets would be able to complete an even higher fraction of their handshakes efficiently because they are allowed up to 4,356 bytes of certificate chain information.
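
The three-way categorization can be sketched as below. The `classify` function and the sample sizes are hypothetical; the two budgets are the figures quoted in the text, and the 34% saving is the median reported above, applied here to made-up chain sizes.

```python
def classify(uncompressed: int, compressed: int, budget: int) -> str:
    """Place one certificate chain sample into one of the three categories."""
    if uncompressed <= budget:
        return "fits without compression"
    if compressed <= budget:
        return "needs compression to fit"
    return "never fits"


LOW_BUDGET = 3333   # 1,200-byte client initial (figure from the text)
HIGH_BUDGET = 4356  # 1,452-byte client initial (figure from the text)

# Hypothetical 4,000-byte chain that compresses by 34%.
uncompressed = 4000
compressed = uncompressed * 66 // 100  # 2640 bytes after a 34% saving

print(classify(uncompressed, compressed, LOW_BUDGET))   # needs compression to fit
print(classify(uncompressed, compressed, HIGH_BUDGET))  # fits without compression
```

The example also shows the effect of the client’s initial packet size: the same chain needs compression against the smaller budget but not against the larger one.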

It’s time to revisit our initial questions one last time based on this refined byte-size based data. Fortunately, they tell a story very similar to the simplified datagram-based analysis.

First, between 40% and 44% (depending on the size of the client’s initial packet) of the observed uncompressed certificate chains are too large to fit in a single QUIC handshake flight that conforms to the 3x amplification rule. That is a very large fraction of handshakes that will suffer a performance penalty due to their size.

Second, after applying compression to the certificate chains only 1% to 9% of them (again depending on how many bytes the client initiated the connection with) are too large to fit within the 3x amplification budget. Compression not only reduces the byte count, but it does so in a way that interacts extremely favourably with the security rules.

It’s likely that these results could be improved a bit further by using a more aggressive compression algorithm such as brotli instead of deflate. The right balance will depend on the execution environment. Brotli generally has better compression ratios, but at greater expense to create. Servers that can pre-compute certificate chains may find that an attractive option though we seem to be reaching the point of diminishing returns.

The conclusion

First, the TLS certificate compression extension has a very large impact on QUIC performance. Even though the extension is new and being introduced fairly late in the process when compared to overall QUIC deployment schedules, it seems quite important for both clients and servers to implement the new extension so that the QUIC handshake can live up to its billing. Without some help, 40% of QUIC full handshakes would be no better than TCP, but compression can repair most of that issue. I have heard of other non-standardized approaches to reducing the size of the certificate chain, and they seem reasonable, but this is a problem worth addressing immediately with the existing compression extension.

Second, clients should use large initial handshake packets in order to maximize the number of bytes the server is allowed to reply with and therefore maximize the number of handshakes that can be completed in one round trip. This does require the client to implement a path MTU discovery mechanism for cases where the local MTU is greater than is supported on the path to the server, but the return on investment is strong. An extra 200 bytes of padding in the client initial will halve the handshake time for 7% of connections in the dataset considered here.

Lastly, data from the real world again proves to be more insightful than intuition and is invaluable in making protocol design and implementation decisions. When I started this work I expected the impact of compression to be positive but marginal, focused on a few edge cases. The data shows this optimization lands right on the sweet spot that ties configurations and the QUIC specification together and impacts a large portion of QUIC handshakes. My thanks to the authors of the compression extension.

Adapted from the original post, which appeared on the Fastly Blog.

Patrick McManus is a Fastly Distinguished Engineer, major contributor to the Firefox Networking stack, Co-Chair of the IETF Dispatch Working Group, and co-author of several Internet Standards including DNS over HTTPS.
