Low Earth Orbit (LEO) satellites form the new frontier of networking and sensing research. In recent years, multiple companies such as SpaceX, Amazon, Planet, and Capella Space have invested billions of dollars launching large constellations into the LEO region (typically less than 1,000 kilometres above Earth).

Recently, the United States allocated a USD 900 million subsidy to SpaceX for delivering satellite-based broadband connectivity in rural areas. The new constellations, comprising hundreds of satellites, focus on two key applications:

- Internet connectivity for underserved areas, for example, Kuiper by Amazon and Starlink by SpaceX.

- Earth observation, for example, constellations by Planet Inc. and Capella Space.

Earth observation constellations will grow from three satellites to hundreds

Earth observation satellites provide high-resolution imagery of Earth and are key enablers for many applications such as precision agriculture, disaster management, modelling the spread of diseases, geopolitical analytics, climate monitoring, and aircraft tracking.

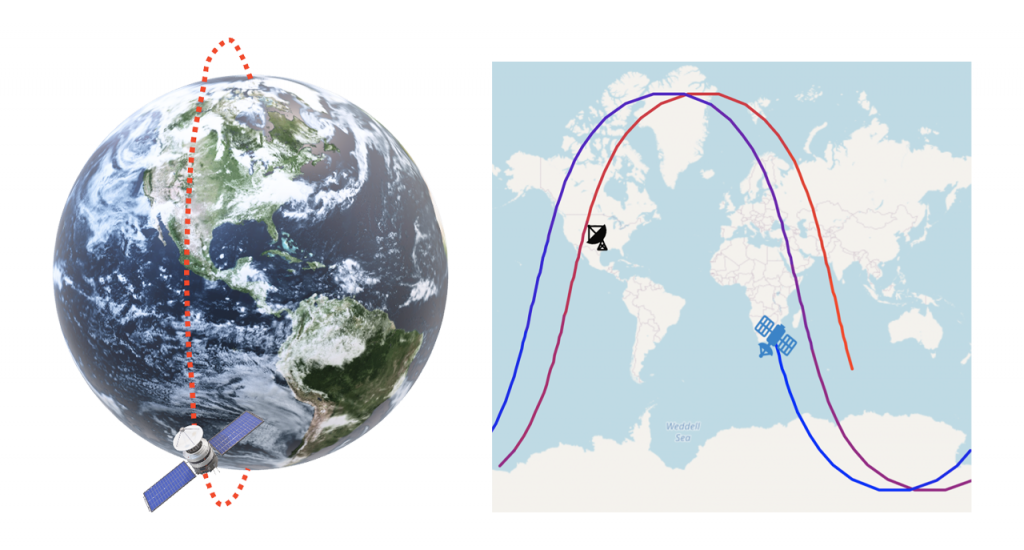

Today, nearly half the satellites in low orbits are dedicated to Earth observation alone. As shown in Figure 1, these satellites orbit over the Earth’s poles, and as the planet rotates beneath them, they scan different parts of Earth. Their low orbit enables high-resolution imagery, for example, one square metre (1 m²) per pixel.

Traditionally, Earth observation relied on constellations of two or three satellites. For example, Sentinel-1, a popular Earth imaging platform from the European Space Agency, has two satellites. However, because of their orbital motion and Earth’s rotation, these satellites revisit a given location on Earth only once every several days (for example, every six to twelve days for Sentinel-1).

This image capture latency is sufficient for some applications, such as soil type classification and monitoring polar ice caps. But it’s insufficient for latency-sensitive applications such as disaster management (for example, tracking floods and forest fires), airspace monitoring (for example, tracking lost planes or aircraft intrusion), ocean monitoring (for example, tracking maritime smuggling and illegal fishing), and weather nowcasting. These applications demand image updates on the order of minutes.

Motivated by this demand, satellite companies have launched constellations that comprise hundreds of satellites and plan to launch hundreds more in the next few years. The planned constellations shorten the interval between image captures from days to hours or minutes.
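
A rough way to see why adding satellites shortens the capture interval is to assume the satellites are evenly phased, so that N satellites divide a single satellite’s revisit time by N. This is an idealization rather than how operators actually plan constellations, the function name is hypothetical, and the twelve-day input (the upper end of the Sentinel-1 figure) is used only for illustration.

```python
def revisit_interval_hours(single_sat_revisit_days: float, num_satellites: int) -> float:
    """Idealized revisit interval for a constellation, in hours.

    Assumes the satellites are evenly phased, so each one images a given
    location at a different time; real constellations deviate from this.
    """
    if num_satellites < 1:
        raise ValueError("need at least one satellite")
    return single_sat_revisit_days * 24.0 / num_satellites


# Assumed: twelve days between visits for a single satellite.
for n in (1, 2, 50, 200):
    print(f"{n:>4} satellites -> revisit every {revisit_interval_hours(12, n):.1f} h")
```

Under these assumptions, two satellites halve a twelve-day interval to six days, while a couple of hundred bring it down to roughly an hour and a half.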

Bottleneck emerging back on Earth

As large satellite constellations emerge, the bottleneck shifts from space to the ground.

Downloading data from space is challenging because of the scale and orbital dynamics of LEO satellites. A single image of the entire Earth at 1 m² per pixel contains about 500 terapixels. As a result, constellations generate multiple terabytes of data per day that must be transported back to Earth, hundreds of kilometres away.
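
The 500-terapixel figure follows from Earth’s surface area. Treating Earth as a sphere with a mean radius of about 6,371 km gives roughly 5.1 × 10^14 m², so one pixel per square metre means about 5 × 10^14 pixels. This short sketch checks that arithmetic; the spherical-Earth simplification is an assumption.

```python
import math

EARTH_MEAN_RADIUS_M = 6_371_000  # mean Earth radius in metres


def earth_surface_pixels(metres_per_pixel_side: float = 1.0) -> float:
    """Number of square pixels needed to cover Earth's whole surface once.

    Treats Earth as a sphere (an approximation) and each pixel as a square
    with the given side length in metres.
    """
    surface_area_m2 = 4 * math.pi * EARTH_MEAN_RADIUS_M ** 2
    return surface_area_m2 / metres_per_pixel_side ** 2


terapixels = earth_surface_pixels(1.0) / 1e12
print(f"{terapixels:.0f} terapixels at 1 m^2 per pixel")
```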

What makes this even more challenging is that contact between a LEO satellite and a ground station receiver on Earth is short-lived. Because of the low orbit, a satellite crosses the sky from horizon to horizon in about ten minutes (imagine sunrise to sunset in ten minutes), with only four to six such contacts with a given ground station per day. To download large volumes of data in such short intervals, constellation operators deploy high-end, multi-million-dollar ground stations that operate at high frequencies. Even with such highly optimized ground stations, data downlink suffers from latencies of several hours and is prone to weather-induced disruptions.
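
The ten-minute pass can be sanity-checked with basic orbital geometry: Kepler’s third law gives the orbital period, and the fraction of the orbit above a ground station’s horizon gives the pass length. The sketch below assumes a 500 km circular orbit, a spherical Earth, a pass directly overhead, a 0-degree elevation mask, and ignores Earth’s rotation; the altitude and function names are illustrative choices, not figures from the article.

```python
import math

MU_EARTH_KM3_S2 = 398_600.4418  # Earth's standard gravitational parameter (km^3/s^2)
EARTH_RADIUS_KM = 6_371.0       # mean Earth radius (km)


def max_pass_minutes(altitude_km: float) -> float:
    """Horizon-to-horizon duration of an overhead pass for a circular orbit.

    Simplifications: spherical Earth, 0-degree elevation mask, Earth's
    rotation ignored. Passes that are not directly overhead are shorter.
    """
    a = EARTH_RADIUS_KM + altitude_km
    period_s = 2 * math.pi * math.sqrt(a ** 3 / MU_EARTH_KM3_S2)  # Kepler's third law
    # Earth-central angle from the station to the satellite when it sits on the horizon.
    half_angle = math.acos(EARTH_RADIUS_KM / a)
    visible_fraction = (2 * half_angle) / (2 * math.pi)
    return visible_fraction * period_s / 60


def daily_contact_minutes(pass_minutes: float, passes_per_day: int) -> float:
    """Upper bound on daily contact time if every pass were a full overhead pass."""
    return pass_minutes * passes_per_day


pass_min = max_pass_minutes(500)
print(f"overhead pass at 500 km: {pass_min:.1f} min")
for passes in (4, 6):
    total = daily_contact_minutes(pass_min, passes)
    print(f"{passes} passes/day -> at most {total:.0f} min ({total / 1440:.1%} of the day)")
```

At the assumed 500 km altitude this gives roughly 11.5 minutes per overhead pass, in line with the ten-minute figure, and even six full passes add up to only a little over an hour of contact per day.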

The key challenge in downloading data from satellites stems from the ground station architecture itself. Today, a satellite constellation is typically supported by around five multi-million-dollar ground stations using highly specialized equipment and huge dish antennas. Because these ground stations must download large data volumes within the short contact windows they have with satellites, most research has focused on extracting as much performance as possible from individual satellite-to-ground-station links.

However, these designs are fundamentally prone to large latencies and failures. A satellite may have to wait for hours before it comes into contact with a ground station and can download its imagery. Each of these links is also a single point of failure that can be disrupted by bad weather or hardware errors.

More satellites need more ground stations

This has led to multiple efforts in industry and academia to increase the distribution of ground stations while reducing the pain involved in setting up new ones. For example, Amazon (AWS Ground Station), Microsoft (Azure Orbital), KSAT, and others have set up ground stations that satellite companies can rent to increase their footprint on the ground, reduce download latencies, and ease data transfer to the cloud.

Academia has also seen new proposals on this front. Recently, we at the University of Illinois, along with Microsoft, evaluated a distributed ground station architecture called L2D2. L2D2 uses hundreds of inexpensive, receive-only ground stations built from commodity hardware and deployed on rooftops and in backyards, and it can improve robustness and reduce latency to minutes. In some ways, this architecture mirrors past shifts in computing, such as the move from large supercomputers to data centres built from hundreds of commodity machines.
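
One way to see why many inexpensive stations can be more robust than a few expensive ones is a simple independence model: if each station in view of a pass is unavailable with probability p, the chance that all k of them are unavailable is p^k. This is a simplified illustration, not the method used to evaluate L2D2; the 10% outage probability is a made-up parameter, and independence overstates the benefit when nearby stations share the same weather.

```python
def prob_all_unavailable(p_outage: float, num_stations: int) -> float:
    """Probability that every station able to see the satellite is unavailable.

    Assumes each station fails independently with probability p_outage,
    which is optimistic when nearby stations share the same weather.
    """
    if not 0.0 <= p_outage <= 1.0:
        raise ValueError("p_outage must be a probability")
    return p_outage ** num_stations


# Hypothetical 10% outage probability per station during a given pass.
for k in (1, 3, 10):
    print(f"{k:>2} station(s) in view -> P(no downlink) = {prob_all_unavailable(0.1, k):.0e}")
```

With a single station (k = 1) the link is a single point of failure; each additional independent station in view multiplies the failure probability by p.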

However, as the satellite networking space evolves, several open questions remain. Not all the data these satellites transmit is useful, but it is hard to tell which data might be needed a few months down the line.

How do we build edge computing frameworks that run on satellites or ground stations and prioritize the transfer of latency-sensitive data (such as imagery of forest fires)?

Given multiple ground station options to download data from satellites, how does a satellite operator balance its downlink across the different possibilities to optimize for cost, latency, and robustness?

How do we make it easy for developers to access these datasets and build tools on top of them?
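
The first question, about prioritizing latency-sensitive data, could be explored with something as simple as a priority queue: tag each capture with an urgency level and, during each contact window, send the most urgent captures that still fit. This is a hypothetical illustration, not a framework from the article; the `Capture` type, the priority scale, and the sizes are invented, and the sketch ignores varying link rates and the problem of data that might be needed months later.

```python
import heapq
from dataclasses import dataclass, field


@dataclass(order=True)
class Capture:
    priority: int                        # lower value = more urgent (e.g. 0 for a fire alert)
    size_mb: float = field(compare=False)
    label: str = field(compare=False)


def plan_downlink(captures: list[Capture], capacity_mb: float) -> list[str]:
    """Greedily pick captures in priority order until the contact window is full.

    Captures that do not fit are skipped so that smaller, lower-priority
    captures can still use the remaining capacity.
    """
    queue = list(captures)
    heapq.heapify(queue)
    sent, remaining = [], capacity_mb
    while queue:
        item = heapq.heappop(queue)
        if item.size_mb <= remaining:
            sent.append(item.label)
            remaining -= item.size_mb
    return sent


captures = [
    Capture(2, 800.0, "crop field"),
    Capture(0, 300.0, "forest fire"),
    Capture(1, 500.0, "flood"),
    Capture(2, 150.0, "ice sheet"),
]
print(plan_downlink(captures, capacity_mb=1000.0))
```

A greedy policy like this is easy to reason about but not optimal in general; deciding what "urgent" means, and where the decision runs (on the satellite or on the ground), is exactly the open part of the question.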

I believe all these questions will be answered over the next decade, which promises to be an exciting time for all of us.

Deepak Vasisht is an Assistant Professor in Computer Science at the University of Illinois Urbana-Champaign.

The views expressed by the authors of this blog are their own and do not necessarily reflect the views of APNIC. Please note a Code of Conduct applies to this blog.