In this post I will talk about my experience implementing TCP Fast Open (TFO) while working on PowerDNS Recursor. Why TFO? Normally the DNS protocol works over UDP, and each transaction is a single request followed by a single reply. In theory, UDP packets can be quite large, but in practice the limit is much lower, since delivery of fragmented UDP packets is unreliable and fragmentation poses a security risk.

If the answer is too big for UDP, DNS falls back to TCP and this fall-back is used more often these days. With the emergence of DNSSEC and TXT records, large answers are more common than they used to be.
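
As a rough illustration of that fallback, the Python sketch below builds a minimal query, checks a response for the truncation (TC) bit that tells the client to retry over TCP, and adds the two-byte length prefix DNS uses on TCP. The function names and the fixed query ID are made up for this example; a real resolver does far more validation.

```python
import struct

TC_FLAG = 0x0200  # "truncated" bit in the 16-bit DNS header flags field


def build_query(name: str, qtype: int = 16, query_id: int = 0x1234) -> bytes:
    """Build a minimal DNS query (qtype 16 = TXT) with recursion desired."""
    header = struct.pack("!HHHHHH", query_id, 0x0100, 1, 0, 0, 0)
    labels = b"".join(
        bytes([len(label)]) + label.encode("ascii")
        for label in name.split(".")
        if label
    )
    question = labels + b"\x00" + struct.pack("!HH", qtype, 1)  # class IN
    return header + question


def is_truncated(response: bytes) -> bool:
    """True if the TC bit is set, meaning the answer did not fit in UDP."""
    (flags,) = struct.unpack("!H", response[2:4])
    return bool(flags & TC_FLAG)


def frame_for_tcp(message: bytes) -> bytes:
    """DNS over TCP prefixes every message with a two-byte length."""
    return struct.pack("!H", len(message)) + message
```

A client would send `build_query(...)` over UDP first and, if `is_truncated()` returns true for the reply, resend `frame_for_tcp(query)` over a TCP connection.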

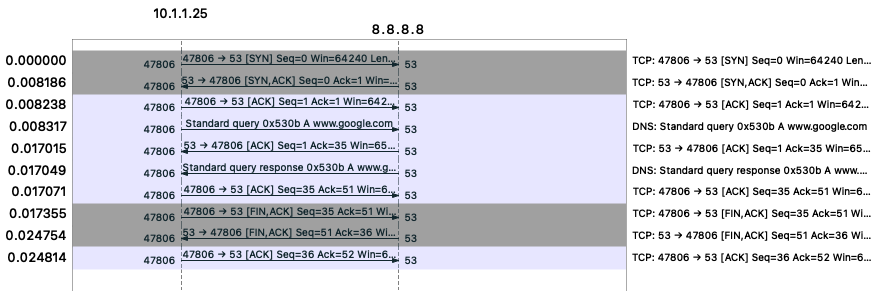

TCP adds quite a bit of overhead: not only do both peers need to keep state, but more data is exchanged than in a UDP request-reply. There is a three-way handshake (3WHS), followed by the request and reply, their ACKs, and then the teardown. A typical TCP DNS request-reply exchanges at least 10 packets over the network.

In comes TFO. It allows the initial SYN packet to contain data, and that data is immediately passed to the application upon reception.

If a client has never communicated with the target server before, it will add a TCP option requesting a TFO cookie to the initial SYN packet. The server then generates a TFO cookie based on a secret, its local address, port, and the client’s address. The TFO cookie will be sent to the client in the SYN-ACK.

If a client has a TFO cookie from an earlier connection it can use it for a new connection, sending the cookie and the request data with the initial SYN. The server validates the TFO cookie and if it is accepted, the 3WHS is considered completed immediately.
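
To make the cookie idea concrete, here is a toy model in Python. It is not the algorithm any kernel uses: HMAC-SHA256 truncated to 8 bytes is a stand-in chosen for this sketch, and the function names are hypothetical. The only point is that the server can recompute the cookie from its secret and the connection's addresses, so it does not need to store per-client state.

```python
import hashlib
import hmac
import ipaddress


def make_cookie(secret: bytes, client_ip: str, server_ip: str, server_port: int) -> bytes:
    """Derive an 8-byte cookie from the server secret and the addresses.

    Stand-in construction for illustration only (assumption, not the
    kernel's real cookie function).
    """
    material = (
        ipaddress.ip_address(client_ip).packed
        + ipaddress.ip_address(server_ip).packed
        + server_port.to_bytes(2, "big")
    )
    return hmac.new(secret, material, hashlib.sha256).digest()[:8]


def cookie_is_valid(secret: bytes, client_ip: str, server_ip: str,
                    server_port: int, cookie: bytes) -> bool:
    """Recompute the cookie for this client and compare in constant time."""
    expected = make_cookie(secret, client_ip, server_ip, server_port)
    return hmac.compare_digest(expected, cookie)
```

In this model a cookie issued to one client address is rejected when presented from another address, and also when the server validates it with a different secret.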

RFC 7413 goes into more detail, but essentially a TFO cookie tells the server that the client is willing and able to do a 3WHS, so the server can skip the 3WHS on subsequent connections. Without such a check, always accepting data on the initial SYN and passing it to the application would open the door to all kinds of SYN flood attacks.

The nice thing is that all the details are taken care of by the TCP stack. Linux, macOS, and FreeBSD have supported TFO for quite a while. The only thing an application must do is enable either passive TFO for servers or active TFO for clients, and things should work. But we will see that things are not as simple as they seem.

The examples below assume a Linux machine is being used for testing.

Offering TFO as a server

To enable TFO for a server application, you just set the TCP_FASTOPEN option on the listening socket to a value greater than zero (the queue size). Sadly, this socket option is not documented properly and it is not clear what a ‘good’ value for the queue size would be. Also, when testing, you will see that it does not work at all: you also need to set the net.ipv4.tcp_fastopen sysctl to enable server side TFO. This sysctl’s default value disallows server side TFO.

PowerDNS Recursor has an option to specify the queue size. As I expect this is quite a common mistake to make, I added a warning for the case where the queue size is greater than zero but the sysctl setting does not allow TFO.
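
The two steps (socket option plus sysctl check) could look roughly like the Python sketch below. It is not PowerDNS Recursor's code; the helper names and the warning text are invented for this example. It assumes the Linux meaning of the sysctl as a bitmask in which bit 0x2 enables server side TFO, and it falls back to the Linux value 23 when the Python build does not expose `socket.TCP_FASTOPEN`.

```python
import socket

# Assumption: 23 is the Linux value, used only if Python lacks the constant.
TCP_FASTOPEN = getattr(socket, "TCP_FASTOPEN", 23)
TFO_SERVER_ENABLE = 0x2  # bit in net.ipv4.tcp_fastopen that allows passive TFO
SYSCTL_PATH = "/proc/sys/net/ipv4/tcp_fastopen"


def server_tfo_allowed(sysctl_value: int) -> bool:
    """True if the sysctl bitmask permits TFO on listening sockets."""
    return bool(sysctl_value & TFO_SERVER_ENABLE)


def read_tfo_sysctl(path: str = SYSCTL_PATH):
    """Return the sysctl value as an int, or None if it cannot be read."""
    try:
        with open(path) as handle:
            return int(handle.read().strip())
    except (OSError, ValueError):
        return None


def tfo_warning(queue_size: int, sysctl_value):
    """Return a warning string if TFO was requested but the sysctl forbids it."""
    if queue_size > 0 and sysctl_value is not None \
            and not server_tfo_allowed(sysctl_value):
        return ("TFO queue size is %d, but net.ipv4.tcp_fastopen=%d "
                "does not allow server side TFO" % (queue_size, sysctl_value))
    return None


def enable_passive_tfo(listener: socket.socket, queue_size: int):
    """Set the queue size on a listening socket; return a warning or None."""
    if queue_size > 0:
        try:
            listener.setsockopt(socket.IPPROTO_TCP, TCP_FASTOPEN, queue_size)
        except OSError as exc:
            return "setting TCP_FASTOPEN failed: %s" % exc
    return tfo_warning(queue_size, read_tfo_sysctl())
```

With the sysctl at 1 (client side only), `tfo_warning(64, 1)` produces a warning, while a value of 3 (client and server) does not.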

Other DNS servers implement server side TFO as well, but it is not always clear from the documentation if an option should be set or not. A 2019 survey by Deccio and Davis showed that few DNS servers offer TFO. It would be nice to repeat this survey to see what the current state of affairs is.

Using TFO as a client

In contrast to authoritative servers, a recursor can also act as a TCP client when getting data from authoritative servers. Until recently, PowerDNS Recursor did not use TFO as a TCP client, so I set out to implement it.

A simple way to enable TFO is to set the TCP_FASTOPEN_CONNECT socket option on the socket before calling connect. This socket option also lacks documentation, but the pull request that introduced it into the Linux kernel gives some details. If your code already handles non-blocking socket I/O, you are basically done.
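
A minimal sketch of the client side in Python, assuming Linux: the option is set on a non-blocking socket before connecting. The function name is hypothetical, and the numeric fallback 30 is the value from Linux's `<linux/tcp.h>`, used here only when the Python build does not expose the constant.

```python
import socket
import sys

# Assumption: 30 is the Linux value of TCP_FASTOPEN_CONNECT.
TCP_FASTOPEN_CONNECT = getattr(socket, "TCP_FASTOPEN_CONNECT", 30)


def make_tfo_client_socket(family=socket.AF_INET):
    """Create a non-blocking TCP socket and try to enable active TFO on it.

    Returns (sock, enabled). If the option cannot be set, the socket is
    still usable and will simply do a regular handshake.
    """
    sock = socket.socket(family, socket.SOCK_STREAM)
    sock.setblocking(False)
    enabled = False
    if sys.platform.startswith("linux"):
        try:
            sock.setsockopt(socket.IPPROTO_TCP, TCP_FASTOPEN_CONNECT, 1)
            enabled = True
        except OSError:
            pass  # kernel without this option: fall back to plain TCP
    return sock, enabled
```

After this, the caller would use `sock.connect_ex(address)` and its usual non-blocking send and receive logic; whether data actually travels in the SYN is decided by the kernel.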

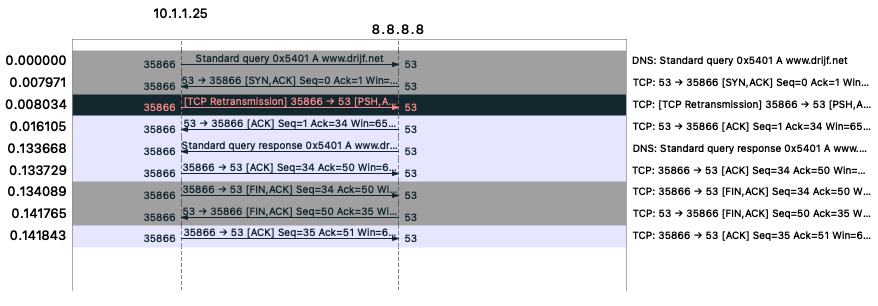

However, my first test showed that while TFO cookies were exchanged, a variation of the 3WHS did occur. Plus, the client did a retransmit of the request!

After some head scratching, it turned out that I was using the wrong servers to test. I was using Google’s 8.8.8.8 service as a forwarding target. Google does send TFO cookies but does not accept the cookies it sent earlier. This has been a known issue since 2017 and is caused by the nodes of the anycast cluster not sharing the secret used to generate cookies. Sharing the secret is possible, though, by setting a sysctl or a specific option on the listening socket.
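
For the socket option route, a hedged Python sketch follows. It assumes Linux, where the option is TCP_FASTOPEN_KEY (value 33 in `<linux/tcp.h>`) and takes a 16-byte key; the system-wide alternative is the net.ipv4.tcp_fastopen_key sysctl. The function name is made up, and how the key is distributed to the nodes of a cluster is left out entirely.

```python
import socket
import sys

# Assumption: 33 is the Linux value, used if Python lacks the constant.
TCP_FASTOPEN_KEY = getattr(socket, "TCP_FASTOPEN_KEY", 33)
KEY_LENGTH = 16  # bytes


def set_shared_tfo_key(listener, key: bytes) -> bool:
    """Install a pre-shared TFO cookie key on a listening socket.

    Every node behind the same anycast address would call this with the
    same key. Returns True if the kernel accepted the key.
    """
    if len(key) != KEY_LENGTH:
        raise ValueError("TFO key must be exactly %d bytes" % KEY_LENGTH)
    if not sys.platform.startswith("linux"):
        return False
    try:
        listener.setsockopt(socket.IPPROTO_TCP, TCP_FASTOPEN_KEY, key)
        return True
    except OSError:
        return False  # option not supported by this kernel
```

With the same key on every node, a cookie handed out by one node can be validated by any other node the client happens to reach next.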

It is a bit frustrating to see that, although both the TFO RFC and the Linux implementation originate from Google, for some reason Google does not implement TFO properly. This is also true for their authoritative servers.

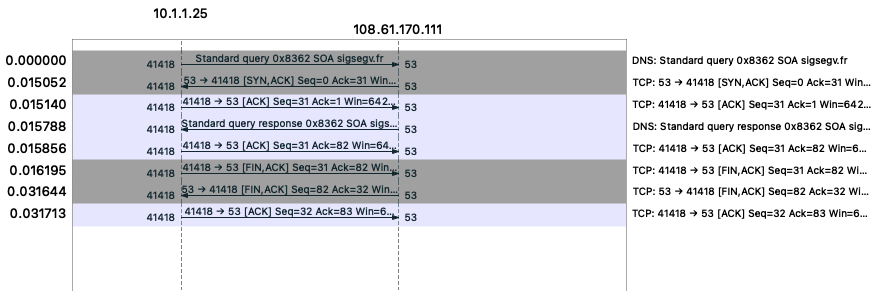

After realizing that I should use other servers to test TFO, I saw correct requests and proper use of TFO cookies in small-scale testing. But in a larger test, TFO was often not used for servers that had handled it properly before.

It turns out that Linux is extremely conservative about using TFO. It will disable TFO for all outgoing connections (even for ones that used it correctly before) if a single TFO-enabled TCP connect fails because of a timeout. This is likely meant to avoid issues with middleboxes that do not understand TFO and drop packets, but on a DNS recursor it causes TFO to be switched off for a long time (an hour or more), since authoritative DNS servers that are unreachable over TCP are quite common. We can use netstat -s to output several counters related to TFO:

    $ netstat -s | grep TCPF
    TCPFastOpenActive: 1
    TCPFastOpenActiveFail: 3
    TCPFastOpenBlackhole: 1
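
For monitoring, these counters can be picked out of the text programmatically. The Python sketch below parses output shaped like the sample above; the function name is hypothetical, and it makes no claim about what each counter means beyond its name.

```python
import re

COUNTER_LINE = re.compile(r"\s*(TCPFastOpen\w+):?\s+(\d+)\s*$")


def parse_tfo_counters(text: str) -> dict:
    """Extract TCPFastOpen* counters from `netstat -s` style output."""
    counters = {}
    for line in text.splitlines():
        match = COUNTER_LINE.match(line)
        if match:
            counters[match.group(1)] = int(match.group(2))
    return counters


SAMPLE = """\
TCPFastOpenActive: 1
TCPFastOpenActiveFail: 3
TCPFastOpenBlackhole: 1
"""
```

Feeding it the captured output of `netstat -s` at intervals makes it easy to spot when the blackhole counter increases.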

However, the exact conditions under which the ‘black holing’ occurs are not documented. To make the kernel keep using TFO, I had to set the net.ipv4.tcp_fastopen_blackhole_timeout_sec sysctl to 0.
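
The sysctl can be changed with the sysctl tool or by writing to the matching file under /proc/sys. A small Python sketch of the latter, with hypothetical helper names; writing requires root, and the `root` parameter exists only so the helpers can be tried against a scratch directory.

```python
from pathlib import Path

BLACKHOLE_SYSCTL = "net.ipv4.tcp_fastopen_blackhole_timeout_sec"


def sysctl_path(name: str, root: str = "/proc/sys") -> Path:
    """Map a dotted sysctl name to its file under /proc/sys."""
    return Path(root).joinpath(*name.split("."))


def read_sysctl(name: str, root: str = "/proc/sys"):
    """Return the current value as a string, or None if unreadable."""
    try:
        return sysctl_path(name, root).read_text().strip()
    except OSError:
        return None


def write_sysctl(name: str, value, root: str = "/proc/sys") -> None:
    """Write a new value; raises OSError without sufficient privileges."""
    sysctl_path(name, root).write_text("%s\n" % value)
```

Calling `write_sysctl(BLACKHOLE_SYSCTL, 0)` as root applies the setting described above until the next reboot; making it permanent is a matter of the distribution's sysctl configuration files.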

TFO is neat, but…

While TFO is a neat mechanism to reduce TCP overhead, implementing it properly is harder than it should be. Even though almost all the work is already done in the kernel, implementing TFO is challenging due to:

- Lack of documentation on counters and how to interpret them

- Lack of documentation on the socket options involved

- Sysctl defaults that do not allow passive TFO

- An extremely aggressive black holing mechanism

TFO may be fast but adoption is slow. To increase TFO adoption these issues need to be addressed.

Otto Moerbeek is a PowerDNS Senior Developer working on PowerDNS Recursor. He is also active as an OpenBSD developer, spending most of his time on OpenBSD’s malloc implementation.

